
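This post is part of my notes on learning Flink's end-to-end flow. It mainly discusses the rough steps by which the streams in a Flink job are turned into a DAG.

We use org.apache.flink.streaming.examples.wordcount.WordCount as the example.

Here is a flowchart of how streams are turned into a DAG and executed.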

Roughly:

Stream -> StreamGraph -> JobGraph -> ExecutionGraph -> physical execution

I. Writing Flink code on the client

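- 1. StreamExecutionEnvironment, the stream execution environment (note: at this point we don't yet know what this class actually does)

- 1.1 Obtain the StreamExecutionEnvironment

- 1.2 Configure the StreamExecutionEnvironment, e.g. parallelism and checkpointing

- 2. DataStream-related steps

- 2.1 Create the input stream, a DataStreamSource

- 2.2 Starting from the source, create downstream DataStreams by applying operators

- 2.3 The last downstream DataStream points to the output stream, a DataStreamSink

- 3. Lazy execution: env.execute starts the streaming job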

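To sum up: we obtained a StreamExecutionEnvironment, created DataStreams and applied operators to them, and finally called StreamExecutionEnvironment.execute. That raises a few simple questions:

- How does the StreamExecutionEnvironment get hold of the DataStreams written in our main method? We never added any DataStream to the StreamExecutionEnvironment, so when execute starts, how does it know which DataStreams to run?

- How does Flink execute the DataStreams in upstream-to-downstream order?

With these two basic questions in mind, let's start debugging the source code.

Note: the debugging does not follow the order of the code. Why? Anyone who has read source code knows that if you try to read all of it bit by bit, you end up with a stack overflow and understand nothing. So my goal here is not to analyze the code in detail but to answer the questions above: start from the questions, dig in step by step, and trace back to the answers. That approach is enough to clear up our questions.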

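II. How Flink executes a job

1. When execute starts, how does it know which DataStreams to run?

First look at execute. When the program runs locally (e.g. from the IDE), StreamExecutionEnvironment.getExecutionEnvironment() returns a LocalStreamEnvironment object, so look at LocalStreamEnvironment.execute():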

```java
public JobExecutionResult execute(String jobName) throws Exception {
    // Convert the streams into a StreamGraph
    StreamGraph streamGraph = getStreamGraph();
    // ... (the rest of execute is covered further down)
}
```

The first step converts the streams into a StreamGraph. getStreamGraph() and the transformations list it reads are defined in StreamExecutionEnvironment:

```java
protected final List<StreamTransformation<?>> transformations = new ArrayList<>();

public StreamGraph getStreamGraph() {
    if (transformations.size() <= 0) {
        throw new IllegalStateException("No operators defined in streaming topology. Cannot execute.");
    }
    return StreamGraphGenerator.generate(this, transformations);
}
```

Debugging shows that transformations.size = 3:

- OneInputTransformation{id=2, name='Flat Map', outputType=Java Tuple2<String, Integer>, parallelism=4}

- OneInputTransformation{id=4, name='Keyed Aggregation', outputType=Java Tuple2<String, Integer>, parallelism=4}

- SinkTransformation{id=5, name='Print to Std. Out', outputType=GenericType<java.lang.Object>, parallelism=4}

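This shows that by this point the streams are already in the StreamExecutionEnvironment. So when were they added to transformations?

Note: a transformation represents an operator; each processing step applied to the stream data is called an operator.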

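There is one more question. Compare the three transformations above with the WordCount code. The WordCount pipeline is

source -> flatMap -> keyBy -> sum -> print (sink)

but there are only 3 transformations:

flatMap -> Keyed Aggregation -> Print to Std. Out

The counts don't match, so where did the missing steps go? Let's leave that open for now (Question 1.1, answered later).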

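Let's keep going and find out when a transformation is added to the StreamExecutionEnvironment, starting with flatMap.

Step into flatMap() -> transform(), and you will find this line:

```java
// this line adds the transformation to the environment
getExecutionEnvironment().addOperator(resultTransform);
```

addOperator itself simply appends to the list:

```java
public void addOperator(StreamTransformation<?> transformation) {
    Preconditions.checkNotNull(transformation, "transformation must not be null.");
    this.transformations.add(transformation);
}
```

So it is addOperator that adds the transformation. Debugging keyBy also shows that keyBy never calls addOperator: it only wraps the stream in a KeyedStream backed by a PartitionTransformation. Keyed Aggregation corresponds to the sum operation, and keyBy by itself does not correspond to any stream operation, so it doesn't count. That should leave 4 operators: Source, Flat Map, Keyed Aggregation and Sink. So where did the source go? (Question 1.2, answered later)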

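Answer to question 1: when execute starts, how does it know which DataStreams to run?

Whenever we apply an operator to a stream, the resulting transformation is saved in the StreamExecutionEnvironment, so by the time execute is called the environment already holds the whole list.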
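To make this answer concrete, here is a toy model in plain Java with no Flink dependency. ToyEnvironment, ToyStream and ToyTransformation are hypothetical names that only mimic the pattern seen in the debugger: each operator call creates a transformation that points at its input and registers it via addOperator, while the source and keyBy create transformations that are never registered. Like Flink's transformations, the toy hands out ids in creation order, so it ends up with the same ids 2, 4 and 5 as the debug output.

```java
import java.util.ArrayList;
import java.util.List;

// Entry point first so the file also works with the single-file `java` launcher.
class RegistrationDemo {
    // Toy equivalent of WordCount: source -> flatMap -> keyBy -> sum -> print
    static ToyEnvironment buildWordCount() {
        ToyEnvironment env = new ToyEnvironment();
        env.fromElements("Collection Source")
                .transform("Flat Map")
                .keyBy()
                .transform("Keyed Aggregation")
                .transform("Print to Std. Out");
        return env;
    }

    public static void main(String[] args) {
        for (ToyTransformation t : buildWordCount().transformations) {
            System.out.println(t.id + " " + t.name);
        }
    }
}

// Hypothetical stand-in for StreamTransformation: an id, a name and a link to its input.
class ToyTransformation {
    final int id;
    final String name;
    final ToyTransformation input; // null for a source

    ToyTransformation(int id, String name, ToyTransformation input) {
        this.id = id;
        this.name = name;
        this.input = input;
    }
}

// Hypothetical stand-in for StreamExecutionEnvironment.
class ToyEnvironment {
    final List<ToyTransformation> transformations = new ArrayList<>();
    private int nextId = 1;

    int newId() {
        return nextId++;
    }

    // The only way into the list, like addOperator() in Flink.
    void addOperator(ToyTransformation t) {
        transformations.add(t);
    }

    // Like adding a source: creates a transformation but never calls addOperator.
    ToyStream fromElements(String name) {
        return new ToyStream(this, new ToyTransformation(newId(), name, null));
    }
}

// Hypothetical stand-in for DataStream.
class ToyStream {
    private final ToyEnvironment env;
    private final ToyTransformation transformation;

    ToyStream(ToyEnvironment env, ToyTransformation transformation) {
        this.env = env;
        this.transformation = transformation;
    }

    // Like transform(): creates a one-input transformation and registers it.
    ToyStream transform(String name) {
        ToyTransformation t = new ToyTransformation(env.newId(), name, transformation);
        env.addOperator(t);
        return new ToyStream(env, t);
    }

    // Like keyBy(): wraps the input in a partition transformation that is NOT registered.
    ToyStream keyBy() {
        return new ToyStream(env, new ToyTransformation(env.newId(), "Partition", transformation));
    }
}
```

Only three transformations end up in the list, yet following the input links from "Print to Std. Out" still leads back to the source, which is what the StreamGraph generation below relies on.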

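2. How does Flink execute DataStreams from upstream to downstream?

This question can be broken down further, for example: how do the saved operators tell upstream from downstream?

First look at how the streams become a StreamGraph. Keep debugging down from execute into the generateInternal() method of org.apache.flink.streaming.api.graph.StreamGraphGenerator: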

```java
private StreamGraph generateInternal(List<StreamTransformation<?>> transformations) {
    for (StreamTransformation<?> transformation: transformations) {
        transform(transformation);
    }
    return streamGraph;
}
```

Now look at the transform method. The key logic is shown below; the rest is configuration code and is omitted here:

```java
private Collection<Integer> transform(StreamTransformation<?> transform) {
    // If this transformation has already been converted, return the earlier result
    if (alreadyTransformed.containsKey(transform)) {
        return alreadyTransformed.get(transform);
    }

    // ... (configuration code omitted)

    Collection<Integer> transformedIds;

    if (transform instanceof OneInputTransformation<?, ?>) {
        transformedIds = transformOneInputTransform((OneInputTransformation<?, ?>) transform);
    } else if (transform instanceof TwoInputTransformation<?, ?, ?>) {
        transformedIds = transformTwoInputTransform((TwoInputTransformation<?, ?, ?>) transform);
    } else if (transform instanceof SourceTransformation<?>) {
        transformedIds = transformSource((SourceTransformation<?>) transform);
    } else if (transform instanceof SinkTransformation<?>) {
        transformedIds = transformSink((SinkTransformation<?>) transform);
    } else if (transform instanceof UnionTransformation<?>) {
        transformedIds = transformUnion((UnionTransformation<?>) transform);
    } else if (transform instanceof SplitTransformation<?>) {
        transformedIds = transformSplit((SplitTransformation<?>) transform);
    } else if (transform instanceof SelectTransformation<?>) {
        transformedIds = transformSelect((SelectTransformation<?>) transform);
    } else if (transform instanceof FeedbackTransformation<?>) {
        transformedIds = transformFeedback((FeedbackTransformation<?>) transform);
    } else if (transform instanceof CoFeedbackTransformation<?>) {
        transformedIds = transformCoFeedback((CoFeedbackTransformation<?>) transform);
    } else if (transform instanceof PartitionTransformation<?>) {
        transformedIds = transformPartition((PartitionTransformation<?>) transform);
    } else if (transform instanceof SideOutputTransformation<?>) {
        transformedIds = transformSideOutput((SideOutputTransformation<?>) transform);
    } else {
        throw new IllegalStateException("Unknown transformation: " + transform);
    }

    // Remember the result so that a shared upstream is converted only once
    if (!alreadyTransformed.containsKey(transform)) {
        alreadyTransformed.put(transform, transformedIds);
    }

    return transformedIds;
}
```

As you can see, it mainly dispatches on the type of the transformation and applies the matching conversion. Let's step into transformOneInputTransform and look at its logic:

```java
private <IN, OUT> Collection<Integer> transformOneInputTransform(OneInputTransformation<IN, OUT> transform) {
    // Very important: convert the upstream before the current operator. This is what
    // produces the edges; the upstream of the first operator is the source.
    Collection<Integer> inputIds = transform(transform.getInput());

    // The recursive call may already have converted this transformation; don't do it twice
    if (alreadyTransformed.containsKey(transform)) {
        return alreadyTransformed.get(transform);
    }

    // Slot sharing group: the one set explicitly, else the inputs' common group, else "default"
    String slotSharingGroup = determineSlotSharingGroup(transform.getSlotSharingGroup(), inputIds);

    // Add a node to the StreamGraph
    streamGraph.addOperator(transform.getId(),
            slotSharingGroup,
            transform.getOperator(),
            transform.getInputType(),
            transform.getOutputType(),
            transform.getName());

    if (transform.getStateKeySelector() != null) {
        TypeSerializer<?> keySerializer = transform.getStateKeyType().createSerializer(env.getConfig());
        streamGraph.setOneInputStateKey(transform.getId(), transform.getStateKeySelector(), keySerializer);
    }

    streamGraph.setParallelism(transform.getId(), transform.getParallelism());
    streamGraph.setMaxParallelism(transform.getId(), transform.getMaxParallelism());

    // Add an edge from each upstream transform id to the current transform id
    for (Integer inputId: inputIds) {
        streamGraph.addEdge(inputId, transform.getId(), 0);
    }

    // Return the id of the current operator, which is also the transform id
    return Collections.singleton(transform.getId());
}
```

So, using each transformation's id as its identifier, converting a transformation mainly means to:

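- add the input operators and the current operator as nodes (the inputs become nodes through the recursive transform() call on the upstream)

- add an edge from each input id to the current operator's id

Note: this answers Question 1.2, why the source is missing from the registered operators: (1) the source is not registered as an operator, since creating it never calls addOperator; (2) when the graph is generated, the source is reached as the input of its downstream operator, so it is still written into the StreamGraph and is not lost.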
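The recursion can be modeled in a few lines. This sketch assumes a hypothetical, minimal transformation type (Tx) and treats keyBy's partition transformation as a pass-through that returns its upstream ids. That is a simplification: in Flink, transformPartition adds a virtual node, and addEdge later resolves it to the real upstream node and records the partitioner on the edge. Running it on the WordCount transformations shows the source (id 1) entering the graph purely through recursion, even though it was never registered.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class GraphGenDemo {
    // WordCount as linked transformations; only 2, 4 and 5 are registered.
    static ToyStreamGraphGenerator generateWordCount() {
        Tx source = new Tx(1, null, false);
        Tx flatMap = new Tx(2, source, false);
        Tx keyBy = new Tx(3, flatMap, true);
        Tx sum = new Tx(4, keyBy, false);
        Tx sink = new Tx(5, sum, false);
        return new ToyStreamGraphGenerator().generate(Arrays.asList(flatMap, sum, sink));
    }

    public static void main(String[] args) {
        ToyStreamGraphGenerator g = generateWordCount();
        System.out.println("nodes: " + g.nodes);
        System.out.println("edges: " + g.edges);
    }
}

// Hypothetical minimal transformation: id, input (null for a source), partition flag.
class Tx {
    final int id;
    final Tx input;
    final boolean isPartition;

    Tx(int id, Tx input, boolean isPartition) {
        this.id = id;
        this.input = input;
        this.isPartition = isPartition;
    }
}

class ToyStreamGraphGenerator {
    final Set<Integer> nodes = new LinkedHashSet<>(); // node ids in insertion order
    final List<String> edges = new ArrayList<>();     // "from->to"
    private final Map<Tx, Collection<Integer>> alreadyTransformed = new HashMap<>();

    ToyStreamGraphGenerator generate(List<Tx> transformations) {
        for (Tx t : transformations) {
            transform(t);
        }
        return this;
    }

    private Collection<Integer> transform(Tx t) {
        Collection<Integer> cached = alreadyTransformed.get(t);
        if (cached != null) {
            return cached; // converted earlier, e.g. as someone's upstream
        }
        Collection<Integer> ids;
        if (t.input == null) {
            // Source: no upstream, just a node.
            nodes.add(t.id);
            ids = Collections.singleton(t.id);
        } else if (t.isPartition) {
            // Simplification: pass the upstream ids through instead of a virtual node.
            ids = transform(t.input);
        } else {
            // Convert the upstream first, then add this node and the edges into it.
            Collection<Integer> inputIds = transform(t.input);
            nodes.add(t.id);
            for (int inputId : inputIds) {
                edges.add(inputId + "->" + t.id);
            }
            ids = Collections.singleton(t.id);
        }
        alreadyTransformed.put(t, ids);
        return ids;
    }
}
```

Because every conversion starts by converting its input, the registration order of the transformations does not matter; the memo map keeps an upstream shared by several operators from being added twice.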

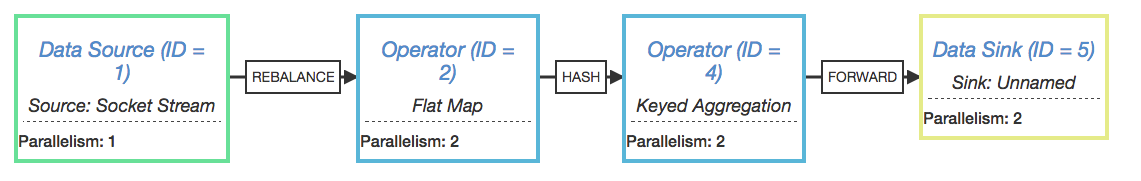

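As a result, the StreamGraph forms a graph that respects the upstream/downstream relations. For WordCount the graph is very simple, as the figure shows.

To sum up, the Stream -> StreamGraph process is:

- 1. Create the execution environment

- 2. Create the streams and operators

- 3. While the streams and operators are being created, the corresponding operator information is saved in the execution environment; each operator records its id, its upstream, its output type and so on

- 4. On execute, iterate over the list of operators, make each operator's upstream and the operator itself nodes of the StreamGraph, and make the path from the upstream to the operator an edge

The internal structure of nodes and edges will be analyzed in depth later; a rough picture of the flow is enough for now. Let's go back to the execute code: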

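```java
// Convert the StreamGraph into a JobGraph
JobGraph jobGraph = streamGraph.getJobGraph();
```

Now look at the StreamGraph -> JobGraph conversion. getJobGraph() delegates to StreamingJobGraphGenerator, whose createJobGraph() looks like this: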

```java
private JobGraph createJobGraph() {
    // make sure that all vertices start immediately
    jobGraph.setScheduleMode(ScheduleMode.EAGER);

    // Generate deterministic hashes for the nodes in order to identify them across
    // submission if they didn't change.
    Map<Integer, byte[]> hashes = defaultStreamGraphHasher.traverseStreamGraphAndGenerateHashes(streamGraph);

    // Generate legacy version hashes for backwards compatibility
    List<Map<Integer, byte[]>> legacyHashes = new ArrayList<>(legacyStreamGraphHashers.size());
    for (StreamGraphHasher hasher : legacyStreamGraphHashers) {
        legacyHashes.add(hasher.traverseStreamGraphAndGenerateHashes(streamGraph));
    }

    Map<Integer, List<Tuple2<byte[], byte[]>>> chainedOperatorHashes = new HashMap<>();

    // set up operator chaining: operators that pass data straight down are chained together
    setChaining(hashes, legacyHashes, chainedOperatorHashes);

    // set the physical edges
    setPhysicalEdges();

    // set up slot sharing
    setSlotSharing();

    configureCheckpointing();

    // add registered cache files into the job configuration
    for (Tuple2<String, DistributedCache.DistributedCacheEntry> e : streamGraph.getEnvironment().getCachedFiles()) {
        DistributedCache.writeFileInfoToConfig(e.f0, e.f1, jobGraph.getJobConfiguration());
    }

    // set the ExecutionConfig last
    try {
        jobGraph.setExecutionConfig(streamGraph.getExecutionConfig());
    }
    catch (IOException e) {
        throw new IllegalConfigurationException("Could not serialize the ExecutionConfig. " +
                "This indicates that non-serializable types (like custom serializers) were registered");
    }

    return jobGraph;
}
```

The structure of the JobGraph is shown on the left of the figure below.
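setChaining() is where consecutive StreamGraph nodes are merged into one JobVertex. The following is a hypothetical, simplified version of that idea: it chains an edge only when the partitioner is FORWARD, both ends have the same parallelism, and the downstream node has exactly one input. Flink's real check considers more conditions (for example the chaining strategy, the slot sharing group and whether chaining is enabled), so treat this as a sketch. The source parallelism of 1 is an assumption for a non-parallel collection source; with differing parallelism and no explicit partitioner, Flink uses a rebalance edge, which is what the toy graph encodes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ChainingDemo {
    // Hypothetical WordCount StreamGraph (source parallelism 1 is an assumption).
    static List<List<String>> wordCountChains() {
        ToyStreamGraph g = new ToyStreamGraph();
        g.addNode(1, "Source", 1);
        g.addNode(2, "Flat Map", 4);
        g.addNode(4, "Keyed Aggregation", 4);
        g.addNode(5, "Sink", 4);
        g.addEdge(1, 2, "REBALANCE"); // parallelism changes, so not FORWARD
        g.addEdge(2, 4, "HASH");      // produced by keyBy
        g.addEdge(4, 5, "FORWARD");
        return g.chains();
    }

    public static void main(String[] args) {
        System.out.println(wordCountChains());
    }
}

class ToyStreamGraph {
    private final Map<Integer, String> names = new LinkedHashMap<>();
    private final Map<Integer, Integer> parallelism = new LinkedHashMap<>();
    private final List<int[]> edges = new ArrayList<>(); // {source, target}
    private final List<String> partitioners = new ArrayList<>();

    void addNode(int id, String name, int p) {
        names.put(id, name);
        parallelism.put(id, p);
    }

    void addEdge(int source, int target, String partitioner) {
        edges.add(new int[] {source, target});
        partitioners.add(partitioner);
    }

    private int inDegree(int id) {
        int n = 0;
        for (int[] e : edges) {
            if (e[1] == id) {
                n++;
            }
        }
        return n;
    }

    // Simplified chaining rule; Flink's real check has more conditions.
    private boolean chainable(int edgeIndex) {
        int source = edges.get(edgeIndex)[0];
        int target = edges.get(edgeIndex)[1];
        return partitioners.get(edgeIndex).equals("FORWARD")
                && parallelism.get(source).equals(parallelism.get(target))
                && inDegree(target) == 1;
    }

    // Groups nodes into chains; assumes nodes were added in topological order.
    List<List<String>> chains() {
        Map<Integer, List<String>> chainOf = new LinkedHashMap<>();
        List<List<String>> result = new ArrayList<>();
        for (int id : names.keySet()) {
            List<String> chain = null;
            for (int i = 0; i < edges.size(); i++) {
                if (edges.get(i)[1] == id && chainable(i)) {
                    chain = chainOf.get(edges.get(i)[0]); // join the upstream node's chain
                }
            }
            if (chain == null) {
                chain = new ArrayList<>(); // start a new chain, i.e. a new JobVertex
                result.add(chain);
            }
            chain.add(names.get(id));
            chainOf.put(id, chain);
        }
        return result;
    }
}
```

Under these assumptions the four StreamGraph nodes collapse into three JobVertices, with Keyed Aggregation and Sink sharing one chain.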

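Once the JobGraph has been generated, continue with execute:

```java
// start a MiniCluster
miniCluster.start();

// set the REST port (the address used to access the cluster)
configuration.setInteger(RestOptions.PORT, miniCluster.getRestAddress().getPort());

// run the JobGraph
return miniCluster.executeJobBlocking(jobGraph);
```

Look at executeJobBlocking. The key line there is the call to submitJob(job), so keep debugging into it: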

```java
public CompletableFuture<JobSubmissionResult> submitJob(JobGraph jobGraph) {
    final DispatcherGateway dispatcherGateway;
    try {
        // get the dispatcher gateway
        dispatcherGateway = getDispatcherGateway();
    } catch (LeaderRetrievalException | InterruptedException e) {
        ExceptionUtils.checkInterrupted(e);
        return FutureUtils.completedExceptionally(e);
    }

    // we have to allow queued scheduling in Flip-6 mode because we need to request slots
    // from the ResourceManager, i.e. the scheduler may wait for slots to be allocated
    // instead of failing when none are free yet
    jobGraph.setAllowQueuedScheduling(true);

    final CompletableFuture<Void> jarUploadFuture = uploadAndSetJarFiles(dispatcherGateway, jobGraph);

    // this is where the job is actually submitted
    final CompletableFuture<Acknowledge> acknowledgeCompletableFuture = jarUploadFuture.thenCompose(
        (Void ack) -> dispatcherGateway.submitJob(jobGraph, rpcTimeout));

    // return the submission result
    return acknowledgeCompletableFuture.thenApply(
        (Acknowledge ignored) -> new JobSubmissionResult(jobGraph.getJobID()));
}
```

The call on dispatcherGateway here runs across multiple threads, and with so many threads involved it is awkward to step through in a debugger, so I followed the clues in an article written by a more experienced developer.
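The submission in MiniCluster.submitJob is a chain of CompletableFutures: thenCompose only starts the dispatcher call after the jar upload completes, and thenApply turns the Acknowledge into a JobSubmissionResult. Since each stage may complete on a different thread, a breakpoint-by-breakpoint walk is hard. Below is a plain-JDK sketch of the same composition; uploadJars and dispatcherSubmit are hypothetical stand-ins for uploadAndSetJarFiles and the Dispatcher RPC, and the string results stand in for Flink's result types.

```java
import java.util.concurrent.CompletableFuture;

class SubmitPipelineDemo {
    // Hypothetical stand-in for uploadAndSetJarFiles(): completes on a pool thread.
    static CompletableFuture<Void> uploadJars(String jobId) {
        return CompletableFuture.runAsync(() -> {
            // pretend to upload the user jars for jobId
        });
    }

    // Hypothetical stand-in for dispatcherGateway.submitJob(...): an async acknowledgement.
    static CompletableFuture<String> dispatcherSubmit(String jobId) {
        return CompletableFuture.supplyAsync(() -> "ACK " + jobId);
    }

    static CompletableFuture<String> submitJob(String jobId) {
        CompletableFuture<Void> jarUploadFuture = uploadJars(jobId);
        // thenCompose: start the submission only after the upload is done, flattening
        // the nested future into a CompletableFuture<String>.
        CompletableFuture<String> ackFuture = jarUploadFuture.thenCompose(ignored -> dispatcherSubmit(jobId));
        // thenApply: map the acknowledgement to a result object.
        return ackFuture.thenApply(ack -> "submitted " + jobId);
    }

    // If the upload fails, the exception propagates and dispatcherSubmit is never called.
    static CompletableFuture<String> submitWithFailingUpload(String jobId) {
        CompletableFuture<Void> failedUpload = new CompletableFuture<>();
        failedUpload.completeExceptionally(new IllegalStateException("upload failed"));
        return failedUpload.thenCompose(ignored -> dispatcherSubmit(jobId))
                .thenApply(ack -> "submitted " + jobId);
    }

    public static void main(String[] args) {
        // join() blocks until the whole chain has completed.
        System.out.println(submitJob("job-1").join());
        System.out.println(submitWithFailingUpload("job-2").isCompletedExceptionally());
    }
}
```

The design choice is the same as in MiniCluster: the caller gets a future back immediately, and the ordering between upload and submission is expressed in the chain rather than by blocking a thread.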

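The Dispatcher here is the class that receives a job and assigns a JobMaster to start it. Its class hierarchy has two implementations, MiniDispatcher and StandaloneDispatcher. One might expect a local environment to start a MiniDispatcher and a cluster to start a StandaloneDispatcher, but debugging shows that the dispatcher instance here is a StandaloneDispatcher. Step into Dispatcher.submitJob: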

```java
public CompletableFuture<Acknowledge> submitJob(JobGraph jobGraph, Time timeout) {
    final JobID jobId = jobGraph.getJobID();
    log.info("Submitting job {} ({}).", jobId, jobGraph.getName());

    final RunningJobsRegistry.JobSchedulingStatus jobSchedulingStatus;
    try {
        jobSchedulingStatus = runningJobsRegistry.getJobSchedulingStatus(jobId);
    } catch (IOException e) {
        return FutureUtils.completedExceptionally(new FlinkException(String.format("Failed to retrieve job scheduling status for job %s.", jobId), e));
    }

    if (jobSchedulingStatus == RunningJobsRegistry.JobSchedulingStatus.DONE || jobManagerRunnerFutures.containsKey(jobId)) {
        return FutureUtils.completedExceptionally(
            new JobSubmissionException(jobId, String.format("Job has already been submitted and is in state %s.", jobSchedulingStatus)));
    } else {
        // The main logic is this line: wait until any previous JobManager of the same job
        // has terminated, then run this::persistAndRunJob (we step into it next)
        final CompletableFuture<Acknowledge> persistAndRunFuture = waitForTerminatingJobManager(jobId, jobGraph, this::persistAndRunJob)
            .thenApply(ignored -> Acknowledge.get());

        return persistAndRunFuture.exceptionally(
            (Throwable throwable) -> {
                final Throwable strippedThrowable = ExceptionUtils.stripCompletionException(throwable);
                log.error("Failed to submit job {}.", jobId, strippedThrowable);
                throw new CompletionException(
                    new JobSubmissionException(jobId, "Failed to submit job.", strippedThrowable));
            });
    }
}
```

From there, follow persistAndRunJob() -> runJob() -> createJobManagerRunner() -> startJobManagerRunner().
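The first half of Dispatcher.submitJob rejects a job whose id has already finished or already has a JobManagerRunner. A minimal sketch of that check follows, assuming a hypothetical ToyDispatcher that keeps the two pieces of state in concurrent collections. Here putIfAbsent makes "check for a duplicate and register the runner" one atomic step; a separate containsKey followed by put would let two concurrent submissions of the same job both pass.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

class ToyDispatcherDemo {
    public static void main(String[] args) {
        ToyDispatcher dispatcher = new ToyDispatcher();
        System.out.println(dispatcher.submitJob("job-1").join());
        System.out.println(dispatcher.submitJob("job-1")
                .exceptionally(error -> "rejected: " + error.getMessage())
                .join());
    }
}

// Hypothetical, simplified Dispatcher: only the duplicate-submission check is modeled.
class ToyDispatcher {
    // Jobs that already finished (stands in for RunningJobsRegistry reporting DONE).
    private final Set<String> finishedJobs = ConcurrentHashMap.newKeySet();
    // One entry per job with a live runner (stands in for jobManagerRunnerFutures).
    private final Map<String, CompletableFuture<Void>> runners = new ConcurrentHashMap<>();

    CompletableFuture<String> submitJob(String jobId) {
        CompletableFuture<Void> runner = new CompletableFuture<>();
        // putIfAbsent registers the runner and detects a duplicate in one atomic step.
        if (finishedJobs.contains(jobId) || runners.putIfAbsent(jobId, runner) != null) {
            CompletableFuture<String> failed = new CompletableFuture<>();
            failed.completeExceptionally(
                    new IllegalStateException("Job " + jobId + " has already been submitted."));
            return failed;
        }
        // A real Dispatcher would now persist the JobGraph and start a JobManagerRunner.
        return CompletableFuture.completedFuture("ACK");
    }

    // Marks a job as finished and drops its runner.
    void jobFinished(String jobId) {
        finishedJobs.add(jobId);
        CompletableFuture<Void> runner = runners.remove(jobId);
        if (runner != null) {
            runner.complete(null);
        }
    }
}
```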

Then look inside JobManagerRunner (it's complex; there's no need to read it closely).

Roughly, when a JobManagerRunner is created it sets up two services, the JobMaster and the leaderElectionService.

The call chain is then:

- 1. The Dispatcher calls jobManagerRunner.start()

- 2. which calls leaderElectionService.start(this), passing the jobManagerRunner in

- 3. EmbeddedLeaderElectionService.start()

- 4. addContender() -> updateLeader() -> execute(new GrantLeadershipCall())

- 5. In the run method of GrantLeadershipCall: contender.grantLeadership(leaderSessionId)

- 6. This calls back jobManagerRunner.grantLeadership(); the jobManagerRunner was passed in and saved during start(). It then calls

- 7. verifyJobSchedulingStatusAndStartJobManager(leaderSessionID);

- 8. final CompletableFuture<Acknowledge> startFuture = jobMaster.start(new JobMasterId(leaderSessionId), rpcTimeout);

- 9. Step into jobMaster.start -> startJobExecution() -> resetAndScheduleExecutionGraph(). At last we reach the place where the graph is converted

- 10. final ExecutionGraph newExecutionGraph = createAndRestoreExecutionGraph(newJobManagerJobMetricGroup)

- 11. ExecutionGraph newExecutionGraph = createExecutionGraph(currentJobManagerJobMetricGroup)

- 12. Step into ExecutionGraphBuilder.buildGraph() -> buildGraph()

Note: the LeaderElectionService interface is the leader election service; it elects the JobManager leader and is responsible for JobManager HA. In local mode the election service is EmbeddedLeaderElectionService; with ZooKeeper, ZooKeeperLeaderElectionService does the election.
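The core of steps 2 to 6 is a callback: the JobManagerRunner hands itself to the election service as a contender, and the service calls grantLeadership on it once it has been elected. A toy version of that handshake is below; all class names are hypothetical. Unlike EmbeddedLeaderElectionService, which runs GrantLeadershipCall on an executor, the toy calls back synchronously so the order of events is easy to see.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

class LeaderElectionDemo {
    public static void main(String[] args) {
        ToyJobManagerRunner runner = new ToyJobManagerRunner(new ToyEmbeddedLeaderElectionService());
        runner.start();
        System.out.println(runner.events);
    }
}

// Hypothetical counterpart of LeaderContender.
interface ToyLeaderContender {
    void grantLeadership(UUID leaderSessionId);
}

// Hypothetical counterpart of EmbeddedLeaderElectionService: one contender, leader at once.
class ToyEmbeddedLeaderElectionService {
    private ToyLeaderContender contender;

    void start(ToyLeaderContender contender) {
        this.contender = contender; // saved here, then used for the callback
        // Flink runs this callback on an executor; the toy calls it directly.
        this.contender.grantLeadership(UUID.randomUUID());
    }
}

// Hypothetical counterpart of JobManagerRunner.
class ToyJobManagerRunner implements ToyLeaderContender {
    final List<String> events = new ArrayList<>();
    private final ToyEmbeddedLeaderElectionService leaderElectionService;

    ToyJobManagerRunner(ToyEmbeddedLeaderElectionService leaderElectionService) {
        this.leaderElectionService = leaderElectionService;
    }

    void start() {
        events.add("runner.start");
        leaderElectionService.start(this); // pass itself in as the contender
    }

    @Override
    public void grantLeadership(UUID leaderSessionId) {
        events.add("grantLeadership");
        startJobMaster(leaderSessionId);
    }

    // Stands in for jobMaster.start(...) -> ... -> building the ExecutionGraph.
    private void startJobMaster(UUID leaderSessionId) {
        events.add("jobMaster.start");
        events.add("buildExecutionGraph");
    }
}
```

The indirection is what makes HA pluggable: with ZooKeeper-based election the runner code stays the same, and only the moment at which grantLeadership is called changes.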

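We have finally reached the code that turns the JobGraph into an ExecutionGraph. To sum up the logic above:

- After the JobGraph is generated, a MiniCluster is started, and a Dispatcher service is registered in the MiniCluster

- The Dispatcher in turn sets up a JobManagerRunner service. The name JobManagerRunner is indeed confusing: the service exists to start the JobMaster, so why call it JobManagerRunner rather than JobMasterRunner? For the reason, see the post "The roles of and differences between JobManager and JobMaster in Flink"

- In MiniCluster.submitJob, the line `final CompletableFuture<Acknowledge> acknowledgeCompletableFuture = jarUploadFuture.thenCompose((Void ack) -> dispatcherGateway.submitJob(jobGraph, rpcTimeout));` calls the Dispatcher's submitJob method, which, through several layers of calls, finally runs jobManagerRunner.start()

- The JobManagerRunner sets up two services, the JobMaster and the leaderElectionService

- The JobManagerRunner calls the leaderElectionService and delegates to it the job of starting the JobMaster service

- The JobMaster converts the JobGraph into an ExecutionGraph and distributes it to the TaskManagers for execution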

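All of that is quite convoluted, but the post "The roles of and differences between JobManager and JobMaster in Flink" sums it up in three sentences:

- 1. In Flink, the JobManager interacts with the Client and the TaskManagers: the Client submits the JobGraph to the JobManager, which converts it into an ExecutionGraph and distributes it to the TaskManagers for execution

- 2. As for the JobMaster, the Flink Dispatcher hands the JobGraph to the JobMaster through the JobManagerRunner; the JobMaster then converts the JobGraph into an ExecutionGraph and distributes it to the TaskManagers for execution

- 3. The naming is historical. The JobManager belongs to the old runtime, and the JobMaster belongs to the new runtime the community introduced with FLIP-6. The JobMaster should be the one that is actually in effect now

The logical view of the ExecutionGraph is in the figure shown earlier. Its code is fairly complex, so I won't paste it here.

If you are interested in the details of Flink's four-layer graph structure, see the article "Understanding Flink's graph structure".

Here is a diagram of the StreamGraph, JobGraph, ExecutionGraph and physical execution graph: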

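Summary

Finally, back to the topic: how the streams in the main method become Flink's execution process.

1. Each operator call keeps its input, function, output type, parallelism and other information, and adds the operator to the execution environment

2. When execute is called, env converts the stream operators into a StreamGraph according to their upstream/downstream relations

3. The StreamGraph is converted into a JobGraph

4. The MiniCluster, Dispatcher, JobMaster and other services are started; in cluster mode, the JobMaster runs on a remote server

5. The JobMaster converts the JobGraph into an ExecutionGraph

6. Based on the TaskManagers' slot counts and the state of the cluster, the ExecutionGraph is deployed to the TaskManagers for execution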
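To illustrate steps 5 and 6, the sketch below expands each JobVertex into one subtask per unit of parallelism (the ExecutionGraph holds one ExecutionVertex per parallel subtask) and computes how many slots the job needs. The vertex list is a hypothetical WordCount JobGraph with an assumed source parallelism of 1. The slot rule assumes all vertices sit in the default slot sharing group, in which case a job needs as many slots as its highest parallelism; without slot sharing, each subtask needs its own slot.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ExecutionGraphDemo {
    // Hypothetical WordCount JobGraph after chaining (source parallelism 1 is assumed).
    static List<ToyJobVertex> wordCountJobVertices() {
        return Arrays.asList(
                new ToyJobVertex("Source", 1),
                new ToyJobVertex("Flat Map", 4),
                new ToyJobVertex("Keyed Aggregation -> Sink", 4));
    }

    // One subtask (ExecutionVertex) per parallel instance of each JobVertex.
    static List<String> expand(List<ToyJobVertex> vertices) {
        List<String> subtasks = new ArrayList<>();
        for (ToyJobVertex v : vertices) {
            for (int i = 1; i <= v.parallelism; i++) {
                subtasks.add(v.name + " (" + i + "/" + v.parallelism + ")");
            }
        }
        return subtasks;
    }

    // With one shared slot sharing group: max parallelism; without: one slot per subtask.
    static int slotsNeeded(List<ToyJobVertex> vertices, boolean slotSharing) {
        int max = 0;
        int total = 0;
        for (ToyJobVertex v : vertices) {
            max = Math.max(max, v.parallelism);
            total += v.parallelism;
        }
        return slotSharing ? max : total;
    }

    public static void main(String[] args) {
        List<ToyJobVertex> vertices = wordCountJobVertices();
        expand(vertices).forEach(System.out::println);
        System.out.println("slots with sharing: " + slotsNeeded(vertices, true));
        System.out.println("slots without sharing: " + slotsNeeded(vertices, false));
    }
}

// Hypothetical stand-in for a JobVertex: a name and a parallelism.
class ToyJobVertex {
    final String name;
    final int parallelism;

    ToyJobVertex(String name, int parallelism) {
        this.name = name;
        this.parallelism = parallelism;
    }
}
```

In this toy, three JobVertices become nine subtasks, yet with slot sharing four slots suffice, which is why the slot count of the TaskManagers matters when the ExecutionGraph is deployed.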