Flink on Yarn启动流程分析

本章简单介绍一下Flink on Yarn的大体流程,以便更清晰的了解JobManager & TaskManager

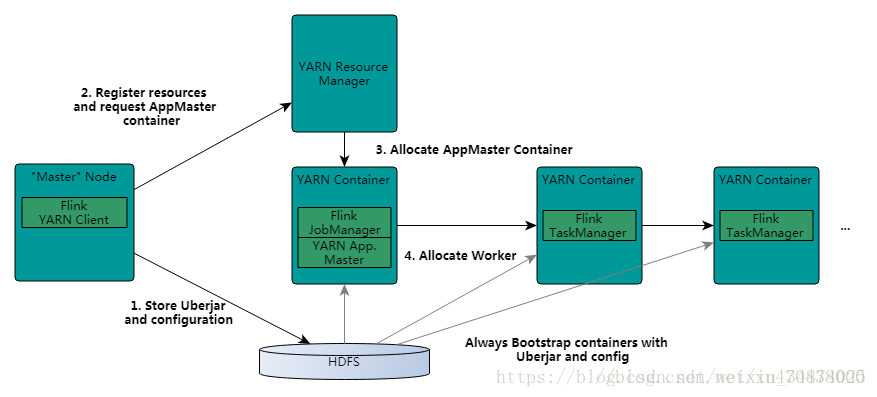

Flink Cluster on Yarn启动过程中,大体可以分为二个阶段

Flink Client发起请求

- 安装Flink:只需在一台可以连接至Yarn & HDFS集群的任意节点安装即可

- 启动脚本(命令):./bin/yarn-session.sh -n {num} -jm {num} -tm {num}

- 运行实例:yarn-session.sh中运行的最后命令是:java … org.apache.flink.yarn.cli.FlinkYarnSessionCli

简单描述FlinkYarnSessionCli的主要内容

- 根据FLINK_CONF_DIR & (YARN_CONF_DIR | HADOOP_CONF_DIR) load相关配置

- 创建yarnClient,并申请一个applicationId

- 将Flink集群运行所需要的Jar & Conf PUT至HDFS上

- 封装ApplicationMaster启动需要的Env & Cmd至Request对象中,并用yarnClient对象发起请求,等待相应

- 确认启动成功后,将重要信息封装成properties文件,并持久化至本地磁盘

注意事项:

- 步骤三中的HDFS路径,默认为:/user/{user}/.flink/{applicationId}

- 如果HDFS没有为该user创建 /user/{user} 目录,将抛出异常

- 由于该步骤中需要使用到applicationId,所以需要先通过yarnClient申请applicationId- 步骤四才会真正的向Yarn申请资源运行ApplicationMaster

- AM并不是Yarn的接口实现类,而是封装至Context中的启动命令 & 环境变量等相关信息- 启动成功后生成的properties文件中 最重要的信息为applicationId,将在之后的Flink Job提交时用于查找Cluster信息

- properties文件持久化路径,默认为:/tmp/.yarn-properties-{user}

- 如果在一个节点启动多个Session,则需要注意这个文件位置(目前还未研究)

Yarn启动AM

两组件,三阶段

- RM接收请求,并查询可用NM,并使其启动Container来运行AM

- NM接收调度,并依据信息相关信息,将Jar & Conf从HDFS下载至Local,同时还依据Cmd & Env在本地生成launcher脚本

- 通过运行launcher脚本,来启动ApplicationMaster(从源码中可以发现,Flink Client发送来的Cmd为:java … YarnSessionClusterEntrypoint)

简单描述FlinkSessionClusterEntrypoint的主要内容

- 启动基于Akka的 RPC Service & Metric Register Service

- 启动HA Service & Heartbeat Server

- 启动BLOB Server & ArchivedExecutionGraphStore (会在Local创建临时目录用于存储)

- 启动Web Monitor Service(任务管理平台)

- 启动JobManager服务,用以管理TaskManager进程

注意事项:

- 步骤二中用于存储Jar & Conf以及launcher脚本的地址为:/data/hadoop/yarn/local/usercache/{user}/appcache/application_{applicationId}/container_{applicationId}_…,其中包含一下内容

- launch_container.sh – 启动命令 & 环境变量

- flink-conf.yaml & log配置文件 – 启动配置 & 日志配置

- flink.jar & lib – 运行依赖Jar- 步骤三中运行YarnSessionClusterEntrypoint,以此来启动JobManager,而后的TaskManager,则有JobManager来启动并管理

- 实际上,在on Yarn模式下,TaskManager的启动 是推迟到了Filnk Job的调度发起的时候,并且,当一段时间没有接收到Job时,TaskManager将自动退出,释放资源

其他说明:

- 日志管理

- FlinkYarnSessionCli的启动,由Client发起,归属于Flink管理,所以日志内容存储在Flink安装目录的log/

- YarnSessionClusterEntrypoint的启动,又Yarn发起,归属于Yarn管理,所以日志内容存储在Yarn管理的目录/data/hadoop/yarn/log/…

- 进程管理

- FlinkYarnSessionCli进程由Flink管理,YarnSessionClusterEntrypoint进程由Yarn管理

- 当不通过FlinkYarnSessionCli来stop YarnSessionClusterEntrypoint时,需要使用yarn application -kill …,但是这种方式无法清理由FlinkYarnSessionCli管理和控制的资源,如:/tmp/.yarn-properties-{user}

- 发起yarn application -kill …命令,请求停止Cluster时,会先停止TaskManager,然后停止JobManager,但是不会清理HDFS上的缓存

- 通过FlinkYarnSessionCli的interact模式,可以对*/tmp/.yarn-properties-{user}* & HDFS缓存统一进行清理

- Job提交

- 这种模式下,Client将从本地查找/tmp/.yarn-properties-{user}配置,以获取applicationId来定位Cluster,所以Job提交最好是在FlinkYarnSessionCli的启动节点,否则需要指定applicationId

- 集群安装

- on Yarn模式下,Flink只需要安装至 一个节点,因为后续的进程,都会从HDFS上获取Jar & Conf来进行启动

官网文档

The YARN client needs to access the Hadoop configuration to connect to the YARN resource manager and to HDFS. It determines the Hadoop configuration using the following strategy:

- Test if YARN_CONF_DIR, HADOOP_CONF_DIR or HADOOP_CONF_PATH are set (in that order). If one of these variables are set, they are used to read the configuration.

- If the above strategy fails (this should not be the case in a correct YARN setup), the client is using the HADOOP_HOME environment variable. If it is set, the client tries to access $HADOOP_HOME/etc/hadoop (Hadoop 2) and $HADOOP_HOME/conf (Hadoop 1).

When starting a new Flink YARN session, the client first checks if the requested resources (containers and memory) are available. After that, it uploads a jar that contains Flink and the configuration to HDFS (step 1).

The next step of the client is to request (step 2) a YARN container to start the ApplicationMaster (step 3). Since the client registered the configuration and jar-file as a resource for the container, the NodeManager of YARN running on that particular machine will take care of preparing the container (e.g. downloading the files). Once that has finished, the ApplicationMaster (AM) is started.

The JobManager and AM are running in the same container. Once they successfully started, the AM knows the address of the JobManager (its own host). It is generating a new Flink configuration file for the TaskManagers (so that they can connect to the JobManager). The file is also uploaded to HDFS. Additionally, the AM container is also serving Flink’s web interface. All ports the YARN code is allocating are ephemeral ports. This allows users to execute multiple Flink YARN sessions in parallel.

After that, the AM starts allocating the containers for Flink’s TaskManagers, which will download the jar file and the modified configuration from the HDFS. Once these steps are completed, Flink is set up and ready to accept Jobs.