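Copyright notice: this is an original article by the blogger, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.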

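The most important part of building anything is getting hands-on, so this article works through runnable examples of FCN32s, FCN8s, and DeepLab v3+. The examples use the KITTI dataset, which comes from an autonomous-driving competition. During training, FCN8s reaches 91% under TensorFlow 2.0's built-in evaluation metric, and DeepLab v3+ reaches 97% accuracy. This article is aimed at beginners. It does not explain the FCN architecture, which is easy to find with a web search.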

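This article uses the TensorFlow 2.0 framework, which integrates Keras and makes model training very concise, unlike the more complicated tf1.x. Having compared it with other deep learning frameworks, I found it the most suitable one for beginners.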

The library functions and parameters used in this article can all be looked up in the TensorFlow 2.0 API documentation.

The code for this article is available on GitHub: https://github.com/fengshilin/tf2.0-FCN

The article is structured as follows:

4. Building the models

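This section gives the architecture code for three models: FCN32S, FCN8S, and DeepLabV3+, listed in order of increasing accuracy and increasing training time.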

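Beginners are advised to first copy the model code and run it once, then print the output shapes of the key layers, and only then study the model in depth.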

FCN32S

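The FCN32s model is relatively simple: take VGG16 as the base and add a classification layer on top, followed by a single upsampling layer.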
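Before running the model, it can help to work out by hand which shapes to expect. The sketch below is plain arithmetic, not part of the original code: it assumes the 160x576 input used in this article, VGG16's five 2x2 stride-2 pooling layers, and a transposed convolution with stride 32 and `padding='same'`. The helper names `vgg16_feature_size` and `same_transposed_conv_size` are made up for illustration.

```python
def vgg16_feature_size(height, width, num_pools=5):
    """Spatial size after VGG16's 2x2, stride-2 max-pooling layers."""
    for _ in range(num_pools):
        height, width = height // 2, width // 2
    return height, width


def same_transposed_conv_size(height, width, stride):
    """Output size of a transposed convolution with padding='same'."""
    return height * stride, width * stride


image_shape = (160, 576)
feat = vgg16_feature_size(*image_shape)            # size of the VGG16 output
out = same_transposed_conv_size(*feat, stride=32)  # size after 32x upsampling

print(feat)  # (5, 18)
print(out)   # (160, 576)
```

The 1x1 classification layer does not change the spatial size, so the single 32x upsampling step restores the input resolution exactly.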

# model
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Conv2DTranspose, Input
from tensorflow.keras.initializers import Constant

# Input image size as (height, width)
image_shape = (160, 576)

def bilinear_upsample_weights(factor, number_of_classes):
    """Build bilinear-interpolation weights used to initialize a transposed convolution."""
    filter_size = factor*2 - factor%2
    factor = (filter_size + 1) // 2
    if filter_size % 2 == 1:
        center = factor - 1
    else:
        center = factor - 0.5
    og = np.ogrid[:filter_size, :filter_size]
    # 2D bilinear kernel: product of two 1D triangular profiles
    upsample_kernel = (1 - abs(og[0] - center) / factor) * (1 - abs(og[1] - center) / factor)
    weights = np.zeros((filter_size, filter_size, number_of_classes, number_of_classes),
                       dtype=np.float32)
    # Each class channel is upsampled independently, so only the diagonal is filled
    for i in range(number_of_classes):
        weights[:, :, i, i] = upsample_kernel
    return weights

class MyModel(tf.keras.Model):
    def __init__(self, n_class):
        super().__init__()
        self.vgg16_model = self.load_vgg()
        self.conv_test = Conv2D(filters=n_class, kernel_size=(1, 1))  # classification layer
        self.deconv_test = Conv2DTranspose(filters=n_class,
                                           kernel_size=(64, 64),
                                           strides=(32, 32),
                                           padding='same',
                                           activation='sigmoid',
                                           kernel_initializer=Constant(bilinear_upsample_weights(32, n_class)))  # upsampling layer

    def call(self, inputs):
        x = self.vgg16_model(inputs)
        x = self.conv_test(x)
        x = self.deconv_test(x)
        return x

    def load_vgg(self):
        # Load the VGG16 model; note the input_tensor and include_top arguments
        vgg16_model = tf.keras.applications.vgg16.VGG16(weights='imagenet', include_top=False, input_tensor=Input(shape=(image_shape[0], image_shape[1], 3)))
        for layer in vgg16_model.layers[:15]:
            layer.trainable = False  # do not train the first 15 layers
        return vgg16_model

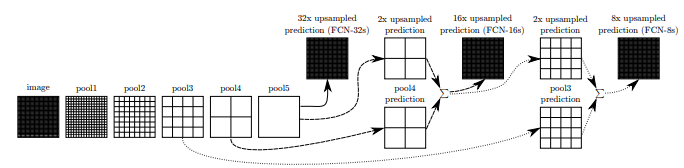
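To see what the upsampling layer is initialized with, the kernel can be inspected for a small factor. The following is a minimal sketch that re-implements the same formula as `bilinear_upsample_weights` in a standalone helper (the name `bilinear_kernel` is hypothetical, not from the repository) and prints the 4x4 kernel for a factor of 2.

```python
import numpy as np


def bilinear_kernel(factor):
    """2D bilinear kernel, using the same formula as bilinear_upsample_weights."""
    filter_size = 2 * factor - factor % 2
    scale = (filter_size + 1) // 2
    center = scale - 1 if filter_size % 2 == 1 else scale - 0.5
    og = np.ogrid[:filter_size, :filter_size]
    return (1 - abs(og[0] - center) / scale) * (1 - abs(og[1] - center) / scale)


kernel = bilinear_kernel(2)
print(kernel)
# The 1D profile is [0.25, 0.75, 0.75, 0.25]; the 2D kernel is its outer product.

# Place the kernel on the class diagonal, as the article's function does,
# so that each class channel is upsampled independently of the others.
n_class = 2
weights = np.zeros((4, 4, n_class, n_class), dtype=np.float32)
for i in range(n_class):
    weights[:, :, i, i] = kernel

print(kernel.sum())               # equals factor ** 2
print(bilinear_kernel(32).sum())  # equals 32 ** 2 for the FCN32s layer
```

Because the off-diagonal entries stay zero, the layer starts out as plain bilinear interpolation per class and only learns to mix classes during training.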

FCN8s

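FCN8s builds on FCN32s by adding two skip connections. The score map from the last layers is upsampled and added to the classification result of VGG16's fourth pooling output. That fused result is upsampled again and added to the classification result of the third pooling output, and the sum is finally upsampled 8x to the input size.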
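The two fusion steps only work if the feature maps line up, which can be checked with dummy arrays. This is a rough sketch under stated assumptions: a 160x576 input, two classes, and nearest-neighbour repetition (`np.repeat`) standing in for the learned `Conv2DTranspose` layers, so only the shapes are meaningful, not the values.

```python
import numpy as np


def upsample(x, factor):
    """Nearest-neighbour upsampling; a stand-in for Conv2DTranspose."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)


n_class = 2
score_pool3 = np.ones((20, 72, n_class))  # pool3 scores, 1/8 of 160x576
score_pool4 = np.ones((10, 36, n_class))  # pool4 scores, 1/16
score_fr = np.ones((5, 18, n_class))      # final scores, 1/32

fuse_1 = upsample(score_fr, 2) + score_pool4  # 2x up, then add pool4 scores
fuse_2 = upsample(fuse_1, 2) + score_pool3    # 2x up, then add pool3 scores
out = upsample(fuse_2, 8)                     # final 8x up to the input size

print(fuse_1.shape)  # (10, 36, 2)
print(fuse_2.shape)  # (20, 72, 2)
print(out.shape)     # (160, 576, 2)
```

The final upsampling factor of 8 is where the name FCN8s comes from.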

# model
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Conv2DTranspose, Add
from tensorflow.keras.layers import Dropout, Input
from tensorflow.keras.initializers import Constant

# Input image size as (height, width); load_vgg below depends on it
image_shape = (160, 576)

def bilinear_upsample_weights(factor, number_of_classes):
    filter_size = factor*2 - factor%2
    factor = (filter_size + 1) // 2
    if filter_size % 2 == 1:
        center = factor - 1
    else:
        center = factor - 0.5
    og = np.ogrid[:filter_size, :filter_size]
    upsample_kernel = (1 - abs(og[0] - center) / factor) * (1 - abs(og[1] - center) / factor)
    weights = np.zeros((filter_size, filter_size, number_of_classes, number_of_classes),
                       dtype=np.float32)
    for i in range(number_of_classes):
        weights[:, :, i, i] = upsample_kernel
    return weights

class MyModel(tf.keras.Model):
    def __init__(self, NUM_OF_CLASSESS):
        super().__init__()
        vgg16_model = self.load_vgg()
        # Reuse the pretrained VGG16 layers one by one so that the
        # intermediate pool3 and pool4 outputs are accessible in call()
        self.conv1_1 = vgg16_model.layers[1]
        self.conv1_2 = vgg16_model.layers[2]
        self.pool1 = vgg16_model.layers[3]
        # after pool1: 1/2 of the input resolution
        self.conv2_1 = vgg16_model.layers[4]
        self.conv2_2 = vgg16_model.layers[5]
        self.pool2 = vgg16_model.layers[6]
        # after pool2: 1/4 of the input resolution
        self.conv3_1 = vgg16_model.layers[7]
        self.conv3_2 = vgg16_model.layers[8]
        self.conv3_3 = vgg16_model.layers[9]
        self.pool3 = vgg16_model.layers[10]
        # after pool3: 1/8 of the input resolution
        self.conv4_1 = vgg16_model.layers[11]
        self.conv4_2 = vgg16_model.layers[12]
        self.conv4_3 = vgg16_model.layers[13]
        self.pool4 = vgg16_model.layers[14]
        # after pool4: 1/16 of the input resolution
        self.conv5_1 = vgg16_model.layers[15]
        self.conv5_2 = vgg16_model.layers[16]
        self.conv5_3 = vgg16_model.layers[17]
        self.pool5 = vgg16_model.layers[18]
        # after pool5: 1/32 of the input resolution

        # Convolutional replacements for VGG16's fully connected layers
        self.conv6 = Conv2D(4096,(7,7),(1,1),padding="same",activation="relu")
        self.drop6 = Dropout(0.5)
        self.conv7 = Conv2D(4096,(1,1),(1,1),padding="same",activation="relu")
        self.drop7 = Dropout(0.5)

        # 1x1 classification layers and the upsampling / fusion layers
        self.score_fr = Conv2D(NUM_OF_CLASSESS,(1,1),(1,1),padding="valid",activation="relu")
        self.score_pool4 = Conv2D(NUM_OF_CLASSESS,(1,1),(1,1),padding="valid",activation="relu")
        self.conv_t1 = Conv2DTranspose(NUM_OF_CLASSESS,(4,4),(2,2),padding="same")
        self.fuse_1 = Add()
        self.conv_t2 = Conv2DTranspose(NUM_OF_CLASSESS,(4,4),(2,2),padding="same")
        self.score_pool3 = Conv2D(NUM_OF_CLASSESS,(1,1),(1,1),padding="valid",activation="relu")
        self.fuse_2 = Add()
        self.conv_t3 = Conv2DTranspose(NUM_OF_CLASSESS,(16,16),(8,8),padding="same", activation="sigmoid", kernel_initializer=Constant(bilinear_upsample_weights(8, NUM_OF_CLASSESS)))

    def call(self, inputs):
        x = self.conv1_1(inputs)
        x = self.conv1_2(x)
        x = self.pool1(x)
        x = self.conv2_1(x)
        x = self.conv2_2(x)
        x = self.pool2(x)
        x = self.conv3_1(x)
        x = self.conv3_2(x)
        x = self.conv3_3(x)
        x_3 = self.pool3(x)
        x = self.conv4_1(x_3)
        x = self.conv4_2(x)
        x = self.conv4_3(x)
        x_4 = self.pool4(x)
        x = self.conv5_1(x_4)
        x = self.conv5_2(x)
        x = self.conv5_3(x)
        x = self.pool5(x)
        x = self.conv6(x)
        x = self.drop6(x)
        x = self.conv7(x)
        x = self.drop7(x)
        x = self.score_fr(x)  # class scores from the pool5 branch
        x_score4 = self.score_pool4(x_4)  # class scores from pool4
        x_dconv1 = self.conv_t1(x)  # upsample the pool5-branch scores 2x
        x = self.fuse_1([x_dconv1,x_score4])  # pool4 scores + upsampled pool5-branch scores
        x_dconv2 = self.conv_t2(x)  # upsample the first fusion result 2x
        x_score3 = self.score_pool3(x_3)  # class scores from pool3
        x = self.fuse_2([x_dconv2,x_score3])  # upsampled first fusion + pool3 scores
        x = self.conv_t3(x)  # final 8x upsampling to the input size
        return x

    def load_vgg(self):
        # Load the VGG16 model; note the input_tensor and include_top arguments
        vgg16_model = tf.keras.applications.vgg16.VGG16(weights='imagenet', include_top=False, input_tensor=Input(shape=(image_shape[0], image_shape[1], 3)))
        # Freeze layers 0-17, which covers every convolutional layer of VGG16
        for layer in vgg16_model.layers[:18]:
            layer.trainable = False
        return vgg16_model

Building the DeepLab V3+ model

import tensorflow as tf
from tensorflow.keras import backend as K
from tensorflow.keras.models import Model
from tensorflow.keras.layers import AveragePooling2D, Lambda, Conv2D, Activation, concatenate, BatchNormalization
from tensorflow.keras.applications import ResNet50

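def Upsample(tensor, size):
    '''Bilinear upsampling of `tensor` to the spatial `size` [height, width].'''
    name = tensor.name.split('/')[0] + '_upsample'

    def bilinear_upsample(x, size):
        # tf.image.resize uses bilinear interpolation by default
        resized = tf.image.resize(images=x, size=size)
        return resized

    # The output shape is inferred from the function, so output_shape is not passed
    y = Lambda(lambda x: bilinear_upsample(x, size), name=name)(tensor)
    return y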

def ASPP(tensor):
    '''Atrous spatial pyramid pooling.'''
    dims = K.int_shape(tensor)

    # Image-level branch: global average pooling, 1x1 conv, upsample back
    y_pool = AveragePooling2D(pool_size=(dims[1], dims[2]), name='average_pooling')(tensor)
    y_pool = Conv2D(filters=256, kernel_size=1, padding='same',
                    kernel_initializer='he_normal', name='pool_1x1conv2d', use_bias=False)(y_pool)
    y_pool = BatchNormalization(name='bn_1')(y_pool)
    y_pool = Activation('relu', name='relu_1')(y_pool)
    y_pool = Upsample(tensor=y_pool, size=[dims[1], dims[2]])

    # 1x1 convolution branch
    y_1 = Conv2D(filters=256, kernel_size=1, dilation_rate=1, padding='same',
                 kernel_initializer='he_normal', name='ASPP_conv2d_d1', use_bias=False)(tensor)
    y_1 = BatchNormalization(name='bn_2')(y_1)
    y_1 = Activation('relu', name='relu_2')(y_1)

    # 3x3 atrous convolution branches with dilation rates 6, 12 and 18
    y_6 = Conv2D(filters=256, kernel_size=3, dilation_rate=6, padding='same',
                 kernel_initializer='he_normal', name='ASPP_conv2d_d6', use_bias=False)(tensor)
    y_6 = BatchNormalization(name='bn_3')(y_6)
    y_6 = Activation('relu', name='relu_3')(y_6)

    y_12 = Conv2D(filters=256, kernel_size=3, dilation_rate=12, padding='same',
                  kernel_initializer='he_normal', name='ASPP_conv2d_d12', use_bias=False)(tensor)
    y_12 = BatchNormalization(name='bn_4')(y_12)
    y_12 = Activation('relu', name='relu_4')(y_12)

    y_18 = Conv2D(filters=256, kernel_size=3, dilation_rate=18, padding='same',
                  kernel_initializer='he_normal', name='ASPP_conv2d_d18', use_bias=False)(tensor)
    y_18 = BatchNormalization(name='bn_5')(y_18)
    y_18 = Activation('relu', name='relu_5')(y_18)

    # Concatenate all five branches and project back to 256 channels
    y = concatenate([y_pool, y_1, y_6, y_12, y_18], name='ASPP_concat')
    y = Conv2D(filters=256, kernel_size=1, dilation_rate=1, padding='same',
               kernel_initializer='he_normal', name='ASPP_conv2d_final', use_bias=False)(y)
    y = BatchNormalization(name='bn_final')(y)
    y = Activation('relu', name='relu_final')(y)
    return y
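The ASPP branches differ only in kernel size and dilation rate, and the dilation rate controls how much context each branch sees. As a small worked example (not part of the original code), the span covered by a dilated kernel is `k + (k - 1) * (d - 1)` for kernel size `k` and dilation rate `d`. The helper name `effective_kernel_size` is made up for illustration.

```python
def effective_kernel_size(kernel_size, dilation_rate):
    """Span, in feature-map pixels, covered by a dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation_rate - 1)


# (kernel_size, dilation_rate) of the four convolutional ASPP branches above
branches = [(1, 1), (3, 6), (3, 12), (3, 18)]
spans = [effective_kernel_size(k, d) for k, d in branches]

for (k, d), span in zip(branches, spans):
    print(f'kernel {k}x{k}, dilation {d}: covers {span}x{span}')
```

For a 160x576 input the feature map fed to ASPP is 10x36 (stride 16), so the branches with larger dilation rates reach beyond the feature map vertically and their outer taps there fall on zero padding.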

def DeepLabV3Plus(img_height, img_width, nclasses=2):
    print('*** Building DeepLabv3Plus Network ***')

    # Load the ResNet50 model and use the outputs of two of its blocks.
    base_model = ResNet50(input_shape=(img_height, img_width, 3), weights='imagenet', include_top=False)

    # As in the model-loading section, you can print the ResNet50 model to inspect
    # its structure. "conv4_block6_2_relu" is a layer name, and .output is that
    # layer's output.
    image_features = base_model.get_layer('conv4_block6_2_relu').output
    x_a = ASPP(image_features)
    x_a = Upsample(tensor=x_a, size=[img_height // 4, img_width // 4])

    # Low-level features at 1/4 resolution, projected to 48 channels
    x_b = base_model.get_layer('conv2_block3_2_relu').output
    x_b = Conv2D(filters=48, kernel_size=1, padding='same',
                 kernel_initializer='he_normal', name='low_level_projection', use_bias=False)(x_b)
    x_b = BatchNormalization(name='bn_low_level_projection')(x_b)
    x_b = Activation('relu', name='low_level_activation')(x_b)

    # Decoder: fuse both feature maps, then refine with two 3x3 convolutions.
    # Note: ReLU is applied inside each Conv2D and again after BatchNormalization.
    x = concatenate([x_a, x_b], name='decoder_concat')
    x = Conv2D(filters=256, kernel_size=3, padding='same', activation='relu',
               kernel_initializer='he_normal', name='decoder_conv2d_1', use_bias=False)(x)
    x = BatchNormalization(name='bn_decoder_1')(x)
    x = Activation('relu', name='activation_decoder_1')(x)

    x = Conv2D(filters=256, kernel_size=3, padding='same', activation='relu',
               kernel_initializer='he_normal', name='decoder_conv2d_2', use_bias=False)(x)
    x = BatchNormalization(name='bn_decoder_2')(x)
    x = Activation('relu', name='activation_decoder_2')(x)
    x = Upsample(x, [img_height, img_width])

    x = Conv2D(nclasses, (1, 1), name='output_layer')(x)
    # No softmax is applied here, so the model outputs logits. Train it with
    # tf.losses.SparseCategoricalCrossentropy(from_logits=True).
    # from_logits: whether `y_pred` is expected to be a logits tensor. By default,
    # the loss assumes that `y_pred` encodes a probability distribution, which is
    # the setting to use if `x = Activation('softmax')(x)` is added here.

    model = Model(inputs=base_model.input, outputs=x, name='DeepLabV3_Plus')
    print(f'*** Output_Shape => {model.output_shape} ***')
    return model
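Since the output layer has no softmax, the loss has to be told that it receives logits. The sketch below illustrates the difference in plain NumPy rather than through the TensorFlow API: the helper functions are simplified stand-ins written for this example, and the logits and labels are made-up values. It shows that the two correct pairings give the same loss, while applying softmax twice gives a different one.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def sparse_ce_from_probs(labels, probs):
    """Mean cross-entropy when predictions are already probabilities."""
    picked = np.take_along_axis(probs, labels[..., None], axis=-1)
    return -np.log(picked).mean()


def sparse_ce_from_logits(labels, logits):
    """Mean cross-entropy when predictions are raw logits."""
    return sparse_ce_from_probs(labels, softmax(logits))


# Made-up logits for two pixels and two classes, with integer class labels
logits = np.array([[2.0, -1.0], [0.5, 1.5]])
labels = np.array([0, 1])

loss_logits = sparse_ce_from_logits(labels, logits)           # logits + from_logits=True
loss_probs = sparse_ce_from_probs(labels, softmax(logits))    # softmax + from_logits=False
loss_double = sparse_ce_from_logits(labels, softmax(logits))  # softmax applied twice

print(loss_logits, loss_probs, loss_double)
```

The third value is what would happen if a softmax activation were added to the model while keeping `from_logits=True`: training still runs, but the loss is distorted because the probabilities are squashed a second time.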