Hive and MySQL on Hadoop 2.7.3

For setting up the Hadoop environment, see: Hadoop Installation

If software keeps failing to install on Ubuntu, see the sources.list notes in the Storm article.

Step 1: Install and prepare MySQL

1. First, install MySQL

Run:

sudo apt-get install mysql-server

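During installation a few dialogs pop up; read them carefully, because they ask you to set the MySQL root password. This guide assumes user root with password root.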

Log in to MySQL with:

sudo mysql -u root -p

Enter the root password set during installation (root in this guide).

Preparing for Hive:

First, download the MySQL JDBC driver:

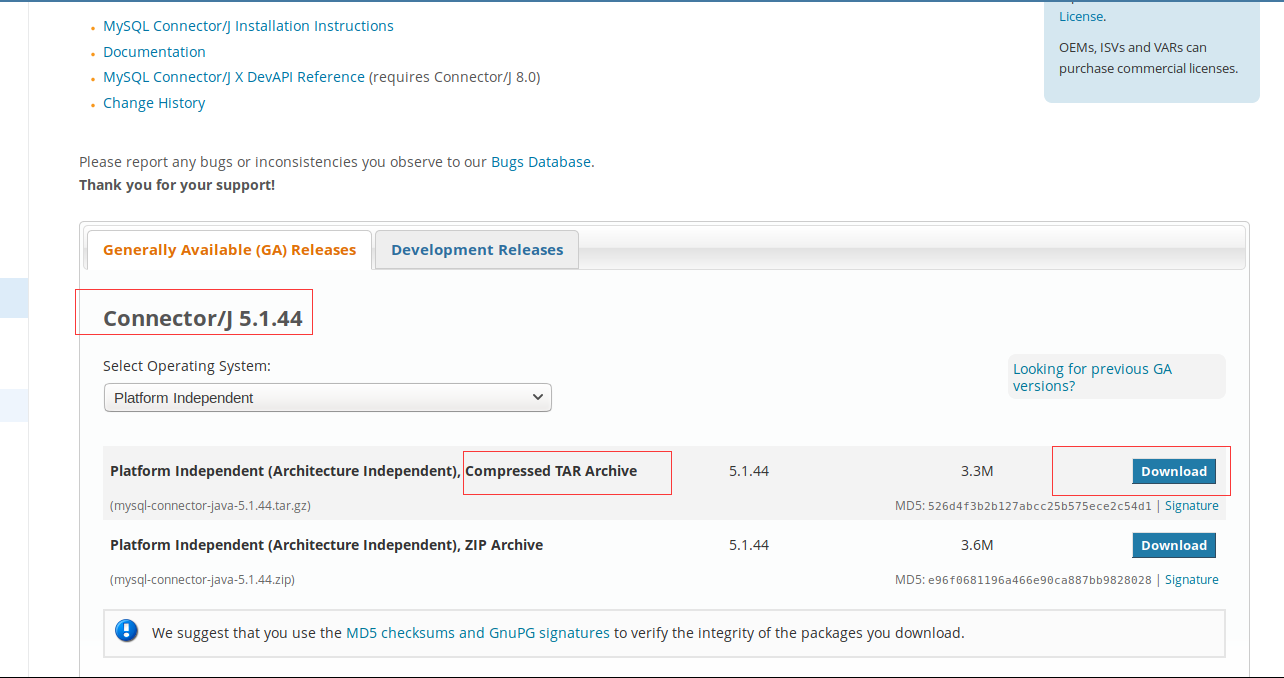

Download mysql-connector-java-5.1.38.tar.gz from:

http://dev.mysql.com/downloads/connector/j/

Later you will extract the jar from this archive and put it into Hive's lib directory.
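Extracting the jar can also be scripted. The sketch below is a minimal Python example, not part of the original procedure; install_connector_jar is a hypothetical helper that assumes the archive contains at least one .jar and prefers the one ending in -bin.jar. Writing into /usr/local usually requires sudo.

```python
import os
import shutil
import tarfile


def install_connector_jar(archive_path, hive_lib_dir):
    """Copy the connector jar out of a .tar.gz archive into Hive's lib directory.

    Hypothetical helper: assumes the archive holds at least one .jar and
    prefers a file ending in -bin.jar when several jars are present.
    """
    with tarfile.open(archive_path, "r:gz") as tar:
        jars = [m for m in tar.getmembers()
                if m.isfile() and m.name.endswith(".jar")]
        if not jars:
            raise FileNotFoundError("no .jar found in " + archive_path)
        # False sorts before True, so *-bin.jar entries come first
        jars.sort(key=lambda m: not m.name.endswith("-bin.jar"))
        member = jars[0]
        # extractfile() streams the member, so the archive's directory
        # layout is not recreated under hive_lib_dir
        source = tar.extractfile(member)
        target = os.path.join(hive_lib_dir, os.path.basename(member.name))
        with open(target, "wb") as out:
            shutil.copyfileobj(source, out)
    return target
```

For example: install_connector_jar("mysql-connector-java-5.1.38.tar.gz", "/usr/local/apache-hive-2.3.0-bin/lib").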

1. Log in to MySQL:

mysql -u root -p

If this fails, prefix the command with sudo. When prompted, enter the password (root).

2. Create the database:

create database hive;

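3. Check that it was created:

show databases;

4. Set the character set (latin1 avoids the "Specified key was too long" error MySQL can raise when Hive creates its metastore tables under utf8):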

alter database hive character set latin1;

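5. Create a user and grant it privileges. Here the user is hive with password hive, which Hive uses to log in to this database:

-- Remove a previous hive user if one exists (skip this line on a fresh install, otherwise it fails)

DROP USER 'hive'@'%';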

create user 'hive'@'%' identified by 'hive';

-- Grant privileges

grant all privileges on *.* to 'hive'@'%' with grant option;

6. Reload the privilege tables:

flush privileges;
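If you prefer to run steps 2 to 6 as one script, a small generator can write it out. This is a hypothetical helper (metastore_setup_sql is not part of any tool); it uses the same database, user, password and latin1 charset as above and does no SQL escaping, so it assumes the names and password contain no quotes.

```python
def metastore_setup_sql(db="hive", user="hive", password="hive", charset="latin1"):
    """Build the MySQL statements from steps 2-6 as one script.

    No escaping is done: db, user and password must not contain quotes.
    CREATE USER fails if the user already exists, as in the manual steps.
    """
    statements = [
        f"CREATE DATABASE IF NOT EXISTS {db};",
        f"ALTER DATABASE {db} CHARACTER SET {charset};",
        f"CREATE USER '{user}'@'%' IDENTIFIED BY '{password}';",
        f"GRANT ALL PRIVILEGES ON *.* TO '{user}'@'%' WITH GRANT OPTION;",
        "FLUSH PRIVILEGES;",
    ]
    return "\n".join(statements) + "\n"
```

Save the returned text as setup.sql and run it with sudo mysql -u root -p < setup.sql.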

Step 2: Install Hive

Download from:

http://www.apache.org/dyn/closer.cgi/hive/

http://mirror.bit.edu.cn/apache/hive/hive-2.3.0/

Download the tar.gz and extract it to:

/usr/local/apache-hive-2.3.0-bin

After installation, configure the environment variables in ~/.bashrc:

gedit ~/.bashrc

#hive

export HIVE_HOME=/usr/local/apache-hive-2.3.0-bin

export PATH=$PATH:${HIVE_HOME}/bin

export CLASSPATH=$CLASSPATH:.:${HIVE_HOME}/lib

Save the file and reload it with source ~/.bashrc.

Edit the Hive configuration. In Hive's conf directory, run:

sudo cp hive-env.sh.template hive-env.sh

The main settings are HADOOP_HOME and HIVE_CONF_DIR. Set their paths as follows:

HADOOP_HOME=/home/../hadoop

export HIVE_CONF_DIR=/home/../hive/conf

# export HADOOP_HEAPSIZE=512

Problems you may encounter:

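Problem 1:

MetaException(message:Version information not found in metastore. )

Solution:

Set "hive.metastore.schema.verification" in conf/hive-site.xml to false; this resolves "Caused by: MetaException(message:Version information not found in metastore. )". (With Hive 2.x the metastore schema can also be created explicitly with schematool -dbType mysql -initSchema.)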
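The same edit can be scripted. A minimal Python sketch, assuming hive-site.xml is well-formed XML with its property elements directly under configuration; set_hive_property is a hypothetical helper. Back up the file first, because ElementTree drops XML comments when it rewrites it.

```python
import xml.etree.ElementTree as ET


def set_hive_property(path, name, value):
    """Set (or add) one <property> in a Hadoop-style XML file such as hive-site.xml.

    ElementTree's default parser drops XML comments, so the rewritten
    file loses the template's comments.
    """
    tree = ET.parse(path)
    root = tree.getroot()  # the <configuration> element
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = value
            break
    else:
        # Property not present yet: append a new one
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    tree.write(path, encoding="UTF-8", xml_declaration=True)
```

For example: set_hive_property("/usr/local/apache-hive-2.3.0-bin/conf/hive-site.xml", "hive.metastore.schema.verification", "false").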

Problem 2:

When configuring Hive with MySQL, Hive fails with "Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D". Solution:

In hive-site.xml, replace the values of the following three properties, which by default use ${system:...} variables, with absolute paths:

hive.querylog.location

hive.exec.local.scratchdir

hive.downloaded.resources.dir
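A minimal Python sketch of this change, assuming the values of these properties still contain the two ${system:...} variables; absolutize_tmp_paths is a hypothetical helper, and /usr/local/hive/iotmp (the directory mentioned later in this post) is only an example value. Other variables such as ${hive.session.id} are left as they are.

```python
import xml.etree.ElementTree as ET

# The three properties listed above
TMP_PROPERTIES = (
    "hive.querylog.location",
    "hive.exec.local.scratchdir",
    "hive.downloaded.resources.dir",
)


def absolutize_tmp_paths(path, tmpdir, user):
    """Substitute ${system:java.io.tmpdir} and ${system:user.name} in the
    three properties with fixed values and return the new values.

    Other variables such as ${hive.session.id} are left untouched.
    ElementTree drops XML comments when it rewrites the file.
    """
    tree = ET.parse(path)
    changed = {}
    for prop in tree.getroot().findall("property"):
        name = (prop.findtext("name") or "").strip()
        value_el = prop.find("value")
        if name in TMP_PROPERTIES and value_el is not None and value_el.text:
            new_value = (value_el.text
                         .replace("${system:java.io.tmpdir}", tmpdir)
                         .replace("${system:user.name}", user))
            value_el.text = new_value
            changed[name] = new_value
    tree.write(path, encoding="UTF-8", xml_declaration=True)
    return changed
```

For example: absolutize_tmp_paths("/usr/local/apache-hive-2.3.0-bin/conf/hive-site.xml", "/usr/local/hive/iotmp", "hadoop").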

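Problem 3: Hive fails to start with a permission error because the user running Hive cannot write to the iotmp directory.

Solution (in the directory containing iotmp; use sudo if iotmp is owned by root):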

chgrp -R hadoop iotmp

chown -R hadoop iotmp

I originally meant to paste a full hive-site.xml here, but the text came out garbled.

For now, here is a download link for a hive-site.xml: click to open link


Here is a working hive-env.sh:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hive and Hadoop environment variables here. These variables can be used

# to control the execution of Hive. It should be used by admins to configure

# the Hive installation (so that users do not have to set environment variables

# or set command line parameters to get correct behavior).

#

# The hive service being invoked (CLI etc.) is available via the environment

# variable SERVICE

# Hive Client memory usage can be an issue if a large number of clients

# are running at the same time. The flags below have been useful in

# reducing memory usage:

#

# if [ "$SERVICE" = "cli" ]; then

# if [ -z "$DEBUG" ]; then

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:+UseParNewGC -XX:-UseGCOverheadLimit"

# else

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"

# fi

# fi

# The heap size of the jvm stared by hive shell script can be controlled via:

#

# export HADOOP_HEAPSIZE=1024

#

# Larger heap size may be required when running queries over large number of files or partitions.

# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be

# appropriate for hive server.

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/usr/local/hadoop

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/usr/local/apache-hive-2.3.0-bin/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/usr/local/apache-hive-2.3.0-bin/lib

Next, edit hive-site.xml.

This file does not exist in the conf directory at first; create it from the template:

cp hive-default.xml.template hive-site.xml

The main properties to change are:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC URL of the metastore database; further parameters such as characterEncoding=UTF-8 are appended with &amp;</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>JDBC driver class</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>MySQL user name</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>
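Because the JDBC URL sits inside XML, any extra parameter joined with & must appear as &amp; in hive-site.xml. A minimal Python sketch that generates the four properties above and lets ElementTree handle that escaping; metastore_jdbc_properties is a hypothetical helper whose defaults mirror this guide (localhost:3306, database hive, user and password hive).

```python
import xml.etree.ElementTree as ET


def metastore_jdbc_properties(host="localhost", port=3306, db="hive",
                              user="hive", password="hive", url_params=None):
    """Return the four javax.jdo.option.* properties as an XML string.

    Extra JDBC URL parameters (e.g. characterEncoding) are joined with '&';
    ElementTree escapes that '&' as '&amp;' as XML requires.
    """
    url = f"jdbc:mysql://{host}:{port}/{db}?createDatabaseIfNotExist=true"
    for key, val in (url_params or {}).items():
        url += f"&{key}={val}"
    conf = ET.Element("configuration")
    for name, value in [
        ("javax.jdo.option.ConnectionURL", url),
        ("javax.jdo.option.ConnectionDriverName", "com.mysql.jdbc.Driver"),
        ("javax.jdo.option.ConnectionUserName", user),
        ("javax.jdo.option.ConnectionPassword", password),
    ]:
        prop = ET.SubElement(conf, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(conf, encoding="unicode")
```

Calling metastore_jdbc_properties(url_params={"characterEncoding": "UTF-8"}) yields a URL value ending in &amp;characterEncoding=UTF-8, ready to paste into hive-site.xml.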


Start Hadoop (from the Hadoop installation directory):

sbin/start-dfs.sh

sbin/start-yarn.sh

Verify the setup: start the hive shell and create a test table:

CREATE EXTERNAL TABLE IF NOT EXISTS pokes

(foo INT,bar STRING)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t' ;

Load the data:

LOAD DATA LOCAL INPATH '/usr/local/files/kv1.txt' OVERWRITE INTO TABLE pokes;

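Note: the separators in kv1.txt must be real Tab characters (the Tab key at the upper left of the keyboard), not spaces.

Other problems you may run into along the way: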
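Instead of retyping every separator by hand, the file can be converted with a short script. A minimal Python sketch, assuming the input has exactly two columns (foo and bar, as in pokes) separated by spaces; to_tab_separated and the input file name are hypothetical.

```python
def to_tab_separated(src, dst):
    """Rewrite a two-column text file so that the first run of spaces or
    tabs on each line becomes a single tab (matching pokes: foo INT, bar STRING).

    Spaces inside the second column are kept; blank lines are skipped.
    """
    with open(src, encoding="utf-8") as fin, \
            open(dst, "w", encoding="utf-8") as fout:
        for line in fin:
            # maxsplit=1: only the first separator becomes a tab
            fields = line.strip().split(None, 1)
            if fields:
                fout.write("\t".join(fields) + "\n")
```

For example: to_tab_separated("kv1_spaces.txt", "/usr/local/files/kv1.txt"), then run the LOAD DATA statement above.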

http://blog.csdn.net/youngqj/article/details/19987727

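When installing Hive, make sure that:

1. MySQL is running.

2. The MySQL user has been created and granted the necessary privileges, and the database port and similar settings are correct.

3. The connector jar (mysql-connector-java-5.1.26-bin.jar here; use the version you downloaded) is in hive/lib. Setting its permissions to 777 is recommended (chmod 777 mysql-connector-java-5.1.26-bin.jar).

4. "hive.metastore.schema.verification" in conf/hive-site.xml is set to false, which resolves "Caused by: MetaException(message:Version information not found in metastore. )".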
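Item 3 is easy to check from a script. A minimal Python sketch, assuming the jar keeps its default mysql-connector-java-*.jar name; check_connector is a hypothetical helper, and it only tests readability for the user running the check, so run it as the user who runs Hive.

```python
import glob
import os


def check_connector(hive_home):
    """Report whether a MySQL connector jar is present and readable in hive_home/lib."""
    lib_dir = os.path.join(hive_home, "lib")
    jars = sorted(glob.glob(os.path.join(lib_dir, "mysql-connector-java-*.jar")))
    if not jars:
        return "missing: copy mysql-connector-java-*.jar into " + lib_dir
    # os.access checks permissions for the current (real) user
    unreadable = [j for j in jars if not os.access(j, os.R_OK)]
    if unreadable:
        return "not readable: " + ", ".join(unreadable)
    return "ok: " + ", ".join(os.path.basename(j) for j in jars)
```

With HIVE_HOME exported in ~/.bashrc, print(check_connector(os.environ["HIVE_HOME"])) shows the result.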

Debug mode: hive -hiveconf hive.root.logger=DEBUG,console

The schema verification setting in hive-site.xml:

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manully migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

Problem:

java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

Reference: http://blog.csdn.net/lanchunhui/article/details/50858092

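Solution:

In hive-site.xml, set the following three properties, whose default values use ${system:...} variables, to absolute paths:

hive.querylog.location

hive.exec.local.scratchdir

hive.downloaded.resources.dir

Finally, start the metastore: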

hive --service metastore -p <port_num>

The port used here is 25856.
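To confirm that the metastore (or MySQL on port 3306) is actually accepting connections, a plain TCP check is enough. A minimal Python sketch; is_listening is a hypothetical helper, and a True result only proves that something is listening on the port, not that it is the Hive metastore.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable host and timeouts
        return False
```

For example: is_listening("localhost", 25856) for the metastore started above, and is_listening("localhost", 3306) for MySQL.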

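Another problem you may run into:

Hive fails to start with a permission error. At first I thought it was a MySQL connection problem, but it turned out that a directory was not writable.

The Hive configuration points at /usr/local/hive/iotmp. That directory had been created with the root account, while Hive was run as the hadoop user, which caused the error.

Reference: http://www.cnblogs.com/30go/p/7132896.html

Solution (in /usr/local/hive; changing ownership of a root-owned directory requires sudo):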

chgrp -R hadoop iotmp

chown -R hadoop iotmp
