# -*- coding: utf-8 -*- import tensorflow as tf import numpy as np import helper ####################################################### 定义模型 PAD = 0 EOS = 1 # UNK = 2 # GO = 3 vocab_size = 10 #字典中共有10个数字 input_embedding_size = 20 #词向量的维度,即每个词用多个数字表示 encoder_hidden_units = 20 decoder_hidden_units = encoder_hidden_units encoder_inputs = tf.placeholder(shape=(None, None), dtype=tf.int32, name='encoder_inputs') decoder_targets = tf.placeholder(shape=(None, None), dtype=tf.int32, name='decoder_targets') decoder_inputs = tf.placeholder(shape=(None, None), dtype=tf.int32, name='decoder_inputs') ####################################################### embedding embeddings = tf.Variable(tf.truncated_normal([vocab_size, input_embedding_size], mean=0.0, stddev=0.1), dtype=tf.float32)#[10,20] encoder_inputs_embedded = tf.nn.embedding_lookup(embeddings, encoder_inputs) decoder_inputs_embedded = tf.nn.embedding_lookup(embeddings, decoder_inputs) #print(encoder_inputs_embedded)#shape=(?, ?, 20) ####################################################### 编码 encoder_cell = tf.contrib.rnn.BasicLSTMCell(encoder_hidden_units) lstm_layers = 4 cell = tf.contrib.rnn.MultiRNNCell([encoder_cell] * lstm_layers) encoder_outputs, encoder_final_state = tf.nn.dynamic_rnn(cell,encoder_inputs_embedded, dtype=tf.float32,time_major=True) del encoder_outputs#删除了 encoder_outputs, 因为在这个场景中我们是不关注的,我们需要的是最后的 encoder_final_state #print(encoder_final_state) #如果没有引入attention机制,encoder_final_state 就是decoder的唯一输入, #用他来作为decoder的init_state来解出decoder_targets。 ####################################################### 解码 decoder_cell = tf.contrib.rnn.BasicLSTMCell(decoder_hidden_units) decoder = tf.contrib.rnn.MultiRNNCell([decoder_cell] * lstm_layers) decoder_outputs, decoder_final_state = tf.nn.dynamic_rnn( decoder, decoder_inputs_embedded, initial_state=encoder_final_state, dtype=tf.float32, time_major=True, scope="plain_decoder", ) decoder_logits = tf.contrib.layers.fully_connected(decoder_outputs,vocab_size,activation_fn=None, weights_initializer = tf.truncated_normal_initializer(stddev=0.1), biases_initializer=tf.zeros_initializer()) #print(decoder_logits)#shape=(?, ?, 10) decoder_prediction = tf.argmax(decoder_logits,2)#2表明的是在哪个维度上求 argmax #print(decoder_prediction)#shape=(?, ?) stepwise_cross_entropy = tf.nn.softmax_cross_entropy_with_logits( labels=tf.one_hot(decoder_targets, depth=vocab_size, dtype=tf.float32), logits=decoder_logits, ) loss = tf.reduce_mean(stepwise_cross_entropy) train_op = tf.train.AdamOptimizer().minimize(loss) ####################################################### 模拟训练 #我们为了简单起见,产生了随机的输入序列,然后decoder原模原样的输出 batch_size = 100 batches = helper.random_sequences(length_from=3, length_to=8, vocab_lower=2, vocab_upper=10, batch_size=batch_size) print('head of the batch:') for seq in next(batches)[:10]: print(seq) def next_feed(): batch = next(batches)#每次随机产生100一维个数组,合并为一个二维数组 #print('batch__________',batch) encoder_inputs_, _ = helper.batch(batch) #print('encoder_inputs_',encoder_inputs_) decoder_targets_, _ = helper.batch( [(sequence) + [EOS] for sequence in batch] ) #print('decoder_targets_',decoder_targets_) decoder_inputs_, _ = helper.batch( [[EOS] + (sequence) for sequence in batch] ) #print('decoder_inputs_',decoder_inputs_) return { encoder_inputs: encoder_inputs_, decoder_inputs: decoder_inputs_, decoder_targets: decoder_targets_, } loss_track = [] max_batches = 10001 batches_in_epoch = 1000 with tf.Session() as sess: sess.run(tf.global_variables_initializer()) try: for batch in range(max_batches):#10001 fd = next_feed() _, l = sess.run([train_op, loss], fd) loss_track.append(l) if batch == 0 or batch % batches_in_epoch == 0: print('batch {}'.format(batch)) print(' minibatch loss: {}'.format(sess.run(loss, fd))) predict_ = sess.run(decoder_prediction, fd) for i, (inp, pred) in enumerate(zip(fd[encoder_inputs].T, predict_.T)): print(' sample {}:'.format(i + 1)) print(' input > {}'.format(inp)) print(' predicted > {}'.format(pred)) if i >= 2: break print() except KeyboardInterrupt: print('training interrupted')

以上代码为摘自 https://github.com/zhuanxuhit/nd101/blob/master/1.Intro_to_Deep_Learning/11.How_to_Make_a_Language_Translator/1-seq2seq.ipynb 中的Seq2Seq解读。

训练过程还需要 helper.py,可以从网址中找到复制到自己路径下。

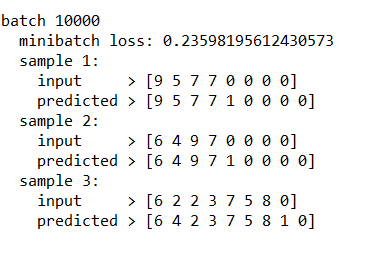

我训练3001代不能达到很好的效果,于是就选取了10001代。

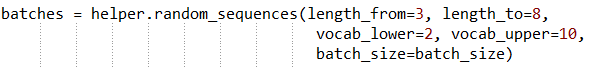

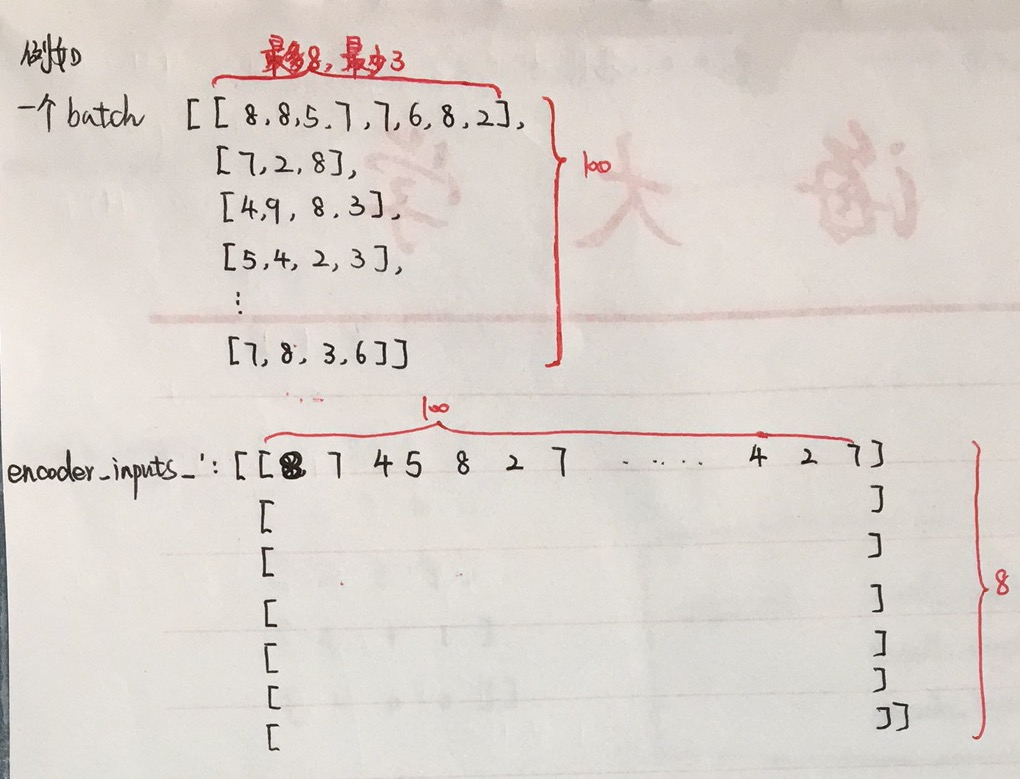

在生成输入序列时,设定序列最小长度为3,最大长度为8。每个序列的包含的数字为2~9。(由于最后需要加上加上标志1,代表解码器的输入及输出序列开始或结束,长度不够的地方需要padding填0)

在读代码时候,对于网络的输入数据结构不是很清楚,也就是下面一段代码

def next_feed(): batch = next(batches)#每次随机产生100一维个数组,合并为一个二维数组 #print('batch__________',batch) encoder_inputs_, _ = helper.batch(batch) #print('encoder_inputs_',encoder_inputs_) decoder_targets_, _ = helper.batch( [(sequence) + [EOS] for sequence in batch] ) #print('decoder_targets_',decoder_targets_) decoder_inputs_, _ = helper.batch( [[EOS] + (sequence) for sequence in batch] ) #print('decoder_inputs_',decoder_inputs_) return { encoder_inputs: encoder_inputs_, decoder_inputs: decoder_inputs_, decoder_targets: decoder_targets_, }

在输出部分的代码中,.T代表矩阵转置

batch_size = 100

我看了很多遍输入输出数据没有看明白,在经过打印之后就更模糊了,于是我将他们写出来,并举个小例子来为大家解读。

最后训练出的结果为