http://blog.csdn.net/pipisorry/article/details/78404964

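After training a natural language generation model, we need to use it to generate new text (sentences). The usual ways to generate these sentences are: sampling, beam search, and random search.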

Sampling (greedy search)

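Just sample the first word according to p_1, then provide the corresponding embedding as input and sample from p_2, continuing like this until we sample the special end-of-sentence token or reach some maximum length. Greedy search is the deterministic counterpart: at each step it takes the most probable word instead of sampling.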
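A minimal sketch of this decoding loop, using only the Python standard library. The function `toy_next_word_probs` is a hypothetical stand-in for a trained model (it is not from the paper), and the `EOS` token name is an assumption; a real decoder would run the network on the previous word's embedding to obtain p_t.

```python
import random

EOS = "</s>"  # assumed name for the end-of-sentence token

def toy_next_word_probs(prefix):
    """Hypothetical model: returns the distribution p_t given the words so far."""
    if len(prefix) >= 3:
        return {EOS: 1.0}
    return {"a": 0.5, "b": 0.3, EOS: 0.2}

def decode(next_word_probs, max_len=20, greedy=False, rng=random):
    """Generate one sentence by sampling, or by argmax when greedy=True."""
    sentence = []
    for _ in range(max_len):
        probs = next_word_probs(sentence)
        words = list(probs)
        if greedy:
            word = max(words, key=probs.get)  # most probable word
        else:
            weights = [probs[w] for w in words]
            word = rng.choices(words, weights=weights, k=1)[0]  # sample from p_t
        if word == EOS:
            break
        sentence.append(word)  # the chosen word becomes the next input
    return sentence

print(decode(toy_next_word_probs))               # a random sentence
print(decode(toy_next_word_probs, greedy=True))  # always the same sentence
```

Sampling gives a different sentence on each call, while the greedy variant is deterministic.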

Beam Search

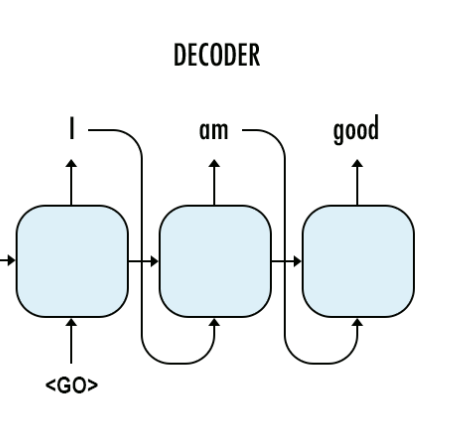

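In sequence-to-sequence models [Deep Learning: Seq2seq Models], beam search is used only at test time (when the decoder is decoding). During training, every decoder output has a ground-truth target, so beam search is not needed to improve output accuracy.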

The decoder at prediction time

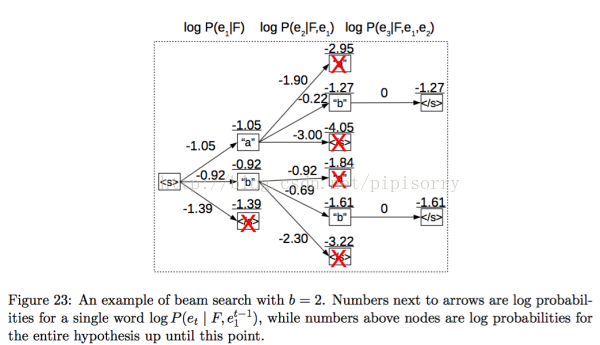

BeamSearch: iteratively consider the set of the k best sentences up to time t as candidates to generate sentences of size t + 1, and keep only the resulting best k of them.

Beam search gives a good approximation to the search for the most probable sentence under the model.

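Author's note: at one extreme, a beam size of 1 is just greedy search. At the other extreme, the largest beam size would presumably be the vocabulary size, in which case the Viterbi algorithm [HMM: Hidden Markov Models - Prediction and Decoding] would of course be the best choice. Note that Viterbi is exact only when the score of each word depends on a bounded history, as in an HMM; an RNN decoder conditions on the whole prefix, so exact search grows exponentially with sentence length.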

Beam Search Example

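At test time, suppose the vocabulary has 3 entries, a, b, c, and the beam size is 2.

1: When generating the first word, pick the 2 most probable words, say a and c. The current sequences are then a and c.

2: When generating the second word, combine each of the current sequences a and c with every word in the vocabulary, which gives 6 new sequences: aa ab ac ca cb cc. Then keep the 2 highest-scoring ones as the current sequences, say aa and cb.

3: Keep repeating this process until the end-of-sentence token is reached. The final output is the 2 highest-scoring sequences.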
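The steps above can be sketched in Python as follows. The probability table is made up so that the search reproduces the sequences in the example (a and c, then aa and cb); `toy_next_word_probs`, `EOS`, and `beam_search` are hypothetical names, and a real decoder would query a trained model instead of a lookup table. This is one simple variant of beam search (finished sequences are set aside as soon as they end), not a definitive implementation.

```python
import math

EOS = "</s>"  # assumed name for the end-of-sentence token

def toy_next_word_probs(seq):
    """Hypothetical model: a hand-made table over the vocabulary a, b, c."""
    table = {
        (): {"a": 0.5, "b": 0.1, "c": 0.4},
        ("a",): {"a": 0.6, "b": 0.2, "c": 0.2},
        ("c",): {"a": 0.1, "b": 0.8, "c": 0.1},
    }
    return table.get(seq, {EOS: 1.0})  # every other prefix ends the sentence

def beam_search(next_word_probs, beam_size=2, max_len=10):
    """Keep the beam_size best partial sequences at every step."""
    beams = [((), 0.0)]  # pairs of (sequence, log-probability)
    finished = []
    for _ in range(max_len):
        # Extend every current sequence with every possible next word.
        candidates = []
        for seq, score in beams:
            for word, p in next_word_probs(seq).items():
                if p > 0:
                    candidates.append((seq + (word,), score + math.log(p)))
        # Keep only the beam_size highest-scoring extensions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            if seq[-1] == EOS:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # sequences cut off by max_len
    finished.sort(key=lambda c: c[1], reverse=True)
    return finished[:beam_size]

for seq, score in beam_search(toy_next_word_probs, beam_size=2):
    print(" ".join(seq), round(math.exp(score), 2))
```

With `beam_size=1` the same function reduces to greedy search. On this toy table it then returns aa (probability 0.30) and misses cb (probability 0.32), which the beam of size 2 finds.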

[How does the beam search algorithm in seq2seq work?] [The beam search algorithm in seq2seq]

Choosing the Beam Size

In general, a larger beam gives better results: if the model was well trained and the likelihood was aligned with human judgement, increasing the beam size should always yield better sentences.

If it does not, the model may have overfitted, or the training objective may not match the evaluation criterion: The fact that we obtained the best performance with a relatively small beam size is an indication that either the model has overfitted or the objective function used to train it (likelihood) is not aligned with human judgement.

Reducing the beam size makes the generated sentences more novel: reducing the beam size (i.e., with a shallower search over sentences), we increase the novelty of generated sentences. Indeed, instead of generating captions which repeat training captions 80 percent of the time, this gets reduced to 60 percent.

Better results with a smaller beam size suggest that the model has overfitted, and also that reducing the beam size acts as a form of regularization: This hypothesis supports the fact that the model has overfitted to the training set, and we see this reduced beam size technique as another way to regularize (by adding some noise to the inference process).

[Vinyals, O., Toshev, A., Bengio, S., & Erhan, D. Show and tell: Lessons learned from the 2015 MSCOCO image captioning challenge. TPAMI 2017]

Random Search

...

Scheduled Sampling

Curriculum learning strategy: Bengio et al. proposed a curriculum learning strategy to gently change the training process from a fully guided scheme using the true previous word, towards a less guided scheme which mostly uses the model-generated word instead.

[S. Bengio, O. Vinyals, N. Jaitly, and N. Shazeer, “Scheduled sampling for sequence prediction with recurrent neural networks,” NIPS2015.]
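A minimal sketch of the idea, assuming the inverse sigmoid decay schedule, which is one of the schedules described in the cited paper. The function names and the value of `k` are illustrative choices, not taken from the paper's code. At each decoding step during training, the true previous word is fed with probability epsilon, and the model's own prediction otherwise; epsilon shrinks as training proceeds.

```python
import math
import random

def inverse_sigmoid_decay(step, k=100.0):
    """Probability of feeding the true previous word at a given training step."""
    x = step / k
    if x > 700:  # math.exp would overflow; the probability is effectively 0
        return 0.0
    return k / (k + math.exp(x))

def choose_previous_word(true_word, model_word, epsilon, rng=random):
    """Use the ground truth with probability epsilon, else the model's word."""
    return true_word if rng.random() < epsilon else model_word

# Early in training the decoder is almost fully guided; later it mostly
# sees its own predictions, as it will at inference time.
for step in (0, 500, 2000):
    eps = inverse_sigmoid_decay(step)
    print(step, round(eps, 3), choose_previous_word("gold", "predicted", eps))
```

The larger `k` is, the longer training stays close to the fully guided scheme before the schedule starts to decay.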

