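1. Scraping product information from JD.com

What it does: search JD.com for "墨菲定律" (Murphy's Law) and scrape the information for every product in the results. Once a page has been scraped, the script moves on to the next page and repeats until all results have been collected.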

```python
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.by import By
import time


def get_good(driver):
    number = 1  # running serial number, kept across pages
    while True:
        time.sleep(5)
        # Scroll down so that lazily loaded products are rendered
        js_code = '''
            window.scrollTo(0,5000)
        '''
        driver.execute_script(js_code)
        time.sleep(5)

        good_list = driver.find_elements(By.CLASS_NAME, 'gl-item')
        for good in good_list:
            good_name = good.find_element(By.CSS_SELECTOR, '.p-name em').text
            good_url = good.find_element(By.CSS_SELECTOR, '.p-name a').get_attribute('href')
            good_price = good.find_element(By.CLASS_NAME, 'p-price').text
            good_commit = good.find_element(By.CLASS_NAME, 'p-commit').text

            good_content = f'''
            No.: {number}
            Product name: {good_name}
            Product link: {good_url}
            Product price: {good_price}
            Product reviews: {good_commit}
            \n
            '''
            print(good_content)
            # Append each record to the output file
            with open('jd3.text', 'a', encoding='utf-8') as f:
                f.write(good_content)
            number += 1
        print("Product information written successfully!")

        # Go to the next page; stop when there is no "next" button
        try:
            next_tag = driver.find_element(By.CLASS_NAME, 'pn-next')
        except NoSuchElementException:
            break
        next_tag.click()
        time.sleep(5)


if __name__ == '__main__':
    driver = webdriver.Chrome()
    try:
        driver.implicitly_wait(10)
        driver.get('https://www.jd.com/')
        input_tag = driver.find_element(By.ID, 'key')
        input_tag.send_keys('墨菲定律')  # search keyword
        input_tag.send_keys(Keys.ENTER)
        get_good(driver)
    finally:
        # Close the browser exactly once, whether or not scraping succeeded
        driver.close()
```

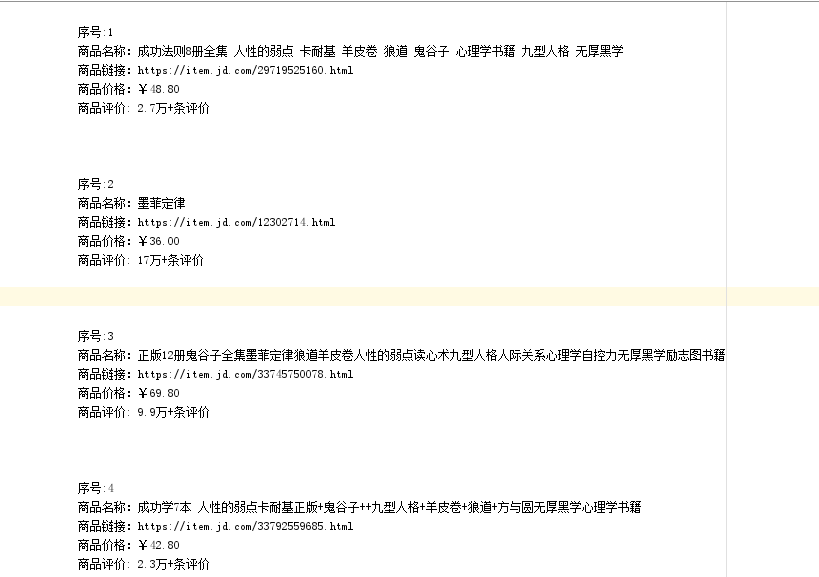

部分结果截图展示:
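The page-by-page flow described in this section (scrape one page, then move to the next until none is left) can be looked at separately from the browser. The following is a minimal sketch under assumptions: `fetch_page` is a hypothetical callable standing in for the Selenium code, returning the items on a page plus a flag that says whether a next page exists. The names `iter_goods` and `fake_fetch` and the sample data are illustrative only.

```python
from typing import Callable, Dict, Iterator, List, Tuple

# (items found on the page, whether a next page exists)
Page = Tuple[List[str], bool]


def iter_goods(fetch_page: Callable[[int], Page]) -> Iterator[Tuple[int, str]]:
    """Yield (serial number, item) pairs across all pages."""
    page_no = 1
    number = 1  # the serial number keeps counting across pages
    while True:
        items, has_next = fetch_page(page_no)
        for item in items:
            yield number, item
            number += 1
        if not has_next:
            break
        page_no += 1


# Hypothetical stand-in for the browser: two pages of made-up results
FAKE_PAGES: Dict[int, Page] = {
    1: (["book A", "book B"], True),
    2: (["book C"], False),
}


def fake_fetch(page_no: int) -> Page:
    return FAKE_PAGES[page_no]


results = list(iter_goods(fake_fetch))
print(results)  # [(1, 'book A'), (2, 'book B'), (3, 'book C')]
```

Using a loop rather than recursion keeps the call stack flat however many result pages there are, and the serial number does not restart on each page.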

2. Dragging an element to a target position

2.1 Instant drag

```python
from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
import time

driver = webdriver.Chrome()
try:
    driver.implicitly_wait(10)
    driver.get('https://www.runoob.com/try/try.php?filename=jqueryui-api-droppable')
    time.sleep(5)
    # The demo is rendered inside an iframe, so switch into it first
    driver.switch_to.frame('iframeResult')
    time.sleep(1)
    source = driver.find_element(By.ID, 'draggable')
    target = driver.find_element(By.ID, 'droppable')
    # Drag the source element onto the target in a single action
    ActionChains(driver).drag_and_drop(source, target).perform()
    time.sleep(10)
finally:
    driver.close()
```

2.2 Slow drag

```python
from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
import time

driver = webdriver.Chrome()
try:
    driver.implicitly_wait(10)
    driver.get('https://www.runoob.com/try/try.php?filename=jqueryui-api-droppable')
    time.sleep(5)
    # The demo is rendered inside an iframe, so switch into it first
    driver.switch_to.frame('iframeResult')
    time.sleep(1)
    source = driver.find_element(By.ID, 'draggable')
    target = driver.find_element(By.ID, 'droppable')
    # Horizontal distance between the two elements
    distance = target.location['x'] - source.location['x']
    # Press and hold the source element
    ActionChains(driver).click_and_hold(source).perform()
    s = 0
    while s < distance:
        # Move 5 pixels to the right per step
        ActionChains(driver).move_by_offset(xoffset=5, yoffset=0).perform()
        s += 5
        time.sleep(0.1)
    # Release the mouse button to drop the element
    ActionChains(driver).release().perform()
    time.sleep(10)
finally:
    driver.close()
```
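The loop in 2.2 always moves in fixed 5-pixel steps, so when the distance is not a multiple of 5 the element ends up a few pixels past the computed offset. Below is a minimal sketch of one way to plan the steps so that they add up to exactly the distance. `drag_steps` is a hypothetical helper written for this note, not part of Selenium.

```python
from typing import List


def drag_steps(distance: int, step: int = 5) -> List[int]:
    """Split a horizontal distance into moves of at most `step` pixels.

    The returned offsets sum to exactly `distance`; a non-positive
    distance yields no moves at all.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    steps: List[int] = []
    remaining = max(distance, 0)
    while remaining > 0:
        move = min(step, remaining)  # the last move may be shorter
        steps.append(move)
        remaining -= move
    return steps


print(drag_steps(12))  # [5, 5, 2]
```

Each value in the list could then be passed as `xoffset` to `move_by_offset` in place of the fixed 5.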

3. BeautifulSoup4

```python
html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="sister"><b>$37</b></p>

<p class="story" id="p">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" >Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>

<p class="story">...</p>
"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_doc, 'lxml')

# Uncomment the lines below one group at a time to try them.

# 1. Direct access by tag name (returns the first matching tag)
# print(soup.html)
# print(type(soup.html))
# print(soup.a)
# print(soup.p)

# 2. Get the name of a tag
# print(soup.a.name)

# 3. Get the attributes of a tag
# print(soup.a.attrs)
# print(soup.a.attrs['href'])
# print(soup.a.attrs['class'])

# 4. Get the text content of a tag
# print(soup.p.text)

# 5. Nested selection
# print(soup.html.body.p)

# 6. Child nodes and descendant nodes
# print(soup.p.children)  # returns an iterator object
# print(list(soup.p.children))

# 7. Parent node and ancestor nodes
# print(soup.b.parent)
# print(soup.b.parents)
# print(list(soup.b.parents))
```
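Step 6 above mentions both child and descendant nodes but only demonstrates `.children`. Here is a small sketch of the difference, together with the `.parents` chain from step 7. The markup is a shortened, made-up fragment, and it uses the built-in `html.parser` instead of `lxml` so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Shortened, illustrative fragment (not the html_doc from the notes)
html = '<p id="p">Once <a href="#"><b>Elsie</b></a> and <a href="#">Lacie</a></p>'
soup = BeautifulSoup(html, "html.parser")
p = soup.p

# .children yields only the direct children (text nodes included);
# keep just the tags here
child_tags = [c.name for c in p.children if isinstance(c, Tag)]

# .descendants walks the whole subtree, so the nested <b> shows up too
descendant_tags = [d.name for d in p.descendants if isinstance(d, Tag)]

# .parents walks upward from a tag to the document root
ancestor_names = [a.name for a in soup.b.parents]

print(child_tags)       # ['a', 'a']
print(descendant_tags)  # ['a', 'b', 'a']
print(ancestor_names)   # ['a', 'p', '[document]']
```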

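Summary:

find: returns the first match

find_all: returns all matches

Both accept the following arguments:

name --- the tag name

attrs --- match on attributes

text --- match on text content (this argument is named `string` in Beautiful Soup 4.4.0 and later)

Filters that can be used for the tag name:

------ String filter: matches the whole tag name exactly

------ Regex filter: matches against a pattern compiled with the re module

------ List filter: matches any item in the list

------ Bool filter: True matches every tag

------ Function filter: a custom function decides the match, which is useful for finding tags that have certain attributes and lack others

Attribute keyword arguments:

------ class_

------ id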
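The filters summarized above can be tried out as follows. This is a minimal sketch under assumptions: the markup is a trimmed version of the sample document, the built-in `html.parser` is used instead of `lxml`, and `has_class_but_no_id` is an illustrative function name.

```python
import re
from bs4 import BeautifulSoup

html = """
<p class="sister"><b>$37</b></p>
<p class="story" id="p">
<a href="http://example.com/elsie" class="sister">Elsie</a>
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
</p>
"""
soup = BeautifulSoup(html, "html.parser")

# String filter: exact tag name; find returns only the first match
first_link = soup.find("a")

# Regex filter: tag names that start with "b"
by_regex = soup.find_all(re.compile("^b"))

# List filter: tag names that appear in the list
by_list = soup.find_all(["a", "b"])

# Bool filter: True matches every tag
all_tags = soup.find_all(True)


# Function filter: tags that have a class attribute but no id attribute
def has_class_but_no_id(tag):
    return tag.has_attr("class") and not tag.has_attr("id")


by_func = soup.find_all(has_class_but_no_id)

# Attribute matching: class_ and id keywords, or an attrs dictionary
by_class = soup.find_all(class_="sister")
by_id = soup.find(id="link2")
by_attrs = soup.find_all("a", attrs={"id": "link3"})

# Text matching (the argument is called `string` in current versions)
by_text = [str(s) for s in soup.find_all(string="Elsie")]

print(first_link.text)               # Elsie
print([t.name for t in by_regex])    # ['b']
print([t.name for t in by_list])     # ['b', 'a', 'a', 'a']
print(len(all_tags))                 # 6
print([t.name for t in by_func])     # ['p', 'a']
print(len(by_class), by_id.text)     # 4 Lacie
print([t.text for t in by_attrs])    # ['Tillie']
print(by_text)                       # ['Elsie']
```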