作业要求来源:https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/3159

可以用pandas读出之前保存的数据:

newsdf = pd.read_csv(r'F:\duym\gzccnews.csv')

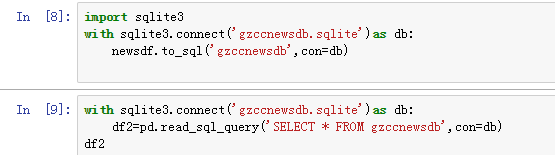

一.把爬取的内容保存到数据库sqlite3

import sqlite3

with sqlite3.connect('gzccnewsdb.sqlite') as db:

newsdf.to_sql('gzccnews',con = db)

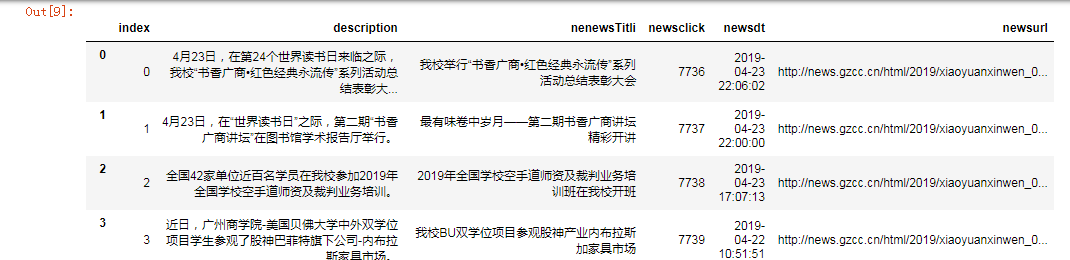

with sqlite3.connect('gzccnewsdb.sqlite') as db:

df2 = pd.read_sql_query('SELECT * FROM gzccnews',con=db)

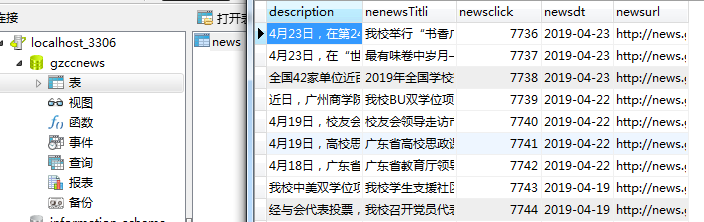

保存到MySQL数据库

- import pandas as pd

- import pymysql

- from sqlalchemy import create_engine

- conInfo = "mysql+pymysql://user:passwd@host:port/gzccnews?charset=utf8"

- engine = create_engine(conInfo,encoding='utf-8')

- df = pd.DataFrame(allnews)

- df.to_sql(name = ‘news', con = engine, if_exists = 'append', index = False)

二.爬虫综合大作业

- 选择一个热点或者你感兴趣的主题。

- 选择爬取的对象与范围。

- 了解爬取对象的限制与约束。

- 爬取相应内容。

- 做数据分析与文本分析。

- 形成一篇文章,有说明、技术要点、有数据、有数据分析图形化展示与说明、文本分析图形化展示与说明。

- 文章公开发布。

我感兴趣的主题:最近重温怦然心动电影,爬取其评论

爬取对象:猫眼http://m.maoyan.com/movie/46818?_v_=yes&channelId=4&cityId=20&$from=canary#

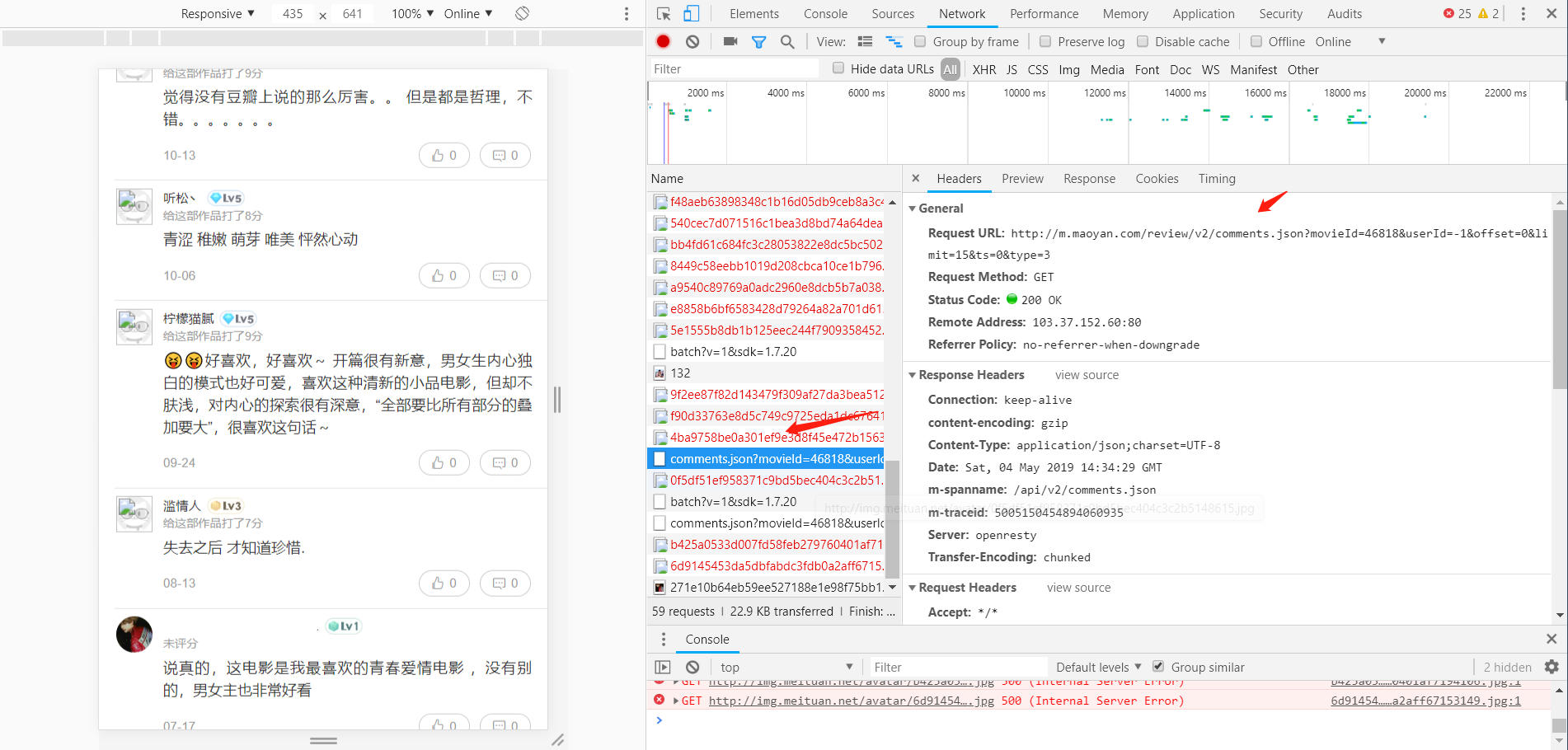

获取的是猫眼APP的评论数据,如图所示::

通过分析发现猫眼APP的评论数据接口为:http://m.maoyan.com/review/v2/comments.json?movieId=46818&userId=-1&offset=0&limit=15&ts=0&type=3

或者使用网上借鉴资料接口:

http://m.maoyan.com/mmdb/comments/movie/46818.json?_v_=yes&offset=0&startTime=2015-05-25%2019%3A17%3A16

代码实现:

先定义一个函数,用来根据指定url获取数据,且只能获取到指定的日期向前获取到15条评论数据

# 获取数据,根据url获取 def get_data(url): headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36' } req = request.Request(url, headers=headers) response = request.urlopen(req) if response.getcode() == 200: return response.read() return None

处理数据:

# 处理数据 def parse_data(html): data = json.loads(html)['cmts'] # 将str转换为json comments = [] for item in data: comment = { 'id': item['id'], 'nickName': item['nickName'], 'cityName': item['cityName'] if 'cityName' in item else '', # 处理cityName不存在的情况 'content': item['content'].replace('\n', ' ', 10), # 处理评论内容换行的情况 'score': item['score'], 'startTime': item['startTime'] } comments.append(comment) return comments

存储数据:

# 存储数据,存储到文本文件 def save_to_txt(): start_time = datetime.now().strftime('%Y-%m-%d %H:%M:%S') # 获取当前时间,从当前时间向前获取 end_time = '2015-06-13 00:00:00' while start_time > end_time: url = 'http://m.maoyan.com/mmdb/comments/movie/46818.json?_v_=yes&offset=0&startTime=' + start_time.replace( ' ', '%20') html = None ''' 问题:当请求过于频繁时,服务器会拒绝连接,实际上是服务器的反爬虫策略 解决:1.在每个请求间增加延时0.1秒,尽量减少请求被拒绝 2.如果被拒绝,则0.5秒后重试 ''' try: html = get_data(url) except Exception as e: time.sleep(0.5) html = get_data(url) else: time.sleep(0.1) comments = parse_data(html) print(comments) start_time = comments[14]['startTime'] # 获得末尾评论的时间 start_time = datetime.strptime(start_time, '%Y-%m-%d %H:%M:%S') + timedelta( seconds=-1) # 转换为datetime类型,减1秒,避免获取到重复数据 start_time = datetime.strftime(start_time, '%Y-%m-%d %H:%M:%S') # 转换为str for item in comments: with open('comments.txt', 'a', encoding='utf-8') as f: f.write(str(item['id']) + ',' + item['nickName'] + ',' + item['cityName'] + ',' + item[ 'content'] + ',' + str(item['score']) + ',' + item['startTime'] + '\n')

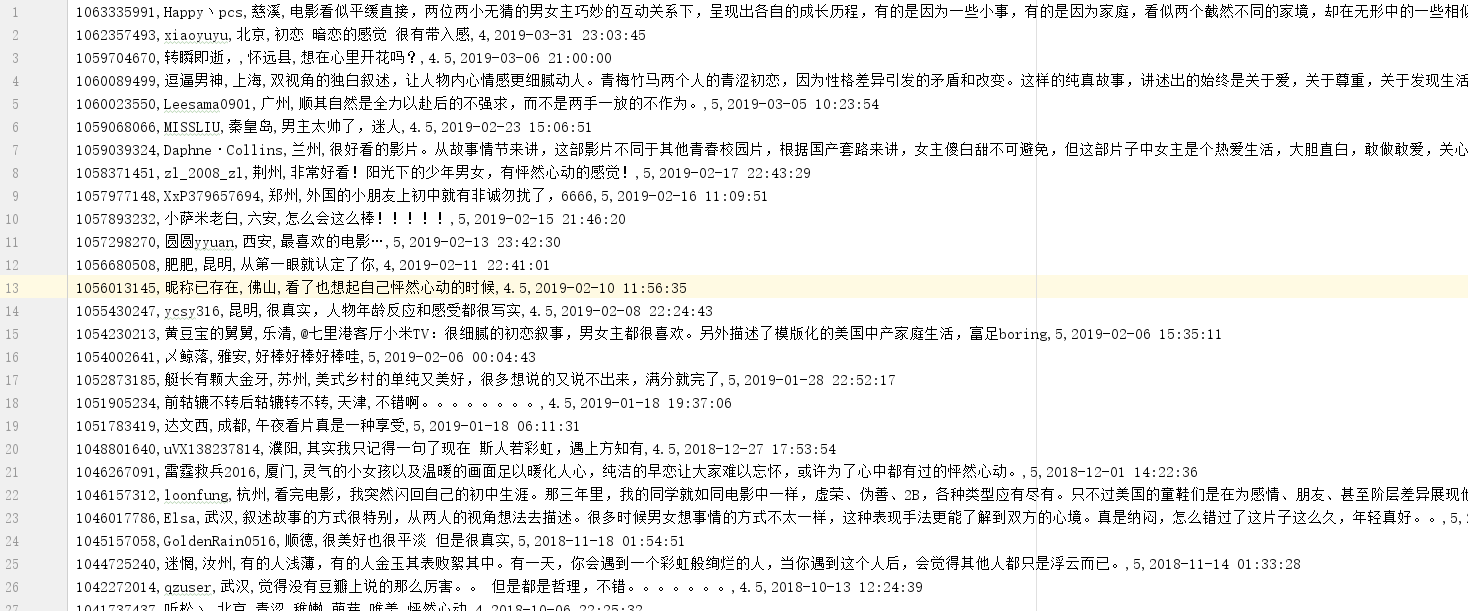

存储的数据:

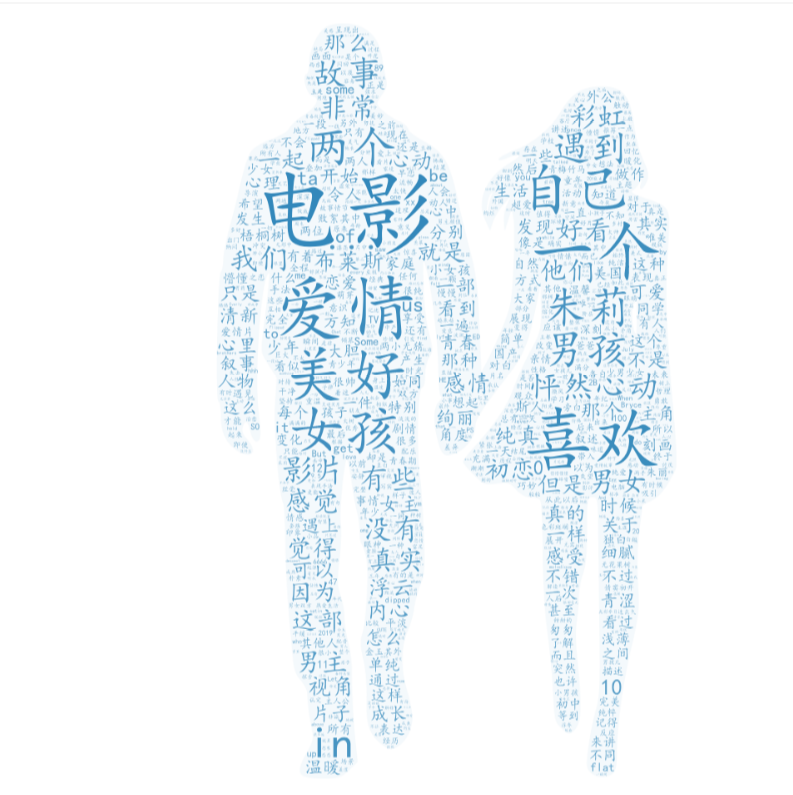

评论词云图:

# coding=utf-8 # 导入jieba模块,用于中文分词 import jieba # 获取所有评论 import pandas as pd comments = [] with open('comments.txt', mode='r', encoding='utf-8') as f: rows = f.readlines() for row in rows: comment = row.split(',')[3] if comment != '': comments.append(comment) # 设置分词 comment_after_split = jieba.cut(str(comments), cut_all=False) # 非全模式分词,cut_all=false words = " ".join(comment_after_split) # 以空格进行拼接 print(words) ch="《》\n:,。、-!?" for c in ch: words = words.replace(c,'') wordlist=jieba.lcut(words) wordict={} for w in wordlist: if len(w)==1: continue else: wordict[w] = wordict.get(w,0)+1 wordsort=list(wordict.items()) wordsort.sort(key= lambda x:x[1],reverse=True) for i in range(20): print(wordsort[i]) pd.DataFrame(wordsort).to_csv('xindong.csv', encoding='utf-8')

怦然心动词云截图:

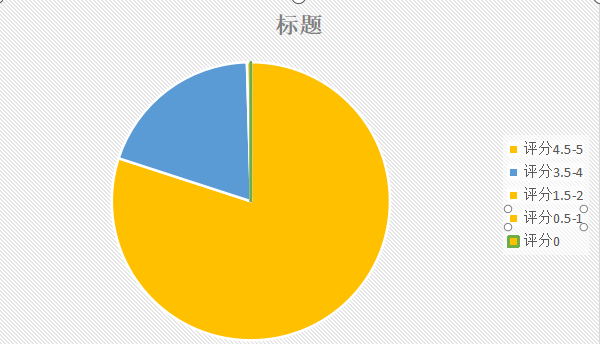

点评评分星级:

# coding=utf-8 # 获取评论中所有评分 rates = [] with open('comments.txt', mode='r', encoding='utf-8') as f: rows = f.readlines() for row in rows: rates.append(row.split(',')[4]) print(rates) # 定义星级,并统计各星级评分数量 value = [ rates.count('5') + rates.count('4.5'), rates.count('4') + rates.count('3.5'), rates.count('3') + rates.count('2.5'), rates.count('2') + rates.count('1.5'), rates.count('1') + rates.count('0.5') ] print(value)