I. DenseNet

Densely Connected Convolutional Networks

- Paper: https://arxiv.org/pdf/1608.06993.pdf

- Code:

1. (TensorFlow) https://github.com/taki0112/Densenet-Tensorflow

2. https://github.com/liuzhuang13/DenseNet

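II. DenseNet Architecture

1. Advantages of DenseNet:

- Alleviates the vanishing-gradient problem

- Strengthens feature propagation

- Reuses features more effectively

- Reduces the number of parameters to some extent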

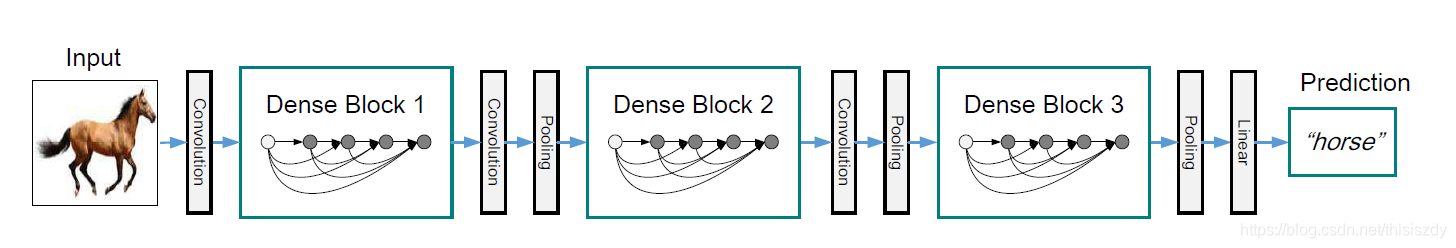

2. Overall architecture of DenseNet

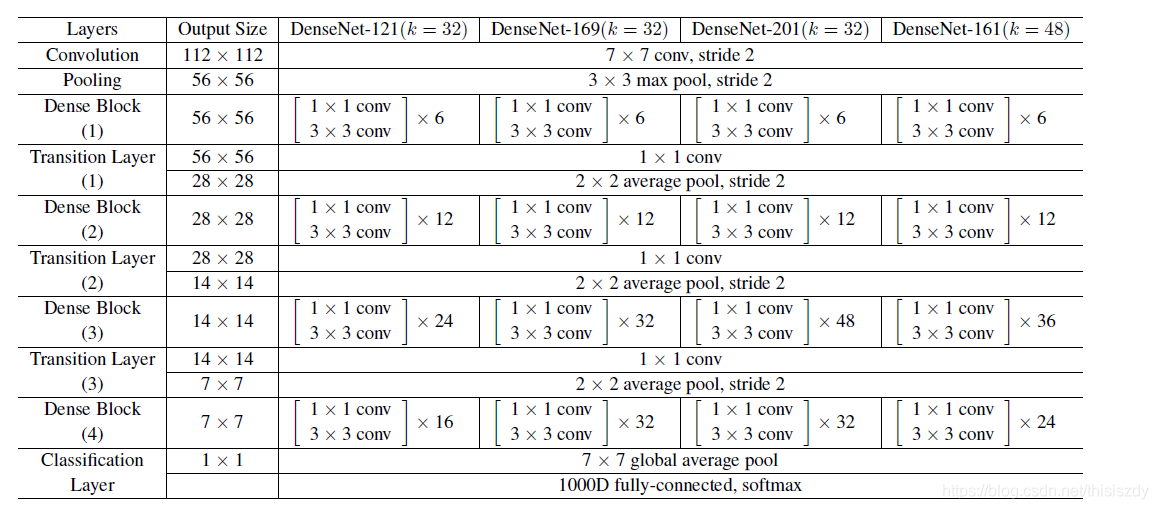

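DenseNet first applies a [7×7] convolution with stride 2 to the input tensor, followed by a [3×3] max pooling with stride 2. After that, dense blocks and transition layers alternate. The network ends with a classification layer made up of a [7×7] global average pooling, a 1000-way fully connected layer, and a softmax.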
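The [7×7] size of the final global average pooling follows from the strides listed above. Here is a minimal sketch of that arithmetic, assuming a 224×224 input (the ImageNet setting), 'same'-style padding, and three transition layers that each halve the resolution; the function names are hypothetical and not part of either repository.

```python
import math


def same_pad_out(size: int, stride: int) -> int:
    """Output length of a 'same'-padded convolution or pooling layer."""
    return math.ceil(size / stride)


def spatial_sizes(input_size: int = 224, num_transitions: int = 3) -> list:
    """Trace the feature-map side length through the network."""
    sizes = [("input", input_size)]
    size = same_pad_out(input_size, 2)      # 7x7 convolution, stride 2
    sizes.append(("conv 7x7 / 2", size))
    size = same_pad_out(size, 2)            # 3x3 max pooling, stride 2
    sizes.append(("max pool 3x3 / 2", size))
    for i in range(1, num_transitions + 1):
        size = same_pad_out(size, 2)        # 2x2 average pooling in each transition
        sizes.append((f"transition {i}", size))
    return sizes


for name, size in spatial_sizes():
    print(f"{name:>18}: {size} x {size}")
# Side lengths: 224, 112, 56, 28, 14, 7
```

Under these assumptions the last dense block sees 7×7 feature maps, so a [7×7] average pooling reduces each channel to a single value.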

TensorFlow code:

    def Dense_net(self, input_x):
        # Stem: 7x7 convolution with stride 2
        x = conv_layer(input_x, filter=2 * self.filters, kernel=[7,7], stride=2, layer_name='conv0')
        # The 3x3 max pooling described above is disabled in this version
        # x = Max_Pooling(x, pool_size=[3,3], stride=2)

        """
        for i in range(self.nb_blocks) :
            # 6 -> 12 -> 48
            x = self.dense_block(input_x=x, nb_layers=4, layer_name='dense_'+str(i))
            x = self.transition_layer(x, scope='trans_'+str(i))
        """

        # Dense blocks alternate with transition layers
        x = self.dense_block(input_x=x, nb_layers=6, layer_name='dense_1')
        x = self.transition_layer(x, scope='trans_1')

        x = self.dense_block(input_x=x, nb_layers=12, layer_name='dense_2')
        x = self.transition_layer(x, scope='trans_2')

        x = self.dense_block(input_x=x, nb_layers=48, layer_name='dense_3')
        x = self.transition_layer(x, scope='trans_3')

        # The last dense block is not followed by a transition layer
        x = self.dense_block(input_x=x, nb_layers=32, layer_name='dense_final')

        # 100 Layer
        # Classification head: BN -> ReLU -> global average pooling -> fully connected
        x = Batch_Normalization(x, training=self.training, scope='linear_batch')
        x = Relu(x)
        x = Global_Average_Pooling(x)
        x = flatten(x)
        x = Linear(x)

        # x = tf.reshape(x, [-1, 10])

        return x
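As a rough check on how deep this configuration is, the sketch below counts weighted layers the way the paper does: one stem convolution, two convolutions per bottleneck layer, one convolution per transition layer, and one fully connected layer. The helper `densenet_depth` is a hypothetical name introduced only for this illustration.

```python
def densenet_depth(block_layers):
    """Count weighted layers for a given list of dense-block sizes."""
    stem = 1                               # initial 7x7 convolution
    dense = 2 * sum(block_layers)          # each bottleneck layer = 1x1 conv + 3x3 conv
    transitions = len(block_layers) - 1    # one 1x1 conv between consecutive blocks
    classifier = 1                         # final fully connected layer
    return stem + dense + transitions + classifier


# Block sizes used in Dense_net above
print(densenet_depth([6, 12, 48, 32]))  # 201
```

With block sizes 6, 12, 48, and 32 the count comes to 201, which matches the DenseNet-201 configuration listed in the paper.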

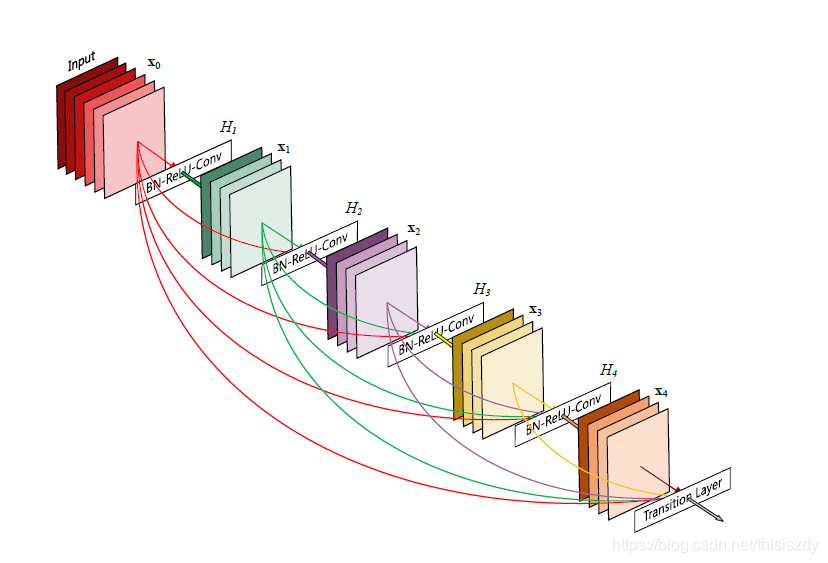

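3. Dense Block

A conventional convolutional network with L layers has L connections, whereas DenseNet has L(L+1)/2. Put simply, the input of each layer comes from the outputs of all preceding layers. In the figure above, x0 is the input; H1 takes x0 as its input, H2 takes x0 and x1 (x1 being the output of H1), and so on.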
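The L(L+1)/2 figure can be checked by listing which outputs feed each layer. This is a small illustrative sketch in plain Python; `layer_inputs` and `dense_connections` are hypothetical helper names.

```python
def layer_inputs(num_layers: int) -> dict:
    """Map each layer H_i to the list of outputs it receives (x0 is the block input)."""
    return {f"H{i}": [f"x{j}" for j in range(i)] for i in range(1, num_layers + 1)}


def dense_connections(num_layers: int) -> int:
    """Total number of connections: layer i receives i inputs."""
    return sum(len(inputs) for inputs in layer_inputs(num_layers).values())


for layer, inputs in layer_inputs(4).items():
    print(layer, "<-", inputs)

L = 5
print(dense_connections(L), L * (L + 1) // 2)  # both are 15
```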

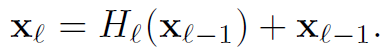

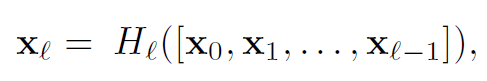

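ResNet and DenseNet formulas:

- ResNet: ℓ denotes the layer index, xℓ the output of layer ℓ, and Hℓ a nonlinear transformation. In ResNet, the output of layer ℓ is the output of layer ℓ-1 plus a nonlinear transformation of that output.

- DenseNet: [x0, x1, …, xℓ-1] denotes the concatenation of the output feature maps of layers 0 through ℓ-1. Concatenation merges along the channel dimension, as in Inception, whereas ResNet adds values element-wise and leaves the number of channels unchanged. Hℓ consists of BN, ReLU, and a 3×3 convolution.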
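For reference, the two update rules described in these bullets can be written out as follows. This is a transcription of the textual description using the same symbols, on the assumption that it matches the formulas shown in the images.

```latex
% ResNet: identity shortcut added to the transformed output of the previous layer
x_{\ell} = H_{\ell}(x_{\ell-1}) + x_{\ell-1}

% DenseNet: transformation applied to the channel-wise concatenation of all earlier outputs
x_{\ell} = H_{\ell}\left([x_{0}, x_{1}, \ldots, x_{\ell-1}]\right)
```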

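TensorFlow code for the Dense Block:

    def dense_block(self, input_x, nb_layers, layer_name):
        with tf.name_scope(layer_name):
            # Collect the block input and the output of every bottleneck layer
            layers_concat = list()
            layers_concat.append(input_x)

            # The first bottleneck layer sees only the block input
            x = self.bottleneck_layer(input_x, scope=layer_name + '_bottleN_' + str(0))

            layers_concat.append(x)

            for i in range(nb_layers - 1):
                # Each later layer takes the concatenation of all earlier outputs
                x = Concatenation(layers_concat)
                x = self.bottleneck_layer(x, scope=layer_name + '_bottleN_' + str(i + 1))
                layers_concat.append(x)

            # The block output is the concatenation of everything collected
            x = Concatenation(layers_concat)

            return x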
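Because every bottleneck layer contributes `self.filters` new channels (the growth rate), the input width of the layers inside a block grows linearly. The following sketch tracks only channel counts and does no tensor computation; the function name and the example values (64 input channels, growth rate 32) are assumptions chosen for illustration.

```python
def dense_block_channels(in_channels: int, nb_layers: int, growth_rate: int):
    """Return the input width seen by each bottleneck layer and the block's output width."""
    # Layer i sees the block input plus the outputs of the i layers before it
    per_layer_inputs = [in_channels + i * growth_rate for i in range(nb_layers)]
    # The block returns the concatenation of its input and all layer outputs
    out_channels = in_channels + nb_layers * growth_rate
    return per_layer_inputs, out_channels


inputs, out = dense_block_channels(in_channels=64, nb_layers=6, growth_rate=32)
print(inputs)  # [64, 96, 128, 160, 192, 224]
print(out)     # 256
```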

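4. Bottleneck_layer

A bottleneck consists of two parts: a [1×1] convolution group and a [3×3] convolution group. The [1×1] convolution reduces the number of input feature maps before the [3×3] convolution processes them.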

TensorFlow code:

    def bottleneck_layer(self, x, scope):
        # print(x)
        with tf.name_scope(scope):
            # BN -> ReLU -> 1x1 conv: reduce to 4 * filters feature maps
            x = Batch_Normalization(x, training=self.training, scope=scope+'_batch1')
            x = Relu(x)
            x = conv_layer(x, filter=4 * self.filters, kernel=[1,1], layer_name=scope+'_conv1')
            # dropout_rate is expected to be defined at module level
            x = Drop_out(x, rate=dropout_rate, training=self.training)

            # BN -> ReLU -> 3x3 conv: produce filters (growth rate) feature maps
            x = Batch_Normalization(x, training=self.training, scope=scope+'_batch2')
            x = Relu(x)
            x = conv_layer(x, filter=self.filters, kernel=[3,3], layer_name=scope+'_conv2')
            x = Drop_out(x, rate=dropout_rate, training=self.training)

            # print(x)

            return x
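To get a feel for what the [1×1] convolution saves, the sketch below compares weight counts for a plain 3×3 convolution against the 1×1 then 3×3 bottleneck, ignoring biases and batch normalization. The helper names and the example widths are assumptions made for this illustration.

```python
def conv_weights(c_in: int, c_out: int, kernel: int) -> int:
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def direct_3x3(c_in: int, growth_rate: int) -> int:
    """A single 3x3 convolution from c_in channels straight to growth_rate channels."""
    return conv_weights(c_in, growth_rate, 3)


def bottleneck(c_in: int, growth_rate: int) -> int:
    """1x1 convolution to 4 * growth_rate channels, then 3x3 down to growth_rate."""
    mid = 4 * growth_rate
    return conv_weights(c_in, mid, 1) + conv_weights(mid, growth_rate, 3)


for c_in in (64, 512):
    print(c_in, direct_3x3(c_in, 32), bottleneck(c_in, 32))
# 64  -> direct 18432,  bottleneck 45056
# 512 -> direct 147456, bottleneck 102400
```

In this simplified count the bottleneck only pays off once the concatenated input is wide enough (here, more than about 7 times the growth rate), which is the situation deep inside a dense block.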

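5. Transition_layer

The transition layer is the module that sits between two dense blocks. Each dense block outputs a large number of feature maps, and feeding all of them into the next block would greatly increase the network's parameter count, so the main job of the transition layer is dimensionality reduction.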


TensorFlow code:

    def transition_layer(self, x, scope):
        with tf.name_scope(scope):
            x = Batch_Normalization(x, training=self.training, scope=scope+'_batch1')
            x = Relu(x)
            # x = conv_layer(x, filter=self.filters, kernel=[1,1], layer_name=scope+'_conv1')

            # https://github.com/taki0112/Densenet-Tensorflow/issues/10

            # Halve the channel count; the filter count must be an integer
            in_channel = int(x.shape[-1])
            x = conv_layer(x, filter=in_channel // 2, kernel=[1,1], layer_name=scope+'_conv1')
            x = Drop_out(x, rate=dropout_rate, training=self.training)
            # 2x2 average pooling with stride 2 halves the spatial resolution
            x = Average_pooling(x, pool_size=[2,2], stride=2)

            return x
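The effect of the transition layers on the channel count can be traced with plain arithmetic. The sketch below assumes a growth rate of 32, a stem that outputs 2 × growth rate channels (as in `Dense_net`), and halving at every transition; `stage_channels` and its stage labels are hypothetical names used only here.

```python
def stage_channels(block_layers, growth_rate: int, compression: float = 0.5) -> list:
    """Trace channel counts after each dense block and each transition layer."""
    channels = 2 * growth_rate  # stem convolution outputs 2 * filters
    trace = [("conv0", channels)]
    for i, nb_layers in enumerate(block_layers, start=1):
        channels += nb_layers * growth_rate       # dense block adds growth_rate per layer
        trace.append((f"dense_{i}", channels))
        if i < len(block_layers):                 # no transition after the last block
            channels = int(channels * compression)
            trace.append((f"trans_{i}", channels))
    return trace


for name, channels in stage_channels([6, 12, 48, 32], growth_rate=32):
    print(f"{name:>8}: {channels}")
# conv0 64, dense_1 256, trans_1 128, dense_2 512, trans_2 256,
# dense_3 1792, trans_3 896, dense_4 1920
```

Without the halving step, the third block in this example would already start from 640 channels instead of 256, so each of its 48 layers would see a much wider input.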