Submitting Spark SQL jobs from Kettle to Spark2

- Kettle 7.1

- HDP 2.6.4

Kettle 7.1 ships with the HDP 2.5 plugin (hdp25). In testing, spark-submit against HDP 2.6.4 ran normally.

Reference: official documentation

Test package

Setup

Upload Kettle 7.1 to the Spark server, at the following path:

/home/hdfs/data-integration

vi /etc/profile

export SPARK_HOME=/usr/hdp/2.6.4.0-91/spark2

export HADOOP_CONF_DIR=/usr/hdp/2.6.4.0-91/hadoop/conf

source /etc/profile
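After sourcing the profile, it can help to confirm that both variables point to real directories before going further. The following is a minimal sketch; `check_env_dir` is a hypothetical helper name, not part of Kettle or HDP.

```shell
# Check that a named environment variable is set and points to an existing
# directory. Prints a one-line diagnostic and returns non-zero on failure.
check_env_dir() {
    local name=$1 value
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
        echo "$name is not set"
        return 1
    fi
    if [ ! -d "$value" ]; then
        echo "$name points to a missing directory: $value"
        return 1
    fi
    echo "$name=$value"
}

# Usage on the Spark server:
#   check_env_dir SPARK_HOME && check_env_dir HADOOP_CONF_DIR
```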

cd /usr/hdp/2.6.4.0-91/spark2/jars

zip -r jars.zip ./*.jar

mv jars.zip /home/hdfs/

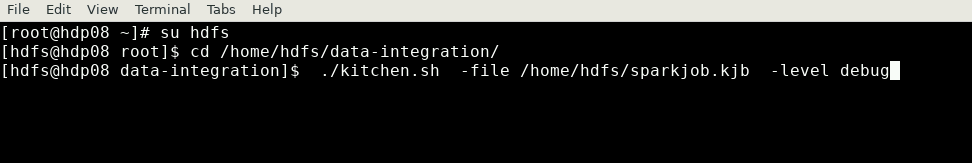
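Spark's documentation for `spark.yarn.archive` says the jars should sit in the root directory of the archive, which is what `zip -r jars.zip ./*.jar` produces when run inside the jars directory. A small sketch for checking a list of entry names follows; `check_flat_jar_list` is a hypothetical helper, and feeding it from `unzip -Z1` assumes Info-ZIP's `unzip` is installed.

```shell
# Read archive entry names on stdin (one per line) and report every entry that
# is not a *.jar file at the archive root. Returns non-zero if any are found.
check_flat_jar_list() {
    local bad=0 entry
    while IFS= read -r entry; do
        case $entry in
            */*)   echo "nested entry: $entry"; bad=1 ;;
            *.jar) ;;   # a jar at the archive root: nothing to report
            *)     echo "not a jar: $entry"; bad=1 ;;
        esac
    done
    return "$bad"
}

# Usage:
#   unzip -Z1 /home/hdfs/jars.zip | check_flat_jar_list && echo "archive layout OK"
```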

su hdfs

hdfs dfs -mkdir hdfs://hdp01.bigdata:8020/apps/spark/lib

hdfs dfs -put -f ./jars.zip hdfs://hdp01.bigdata:8020/apps/spark/lib

exit
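The upload can also be wrapped in a function that stops at the first failing step and confirms the file landed. This is a sketch rather than the original procedure: `upload_spark_archive` is a hypothetical name, and the `-p` flag on `-mkdir` is an addition so the command also works when parent directories are missing or the directory already exists.

```shell
# Upload a local archive to an HDFS directory, creating the directory first.
# Arguments: <local file> <HDFS directory URI>
upload_spark_archive() {
    local src=$1 dest_dir=$2
    [ -f "$src" ] || { echo "missing local file: $src" >&2; return 1; }
    # -p creates parent directories and does not fail if the directory exists.
    hdfs dfs -mkdir -p "$dest_dir" || return 1
    # -f overwrites an existing copy, as in the original command.
    hdfs dfs -put -f "$src" "$dest_dir" || return 1
    # -test -e returns 0 only if the uploaded path exists.
    hdfs dfs -test -e "$dest_dir/$(basename "$src")"
}

# Usage, as the hdfs user:
#   upload_spark_archive ./jars.zip hdfs://hdp01.bigdata:8020/apps/spark/lib
```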

Edit spark-defaults.conf

Append the following four lines to /usr/hdp/2.6.4.0-91/spark2/conf/spark-defaults.conf:

spark.hadoop.yarn.timeline-service.enabled false

spark.driver.extraJavaOptions -Dhdp.version=2.6.4.0-91

spark.yarn.am.extraJavaOptions -Dhdp.version=2.6.4.0-91

spark.yarn.archive hdfs://hdp01.bigdata:8020/apps/spark/lib/jars.zip

The final spark-defaults.conf:

spark.driver.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.eventLog.dir hdfs:///spark2-history/

spark.eventLog.enabled true

spark.executor.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.history.fs.logDirectory hdfs:///spark2-history/

spark.history.kerberos.keytab none

spark.history.kerberos.principal none

spark.history.provider org.apache.spark.deploy.history.FsHistoryProvider

spark.history.ui.port 18081

spark.yarn.historyServer.address hdp08.bigdata:18081

spark.yarn.queue default

spark.hadoop.yarn.timeline-service.enabled false

spark.driver.extraJavaOptions -Dhdp.version=2.6.4.0-91

spark.yarn.am.extraJavaOptions -Dhdp.version=2.6.4.0-91

spark.yarn.archive hdfs://hdp01.bigdata:8020/apps/spark/lib/jars.zip

Use

/usr/hdp/2.6.4.0-91/spark2/conf/spark-defaults.conf

to overwrite both of the following files:

/usr/hdp/2.6.4.0-91/etc/spark2/conf/spark-defaults.conf

/home/hdfs/data-integration/plugins/pentaho-big-data-plugin/hadoop-configurations/hdp25/spark-defaults.conf

Restart Spark2. After the restart, /usr/hdp/2.6.4.0-91/spark2/conf/spark-defaults.conf reverts to its original contents.
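Because the restart restores the file, the four properties may need to be applied more than once. The sketch below makes the edit repeatable and copies the result over other files, keeping a backup of each. Both function names are hypothetical, and the `.bak` suffix is an illustrative choice.

```shell
# Set "key value" in a Spark properties file. Any existing line for the key is
# dropped and the new line is appended, so running this twice leaves one entry.
set_spark_property() {
    local file=$1 key=$2 value=$3 tmp
    tmp=$(mktemp) || return 1
    # Keep every line whose first field is not the key.
    awk -v k="$key" '$1 != k' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    printf '%s %s\n' "$key" "$value" >> "$tmp"
    # Write back through the original path so permissions are preserved.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Copy the edited file over each target, saving the previous copy as <target>.bak.
push_conf() {
    local src=$1 target
    shift
    for target in "$@"; do
        if [ -f "$target" ]; then
            cp -p "$target" "$target.bak" || return 1
        fi
        cp "$src" "$target" || return 1
    done
}

# Usage:
#   conf=/usr/hdp/2.6.4.0-91/spark2/conf/spark-defaults.conf
#   set_spark_property "$conf" spark.hadoop.yarn.timeline-service.enabled false
#   push_conf "$conf" /usr/hdp/2.6.4.0-91/etc/spark2/conf/spark-defaults.conf
```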

Finally, run the job:

./kitchen.sh -file /home/hdfs/sparkjob.kjb -level debug
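With debug-level logging the output is long, so it can be convenient to save it to a file and rely on the exit status, which Kitchen sets to 0 when the job finishes without errors. A minimal sketch; `run_job` and the log path in the usage line are hypothetical.

```shell
# Run a command, save its combined stdout and stderr to a log file, and print
# a one-line summary. Returns the command's own exit status.
run_job() {
    local log=$1 status
    shift
    if "$@" > "$log" 2>&1; then
        status=0
    else
        status=$?
    fi
    if [ "$status" -eq 0 ]; then
        echo "job succeeded"
    else
        echo "job failed with exit code $status, see $log"
    fi
    return "$status"
}

# Usage, from /home/hdfs/data-integration:
#   run_job /tmp/sparkjob.log ./kitchen.sh -file /home/hdfs/sparkjob.kjb -level debug
```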

Success.

Scala

IDEAConfig

package com.xxx.bigdata.config

import org.apache.log4j.{Level, Logger}

// Shared settings for jobs in this project.
class IDEAConfig {
  // Reduce log noise from loggers under "org" (Spark, Hadoop).
  Logger.getLogger("org").setLevel(Level.WARN)

  // Comma-separated list of jars on HDFS, passed to spark.jars.
  val jars: String =
    "hdfs://hdp01.bigdata:8020/apps/spark/hdpbigdata-1.0-SNAPSHOT.jar," +
      "hdfs://hdp01.bigdata:8020/apps/scala/scala-library-2.11.8.jar," +
      "hdfs://hdp01.bigdata:8020/apps/scala/scala-reflect-2.11.8.jar," +
      "hdfs://hdp01.bigdata:8020/apps/scala/scala-compiler-2.11.8.jar"
}

xBigdata

package com.xxx.bigdata.service

import com.xxx.bigdata.config.IDEAConfig
import org.apache.spark.sql.SparkSession

object xBigdata extends IDEAConfig {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName(this.getClass.getSimpleName)
      .config("spark.jars", jars)
      .enableHiveSupport()
      .getOrCreate()

    spark.sql("use db1")
    rddTest(spark)
    spark.stop()
  }

  // Aggregates amt by top_n, region, and province.
  private def queryT(spark: SparkSession): Unit = {
    // Each fragment ends with a space so the concatenated SQL stays valid.
    spark.sql("select top_n, r.region_no, r.province_no, sum(d.amt) amt " +
      "from xxx " +
      "group by top_n, r.region_no, r.province_no").show()
  }

  // Placeholder for function tests.
  private def functionTest(spark: SparkSession): Unit = {
  }

  // Tests DataFrame caching and a typed map over rows.
  private def rddTest(spark: SparkSession): Unit = {
    // Supplies the implicit Encoder[String] required by map below.
    import spark.implicits._

    val regionDF = spark.sql("select * from t")
    regionDF.cache()
    regionDF.map(region => "region:" + region.getAs[String]("region_name")).show()
    spark.sql("select * from t_organ_province").show()
  }
}