1. Model workflow

2. Data processing

# Import the required libraries
from keras.datasets import imdb
from keras.preprocessing import sequence
from keras.preprocessing.text import Tokenizer
import numpy as np

np.random.seed(10)  # fix the NumPy seed so runs are repeatable

# Preprocess the data
import re

re_tag = re.compile(r'<[^>]+>')

def rm_tags(text):
    # Remove HTML tags such as <br /> from the review text
    return re_tag.sub('', text)

import os

def read_files(filetype):
    path = "data/aclImdb/"
    file_list = []

    positive_path = path + filetype + "/pos/"
    for f in os.listdir(positive_path):
        file_list += [positive_path + f]

    negative_path = path + filetype + "/neg/"
    for f in os.listdir(negative_path):
        file_list += [negative_path + f]

    print('read', filetype, 'files:', len(file_list))

    # Each split holds 12,500 positive reviews followed by 12,500 negative ones
    all_labels = ([1] * 12500 + [0] * 12500)

    all_texts = []
    for fi in file_list:
        with open(fi, encoding='utf8') as file_input:
            all_texts += [rm_tags(" ".join(file_input.readlines()))]

    return all_labels, all_texts

y_train, train_text = read_files("train")
y_test, test_text = read_files("test")
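
To see what the tag-stripping step does to a single review, here is a minimal standalone sketch of `rm_tags`. The sample string is hypothetical (written in the style of an IMDb review, not taken from the dataset).

```python
import re

# Same pattern as in the tutorial: anything between '<' and the next '>'
re_tag = re.compile(r'<[^>]+>')

def rm_tags(text):
    """Return text with every HTML-like tag removed."""
    return re_tag.sub('', text)

# Hypothetical sample, not taken from the dataset
sample = "A great film.<br /><br />I would <b>watch</b> it again."
cleaned = rm_tags(sample)
print(cleaned)  # A great film.I would watch it again.
```

Because each tag is replaced by an empty string, the text on either side of a `<br />` is joined directly ("film.I"). Substituting a space instead would be one way to keep neighbouring words apart.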

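# Build the token dictionary (num_words=2000 keeps only the most frequent words)
token = Tokenizer(num_words=2000)
token.fit_on_texts(train_text)

# Convert each review into a list of integers
x_train_seq = token.texts_to_sequences(train_text)
x_test_seq = token.texts_to_sequences(test_text)

# Truncate long sequences and pad short ones to a fixed length of 100
x_train = sequence.pad_sequences(x_train_seq, maxlen=100)
x_test = sequence.pad_sequences(x_test_seq, maxlen=100)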
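
The three steps above (build a dictionary, map words to integers, truncate or pad) can be sketched in plain Python. This is only an illustration of the idea, not the Keras implementation: the helper names and toy sentences are made up, and the real `Tokenizer` also strips punctuation. The sketch assumes the Keras defaults of padding and truncating at the front of the sequence.

```python
from collections import Counter

def build_word_index(texts):
    """Rank words by frequency; index 1 is the most frequent word (0 is kept for padding)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    ranked = [word for word, _ in counts.most_common()]
    return {word: rank for rank, word in enumerate(ranked, start=1)}

def texts_to_sequences(texts, word_index, num_words):
    """Map words to indices, dropping unknown words and indices >= num_words."""
    return [[word_index[w] for w in text.lower().split()
             if w in word_index and word_index[w] < num_words]
            for text in texts]

def pad_sequences(seqs, maxlen):
    """Left-pad with zeros and keep only the last maxlen items of long sequences."""
    return [[0] * (maxlen - len(s)) + s[-maxlen:] for s in seqs]

# Toy corpus (hypothetical): 'good' appears 4 times, 'film' twice, 'bad' once
texts = ["good good good film", "good film bad"]
word_index = build_word_index(texts)
seqs = texts_to_sequences(texts, word_index, num_words=3)
padded = pad_sequences(seqs, maxlen=3)

print(word_index)  # {'good': 1, 'film': 2, 'bad': 3}
print(seqs)        # [[1, 1, 1, 2], [1, 2]]
print(padded)      # [[1, 1, 2], [0, 1, 2]]
```

Note that with `num_words=3` only indices 1 and 2 survive, so the rarest word is silently dropped. The same happens to every word outside the dictionary in the tutorial's setup.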

3. Build the model

from keras.models import Sequential
from keras.layers.core import Dense, Dropout, Activation, Flatten
from keras.layers.embeddings import Embedding  # embedding layer: turns lists of integers into lists of vectors

model = Sequential()

model.add(Embedding(output_dim=32,
                    input_dim=2000,  # matches the 2,000 words in the token dictionary
                    input_length=100))
model.add(Dropout(0.2))  # randomly drop 20% of the units during training

model.add(Flatten())  # flatten layer, ready to connect to the fully connected layers

model.add(Dense(units=256,
                activation='relu'))
model.add(Dropout(0.2))

model.add(Dense(units=1,
                activation='sigmoid'))  # binary classification

model.summary()
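
The parameter counts that `model.summary()` reports can be checked by hand from the layer sizes above. The sketch below is plain arithmetic; the variable names are introduced here for illustration only.

```python
vocab_size, embed_dim, seq_len, hidden = 2000, 32, 100, 256

# Embedding: one 32-dimensional vector per dictionary entry
embedding_params = vocab_size * embed_dim

# Flatten has no weights; it turns the (100, 32) output into one vector
flatten_size = seq_len * embed_dim

# Dense layers: weights plus one bias per unit
dense1_params = flatten_size * hidden + hidden
dense2_params = hidden * 1 + 1

total_params = embedding_params + dense1_params + dense2_params

print(embedding_params)  # 64000
print(flatten_size)      # 3200
print(dense1_params)     # 819456
print(dense2_params)     # 257
print(total_params)      # 883713
```

Most of the weights sit in the first dense layer, because the flattened embedding output is 3,200 values wide.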

4. Train the model

# Configure the model for training
model.compile(loss='binary_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

# Train the model: 80% of the data is used for training and 20% for validation
train_history = model.fit(x_train, y_train, batch_size=100,
                          epochs=10, verbose=2,
                          validation_split=0.2)
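
It helps to work out what `validation_split=0.2` means for this particular dataset. Keras documents that the validation samples are taken from the end of the arrays, before any shuffling. The sketch below applies that rule to the label order produced by `read_files`; it is plain Python and does not call Keras.

```python
labels = [1] * 12500 + [0] * 12500   # label order produced by read_files
validation_split = 0.2
batch_size = 100

# The last 20% of the samples are held out for validation
n_val = round(len(labels) * validation_split)
split_at = len(labels) - n_val
train_labels, val_labels = labels[:split_at], labels[split_at:]

steps_per_epoch = len(train_labels) // batch_size

print(len(train_labels), sum(train_labels))  # 20000 12500
print(len(val_labels), sum(val_labels))      # 5000 0
print(steps_per_epoch)                       # 200
```

Under this assumption the validation set contains only negative reviews, so the validation accuracy and loss curves may not be representative. Shuffling the texts and labels together before calling `fit` would be one way to avoid that.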

# Plot the training history
%pylab inline
import matplotlib.pyplot as plt

def show_train_history(train_history, train, validation):
    plt.plot(train_history.history[train])
    plt.plot(train_history.history[validation])
    plt.title('Train History')
    plt.ylabel(train)
    plt.xlabel('Epoch')
    plt.legend(['train', 'validation'], loc='upper left')
    plt.show()

# Older Keras versions record accuracy under 'acc'/'val_acc'; newer ones use 'accuracy'/'val_accuracy'
show_train_history(train_history, 'acc', 'val_acc')
show_train_history(train_history, 'loss', 'val_loss')
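
The accuracy key in `train_history.history` depends on the Keras version, so a small lookup helper can make the plotting calls more portable. `pick_metric_keys` is a hypothetical helper, and the two history dicts hold made-up numbers that only mimic the shape of `train_history.history`.

```python
def pick_metric_keys(history,
                     candidates=(('acc', 'val_acc'), ('accuracy', 'val_accuracy'))):
    """Return the first (train, validation) key pair present in the history dict."""
    for train_key, val_key in candidates:
        if train_key in history and val_key in history:
            return train_key, val_key
    raise KeyError('no accuracy keys found in history: %s' % sorted(history))

# Made-up values, shaped like train_history.history
old_style = {'acc': [0.7, 0.8], 'val_acc': [0.6, 0.7],
             'loss': [0.6, 0.4], 'val_loss': [0.7, 0.5]}
new_style = {'accuracy': [0.7, 0.8], 'val_accuracy': [0.6, 0.7],
             'loss': [0.6, 0.4], 'val_loss': [0.7, 0.5]}

print(pick_metric_keys(old_style))  # ('acc', 'val_acc')
print(pick_metric_keys(new_style))  # ('accuracy', 'val_accuracy')
```

The returned pair could then be unpacked into the plotting call, for example `show_train_history(train_history, *pick_metric_keys(train_history.history))`.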

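5. Evaluate the model and make predictions

scores = model.evaluate(x_test, y_test, verbose=1)
scores[1]  # accuracy on the test set

- View the predicted probabilities

probability = model.predict(x_test)

- View the predicted classes

predict = model.predict_classes(x_test)

# Look at the first ten predictions
predict[:10]

# The predictions are two-dimensional, so flatten them to one dimension for display
predict_classes = predict.reshape(-1)
predict_classes[:10]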
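
The relationship between the predicted probabilities and the predicted classes can be sketched without Keras. The assumption here is that, for a single sigmoid output, the class is 1 when the probability is above 0.5 and 0 otherwise. The helper names and probability values are made up for illustration.

```python
def to_classes(probabilities, threshold=0.5):
    """Turn rows of sigmoid outputs into rows of 0/1 class labels."""
    return [[1 if p > threshold else 0 for p in row] for row in probabilities]

def flatten(rows):
    """Collapse a list of rows into one flat list, like reshape(-1) on a 2-D array."""
    return [value for row in rows for value in row]

# Made-up model outputs: one probability per review, shape (4, 1)
probabilities = [[0.91], [0.08], [0.50], [0.73]]

classes_2d = to_classes(probabilities)
classes_1d = flatten(classes_2d)

print(classes_2d)  # [[1], [0], [0], [1]]
print(classes_1d)  # [1, 0, 0, 1]
```

A probability of exactly 0.5 falls into class 0 under this strict comparison.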

- Show each review with its prediction

SentimentDict = {1: 'positive', 0: 'negative'}

def display_test_Sentiment(i):
    print(test_text[i])
    print('label:', SentimentDict[y_test[i]],
          'prediction:', SentimentDict[predict_classes[i]])

6. Sentiment analysis on a new review

- The review needs some preprocessing first

# Enter a new review

input_text='''

Oh dear, oh dear, oh dear: where should I start folks. I had low expectations already because I hated each and every single trailer so far, but boy did Disney make a blunder here. I'm sure the film will still make a billion dollars - hey: if Transformers 11 can do it, why not Belle? - but this film kills every subtle beautiful little thing that had made the original special, and it does so already in the very early stages. It's like the dinosaur stampede scene in Jackson's King Kong: only with even worse CGI (and, well, kitchen devices instead of dinos).

The worst sin, though, is that everything (and I mean really EVERYTHING) looks fake. What's the point of making a live-action version of a beloved cartoon if you make every prop look like a prop? I know it's a fairy tale for kids, but even Belle's village looks like it had only recently been put there by a subpar production designer trying to copy the images from the cartoon. There is not a hint of authenticity here. Unlike in Jungle Book, where we got great looking CGI, this really is the by-the-numbers version and corporate filmmaking at its worst. Of course it's not really a "bad" film; those 200 million blockbusters rarely are (this isn't 'The Room' after all), but it's so infuriatingly generic and dull - and it didn't have to be. In the hands of a great director the potential for this film would have been huge.

Oh and one more thing: bad CGI wolves (who actually look even worse than the ones in Twilight) is one thing, and the kids probably won't care. But making one of the two lead characters - Beast - look equally bad is simply unforgivably stupid. No wonder Emma Watson seems to phone it in: she apparently had to act against an guy with a green-screen in the place where his face should have been.

'''

# Convert the review into a list of integers
input_seq = token.texts_to_sequences([input_text])

# Truncate or pad the sequence to a length of 100
pad_input_seq = sequence.pad_sequences(input_seq, maxlen=100)

# Feed the sequence to the model for prediction

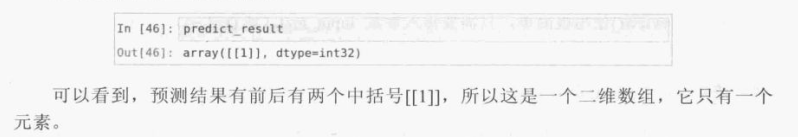

predict_result = model.predict_classes(pad_input_seq)

# The result is two-dimensional, so the predicted class is
predict_result[0][0]

# Reuse the dictionary defined earlier to map the class back to a sentiment label
SentimentDict[predict_result[0][0]]

# Combine the steps above into one function that preprocesses a review and predicts its sentiment
def predict_review(input_text):
    input_seq = token.texts_to_sequences([input_text])
    pad_input_seq = sequence.pad_sequences(input_seq, maxlen=100)
    predict_result = model.predict_classes(pad_input_seq)
    print(SentimentDict[predict_result[0][0]])

7. Save the whole prediction model

model_json = model.to_json()
with open("SaveModel/Imdb_RNN_model.json", "w") as json_file:
    json_file.write(model_json)

model.save_weights("SaveModel/Imdb_RNN_model.h5")
print("Saved model to disk")
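
Opening a file for writing fails with `FileNotFoundError` if the `SaveModel` directory does not exist yet. The sketch below shows one way to create it first. `save_text` is a hypothetical helper, the JSON string is a stand-in for `model.to_json()`, and a temporary directory is used so the example leaves no files behind.

```python
import json
import os
import tempfile

def save_text(path, text):
    """Write text to path, creating the parent directory if it is missing."""
    directory = os.path.dirname(path)
    if directory:
        os.makedirs(directory, exist_ok=True)
    with open(path, "w") as f:
        f.write(text)

# Stand-in for model.to_json(); the real string is produced by Keras
fake_model_json = json.dumps({"class_name": "Sequential"})

with tempfile.TemporaryDirectory() as root:
    target = os.path.join(root, "SaveModel", "Imdb_RNN_model.json")
    save_text(target, fake_model_json)
    saved_ok = os.path.exists(target)
    with open(target) as f:
        round_trip = json.load(f)

print(saved_ok)    # True
print(round_trip)  # {'class_name': 'Sequential'}
```

In the tutorial's own code, calling `os.makedirs("SaveModel", exist_ok=True)` before the `open` call would have the same effect.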