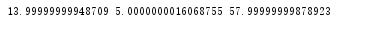

    o1 = 0
    o2 = 0
    o3 = 0
    a = 0.002  # learning rate
    # Generate the training data
    x = [1,3,5,3,5,7,2,6,7,3,6,9,4,8,9,6,5,3,3,2,7,1,1,1,2,2,2,3,3,3,4,4,4,5,5,5,6,6,6,7,7,7,8,8,8,9,9,9]
    x = x * 250  # Repeat the training data 250 times
    # Generate the theoretical results (targets) for the training data
    y = []
    for i in range(len(x) // 3):
        y.append(14*x[i] + 5*x[i+1] + 58*x[i+2])
        # print('y[{}]:{}'.format(i, y[i]))
    # Stochastic gradient descent: take one descent step per row of data.
    # This needs enough samples and a reasonable value of a.
    for i in range(len(y)):
        # Compute the error once so all three parameters are updated from the same values
        err = o1 * x[i] + o2 * x[i+1] + o3 * x[i+2] - y[i]
        o1 = o1 - a * err * x[i]
        o2 = o2 - a * err * x[i+1]
        o3 = o3 - a * err * x[i+2]
        print(o1 * x[i] + o2 * x[i+1] + o3 * x[i+2], y[i])
    print(o1, o2, o3)
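The per-row update above can also be packaged as a small reusable function. The following is only a minimal sketch, not the original code: the names `sgd_fit`, `rows`, `targets` and `lr` are made up for illustration. It assumes the error is computed once per sample so that all weights are updated from the same values. The data is built the same way as above, with each row being the window `x[i], x[i+1], x[i+2]`.

```python
def sgd_fit(rows, targets, lr=0.002):
    """Fit weights w so that dot(w, row) ~= target, taking one SGD step per row."""
    weights = [0.0] * len(rows[0])
    for row, target in zip(rows, targets):
        # Prediction error for this sample, computed once with the current weights.
        error = sum(w * v for w, v in zip(weights, row)) - target
        # Simultaneous update: every weight uses the same error value.
        weights = [w - lr * error * v for w, v in zip(weights, row)]
    return weights


base = [1,3,5,3,5,7,2,6,7,3,6,9,4,8,9,6,5,3,3,2,7,1,1,1,
        2,2,2,3,3,3,4,4,4,5,5,5,6,6,6,7,7,7,8,8,8,9,9,9]
x = base * 250  # repeat the data so there are enough samples

# Each row is the window (x[i], x[i+1], x[i+2]), matching the indexing used above.
rows = [x[i:i + 3] for i in range(len(x) // 3)]
targets = [14 * r[0] + 5 * r[1] + 58 * r[2] for r in rows]

weights = sgd_fit(rows, targets)
print(weights)
```

Because the targets are generated without noise from the coefficients 14, 5 and 58, the printed weights should end up close to those values, provided `lr` stays small enough for the updates to remain stable.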

Result: