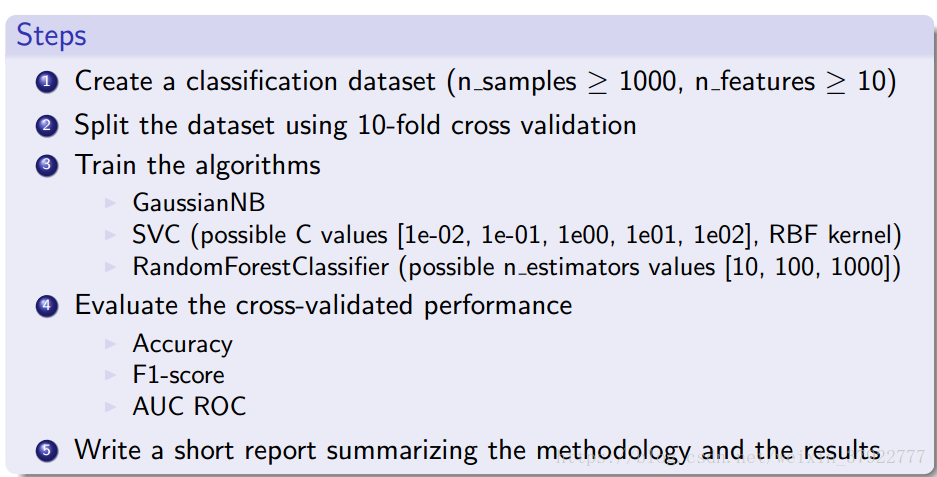

Steps

1. Create a classification dataset (n_samples=1000, n_features=10)
2. Split the dataset using 10-fold cross-validation
3. Train the algorithms:
   - GaussianNB
   - SVC (possible C values [1e-02, 1e-01, 1e00, 1e01, 1e02], RBF kernel)
   - RandomForestClassifier (possible n_estimators values [10, 100, 1000])
4. Evaluate the cross-validated performance:
   - Accuracy
   - F1-score
   - AUC ROC
5. Write a short report summarizing the methodology and the results
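A minimal sketch of what step 2 produces on 1000 samples, assuming scikit-learn 0.20 or later (where `KFold` lives in `sklearn.model_selection`). The all-zero feature matrix is a placeholder, since `KFold` only looks at the number of rows. Each of the 10 test folds holds 100 samples, the other 900 are used for training, and every sample is held out exactly once, which is what makes the averaged scores in step 4 "cross-validated".

```python
import numpy as np
from sklearn.model_selection import KFold

n_samples = 1000
X = np.zeros((n_samples, 10))  # placeholder features: only the row count matters here

kf = KFold(n_splits=10, shuffle=True, random_state=0)

test_counts = np.zeros(n_samples, dtype=int)  # how often each sample is held out
fold_sizes = []
for train_index, test_index in kf.split(X):
    # within a fold, training and test indices never overlap
    assert len(np.intersect1d(train_index, test_index)) == 0
    fold_sizes.append((len(train_index), len(test_index)))
    test_counts[test_index] += 1

print(fold_sizes[0])                        # (900, 100)
print(test_counts.min(), test_counts.max())  # 1 1: every sample is tested exactly once
```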

Implementation:

from sklearn import datasets

from sklearn import metrics

"""

1 Create a classification dataset (n samples 1000, n features 10)

2 Split the dataset using 10-fold cross validation

3 Train the algorithms

I GaussianNB

I SVC (possible C values [1e-02, 1e-01, 1e00, 1e01, 1e02], RBF kernel)

I RandomForestClassifier (possible n estimators values [10, 100, 1000])

4 Evaluate the cross-validated performance

I Accuracy

I F1-score

I AUC ROC

5 Write a short report summarizing the methodology and the results

"""

# 1 Create a classification dataset (n_samples=1000, n_features=10)
X, y = datasets.make_classification(n_samples=1000, n_features=10,
                                    n_informative=2, n_redundant=2,
                                    n_repeated=0, n_classes=2,
                                    random_state=0)

# 2 Split the dataset using 10-fold cross-validation
# sklearn.cross_validation was removed in scikit-learn 0.20; KFold now lives
# in sklearn.model_selection and yields index arrays through .split()
import numpy as np
from sklearn.base import clone
from sklearn.model_selection import KFold
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.ensemble import RandomForestClassifier

kf = KFold(n_splits=10, shuffle=True, random_state=0)

# 3 Train the algorithms: one model per candidate setting from the task
# (SVC gamma is left at scikit-learn's default)
models = {'GaussianNB': GaussianNB()}
for C in [1e-02, 1e-01, 1e00, 1e01, 1e02]:
    models['SVC (C=%g)' % C] = SVC(C=C, kernel='rbf')
for n in [10, 100, 1000]:
    models['RandomForest (n_estimators=%d)' % n] = \
        RandomForestClassifier(n_estimators=n, random_state=0)

# Per-fold scores, collected so they can be averaged over the 10 folds
scores = {name: {'Accuracy': [], 'F1-score': [], 'AUC ROC': []}
          for name in models}

for train_index, test_index in kf.split(X):
    X_train, y_train = X[train_index], y[train_index]
    X_test, y_test = X[test_index], y[test_index]

    for name, model in models.items():
        clf = clone(model)  # fresh, unfitted copy for every fold
        clf.fit(X_train, y_train)
        pred = clf.predict(X_test)

        # 4 Evaluate: accuracy and F1-score are computed from the hard labels...
        scores[name]['Accuracy'].append(metrics.accuracy_score(y_test, pred))
        scores[name]['F1-score'].append(metrics.f1_score(y_test, pred))

        # ...but AUC ROC needs a continuous score for the positive class,
        # not 0/1 predictions: SVC provides decision_function, while
        # GaussianNB and RandomForestClassifier provide predict_proba
        if hasattr(clf, 'decision_function'):
            y_score = clf.decision_function(X_test)
        else:
            y_score = clf.predict_proba(X_test)[:, 1]
        scores[name]['AUC ROC'].append(metrics.roc_auc_score(y_test, y_score))

# Cross-validated performance: mean and standard deviation over the 10 folds
for name, per_metric in scores.items():
    print(name)
    for metric, values in per_metric.items():
        print('  %-9s %.3f +/- %.3f' % (metric, np.mean(values), np.std(values)))
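The task lists several possible values for C and n_estimators. One common way to use such a list is nested cross-validation: `GridSearchCV` picks the setting on each outer training fold, and `cross_validate` scores the tuned model on the matching outer test fold. The sketch below follows that reading; the inner 3-fold split, selection by `GridSearchCV`'s default accuracy scoring, and `n_jobs=-1` (only to speed up the 1000-tree fits) are assumptions, not part of the task. The built-in `'roc_auc'` scorer uses `decision_function` or `predict_proba` rather than hard 0/1 predictions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, cross_validate
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Step 1: 1000 samples, 10 features (other arguments at their defaults)
X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Step 2: the outer 10-fold split that estimates performance
outer_cv = KFold(n_splits=10, shuffle=True, random_state=0)

# Step 3: GridSearchCV chooses C / n_estimators on each outer training fold
# using an inner 3-fold split (assumption: 3 folds, default accuracy scoring)
models = {
    'GaussianNB': GaussianNB(),
    'SVC': GridSearchCV(SVC(kernel='rbf'),
                        {'C': [1e-02, 1e-01, 1e00, 1e01, 1e02]}, cv=3),
    'RandomForest': GridSearchCV(RandomForestClassifier(random_state=0, n_jobs=-1),
                                 {'n_estimators': [10, 100, 1000]}, cv=3),
}

# Step 4: accuracy, F1 and ROC AUC on every outer test fold, then averaged
metric_names = ['accuracy', 'f1', 'roc_auc']
results = {}
for name, model in models.items():
    cv_res = cross_validate(model, X, y, cv=outer_cv, scoring=metric_names)
    results[name] = {m: cv_res['test_' + m].mean() for m in metric_names}
    print(name, ', '.join('%s %.3f' % (m, v) for m, v in results[name].items()))
```

Because the hyperparameter is chosen without looking at the outer test fold, the averaged scores are not biased upward by the selection itself, which is the main reason to prefer this over picking the best C after seeing all ten test folds.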