I. Assignment Requirements

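| Item | Details |
|---|---|
| Course this assignment belongs to | Class blog link |
| Where the assignment requirements are | Assignment requirements link |
| My goal in this course | Complete a full project and put what I learn into practice |
| Which aspect of that goal this assignment helps with | Understanding SGD |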

II. Solution

1) How the mini-batches are randomly sampled

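    import numpy as np

    def shuffle_batch(X, y, batch_size):
        # Shuffle the sample indices once per call (i.e. once per epoch)
        rnd_idx = np.random.permutation(len(X))
        # np.array_split spreads any remainder over the batches;
        # max(1, ...) avoids an error when len(X) < batch_size
        n_batches = max(1, len(X) // batch_size)
        for batch_idx in np.array_split(rnd_idx, n_batches):
            X_batch, y_batch = X[batch_idx], y[batch_idx]
            yield X_batch, y_batch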
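Here is a small check on made-up data that shows what `shuffle_batch` actually yields. The values (100 samples, `batch_size = 30`) are chosen only for illustration. `np.array_split` divides the shuffled indices into `len(X) // batch_size` nearly equal parts, so `batch_size` acts as a target. Leftover samples are spread over the batches instead of being dropped. The sketch also keeps a `max(1, ...)` guard, because `np.array_split` raises a `ValueError` when asked for 0 sections, which would happen if `len(X) < batch_size`.

```python
import numpy as np

def shuffle_batch(X, y, batch_size):
    # Shuffle the indices, then split them into len(X) // batch_size nearly equal parts
    rnd_idx = np.random.permutation(len(X))
    n_batches = max(1, len(X) // batch_size)
    for batch_idx in np.array_split(rnd_idx, n_batches):
        yield X[batch_idx], y[batch_idx]

# Made-up toy data, for illustration only
X = np.arange(100).reshape(-1, 1)
y = 2 * X + 3

sizes = [len(X_batch) for X_batch, _ in shuffle_batch(X, y, 30)]
print(sizes)  # [34, 33, 33]: 100 // 30 = 3 batches, the 10 leftover samples are spread over them
```

Every sample appears exactly once, so one pass over the generator is one full epoch.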

2) Core code

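The code implements a simple backward pass for a linear model. In practice, the choice of batch_size makes almost no difference to the final result, because this dataset is too simple.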
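Here is a minimal sketch for checking the batch-size observation. It does not use the real TemperatureControl files. The data below is a hypothetical stand-in of the same form: x uniform on [0, 1], and y = 2x + 3 plus Gaussian noise with standard deviation 0.1. The training loop is a condensed rewrite of the core code that starts from W = b = 0 instead of a random W. Each run's final loss is compared with the exact least-squares loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the temperature dataset (the real .dat files are not used)
N = 200
x = rng.uniform(0, 1, (N, 1))
y = 2 * x + 3 + rng.normal(0, 0.1, (N, 1))


def compute_loss(y_hat, y):
    # Half mean squared error, as in the core code
    return np.mean(np.square(y_hat - y)) / 2


def train(batch_size, learning_rate=0.1, n_epochs=50):
    # Mini-batch gradient descent with the same update rule as the core code
    W, b = np.zeros((1, 1)), np.zeros((1, 1))
    for _ in range(n_epochs):
        idx = rng.permutation(N)
        for batch in np.array_split(idx, max(1, N // batch_size)):
            xb, yb = x[batch], y[batch]
            dz = xb @ W + b - yb
            W -= learning_rate * xb.T @ dz / len(xb)
            b -= learning_rate * dz.mean(axis=0, keepdims=True)
    return compute_loss(x @ W + b, y)


# Reference point: the exact least-squares solution
A = np.hstack([x, np.ones_like(x)])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
best = compute_loss(A @ coef, y)

final = {bs: train(bs) for bs in (5, 10, 20, 50)}
for bs, loss in final.items():
    print(f"batch_size={bs:2d}  final loss={loss:.5f}  optimum={best:.5f}")
```

If every batch size ends within a small margin of the optimum, the batch size barely matters on a problem this easy. That matches what the text reports.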

import numpy as np

import pandas as pd

from pathlib import Path

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (8, 8)

x_data_name = "D:\datasets\Simple\TemperatureControlXData.dat"

y_data_name = "D:\datasets\Simple\TemperatureControlYData.dat"

def save_fig(name=None, tight_layout=True):

path = f"D:\datasets\Simple\{name}.png"

print("Saving figure")

if tight_layout:

plt.tight_layout()

plt.savefig(path, format='png', dpi=80)

def ReadData():

Xfile = Path(x_data_name)

Yfile = Path(y_data_name)

if Xfile.exists() & Yfile.exists():

X = np.load(Xfile)

Y = np.load(Yfile)

return X.reshape(-1, 1),Y.reshape(-1, 1)

else:

return None,None

def shuffle_batch(X, y, batch_size):

rnd_idx = np.random.permutation(len(X))

n_batches = len(X)//batch_size

for batch_idx in np.array_split(rnd_idx, n_batches):

X_batch, y_batch = X[batch_idx], y[batch_idx]

yield X_batch, y_batch

def forward_prop(X, W, b):

return np.dot(X, W) + b

def backward_prop(X, y, y_hat):

dZ = y_hat - y

dW = 1/len(X)*X.T.dot(dZ)

db = 1/len(X)*np.sum(dZ, axis=0, keepdims=True)

return dW, db

def compute_loss(y_hat, y):

return np.mean(np.square(y_hat - y))/2

X, y = ReadData()

N, D = X.shape

learning_rate = 0.1

n_epochs = 50

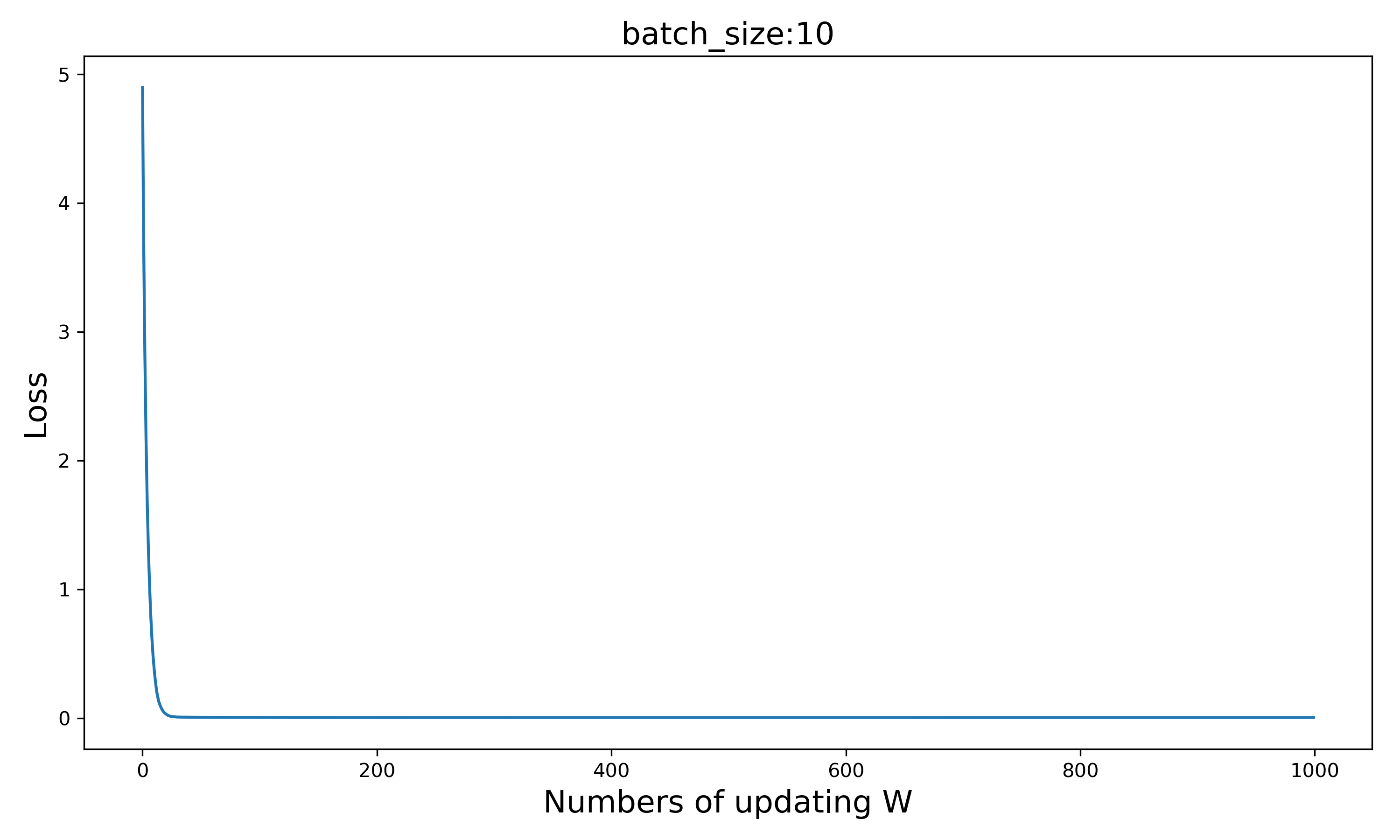

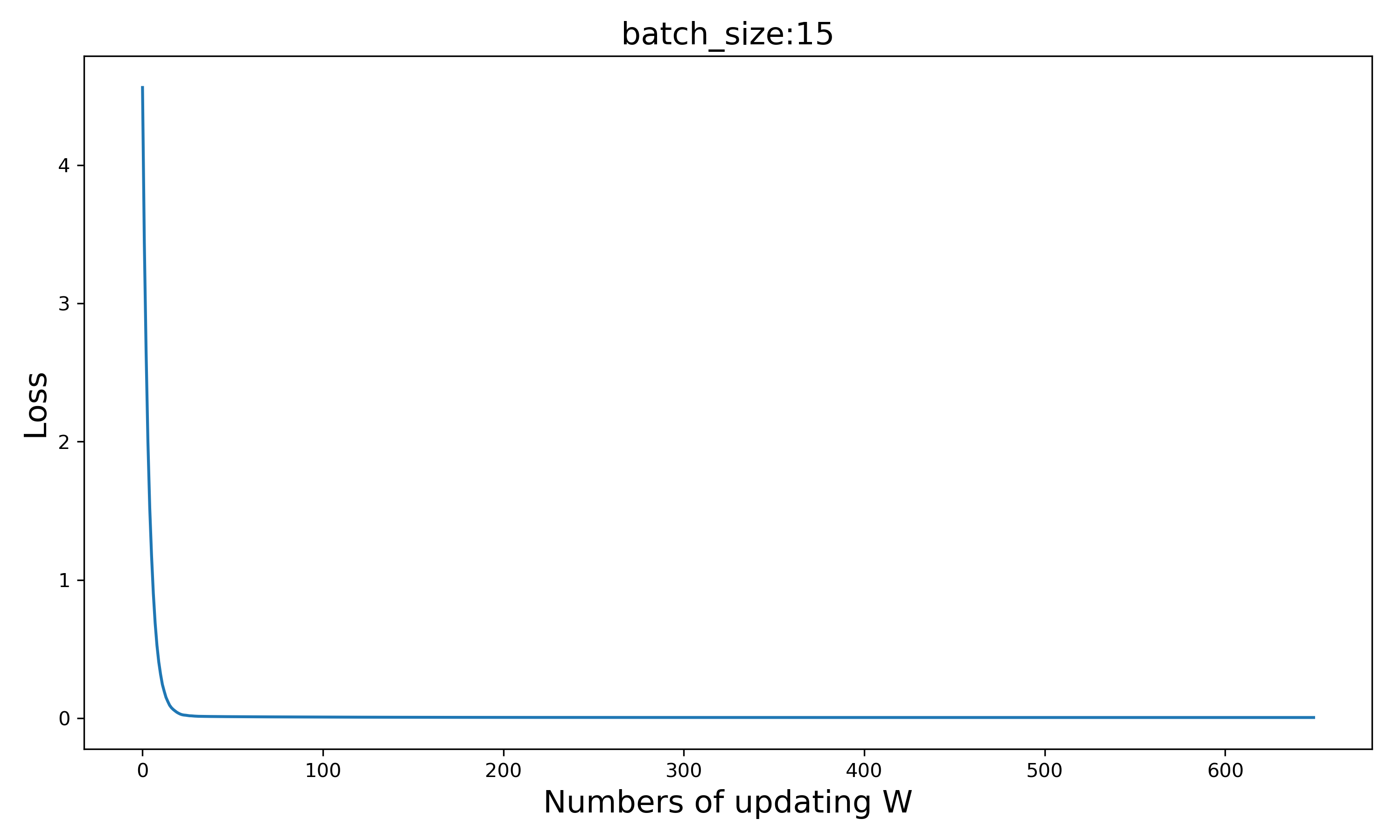

batch_size = 10

W = np.random.randn(D, 1)

b = np.zeros(1)

best_loss = np.infty

W_lis = []

b_lis = []

loss_lis = []

for epoch in range(n_epochs):

for X_batch, y_batch in shuffle_batch(X, y, batch_size):

y_hat = forward_prop(X_batch, W, b)

dW, db = backward_prop(X_batch, y_batch, y_hat)

W = W - learning_rate*dW

b = b - learning_rate*db

W_lis.append(W[0, 0])

b_lis.append(b[0, 0])

loss = compute_loss(forward_prop(X, W, b), y)

loss_lis.append(loss)

if loss < best_loss:

best_loss = loss

plt.figure(figsize=(10, 6))

plt.title(f"batch_size:{batch_size}", fontsize=16)

plt.plot(loss_lis)

plt.xlabel("Numbers of updating W", fontsize=16)

plt.ylabel("Loss", fontsize=16)

plt.show()
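A finite-difference gradient check is a standard way to confirm that `backward_prop` is right. The sketch below copies `forward_prop`, `backward_prop` and `compute_loss` from the core code. It assumes D = 1 (a single feature, as in this dataset) and uses made-up data. `numeric_grad` is a hypothetical helper introduced here. Because the loss is quadratic in W and b, central differences should match the analytic gradients up to floating-point error.

```python
import numpy as np

def forward_prop(X, W, b):
    return np.dot(X, W) + b

def backward_prop(X, y, y_hat):
    dZ = y_hat - y
    dW = 1/len(X) * X.T.dot(dZ)
    db = 1/len(X) * np.sum(dZ, axis=0, keepdims=True)
    return dW, db

def compute_loss(y_hat, y):
    return np.mean(np.square(y_hat - y)) / 2

def numeric_grad(X, y, W, b, eps=1e-6):
    # Hypothetical helper: central differences of compute_loss w.r.t. the scalar W and b
    def f(w, bb):
        return compute_loss(forward_prop(X, w, bb), y)
    dW = (f(W + eps, b) - f(W - eps, b)) / (2 * eps)
    db = (f(W, b + eps) - f(W, b - eps)) / (2 * eps)
    return dW, db

# Made-up data with a single feature (D = 1)
rng = np.random.default_rng(1)
X = rng.uniform(0, 1, (50, 1))
y = 2 * X + 3 + rng.normal(0, 0.1, (50, 1))
W, b = rng.normal(size=(1, 1)), np.zeros((1, 1))

dW, db = backward_prop(X, y, forward_prop(X, W, b))
ndW, ndb = numeric_grad(X, y, W, b)
print("analytic:", dW[0, 0], db[0, 0], " numeric:", ndW, ndb)
```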

3) Answers to the questions

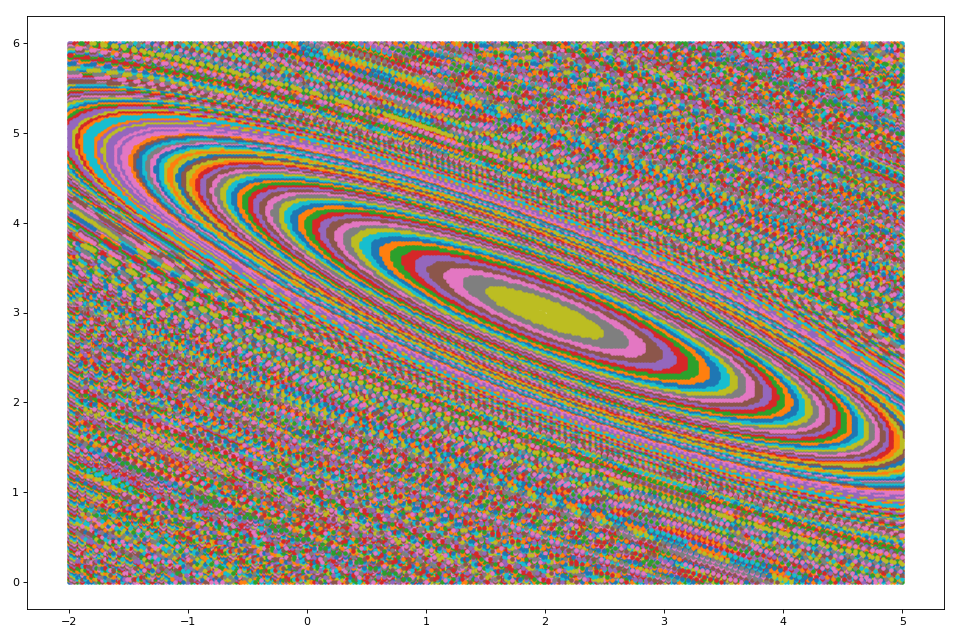

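First, note the details of how the figure is drawn:

1) Why are the contours ellipses rather than circles? How can the figure be turned into circles?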

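    def loss_2d(x, y):
        # Evaluate the loss on an s x s grid of (w, b) values
        s = 300
        W = np.linspace(-2, 5, s)
        B = np.linspace(0, 6, s)
        LOSS = np.zeros((s, s))
        for i in range(len(W)):
            for j in range(len(B)):
                w = W[i]
                b = B[j]
                a = w*x + b
                loss = compute_loss(a, y)
                LOSS[i, j] = np.round(loss, 2)   # round to 2 decimals so nearby losses coincide

        # Repeatedly take the first unvisited grid point, collect every point whose
        # loss is equal to it or within 2% of it, and plot that group as one contour
        while True:
            X = []
            Y = []
            is_first = True
            loss = 0
            for i in range(len(W)):
                for j in range(len(B)):
                    if LOSS[i, j] != 0:   # 0 marks a plotted point (a loss that rounds to 0 is skipped too)
                        if is_first:
                            loss = LOSS[i, j]
                            X.append(W[i])
                            Y.append(B[j])
                            LOSS[i, j] = 0
                            is_first = False
                        elif LOSS[i, j] == loss or (abs(loss / LOSS[i, j] - 1) < 0.02):
                            X.append(W[i])
                            Y.append(B[j])
                            LOSS[i, j] = 0
            if is_first:   # no unvisited points left
                break
            plt.plot(X, Y, '.')   # each call takes the next color in matplotlib's cycle

Quoted from: Microsoft/ai-edu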
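The double loop in `loss_2d` calls `compute_loss` once per grid point. As an optional alternative (not part of the ai-edu code), NumPy broadcasting can compute the same grid. The sketch below compares both approaches on a small made-up dataset and a 30 × 30 grid. `loss_grid_loop` and `loss_grid_vectorized` are hypothetical names. Rounding is left out so the two results can be compared directly.

```python
import numpy as np

def compute_loss(y_hat, y):
    return np.mean(np.square(y_hat - y)) / 2

def loss_grid_loop(x, y, W, B):
    # Reference: the double loop used in loss_2d (without rounding)
    LOSS = np.zeros((len(W), len(B)))
    for i, w in enumerate(W):
        for j, b in enumerate(B):
            LOSS[i, j] = compute_loss(w * x + b, y)
    return LOSS

def loss_grid_vectorized(x, y, W, B):
    # Broadcast to shape (len(W), len(B), m): one residual per grid point and sample
    x, y = x.ravel(), y.ravel()
    pred = W[:, None, None] * x[None, None, :] + B[None, :, None]
    return np.mean(np.square(pred - y[None, None, :]), axis=2) / 2

# Made-up data and a small grid, for illustration only
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, (100, 1))
y = 2 * x + 3 + rng.normal(0, 0.1, (100, 1))
W = np.linspace(-2, 5, 30)
B = np.linspace(0, 6, 30)

diff = np.abs(loss_grid_loop(x, y, W, B) - loss_grid_vectorized(x, y, W, B)).max()
print("max difference:", diff)
```

On the 300 × 300 grid in `loss_2d`, the broadcast version holds 300 · 300 · m residuals at once, so it trades memory for speed. `np.round(..., 2)` can still be applied to the result afterwards.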

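1) Note that the code rounds each loss to two decimals. It then plots together the (w, b) pairs whose losses are equal or within 2% of each other.

2) Note that each call to plt.plot(X, Y, '.') draws its group of discrete points in a different color. Also, only a finite grid of (w, b) combinations is evaluated (300 × 300 = 90,000 pairs in the code above).

Put simply, the contours are ellipses because w and b influence the loss to different degrees. The region in the middle is actually a set of discrete points. It only looks like a solid region because the figure is scaled down.

More fundamentally, the ellipses follow from the loss formula: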

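\[
\begin{aligned}
Loss &= \frac{1}{m}\sum_{i=1}^{m}(wx_i+b-y_i)^2 \\
&= \frac{1}{m}\sum_{i=1}^{m}\left(x_i^2w^2+b^2+2x_iwb-2y_ib-2x_iy_iw\right)+\text{const} \\
&= \Big(\frac{1}{m}\sum_{i=1}^{m}x_i^2\Big)w^2+b^2+\Big(\frac{2}{m}\sum_{i=1}^{m}x_i\Big)wb-\Big(\frac{2}{m}\sum_{i=1}^{m}y_i\Big)b-\Big(\frac{2}{m}\sum_{i=1}^{m}x_iy_i\Big)w+\text{const}
\end{aligned}
\]

Here \(\text{const}=\frac{1}{m}\sum_{i=1}^{m}y_i^2\). The extra factor \(\frac{1}{2}\) in compute_loss only rescales the loss and does not change the shape of the contours.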

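The contours are circles exactly when the coefficient of the cross term \(wb\) is zero and the quadratic terms \(w^2\) and \(b^2\) have equal coefficients. In terms of the data, that means \(\frac{1}{m}\sum_{i=1}^{m}x_i=0\) and \(\frac{1}{m}\sum_{i=1}^{m}x_i^2=1\).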
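Completing the square makes this condition concrete; this worked step is an addition to the source. Assume the inputs satisfy \(\frac{1}{m}\sum_{i=1}^{m}x_i=0\) and \(\frac{1}{m}\sum_{i=1}^{m}x_i^2=1\). Write \(\overline{xy}=\frac{1}{m}\sum_{i=1}^{m}x_iy_i\) and \(\bar{y}=\frac{1}{m}\sum_{i=1}^{m}y_i\). The last line of the expansion then becomes:

\[
\begin{aligned}
Loss &= w^2+b^2-\Big(\frac{2}{m}\sum_{i=1}^{m}x_iy_i\Big)w-\Big(\frac{2}{m}\sum_{i=1}^{m}y_i\Big)b+\text{const} \\
&= \big(w-\overline{xy}\big)^2+\big(b-\bar{y}\big)^2+\text{const}-\overline{xy}^{\,2}-\bar{y}^{\,2}
\end{aligned}
\]

Every contour is therefore a circle centered at \((\overline{xy},\ \bar{y})\), and that center is also the least-squares minimizer.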

In code, two lines that transform the dataset are enough to turn the ellipses into circles:

    X_2 = X - X.mean(axis=0)                                  # center: mean(x) = 0 removes the wb term
    X_2 = X_2*np.sqrt((len(X_2)/np.sum(np.square(X_2))))     # rescale: mean(x^2) = 1, matching the b^2 coefficient
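Here is a quick numeric check of the circle condition. It uses made-up inputs drawn uniformly from [0, 1], an assumption chosen to resemble a single feature in a fixed range. `quadratic_coefficients` is a hypothetical helper that returns the coefficients of \(w^2\), \(b^2\) and \(wb\) from the expansion of the loss.

```python
import numpy as np

def quadratic_coefficients(x):
    # Coefficients of w^2, b^2 and wb in Loss = (1/m) * sum (w*x_i + b - y_i)^2
    x = x.ravel()
    return np.mean(x**2), 1.0, 2 * np.mean(x)

rng = np.random.default_rng(0)
X = rng.uniform(0, 1, (200, 1))   # made-up inputs in [0, 1]

# The two transformation lines from the text
X_2 = X - X.mean(axis=0)
X_2 = X_2 * np.sqrt(len(X_2) / np.sum(np.square(X_2)))

print(quadratic_coefficients(X))    # w^2 coefficient below 1 and a nonzero wb term: tilted ellipse
print(quadratic_coefficients(X_2))  # w^2 coefficient 1 and wb term ~0: circle
```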

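2) Why is the center an elliptical region rather than a single point?

The elliptical region of the loss function is actually a set of discrete points. It only looks like a region because the figure is scaled down.

Moreover, only a finite set of (w, b) pairs is evaluated, so the exact global minimum almost certainly does not lie on the grid. The losses are also rounded to two decimals. As a result, all grid points close to the minimum get the same (or nearly the same) value and are plotted together, which shows up as a region rather than a point.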
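The sketch below illustrates both points using the same 300 × 300 grid as `loss_2d`. It assumes made-up data, not the original dataset: y = 2x + 3 plus noise with standard deviation 0.3. It compares the best grid point with the exact least-squares minimizer. It also counts how many grid points have a loss within 0.01 of the grid minimum; 0.01 is an arbitrary threshold chosen for illustration.

```python
import numpy as np

def compute_loss(y_hat, y):
    return np.mean(np.square(y_hat - y)) / 2

# Made-up data, for illustration only
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, (200, 1))
y = 2 * x + 3 + rng.normal(0, 0.3, (200, 1))

# Same grid as loss_2d (without rounding)
s = 300
W = np.linspace(-2, 5, s)
B = np.linspace(0, 6, s)
LOSS = np.array([[compute_loss(w * x + b, y) for b in B] for w in W])

# Exact minimizer from least squares
A = np.hstack([x, np.ones_like(x)])
(w_opt, b_opt), *_ = np.linalg.lstsq(A, y.ravel(), rcond=None)

i, j = np.unravel_index(np.argmin(LOSS), LOSS.shape)
print("grid minimum:", W[i], B[j], " exact minimum:", w_opt, b_opt)

# Grid points whose loss is within 0.01 of the grid minimum: the flat bottom of the bowl
near_min = np.sum(LOSS - LOSS.min() < 0.01)
print("grid points within 0.01 of the minimum:", near_min)
```

The best grid point lands near the exact minimizer, though in general not exactly on it. Many grid points share nearly the same loss, and this flat bottom is what appears as a region in the plot.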

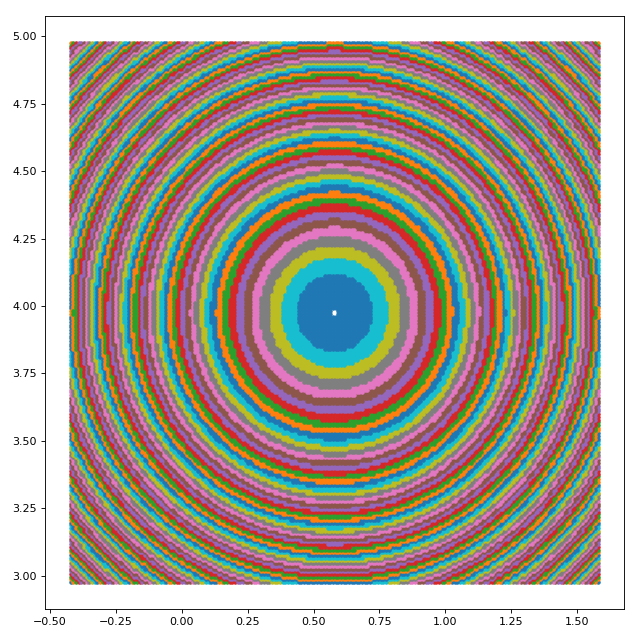

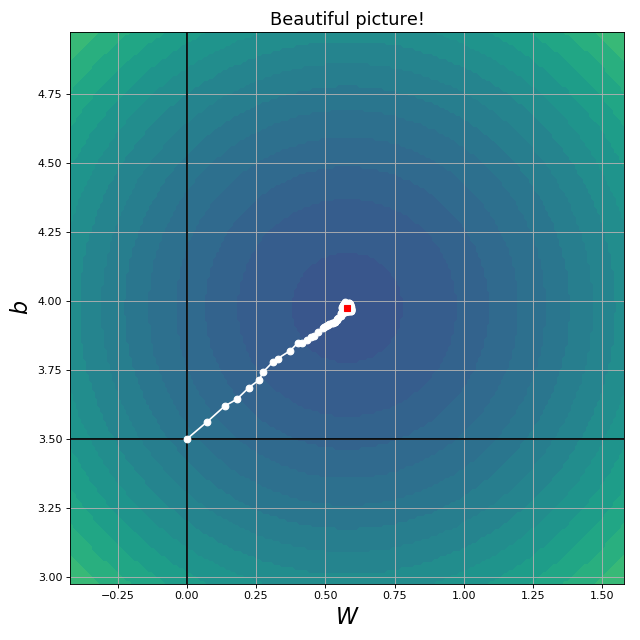

III. Drawing a Nicer Plot

Core code:

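    def loss_2d_try(x, y):
        # Same grid evaluation as loss_2d, on a 200 x 200 grid around the optimum
        s = 200
        W = np.linspace(-0.4214, 1.5786, s)
        B = np.linspace(2.97598, 4.97598, s)
        LOSS = np.zeros((s, s))
        for i in range(len(W)):
            for j in range(len(B)):
                w = W[i]
                b = B[j]
                a = w*x + b
                loss = compute_loss(a, y)
                LOSS[i, j] = np.round(loss, 2)
        return LOSS

    JR = loss_2d_try(X_2, y)    # loss surface on the standardized data, indexed [w, b]

    t1a, t1b, t2a, t2b = -0.4214, 1.5786, 2.97598, 4.97598
    # t1 and t2 were undefined in the original: rebuild the W and b axes used by loss_2d_try
    t1 = np.linspace(t1a, t1b, 200)
    t2 = np.linspace(t2a, t2b, 200)
    # Exponentially spaced levels: contours are denser near the minimum
    levelsJR = (np.exp(np.linspace(-1, 1, 40)) - 1) * (np.max(JR) - np.min(JR)) + np.min(JR)

    # Assumption: w_real and b_real were not defined in the original. Here they are the exact
    # minimizer for standardized data (mean(X_2) = 0, mean(X_2**2) = 1).
    w_real, b_real = np.mean(X_2 * y), np.mean(y)
    # W_lis and b_lis should come from rerunning the training loop on X_2 instead of X,
    # so the path lies on the same loss surface as JR.

    plt.grid(True)
    plt.axhline(y=3.5, color='k')
    plt.axvline(x=0, color='k')
    plt.contourf(t1, t2, JR.T, levels=levelsJR, alpha=1)   # contourf expects Z indexed [b, w]
    plt.plot(W_lis, b_lis, "w-o")
    plt.plot(w_real, b_real, "rs")
    plt.xlabel("$W$", fontsize=20)
    plt.ylabel("$b$", fontsize=20)
    plt.title("Beautiful picture!", fontsize=16)
    plt.axis([t1a, t1b, t2a, t2b])
    plt.show()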
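One reason the standardized picture is easier to read: with \(\frac{1}{m}\sum x_i = 0\) and \(\frac{1}{m}\sum x_i^2 = 1\), the gradient of compute_loss with respect to (w, b) is exactly (w, b) minus the center of the circles. Full-batch gradient descent therefore moves in a straight line toward the minimum. The sketch below checks this on made-up data. The starting point (-0.3, 3.2), the learning rate 0.1 and the use of full-batch updates are all assumptions for illustration. Mini-batch updates, like the ones recorded in W_lis and b_lis, follow this line only approximately, because each batch has its own mean and mean square of x.

```python
import numpy as np

# Made-up data, for illustration only
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, (200, 1))
y = 2 * x + 3 + rng.normal(0, 0.1, (200, 1))

# Standardize as in the text: mean(x) = 0, mean(x^2) = 1
x2 = x - x.mean(axis=0)
x2 = x2 * np.sqrt(len(x2) / np.sum(np.square(x2)))

center = np.array([np.mean(x2 * y), np.mean(y)])   # (w*, b*) = center of the circles

w, b, lr = -0.3, 3.2, 0.1
path = [(w, b)]
for _ in range(50):
    r = w * x2 + b - y                              # residuals, shape (200, 1)
    w, b = w - lr * np.mean(x2 * r), b - lr * np.mean(r)
    path.append((w, b))
path = np.array(path)

# With circular contours, -grad points straight at the center, so every point of the
# path lies on the segment from the start to the center (2D cross product ~ 0)
d0 = path[0] - center
cross = d0[0] * (path[:, 1] - center[1]) - d0[1] * (path[:, 0] - center[0])
print("max |cross|:", np.abs(cross).max())
print("distance to center after 50 steps:", np.linalg.norm(path[-1] - center))
```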