I. Assignment Requirements

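| Item | Content |
|---|---|
| Course this assignment belongs to | Link to the class blog |
| Where the assignment requirements are | Link to the assignment requirements |
| My goal in this course | Complete a full project and put what I learn into practice |
| How this assignment helps me reach that goal | It helps me understand backpropagation |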

II. Solutions

1) Training a logical AND gate and a logical OR gate

Sometimes there is no need to write many functions; a few lines are enough.

The following uses a sigmoid activation with learning_rate = 1.

```python
import numpy as np

np.random.seed(42)

# Truth table for AND: four input pairs and their labels
X_and = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_and = np.array([0, 0, 0, 1])

# One-hot labels: column 0 = "false", column 1 = "true"
y_and_one_hot = np.zeros((4, 2))
y_and_one_hot[np.arange(4), y_and] = 1

weights = np.sqrt(2/3) * np.random.randn(2, 2)
bias = np.ones((1, 2))
loss = np.inf

while loss > 1e-2:
    # Forward pass: sigmoid of the affine scores
    output = 1/(1 + np.exp(-np.dot(X_and, weights) - bias))
    # Backward pass: for sigmoid + cross-entropy, dL/dz = output - target
    weights -= np.dot(X_and.T, (output - y_and_one_hot))
    bias -= np.mean(output - y_and_one_hot, axis=0, keepdims=True)
    loss = -np.mean(np.log(output) * y_and_one_hot)

# output:
# array([[9.99980695e-01, 1.99816334e-05],
#        [9.75014897e-01, 2.52661750e-02],
#        [9.75014897e-01, 2.52661750e-02],
#        [2.85591765e-02, 9.71119454e-01]])
# loss: 0.009991269981324791
```
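The update `output - y_and_one_hot` is the error backpropagated to the pre-activation scores: for a sigmoid unit a = σ(z) with binary cross-entropy L = -[y log a + (1 - y) log(1 - a)], the chain rule gives ∂L/∂z = a - y. A finite-difference gradient check is a quick way to confirm this. The sketch below assumes the loss is binary cross-entropy summed over all samples and both output columns (the loop above monitors a different quantity as its stopping criterion), and the helper names `bce_loss`, `analytic_grads` and `numeric_grad` are hypothetical.

```python
import numpy as np

def bce_loss(weights, bias, X, Y):
    # Hypothetical loss: binary cross-entropy summed over samples and columns
    output = 1 / (1 + np.exp(-(X @ weights + bias)))
    return -np.sum(Y * np.log(output) + (1 - Y) * np.log(1 - output))

def analytic_grads(weights, bias, X, Y):
    output = 1 / (1 + np.exp(-(X @ weights + bias)))
    delta = output - Y                     # dL/dz for sigmoid + BCE
    return X.T @ delta, delta.sum(axis=0, keepdims=True)

def numeric_grad(f, param, eps=1e-6):
    # Centered differences, perturbing one entry of param in place at a time
    grad = np.zeros_like(param)
    for idx in np.ndindex(param.shape):
        old = param[idx]
        param[idx] = old + eps
        f_plus = f()
        param[idx] = old - eps
        f_minus = f()
        param[idx] = old                   # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.eye(2)[[0, 0, 0, 1]]                # one-hot AND labels
W = rng.normal(size=(2, 2))
b = rng.normal(size=(1, 2))

dW, db = analytic_grads(W, b, X, Y)
dW_num = numeric_grad(lambda: bce_loss(W, b, X, Y), W)
db_num = numeric_grad(lambda: bce_loss(W, b, X, Y), b)
print(np.max(np.abs(dW - dW_num)), np.max(np.abs(db - db_num)))
```

Both printed differences should be close to zero. The loop above updates the bias with `np.mean`, which is this summed gradient divided by the batch size 4, so the bias effectively moves with a smaller step than the weights.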

```python
# Same procedure for OR; the random state continues from the AND run
X_or = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_or = np.array([0, 1, 1, 1])

y_or_one_hot = np.zeros((4, 2))
y_or_one_hot[np.arange(4), y_or] = 1

weights = np.sqrt(2/3) * np.random.randn(2, 2)
bias = np.ones((1, 2))
loss = np.inf

while loss > 1e-2:
    output = 1/(1 + np.exp(-np.dot(X_or, weights) - bias))
    weights -= np.dot(X_or.T, (output - y_or_one_hot))
    bias -= np.mean(output - y_or_one_hot, axis=0, keepdims=True)
    # Mean negative log-probability of the true class
    loss = -np.mean(np.log(output[np.arange(4), y_or]))

# output:
# array([[9.73856986e-01, 2.69389503e-02],
#        [6.49497719e-03, 9.93314951e-01],
#        [6.48613192e-03, 9.93311743e-01],
#        [1.14572236e-06, 9.99998745e-01]])
# loss: 0.009977572598324626
```

2) Binary classification visualization

Core code:

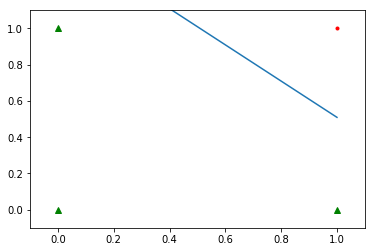

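```python
import numpy as np
import matplotlib.pyplot as plt

def ShowData(X, Y, W, B):
    # Plot samples: red dots for label 0, green triangles for label 1
    for i in range(X.shape[0]):
        if Y[i, 0] == 0:
            plt.plot(X[i, 0], X[i, 1], '.', c='r')
        elif Y[i, 0] == 1:
            plt.plot(X[i, 0], X[i, 1], '^', c='g')
    plt.axis([-0.1, 1.1, -0.1, 1.1])
    # Decision boundary w1*x1 + w2*x2 + b = 0, rewritten as x2 = w12*x1 + b12
    w12 = -W[0, 0] / W[1, 0]
    b12 = -B[0, 0] / W[1, 0]
    x = np.linspace(0, 1, 10)
    y = x * w12 + b12
    plt.plot(x, y)
    plt.show()
```

AND gate:

OR gate: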
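`ShowData` expects a single-output model (a `W` of shape (2, 1)), while section 1 trains two sigmoid columns. Because the sigmoid is monotonic, the predicted class is the column with the larger score z, so the boundary is where z1 - z0 = 0, i.e. (w[:, 1] - w[:, 0])·x + (b1 - b0) = 0. A minimal sketch of collapsing the two columns, assuming a fixed 2000 iterations instead of the loss threshold; `w_diff`, `b_diff`, `slope` and `intercept` are illustrative names.

```python
import numpy as np

# Retrain the two-output AND model with the same updates as section 1
np.random.seed(42)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
Y = np.zeros((4, 2))
Y[np.arange(4), y] = 1
W = np.sqrt(2 / 3) * np.random.randn(2, 2)
B = np.ones((1, 2))
for _ in range(2000):  # fixed iteration count (assumption)
    out = 1 / (1 + np.exp(-(X @ W + B)))
    W -= X.T @ (out - Y)
    B -= np.mean(out - Y, axis=0, keepdims=True)

# Class 1 wins when z1 - z0 > 0, so the difference acts as one linear unit
w_diff = W[:, 1] - W[:, 0]
b_diff = B[0, 1] - B[0, 0]
slope = -w_diff[0] / w_diff[1]
intercept = -b_diff / w_diff[1]
pred = (X @ w_diff + b_diff > 0).astype(int)
print(f"x2 = {slope:.3f} * x1 + {intercept:.3f}", pred)
```

The collapsed parameters can then be passed to the plotting function as `ShowData(X, y.reshape(-1, 1), w_diff.reshape(2, 1), np.array([[b_diff]]))`; the same steps apply to the OR model.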

3) Classic multiclass SVM

```python
import numpy as np

def svm_loss(x, y):
    """
    Computes the loss and gradient for multiclass SVM classification.

    Inputs:
    - x: Input data, of shape (N, C) where x[i, j] is the score for the jth
      class for the ith input.
    - y: Vector of labels, of shape (N,) where y[i] is the label for x[i] and
      0 <= y[i] < C

    Returns a tuple of:
    - loss: Scalar giving the loss
    - dx: Gradient of the loss with respect to x
    """
    N = x.shape[0]
    correct_class_scores = x[np.arange(N), y]
    # Hinge margins with delta = 1; the correct class contributes nothing
    margins = np.maximum(0, x - correct_class_scores[:, np.newaxis] + 1.0)
    margins[np.arange(N), y] = 0
    loss = np.sum(margins) / N
    # Each positive margin adds +1 to its own class and -1 to the correct class
    num_pos = np.sum(margins > 0, axis=1)
    dx = np.zeros_like(x)
    dx[margins > 0] = 1
    dx[np.arange(N), y] -= num_pos
    dx /= N
    return loss, dx
```
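The rule that the vectorized `svm_loss` implements is easier to read in an explicit-loop form: each wrong class j with x[i, j] - x[i, y[i]] + 1 > 0 adds that margin to the loss, +1 to dx[i, j] and -1 to dx[i, y[i]], and everything is averaged over N. The sketch below (the name `svm_loss_naive` and the example scores are illustrative) also checks the gradient with centered finite differences, which is valid here because no margin sits exactly at the hinge's kink.

```python
import numpy as np

def svm_loss_naive(x, y):
    """Loop version of the multiclass hinge loss (margin 1), averaged over N."""
    N, C = x.shape
    loss = 0.0
    dx = np.zeros_like(x)
    for i in range(N):
        for j in range(C):
            if j == y[i]:
                continue
            margin = x[i, j] - x[i, y[i]] + 1.0
            if margin > 0:
                loss += margin
                dx[i, j] += 1.0
                dx[i, y[i]] -= 1.0
    return loss / N, dx / N

# Scores for 3 samples over 3 classes; sample i has correct class y[i]
x = np.array([[3.2, 5.1, -1.7],
              [1.3, 4.9, 2.0],
              [2.2, 2.5, -3.1]])
y = np.array([0, 1, 2])
loss, dx = svm_loss_naive(x, y)

# Centered finite differences on every score
h = 1e-5
dx_num = np.zeros_like(x)
for idx in np.ndindex(x.shape):
    xp, xm = x.copy(), x.copy()
    xp[idx] += h
    xm[idx] -= h
    dx_num[idx] = (svm_loss_naive(xp, y)[0] - svm_loss_naive(xm, y)[0]) / (2 * h)

print(loss)                         # (2.9 + 0 + 12.9) / 3 ≈ 5.267
print(np.max(np.abs(dx - dx_num)))
```

Calling `svm_loss(x, y)` from above on the same inputs should return the same loss and `dx`.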