已知以下参数:

左相机内参(依次为fx\cx\fy\cy):

double Ml[4] = { 1674.86, 658.302, 1674.76, 529.925 };

左相机畸变系数:(k1\k2\p1\p2\k3\k4\k5\k6)

double Dl[8] = { -0.0315542, - 0.0298582, 0.000139779, - 0.000262658, - 0.308588, 0.0312938 ,- 0.100438, - 0.376244 };

右相机内参(依次为fx\cx\fy\cy):

double Mr[4] = { 1671.91, 686.756, 1671.78, 516.332 };

左右相机之间旋转矩阵R:

double R[3][3] = { 0.888061, - 0.00125292, 0.459724,

0.00433001, 0.999975, - 0.0056391,

- 0.459705, 0.00699847, 0.888044 };

左右相机之间平移向量T:

double T[3] = { -204.265, - 0.252961, 50.1539 };

其中RT满足关系:R*Pl+T=Pr。Pl为左相机中某一三维点(xl,yl,zl),Pr为右相机相机坐标系中某一三维点(xr,yr,zr)。

问:现有左相机图像像素坐标系中一二维点uvL(900,615)(未进行畸变校正),和右相机坐标系图像像素坐标系中一二维点uvR(939.325073,597.613770)(已进行畸变校正)。已知两点为正确匹配点对,根据以上信息,编程实现:

1、 重建以右相机为世界坐标系下的真实三维点坐标(X,Y,Z)。

2、 求满足关系:R’*Pr+T’=Pl的旋转矩阵R’和平移向量T’。

3、 求重建出来的三维坐标(X,Y,Z)重投影回左右相机后分别的图像坐标uvL’和uvR’,以及误差值。

4、 求二维点uvR(939.325073,597.613770)与二维点uvL(900,615)在右相机中的极线L的距离。

- 解析:

- 先将左边的像素点去畸变,然后通过中垂线投影解算出三维坐标

- 已知三维坐标投影到两个camera中,求取像素坐标

重点解析第1题:

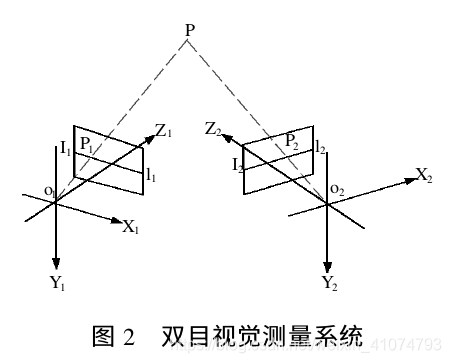

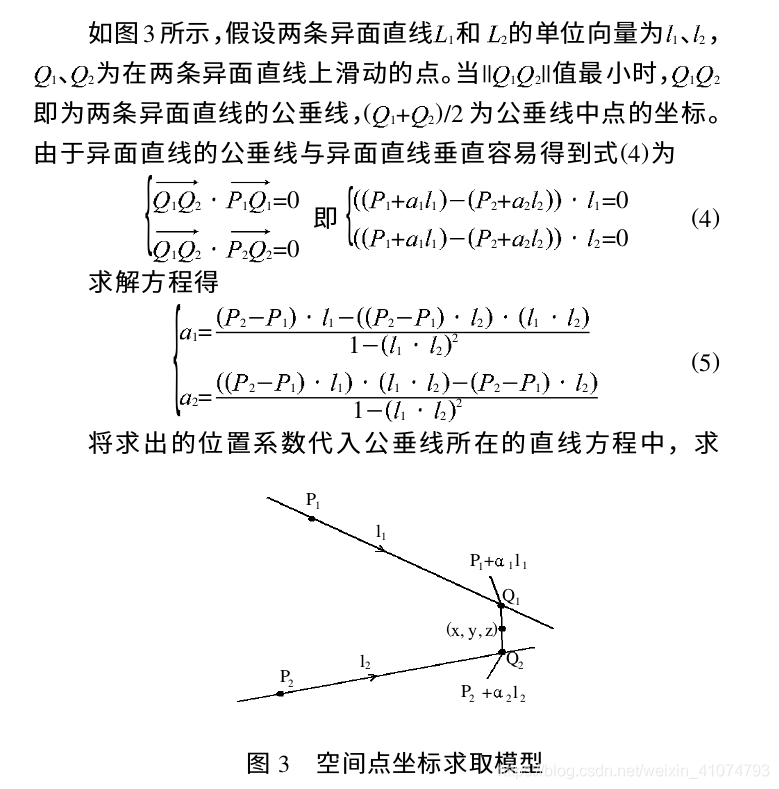

使用异面直线公垂线中点去逼近物体空间点的模型如下(来自于参考文献1的内容)

注意:要相使用上述模型,必须将所有点的坐标转换到以右camera为世界坐标系下的值

- 左相机原点:

- 左像素点:

- 第一步:像素坐标转换到左camera坐标下的三维坐标(归一化平面坐标)

- 第二步:将归一化坐标转换到右camera下的坐标

- 第一步:像素坐标转换到左camera坐标下的三维坐标(归一化平面坐标)

- 右相机原点:

- 右像素点:

#include <iostream>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include <opencv2/core/eigen.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include "sophus/so3.h"

#include "sophus/se3.h"

using namespace std;

using namespace Eigen;

using namespace cv;

void pixel2camera(const Mat &k , const Point2d &point_uv, Vector3d &point_cam){

point_cam.x()=(point_uv.x-k.at<double>(0,2))/k.at<double>(0,0);

point_cam.y()=(point_uv.y-k.at<double>(1,2))/k.at<double>(1,1);

point_cam.z()=1;

}

void pixel2camera(const Mat &k , const Vector2d &point_uv, Vector3d &point_cam){

point_cam.x()=(point_uv.x()-k.at<double>(0,2))/k.at<double>(0,0);

point_cam.y()=(point_uv.y()-k.at<double>(1,2))/k.at<double>(1,1);

point_cam.z()=1;

}

void camera2pixel(const Mat &k , Vector2d &point_uv, const Point2d &point_cam){

point_uv.x() = k.at<double>(0,0) * point_cam.x+ k.at<double>(0,2);

point_uv.y() = k.at<double>(1,1) * point_cam.y+ k.at<double>(1,2);

}

int main(){

Matrix3d R;

Vector3d T;

Vector3d PL,PR; //p点在左右相机下的三维坐标

Vector2d uvl; //左边去畸变的像素坐标

Point2d uvr= Point2d(939.325073,597.613770); //右边去畸变的像素坐标

Point2d uvl_undistorted = Point2d(900,615); //左camera的带畸变的像素坐标

Vector2d uvl_,uvr_; //重投影坐标

R<<0.888061, -0.00125292, 0.459724,

0.00433001, 0.999975, -0.0056391,

-0.459705, 0.00699847, 0.888044 ;

T<<-204.265,-0.252961,50.1539;

Mat k_left, k_right; //左相机内参

k_left=(Mat_<double>(3,3)<<1674.86, 0, 658.302,

0, 1674.76, 529.925,

0, 0, 1);

k_right = (Mat_<double>(3,3)<<1671.91, 0, 686.756,

0, 1617.78, 516.332,

0, 0, 1);

Matrix3d k_left_,k_right_;

cv2eigen(k_left,k_left_);

cv2eigen(k_right,k_right_);

vector<Point2d> uvl_array; //为调用去畸变函数准备

vector<Point2d> uvl_undistorted_array; //为调用去畸变函数准备

uvl_undistorted_array.push_back(uvl_undistorted);

//左camera的畸变参数

vector<double> Distort_Coefficients_left;

double k1= -0.0315542;double k2= -0.0298582;double p1= 0.000139779;double p2= -0.000262658;

double k3= -0.308588;double k4= 0.0312938;double k5= -0.100438;double k6= -0.376244;

Distort_Coefficients_left.push_back(k1);Distort_Coefficients_left.push_back(k2);

Distort_Coefficients_left.push_back(p1);Distort_Coefficients_left.push_back(p2);

Distort_Coefficients_left.push_back(k3);Distort_Coefficients_left.push_back(k4);

Distort_Coefficients_left.push_back(k5);Distort_Coefficients_left.push_back(k6);

undistortPoints(uvl_undistorted_array,uvl_array,k_left,Distort_Coefficients_left); //矫正左边的点

//需要将变换后的点先变化为齐次坐标系,然后还需要乘以相机参数矩阵,才是最终的变化的坐标。

Point2d _uvl_; //去畸变的归一化平面坐标

_uvl_=uvl_array.front();

camera2pixel(k_left,uvl,_uvl_);

cout<<"左边去畸变的像素坐标uvl = \n"<<uvl<<endl;

/***************公垂线中点坐标解算三维点P坐标*************/

Vector3d Or = Vector3d(0,0,0);

Vector3d Ol = T;

Vector3d Pr = Vector3d(0,0,0);

pixel2camera(k_right,uvr,Pr); //得到在右camera坐标下的uvr三维坐标

Vector3d Pl_ = Vector3d(0,0,0); //左像素点的归一化平面坐标

Vector3d Pl = Vector3d(0,0,0); //左像素点在右camera下的三维坐标

pixel2camera(k_left,uvl,Pl_);

Pl = R*Pl_+T; //得到在右camera坐标下的uvl三维坐标

Vector3d Pl_Ol = Pl-Ol;

Vector3d Pr_Or = Pr-Or;

Vector3d ll = Pl_Ol/sqrt( pow(Pl_Ol.x(),2) + pow(Pl_Ol.y(),2) + pow(Pl_Ol.z(),2) );

Vector3d lr = Pr_Or/sqrt( pow(Pr_Or.x(),2) + pow(Pr_Or.y(),2) + pow(Pr_Or.z(),2) ); //单位方向向量

Vector3d Pr_Pl = Pr-Pl;

double l1l2=ll.dot(lr);

double a1 = ( Pr_Pl.dot(ll) - ((Pr_Pl.dot(lr)) * l1l2 ))/( 1 - pow(l1l2,2) );

double a2 = ( Pr_Pl.dot(ll) * l1l2 - Pr_Pl.dot(lr) )/( 1- pow(l1l2,2) );

Vector3d Ql = Pl+a1*ll;

Vector3d Qr = Pr+a2*lr;

Vector3d Ql_Qr = Ql-Qr;

PR=(Ql+Qr)/2;

cout<<"公垂线长度||Ql_Qr|| = \n"<<sqrt(pow(Ql_Qr.x(),2)+pow(Ql_Qr.y(),2)+pow(Ql_Qr.z(),2))<<endl;

cout<<"以右camera为世界坐标系的三维坐标 PR = \n"<<PR<<endl;

cout<<"满足关系:R’*Pr+T’=Pl的旋转矩阵R' = \n"<<R.transpose()<<endl; //右camera到左camera的转换

cout<<"满足关系:R’*Pr+T’=Pl的平移向量T' = \n"<<-T<<endl;

//计算重投影像素坐标

Sophus::SE3 SE3_Rt(R.transpose(), -T); //注意旋转矩阵的方向

Vector4d p_=SE3_Rt.matrix() * Vector4d(PR.x(),PR.y(),PR.z(),1); //齐次坐标化为非齐次坐标,注意维度

Vector3d uvl_1 = k_left_ * Vector3d( p_.x()/p_.z(),p_.y()/p_.z(),1); //注意:先除z再与K相乘

uvl_=Vector2d(uvl_1.x(),uvl_1.y());

cout<<"左camera重投影的像素坐标 uvl'= \n"<<uvl_<<endl;

uvr_.x()=k_right_(0,0)*PR.x()/PR.z()+k_right_(0,2);

uvr_.y()=k_right_(1,1)*PR.y()/PR.z()+k_right_(1,2);

cout<<"右camera重投影的像素坐标 uvr' = \n"<<uvr_<<endl;

//计算重投影坐标误差,两点距离

double error_left =sqrt(pow(uvl.x()-uvl_.x(),2)+pow(uvl.y()-uvl_.y(),2) );

double error_right=sqrt(pow(uvr.x-uvr_.x(),2)+pow(uvr.y-uvr_.y(),2) );

cout<<"左边的重投影误差为:"<<error_left<<endl;

cout<<"右边的重投影误差为:"<<error_right<<endl;

//计算F_matrix

Matrix3d f_matrix;

Matrix3d T_hat = Sophus::SO3::hat(T);

f_matrix = (k_right_.inverse().transpose()) * T_hat * R * k_right_.inverse();

cout<<"fundamental_matrix = \n"<<f_matrix<<endl;

Mat fundamental;

eigen2cv(f_matrix,fundamental);

//计算极线L的距离

Mat correspondent_lines;

vector<Point2d> points;

points.push_back(uvr);

computeCorrespondEpilines(points,2,fundamental,correspondent_lines); //极线计算函数

//whichImage Index of the image (1 or 2) that contains the points .

cout<<"correspondent_lines= \n"<<correspondent_lines<<endl;

return 0;

}

- 参考文献:

[1] 罗世民, 李茂西. 双目视觉测量中三维坐标的求取方法研究[D]. , 2006.

[2] Gao X, Zhang T, Liu Y, et al. Visual SLAM fourteen lectures: From theory to practice[M].Beijing: Electronic Industry Press, 2017.高翔, 张涛, 刘毅, 等. 视觉SLAM十四讲:从理论到实践[M]. 北京: 电子工业出版社, 2017.

[3] Bradski G, Kaehler A, 于仕琪, 等. 学习 OpenCV (中文版)[J]. 2009.