在学习完SVM之后,我体会到SVM确实是一个很好的算法,首先它是我学习到的第二个非线性分类器,在之前的229课程中,我们知道核函数以及软间隔的引入成功地帮助我们可以用SVM解决线性不可分的问题。我们学习了不少二分类的分类器,比如SVM、逻辑斯蒂回归等,这些算法在解决二分类问题时很有用,但我们日常碰到的问题往往有多个类别,这个时候我们显得举足无措,所以我在这里想谈的是如何将二分类算法应用到多分类问题上(以SVM这个二分类算法)为例。公式不好编辑,我都是在word中写好再截图的。

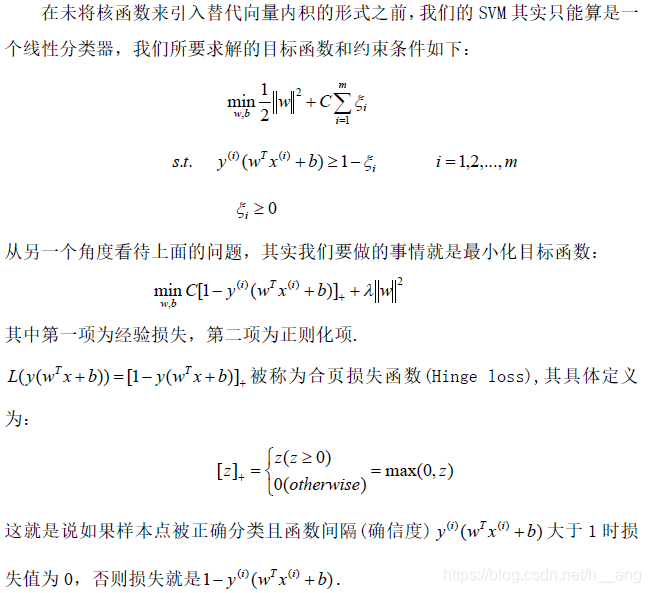

一:从合页损失函数的角度看二分类SVM

二:二分类算法应用到多分类问题的方法

1.直接法:直接在目标函数上进行修改,将多个分类面的参数求解合并到一个最优化问题中,通过求解该最优化问题“一次性”实现多类分类。这种方法实现起来比较困难,需要自己重新构造目标函数,只适合用于小型问题中。

2.间接法:主要是通过组合多个二分类器来实现多分类的构造,常见的方法有one-versus-rest(一对多法)和one-versus-one(一对一法)

想要的具体了解这两种方法优缺点可以参考这篇博文:

www.cnblogs.com/CheeseZH/p/5265959.html

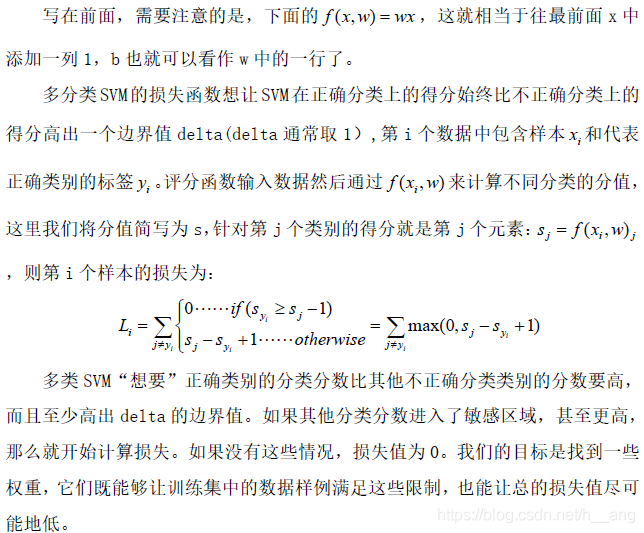

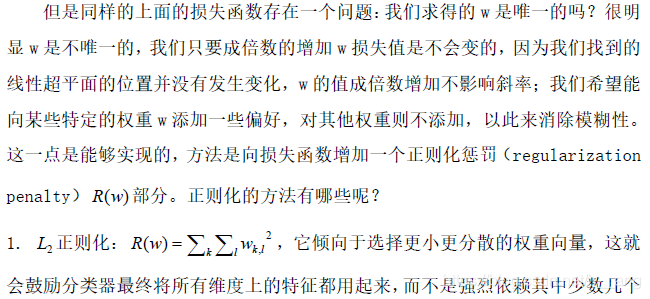

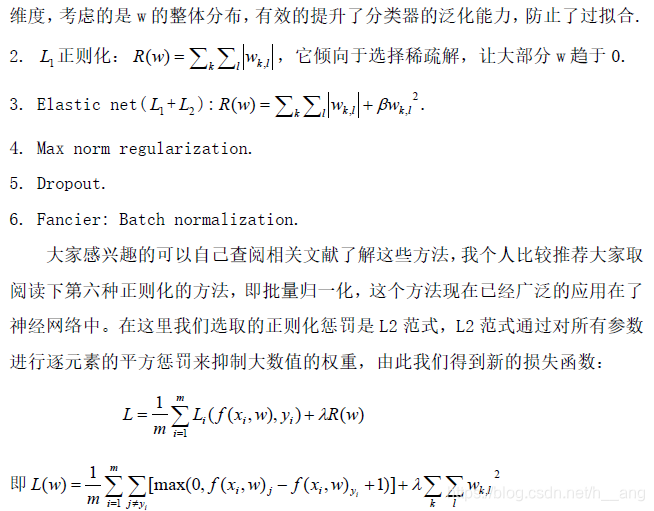

三:多分类SVM(直接法)

# 使用循环迭代的python代码

def svm_loss_naive(W, X, y, reg):

dW = np.zeros(W.shape)

# compute the loss and the gradient

num_classes = W.shape[1]

num_train = X.shape[0]

loss = 0.0

# 逐个计算每个样本的loss

for i in range(num_train):

# 计算每个样本的各个分类得分

scores = X[i].dot(W)

correct_class_score = scores[y[i]]

# 计算每个分类的得分,计入loss中

for j in range(num_classes):

# 根据公式,j==y[i]的就是本身的分类,不用算了

if j == y[i]:

continue

margin = scores[j] - correct_class_score + 1 # note delta = 1

#如果计算的margin > 0,那么就要算入loss,

if margin > 0:

loss += margin

dW[:, y[i]] += -X[i].T

dW[:, j] += X[i].T

loss /= num_train

dW /= num_train

# Add regularization to the loss.

loss += reg * np.sum(W * W)

dW += reg * W

return loss, dW

# 使用向量计算的python代码

def svm_loss_vectorized(W, X, y, reg):

loss = 0.0

dW = np.zeros(W.shape) # initialize the gradient as zero

scores = X.dot(W)

num_train = X.shape[0]

#利用np.arange(),correct_class_score变成了 (num_train,y)的矩阵

correct_class_score = scores[np.arange(num_train), y]

correct_class_score = np.reshape(correct_class_score, (num_train, -1))

margins = scores - correct_class_score + 1

margins = np.maximum(0, margins)

#然后这里计算了j=y[i]的情形,所以把他们置为0

margins[np.arange(num_train), y] = 0

loss += np.sum(margins) / num_train

loss += reg * np.sum( W * W)

margins[margins > 0] = 1

# 因为j=y[i]的那一个元素的grad要计算 >0 的那些次数次

row_sum = np.sum(margins, axis=1)

margins[np.arange(num_train), y] = -row_sum.T

dW = np.dot(X.T, margins)

dW /= num_train

dW += reg * W

return loss, dW

四:多分类SVM(间接法)

我自己写了一个十分类的手写数字识别算法,采用的是一对多的方法,由于这个方法直接使用的话会存在正负样本数目不匹配的问题,所以我先对原样本进行了抽取,分别建立了十个文件夹,每个文件夹中包括原样本中要建立的分类器的数字的所有文件和其他数字的文件的一部分,保证正负样本数目差不多一样多。比如我先做是不是1的分类器,原样本中数字0-9的样本各有180个,那我就会1的所有样本作为正样本,而从0和2-9的样本中随机抽取20个样本合起来就有180个负样本了,这样就有效的解决了正负样本不匹配的问题;我之所以选用一对多的方法是因为10分类的问题要是用一对一的方法会存在分类器数量过多,需要45个分类器,而采用这个一对多只需要10个分类器就足够了。

有关二分类SVM的实现方法可以看我以前的文章:

SVM(上):https://blog.csdn.net/h__ang/article/details/84589030

SVM(下):https://blog.csdn.net/h__ang/article/details/84644695

先对源文件夹进行处理,下面的代码很粗糙,但是可以大概看出来我是怎么做的:

import numpy as np

import os

import random

import shutil

# 获取原始样本中数字1-9各自的数目

def get_digitalnum(dirname):

files = os.listdir(dirname)

m = len(files)

m_num = [0]*10

for i in range(m):

file_name = files[i]

filestr = file_name.split('.')[0]

labelnum = int(filestr.split('_')[0])

if (labelnum == 0):

m_num[0] += 1

elif(labelnum == 1):

m_num[1] += 1

elif(labelnum == 2):

m_num[2] += 1

elif(labelnum == 3):

m_num[3] += 1

elif(labelnum == 4):

m_num[4] += 1

elif (labelnum == 5):

m_num[5] += 1

elif (labelnum == 6):

m_num[6] += 1

elif (labelnum == 7):

m_num[7] += 1

elif (labelnum == 8):

m_num[8] += 1

elif (labelnum == 9):

m_num[9] += 1

return m_num

# 分开数据集

def make_diffnum(sou_file, tar_file):

# shutil.rmtree(tar_file)

# 创建文件夹

os.makedirs(tar_file)

# 取图片的原始路径

pathDir = os.listdir(sou_file)

m = len(pathDir)

souce_num = int(tar_file.split('_')[0])

for i in range(m):

file_name = pathDir[i]

filestr = file_name.split('.')[0]

labelnum = int(filestr.split('_')[0])

if(labelnum == souce_num):

shutil.copy(sou_file + '/' + file_name, tar_file + '/')

# 制作一对多的数据集,观察到每个数字的图片数量在180左右,共十个数字,为了保证正负样本的图片集数量差不多,

# 每次从其他数字的负样本中随机挑选出20个图片放入新的文件夹

def move_file(source_num):

# 清空文件夹

# shutil.rmtree(tar_file)

# 创建文件名

target_file = str(source_num)+"_vs_othernum"

# 创建文件夹

os.makedirs(target_file)

# 自定义抽取图片的比例,这里考虑到每一个分类器正例的数目选择20

picknumber = 10

for i in range(10):

if(source_num == i):

files = os.listdir(str(source_num)+"_testDigits")

print(files)

number = len(files)

for i in range(number):

file_name = files[i]

shutil.copy(str(source_num)+"_testDigits"+'/'+file_name, target_file + '/')

else:

pathdir = os.listdir(str(i)+"_testDigits")

sample = random.sample(pathdir, picknumber) # 随机选取picknumber数量的样本图片

for name in sample:

shutil.copy(str(i)+"_testDigits"+'/'+name, target_file + '/')

if __name__ == "__main__":

# train_m = get_digitalnum("trainingDigits")

# print(train_m)

test_m = get_digitalnum("testDigits")

print(test_m)

for i in range(10):

m1 = get_digitalnum(str(i)+"_vs_othernum")

print(m1)

"""

# 源文件地址前缀

source_file = "_testDigits"

for i in range(10):

move_file(i)

"""

"""

source_file = "testDigits"

# 将每一类数字分开作为一个单独的数据集

for i in range(10):

#make_diffnum(source_file, str(i)+"_testDigits")

m2 = get_digitalnum(str(i)+"_testDigits")

print(m2)"""

接下来就是10分类SVM的代码:

import numpy as np

import os

import random

import matplotlib.pyplot as plt

# 将 32*32 的数字格式转换为一个 1*1024 的数组

def ImageToVector(filename):

fr = open(filename)

m = len(fr.readlines())

vector = np.zeros((1, 1024))

fr = open(filename)

for i in range(m):

row = fr.readline().strip()

for j in range(m):

vector[0, 32*i+j] = row[j]

return vector

# 制作数据集

def load_Image(dirname, target_num):

files = os.listdir(dirname)

m = len(files)

trainset = np.zeros((m, 1024))

labelset = []

for i in range(m):

file_name = files[i]

filestr = file_name.split('.')[0]

labelnum = int(filestr.split('_')[0])

if(labelnum == target_num):

labelset.append(1)

trainset[i] = ImageToVector("%s/%s" % (dirname, file_name))

else:

labelset.append(-1)

trainset[i] = ImageToVector("%s/%s" % (dirname, file_name))

return np.mat(trainset), np.mat(labelset).T

# 核函数

def keanel_func(X, A, kTup):

m, n = np.shape(X)

K = np.mat(np.zeros((m, 1)))

if(kTup[0] == 'lin'):

K = X * A.T

elif(kTup[0] == 'rbf'):

for j in range(m):

divider = X[j] - A

divider_up = divider * divider.T

K[j] = np.exp(divider_up/(-1 * (kTup[1]**2)))

else:

raise NameError("That Kernel is not recognized")

return K

# 创建一个对象,包含程序需要的多种属性参数

class opstruct:

def __init__(self, dataMat, labelMat, C, toler, KTup):

self.dataMat = dataMat

self.labelMat = labelMat

self.C = C

self.tol = toler

self.m = np.shape(dataMat)[0]

self.alphas = np.mat(np.zeros((self.m, 1)))

self.b = 0

# Ei的缓存变量

self.eicache = np.mat(np.zeros((self.m, 2)))

self.K = np.mat(np.zeros((self.m, self.m)))

for i in range(self.m):

self.K[:, i] = keanel_func(self.dataMat, self.dataMat[i], KTup)

# 随机选择一个和i不同的j

def selectjrand(i, m):

j = i

while(j == i):

j = int(random.uniform(0, m))

return j

# 对alphas[j]的值进行调整

def clipAlphs(alphaj, H, L):

if(alphaj > H):

alphaj = H

if(alphaj < L):

alphaj = L

return alphaj

# 计算Ek

def calculate_Ek(os, k):

fxk = float(np.multiply(os.alphas, os.labelMat).T * os.K[:, k]) + os.b

Ek = fxk - float(os.labelMat[k])

return Ek

# 启发式内循环,选择alphaj

def selectj(i, os, Ei):

maxk = -1

maxDeltaE = 0

Ej = 0

os.eicache[i] = [1, Ei]

# validEieacheList = np.nonzero(os.eicache[:, 0].A)[0]

validEieacheList = np.where(os.eicache[:, 0].A == 1)[0]

if (len(validEieacheList > 1)):

for k in validEieacheList:

if (k == i):

continue

Ek = calculate_Ek(os, k)

deltaE = abs(Ek - Ei)

if(deltaE > maxDeltaE):

maxk = k

maxDeltaE = deltaE

Ej = Ek

return maxk, Ej

else:

j = selectj(i, os.m)

Ej = calculate_Ek(os, j)

return j, Ej

# 计算误差Ek放入eieache缓存

def updateEk(os, k):

Ek = calculate_Ek(os, k)

os.eicache[k] = [1, Ek]

# 更新每次启发式选择的两个alpha的值

def inner(i, os):

# 计算Ei

Ei = calculate_Ek(os, i)

# 如果alphas[i]不满足kkt条件就进入下面的优化

if (((os.labelMat[i] * Ei < -os.tol) and (os.alphas[i] < os.C)) or

((os.labelMat[i] * Ei > os.tol) and (os.alphas[i] > 0))):

# 由内循环选择的j

j, Ej = selectj(i, os, Ei)

# 保存alphai和alphaj的值

alphai_old = os.alphas[i].copy()

alphaj_old = os.alphas[j].copy()

# L和H用于将alphas[j]调整到0-C之间。如果L==H,就不做任何改变,直接return 0

if (os.labelMat[i] != os.labelMat[j]):

L = max(0, os.alphas[j] - os.alphas[i])

H = min(os.C, os.C + os.alphas[j] - os.alphas[i])

else:

L = max(0, os.alphas[j] + os.alphas[i] - os.C)

H = min(os.C, os.alphas[j] + os.alphas[i])

if L == H:

print("L==H")

return 0

# 计算alphaj的值

# eta = os.dataMat[i] * os.dataMat[i].T + os.dataMat[j] * os.dataMat[j].T - 2 * os.dataMat[i] * os.dataMat[j].T

eta = os.K[i, i] + os.K[j, j] - 2 * os.K[i, j]

if (eta <= 0):

print("eta <= 0")

return 0

os.alphas[j] += os.labelMat[j] * (Ei - Ej) / eta

os.alphas[j] = clipAlphs(os.alphas[j], H, L)

updateEk(os, j)

if (os.alphas[j] - alphaj_old <= 0.00001):

print("j not changing enough")

return 0

os.alphas[i] += os.labelMat[i] * os.labelMat[j] * (alphaj_old - os.alphas[j])

updateEk(os, i)

# 计算b

b1 = (os.b - Ei - os.labelMat[i] * os.K[i, i] * (os.alphas[i] - alphai_old) - os.labelMat[i] * os.K[i, j] *

(os.alphas[j] - alphaj_old))

b2 = (os.b - Ej - os.labelMat[i] * os.K[i, j] * (os.alphas[i] - alphai_old) - os.labelMat[i] * os.K[j, j] *

(os.alphas[j] - alphaj_old))

if (0 < os.alphas[i]) and (os.alphas[i] < os.C):

os.b = b1

elif (0 < os.alphas[j]) and (os.alphas[j] < os.C):

os.b = b2

else:

os.b = (b1 + b2) / 2.0

return 1

else:

return 0

# 完整的smo算法

def smo_full(dataMat, labelMat, C, toler, maxIter, kTup):

# 创建一个opstruct对象

os = opstruct(dataMat, labelMat, C, toler, kTup)

iter = 0

entireset = True

alphaPairsChanged = 0

while ((iter < maxIter) and ((entireset) or (alphaPairsChanged > 0))):

alphaPairsChanged = 0

if (entireset):

for i in range(os.m):

alphaPairsChanged += inner(i, os)

print("fullSet, iter: %d i:%d, pairs changed %d" % (iter, i, alphaPairsChanged))

iter += 1

else:

# 遍历所有的非边界alpha值,也就是不在边界0或C上的值。

nonBoundIs = np.nonzero((os.alphas.A > 0) * (os.alphas.A < C))[0]

for i in nonBoundIs:

alphaPairsChanged += inner(i, os)

print("non-bound, iter: %d i:%d, pairs changed %d" % (iter, i, alphaPairsChanged))

iter += 1

if (entireset):

entireset = False

elif (alphaPairsChanged == 0):

entireset = True

print("iteration number: %d" % iter)

print("entireset:", entireset, "alphaPairsChanged:", alphaPairsChanged)

return os.b, os.alphas

# 基于上面的alphas求w

def calculate_w(dataMat,labelMat,alphas):

print("dataMat: ", dataMat.shape)

print("labelMat: ", labelMat.shape)

m, n = dataMat.shape

w = np.zeros((n, 1))

for i in range(m):

w += np.multiply(alphas[i]*labelMat[i], dataMat[i].T)

return w

# 测试函数

def Test(w, b, datamat, labelmat, alphas, kTup):

print("testing:")

test_accurancy = -1

train_accurancy = -1

m, n = np.shape(datamat)

svInd = np.nonzero(alphas.A > 0)[0]

print(svInd)

sVs = datamat[svInd]

labelsVs = labelmat[svInd]

print("sVs_shape:", sVs.shape)

print("there are %d Support Vectors" %(len(svInd)))

errorcount1 = 0

for i in range(m):

kernelEval = keanel_func(sVs, datamat[i], kTup)

#print(kernelEval)

predict = kernelEval.T * np.multiply(labelsVs, alphas[svInd]) + b

#print(np.sign(predict), labelmat[i])

if(np.sign(predict) != labelmat[i]):

errorcount1 += 1

train_accurancy = 1 - float(errorcount1)/m

print("the training error rate is:%f" %(float(errorcount1)/m))

return train_accurancy, len(svInd), svInd, sVs, labelsVs

# 定义一个 plotData 函数,输入参数是 数据 X 和标志 flag: y,返回作图操作 plt, p1, p2 , 目的是为了画图

def plotData(X, y):

# 找到标签为1和0的索引组成数组赋给pos 和 neg

pos = np.where(y == 1)

neg = np.where(y == -1)

p1 = plt.scatter(X[pos, 0].tolist(), X[pos, 1].tolist(), marker='s', color='red')

p2 = plt.scatter(X[neg, 0].tolist(), X[neg, 1].tolist(), marker='o', color='green')

return p1,p2

# 加载整个数据集

def load_Image2(filename):

files = os.listdir(filename)

m = len(files)

trainset = np.zeros((m, 1024))

labelset = []

for i in range(m):

file_name = files[i]

filestr = file_name.split('.')[0]

labelnum = int(filestr.split('_')[0])

labelset.append(labelnum)

trainset[i] = ImageToVector("%s/%s" % (filename, file_name))

return np.mat(trainset), np.mat(labelset).T

if __name__ == "__main__":

train_accur = [0] * 10

sv_num = [0]*10

svInd = [0]*10

sVs = [0]*10

labelsVs = [0]*10

k1 = 10

ktup = ('rbf', k1)

# 测试

testmat, testlabelmat = load_Image2("testDigits")

print(testmat.shape)

print(testlabelmat.shape)

predict = [-1]*10

m1, n1 = np.shape(testmat)

print(m1)

k = random.randint(0, m1)

print(k)

print(testlabelmat[k])

accur = 0

for i in range(m1):

for j in range(10):

trainmat, labelmat = load_Image("for_train_onevsmore/" + str(j) + "_vs_othernum", j)

print("trainmat.shape:", trainmat.shape)

print("labelmat.shape:", labelmat.shape)

b, alphas = smo_full(trainmat, labelmat, 200, 0.0001, 10000, ktup)

w = calculate_w(trainmat, labelmat, alphas)

train_accur[j], sv_num[j], svInd[j], sVs[j], labelsVs[j] = Test(w, b, trainmat, labelmat, alphas, ktup)

kernelEval = keanel_func(sVs[j], testmat[k], ktup)

predict[j] = float(kernelEval.T * np.multiply(labelsVs[j], alphas[svInd[j]]) + b)

print(np.argmax(predict), float(testlabelmat[k]))

"""

# 设置核函数有关参数

k1 = 9.5

train_accurancy_list = []

test_accurancy_list = []

k_list = []

svnum_list = []

while (k1 <= 10.5):

ktup = ('rbf', k1)

b, alphas = smo_full(trainmat, labelmat, 200, 0.0001, 10000, ktup)

w = calculate_w(trainmat, labelmat, alphas)

train_accur, test_accur, sv_num = Test(w, b, trainmat, labelmat, alphas, ktup)

k_list.append(k1)

train_accurancy_list.append(train_accur)

test_accurancy_list.append(test_accur)

svnum_list.append(sv_num)

k1 += 0.1

print(train_accurancy_list)

print(test_accurancy_list)

print(k_list)

print(svnum_list)

"""