1.创建项目:scrapy startproject mySpider

2.创建爬虫:scrapy genspider –t crawl tencent3 hr.tencent.com

3.安装需要的软件包

4.tencent3.py代码

# -*- coding: utf-8 -*-

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

from scrapy_redis.spiders import RedisCrawlSpider

class TencentSpider(RedisCrawlSpider):

name = 'tencent3'

allowed_domains = ['hr.tencent.com']

#start_urls = ['https://hr.tencent.com/position.php']

redis_key='tencent3:start_urls'

rules = (

Rule(LinkExtractor(restrict_xpaths=('//tr[@class="f"]',)), follow=True),

Rule(LinkExtractor(restrict_xpaths=('//tr[@class="odd"]','//tr[@class="even"]')), callback='parse_item'),

)

def parse_item(self, response):

item = {}

item['name'] = response.xpath('//td[@id="sharetitle"]/text()').extract_first()

item['address'] = response.xpath('//tr[@class="c bottomline"]/td[1]/text()').extract_first()

print(item)

# yield item

'''

启动命令:

sudo redis-server /etc/redis/redis.conf

redis-cli

select 15

LPUSH tencent3:start_urls https://hr.tencent.com/position.php

'''

5.配置settings.py里面的文件

DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

SCHEDULER = "scrapy_redis.scheduler.Scheduler"

SCHEDULER_PERSIST = True

REDIS_URL = 'redis://192.168.12.189:6379/15

#192.168.12.189为本地虚拟机ip地址

当然还需要在settings.py其他基础配置,这里不做详细介绍

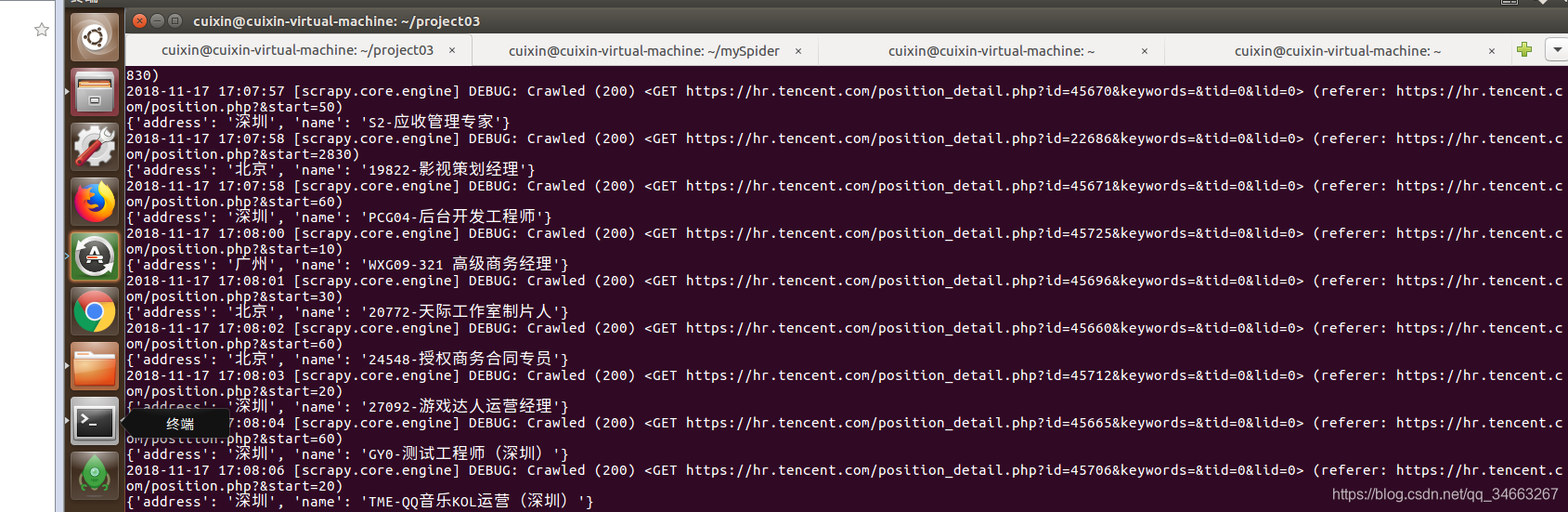

5.运行爬虫项目scrapy crawl tencent3

6.开启redis并配置建和值

sudo redis-server /etc/redis/redis.conf

redis-cli

select 15

LPUSH tencent3:start_urls https://hr.tencent.com/position.php

7.成功爬取