版权声明:本文为博主原创文章,转载请注明出处。 https://blog.csdn.net/Yellow_python/article/details/86707663

内置样本

影评正负2分类(已编码)

from keras.datasets import imdb # Internet Movie Database

# num_words设定较小时,会发现高频词多是停词

(x, y), _ = imdb.load_data(num_words=1)

print(x.shape, y.shape) # (25000,) (25000,)

# 词与ID间的映射

word2id = imdb.get_word_index()

id2word = {i: w for w, i in word2id.items()}

print(x[0])

print(' '.join([id2word[i] for i in x[0]]))

序列截断或补齐为等长

from keras.preprocessing.sequence import pad_sequences

maxlen = 2

print(pad_sequences([[1, 2, 3], [1]], maxlen))

"""[[2 3] [0 1]]"""

print(pad_sequences([[1, 2, 3], [1]], maxlen, value=9))

"""[[2 3] [9 1]]"""

print(pad_sequences([[1, 2, 3], [1]], maxlen, padding='post'))

"""[[2 3] [1 0]]"""

print(pad_sequences([[1, 2, 3], [1]], maxlen, truncating='post'))

"""[[1 2] [0 1]]"""

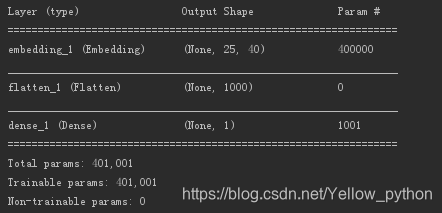

词嵌入

from keras.datasets.imdb import load_data # 影评情感2分类

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Embedding, Flatten, Dense

"""配置"""

num_words = 10000 # 按词频大小取样本前10000个词

input_dim = num_words # 词库大小(必须>=num_words)

maxlen = 25 # 序列长度

output_dim = 40 # 词向量维度

batch_size = 128

epochs = 2

"""数据读取与处理"""

(x, y), _ = load_data(num_words=num_words)

x = pad_sequences(x, maxlen)

"""建模"""

model = Sequential()

# 词嵌入:词库大小、词向量维度、固定序列长度

model.add(Embedding(input_dim, output_dim, input_length=maxlen))

# 平坦化:maxlen * output_dim

model.add(Flatten())

# 输出层:2分类

model.add(Dense(units=1, activation='sigmoid'))

# RMSprop优化器、二元交叉熵损失

model.compile('rmsprop', 'binary_crossentropy', ['acc'])

# 训练

model.fit(x, y, batch_size, epochs)

"""模型可视化"""

model.summary()

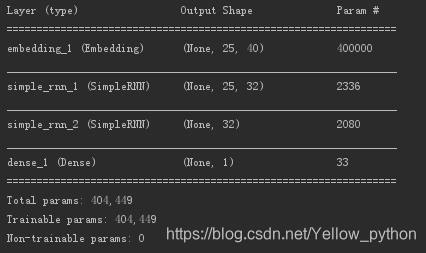

词嵌入+RNN

from keras.datasets.imdb import load_data # 影评情感2分类

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Embedding, Dense, SimpleRNN

import matplotlib.pyplot as mp

"""配置"""

num_words = 10000 # 按词频大小取样本前10000个词

input_dim = num_words # 词库大小(必须>=num_words)

maxlen = 25 # 序列长度

output_dim = 40 # 词向量维度

units = 32 # RNN神经元数量

batch_size = 128

epochs = 3

"""数据读取与处理"""

(x, y), _ = load_data(num_words=num_words)

x = pad_sequences(x, maxlen)

"""建模"""

model = Sequential()

model.add(Embedding(input_dim, output_dim, input_length=maxlen))

model.add(SimpleRNN(units, return_sequences=True)) # 返回序列全部结果

model.add(SimpleRNN(units, return_sequences=False)) # 返回序列最尾结果

model.add(Dense(units=1, activation='sigmoid'))

model.summary()

"""编译、训练"""

model.compile('rmsprop', 'binary_crossentropy', ['acc'])

history = model.fit(x, y, batch_size, epochs, verbose=2,

validation_split=.1) # 取10%样本作验证

"""精度曲线"""

acc = history.history['acc']

val_acc = history.history['val_acc']

mp.plot(range(epochs), acc)

mp.plot(range(epochs), val_acc)

mp.show()

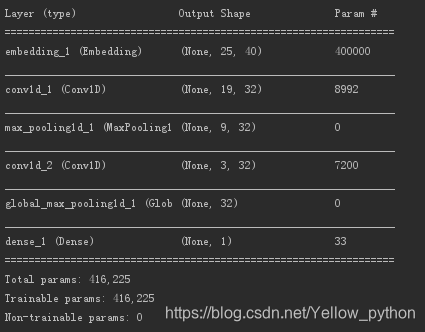

词嵌入+CNN

from keras.datasets.imdb import load_data # 影评情感2分类

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Embedding, Dense,\

Conv1D, MaxPool1D, GlobalMaxPool1D

"""配置"""

num_words = 10000 # 按词频大小取样本前10000个词

input_dim = num_words # 词库大小(必须>=num_words)

maxlen = 25 # 序列长度

output_dim = 40 # 词向量维度

filters = 32 # 卷积层滤波器数量

kernel_size = 7 # 卷积层滤波器大小

batch_size = 128

epochs = 3

"""数据读取与处理"""

(x, y), _ = load_data(num_words=num_words)

x = pad_sequences(x, maxlen)

"""建模"""

model = Sequential()

model.add(Embedding(input_dim, output_dim, input_length=maxlen))

model.add(Conv1D(filters, kernel_size, activation='relu'))

model.add(MaxPool1D(pool_size=2)) # strides默认等于pool_size

model.add(Conv1D(filters, kernel_size, activation='relu'))

model.add(GlobalMaxPool1D()) # 对于时序数据的全局最大池化

model.add(Dense(units=1, activation='sigmoid'))

model.summary()

"""编译、训练"""

model.compile('rmsprop', 'binary_crossentropy', ['acc'])

model.fit(x, y, batch_size, epochs, 2)

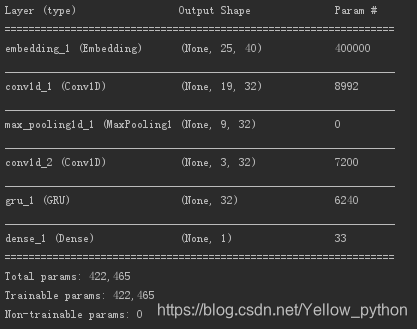

CNN+RNN

from keras.datasets.imdb import load_data # 影评情感2分类

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Embedding, Dense,\

Conv1D, MaxPool1D, GRU # Gated Recurrent Unit

"""配置"""

num_words = 10000 # 按词频大小取样本前10000个词

input_dim = num_words # 词库大小(必须>=num_words)

maxlen = 25 # 序列长度

output_dim = 40 # 词向量维度

filters = 32 # 卷积层滤波器数量

kernel_size = 7 # 卷积层滤波器大小

units = 32 # RNN神经元数量

batch_size = 128

epochs = 3

"""数据读取与处理"""

(x, y), _ = load_data(num_words=num_words)

x = pad_sequences(x, maxlen)

"""建模"""

model = Sequential()

model.add(Embedding(input_dim, output_dim, input_length=maxlen))

model.add(Conv1D(filters, kernel_size, activation='relu'))

model.add(MaxPool1D())

model.add(Conv1D(filters, kernel_size, activation='relu'))

model.add(GRU(units)) # 门限循环单元网络

model.add(Dense(1, activation='sigmoid'))

model.summary()

"""编译、训练"""

model.compile('rmsprop', 'binary_crossentropy', ['acc'])

model.fit(x, y, batch_size, epochs, 2)