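In this post we will work step by step through tuning a model on the Fashion MNIST dataset, and look at how each step affects the model's accuracy. (Throughout the tuning, the basic model architecture stays roughly the same.)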

Without further ado, here is the task:

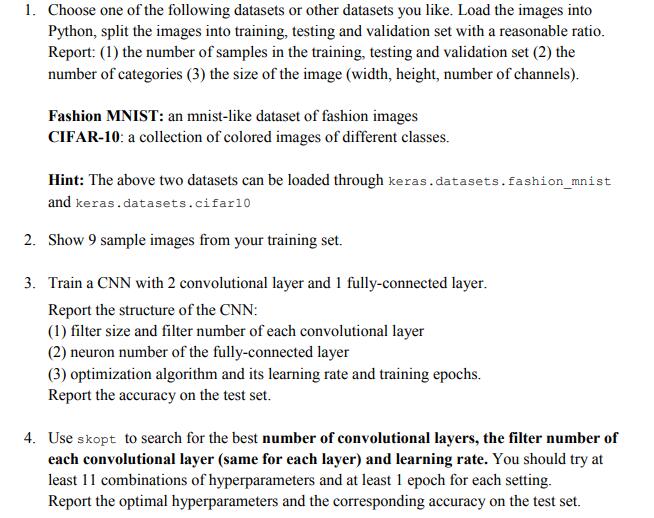

The main structure of the model is as follows (this is the original code I started from; the framework is Keras):

The accuracy of the untuned baseline run is:

Next, change epochs from 1 to 12.

After adding dropout, the model's structure is as follows:

```
Layer (type)                 Output Shape              Param #
=================================================================
layer_conv_1 (Conv2D)        (None, 28, 28, 32)        320
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 14, 14, 32)        0
_________________________________________________________________
layer_conv_2 (Conv2D)        (None, 14, 14, 64)        18496
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
dropout_1 (Dropout)          (None, 7, 7, 64)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_1 (Dense)              (None, 64)                200768
_________________________________________________________________
dropout_2 (Dropout)          (None, 64)                0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                650
=================================================================
Total params: 220,234
Trainable params: 220,234
Non-trainable params: 0
```
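As a quick check on the summary above, the parameter counts can be reproduced by hand. The sketch below is plain Python and assumes 3x3 kernels with biases on a 28x28x1 input; that is inferred from the reported shapes and counts, not taken from the original code. The pooling, dropout and flatten layers have no weights, so they contribute 0.

```python
# Reproduce the Param # column of the model summary.
# Assumption: 3x3 kernels with biases on a single-channel 28x28 input,
# inferred from the reported counts rather than from the original code.

def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias.
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_features, units):
    # Fully connected: one weight per input per unit, plus one bias per unit.
    return (in_features + 1) * units

layers = {
    "layer_conv_1": conv2d_params(3, 3, 1, 32),
    "layer_conv_2": conv2d_params(3, 3, 32, 64),
    # Two 2x2 max-poolings shrink 28x28 to 7x7; flattening 7*7*64 gives 3136 inputs.
    "dense_1": dense_params(7 * 7 * 64, 64),
    "dense_2": dense_params(64, 10),
}

total = sum(layers.values())
for name, count in layers.items():
    print(f"{name:<14}{count:>8,}")
print(f"{'Total':<14}{total:>8,}")
```

Almost all of the weights (200,768 of 220,234) sit in `dense_1`, which is why a dropout layer in front of and behind it is a natural place to regularize.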

The accuracy at this point is:

```
55000/55000 [==============================] - 3s 50us/step - loss: 0.2574 - acc: 0.9063 - val_loss: 0.2196 - val_acc: 0.9192
Test loss: 0.2387163639187813
Test accuracy: 0.9133
Model saved
Select the first 9 images
```

Adding dropout gave a solid improvement in classification accuracy: test accuracy rose by 0.0276, to 0.9133.
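For reference, Keras's `Dropout` layer implements inverted dropout: during training each activation is zeroed with probability `rate` and the surviving ones are scaled by 1/(1 - rate), so the layer does nothing at inference time. The plain-Python sketch below only illustrates that idea; the function name `inverted_dropout` is hypothetical, and the dropout rates used in the article's model are not visible in the summary.

```python
import random

def inverted_dropout(values, rate, training=True, rng=random):
    """Zero each value with probability `rate`; scale survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        # At inference time dropout is the identity.
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

rng = random.Random(0)
activations = [1.0] * 10000
dropped = inverted_dropout(activations, rate=0.25, rng=rng)

# Because survivors are scaled up, the mean activation stays close to 1.0.
print(sum(dropped) / len(dropped))
print(inverted_dropout(activations[:3], rate=0.25, training=False))
```

Randomly removing units this way keeps the network from relying on any single feature, which is the usual explanation for the generalization gain seen above.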

After that, I tried increasing the number of epochs further, but it no longer had any noticeable effect on accuracy.

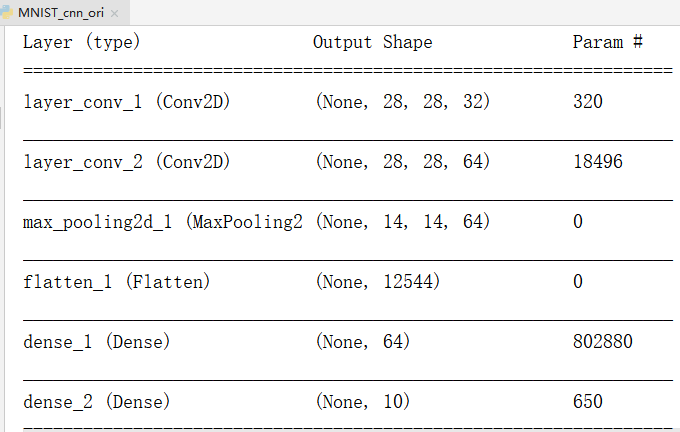

So I turned to data augmentation to see how it changes classification accuracy. The code is as follows:

I used 24 epochs, because too few epochs could leave the model undertrained and hold its accuracy down. The results are as follows:

```
429/429 [==============================] - 22s 52ms/step - loss: 0.5140 - acc: 0.8115 - val_loss: 0.3480 - val_acc: 0.8751
Test loss: 0.3479545822381973
Test accuracy: 0.8751
Model saved
Select the first 9 images
```

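As the log shows, the training accuracy is 0.8115 and the validation accuracy is 0.8751. Since training accuracy is still below validation accuracy, the model has room to improve and is still some way from overfitting; training for more epochs and adding more units to the network should raise its accuracy further.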

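However, with data augmentation the model converged more slowly, and accuracy also dropped somewhat (test accuracy 0.8751, compared with 0.9133 for the dropout-only model).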

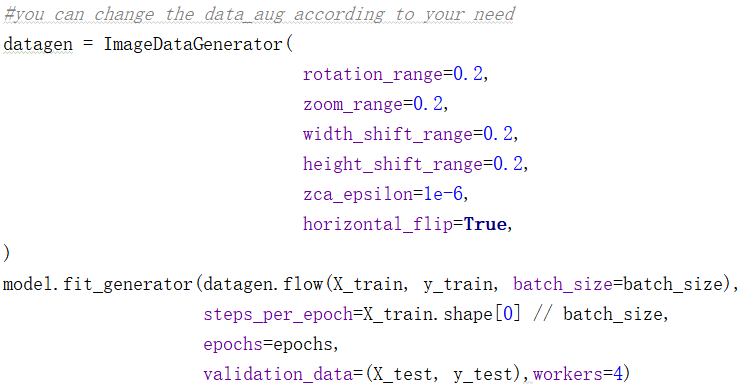

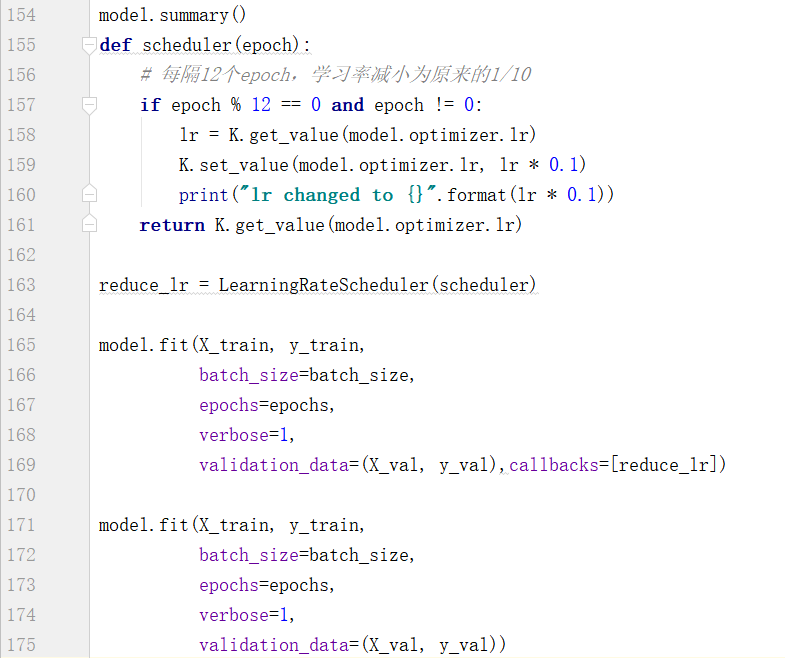
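Since the augmentation code itself is not shown, the following is only a hypothetical sketch of the kind of transform involved: a random translation plus an optional horizontal flip, written in plain Python on a 2-D list of pixels. Every name and setting in it (`shift_image`, `random_augment`, `max_shift=2`, `flip_prob=0.5`) is an assumption, not the article's configuration. Because every epoch sees freshly perturbed images, the training task gets harder, which fits the slower convergence described above. The last line checks that 429 steps per epoch matches 55,000 training images at a batch size of 128; that batch size is inferred from the log, not stated in the article.

```python
import random

def shift_image(img, dx, dy, fill=0):
    """Shift a 2-D image (a list of rows) right by dx and down by dy, padding with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            # Pixels shifted in from outside the image keep the fill value.
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = img[src_y][src_x]
    return out

def random_augment(img, max_shift=2, flip_prob=0.5, rng=random):
    """Hypothetical policy: random translation of up to max_shift pixels, then maybe a horizontal flip."""
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    out = shift_image(img, dx, dy)
    if rng.random() < flip_prob:
        out = [row[::-1] for row in out]
    return out

rng = random.Random(42)
image = [[(x + y) % 256 for x in range(28)] for y in range(28)]  # dummy 28x28 image
augmented = random_augment(image, rng=rng)

# Assumed batch size of 128: 55000 // 128 steps per epoch.
steps_per_epoch = 55000 // 128
print(steps_per_epoch)
```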

Next, I adjusted the model's learning rate. The initial learning rate is 1e-3.

Every 12 epochs, the learning rate is cut to 1/10 of its previous value, for 36 epochs in total. The code and results are as follows:

```
55000/55000 [==============================] - 3s 53us/step - loss: 0.1980 - acc: 0.9288 - val_loss: 0.2026 - val_acc: 0.9266
Test loss: 0.22499265049099923
Test accuracy: 0.9216
Model saved
Select the first 9 images
```

Accuracy again improved noticeably, reaching a test accuracy of 0.9216 (up from 0.9133 in the dropout run).
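The step schedule described above (start at 1e-3, divide by 10 every 12 epochs, 36 epochs in total) can be written as a small function. This is a plain-Python sketch, not the article's code; the constant and function names are hypothetical.

```python
INITIAL_LR = 1e-3      # starting learning rate stated in the article
DROP = 0.1             # multiply by 1/10 ...
EPOCHS_PER_DROP = 12   # ... every 12 epochs
TOTAL_EPOCHS = 36

def step_decay(epoch, lr=None):
    """Return the learning rate for a 0-indexed epoch.

    `lr` (the optimizer's current rate) is accepted so the function works with
    both schedule(epoch) and schedule(epoch, lr) call styles, but it is ignored:
    the rate is always recomputed from INITIAL_LR, so the drops never compound.
    """
    return INITIAL_LR * DROP ** (epoch // EPOCHS_PER_DROP)

schedule = [step_decay(e) for e in range(TOTAL_EPOCHS)]
for e in (0, 11, 12, 23, 24, 35):
    print(e, schedule[e])
```

In Keras, a function like this would typically be wrapped as `keras.callbacks.LearningRateScheduler(step_decay)` and passed in the `callbacks` list of `model.fit`. Newer Keras versions call the schedule as `schedule(epoch, lr)`, while some older ones call `schedule(epoch)`; accepting and ignoring `lr` keeps the sketch correct under both.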

Time is limited, so here is the GitHub link to all of the complete code: https://github.com/air-y/pythontrain_cnn

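(PS: If you're interested, try tuning it yourself, keeping the basic structure of two convolutional layers plus fully connected layers while pushing the accuracy as high as you can, and let's compare notes. It's been a long time since I last used Keras; I've been using PyTorch all along.)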