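The Fashion-MNIST dataset (`fashion_mnist`) is the same size as the MNIST handwritten-digit dataset: 60,000 training images and 10,000 test images.

It is also a 10-class classification problem, and each image is likewise a 28 × 28 single-channel (grayscale) image.

This program trains a 5-layer fully connected network with the Adam optimizer, using cross-entropy as the loss.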

Details to watch out for

Preprocess the data and convert the types. The training data needs `shuffle` and `batch`; the test data only needs to be batched.
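Here is a minimal sketch of that pipeline. It assumes small random uint8 arrays as a stand-in for `datasets.fashion_mnist.load_data()`, and the 300-sample size is arbitrary. It shows that `map(preprocess)` converts the dtypes, that the training set is shuffled and batched, and that the test-style set is only batched:

```python
import numpy as np
import tensorflow as tf

def preprocess(x, y):
    # Scale uint8 pixels to [0, 1] floats and cast labels to int32
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y

# Assumed stand-in for Fashion-MNIST: 300 random 28x28 uint8 images, labels 0..9
rng = np.random.default_rng(0)
x_fake = rng.integers(0, 256, size=(300, 28, 28)).astype(np.uint8)
y_fake = rng.integers(0, 10, size=(300,)).astype(np.uint8)

BATCH_SIZE = 128
db_train = (tf.data.Dataset.from_tensor_slices((x_fake, y_fake))
            .map(preprocess)
            .shuffle(10000)      # reshuffle training samples on every pass
            .batch(BATCH_SIZE))
db_eval = (tf.data.Dataset.from_tensor_slices((x_fake, y_fake))
           .map(preprocess)
           .batch(BATCH_SIZE))   # evaluation needs no shuffling

xb, yb = next(iter(db_train))
print(tuple(xb.shape), xb.dtype.name, tuple(yb.shape), yb.dtype.name)
batch_sizes = [int(x.shape[0]) for x, _ in db_eval]
print(batch_sizes)  # [128, 128, 44]: the last batch holds the remainder
```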

During training, iterate over the `tf.data.Dataset` object `db` itself, not over an iterator created from it.
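The reason is that a `tf.data.Dataset` can be iterated any number of times. The iterator returned by `iter(db)`, which the script uses only to peek at one batch, can be consumed once. The toy check below uses `Dataset.range(5)` as an assumed stand-in for the real data:

```python
import tensorflow as tf

# Toy dataset: 5 elements, batch size 2 -> 3 batches per pass
db = tf.data.Dataset.range(5).batch(2)

# Looping over the Dataset itself starts a fresh pass every epoch
batches_per_epoch = []
for epoch in range(2):
    batches_per_epoch.append(sum(1 for _ in db))
print(batches_per_epoch)  # [3, 3]

# An iterator created once is exhausted after a single pass
db_iter = iter(db)
first_pass = sum(1 for _ in db_iter)
second_pass = sum(1 for _ in db_iter)
print(first_pass, second_pass)  # 3 0
```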

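`model.summary()` prints the network information. Before calling it, the model must be built with an input shape, e.g. `model.build([None, 28 * 28])`, because until then the Dense layers have no weights.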
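The sketch below rebuilds the script's five Dense layers. It checks `model.built` before and after `build`, and compares `count_params()` with a hand count in which each Dense layer contributes in × out kernel weights plus out biases:

```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential

# Same 5-layer architecture as the script, passed to Sequential as a list
model = Sequential([
    layers.Dense(256, activation=tf.nn.relu),
    layers.Dense(128, activation=tf.nn.relu),
    layers.Dense(64, activation=tf.nn.relu),
    layers.Dense(32, activation=tf.nn.relu),
    layers.Dense(10),
])
built_before = model.built      # False: input size unknown, so no weights yet

model.build([None, 28 * 28])    # batch size left open (None), 784 input features
model.summary()

# Hand count: in*out kernel weights + out biases per Dense layer
sizes = [(784, 256), (256, 128), (128, 64), (64, 32), (32, 10)]
expected = sum(i * o + o for i, o in sizes)
print(model.count_params(), expected)  # 244522 244522
```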

`Sequential` takes its layers as a single list.

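`losses.MSE` returns one value per sample, with shape `[b]`. Take its mean to get a scalar loss before computing gradients. `tape.gradient` on a `[b]` vector would implicitly sum it, which scales the gradient by the batch size.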
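The following is a small numeric sketch with made-up targets and probabilities for a batch of 2. It uses `tf.keras.losses.mean_squared_error`, the long-form name of MSE, which is chosen here as an assumption of portability across Keras versions. The second half shows why the mean matters: a non-scalar gradient target is summed, not averaged.

```python
import numpy as np
import tensorflow as tf

y = tf.constant([[0., 1.], [1., 0.]])          # one-hot targets, b = 2
prob = tf.constant([[0.2, 0.8], [0.6, 0.4]])   # made-up predicted probabilities

per_sample = tf.keras.losses.mean_squared_error(y, prob)  # shape [b]
loss = tf.reduce_mean(per_sample)                          # scalar
print(per_sample.numpy(), loss.numpy())  # approximately [0.04 0.16] 0.1

# Gradient of a [b]-shaped target vs. its mean
w = tf.Variable(2.0)
with tf.GradientTape(persistent=True) as tape:
    vec = w * tf.constant([1., 2., 3.])   # a [b]-shaped "loss"
    mean = tf.reduce_mean(vec)
g_vec = tape.gradient(vec, w)    # 6.0: the vector is summed (1 + 2 + 3)
g_mean = tape.gradient(mean, w)  # 2.0: averaged over the 3 elements
del tape
```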

For `categorical_crossentropy`, set `from_logits=True` and pass the logits as the second argument (`y_pred`).
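This sketch uses made-up logits to check that `from_logits=True` matches a hand-written softmax cross-entropy. It also shows that leaving the default `from_logits=False` on raw logits gives different, wrong values, because the loss then treats the logits as probabilities:

```python
import numpy as np
import tensorflow as tf

y = tf.constant([[0., 0., 1.], [0., 1., 0.]])               # one-hot labels
logits = tf.constant([[1.0, 2.0, 3.0], [2.0, 0.5, -1.0]])   # raw Dense(10)-style output

# Correct: the loss applies softmax to the logits internally
ce_logits = tf.keras.losses.categorical_crossentropy(y, logits, from_logits=True)
# Same quantity by hand: -sum(y * log(softmax(logits)))
ce_manual = -tf.reduce_sum(y * tf.nn.log_softmax(logits), axis=1)
# Wrong: logits treated as if they were already probabilities
ce_wrong = tf.keras.losses.categorical_crossentropy(y, logits)

print(ce_logits.numpy(), ce_manual.numpy(), ce_wrong.numpy())
```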

`optimizer.apply_gradients` expects (gradient, variable) pairs, so use `zip` to pair each gradient with the parameter it updates.
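Below is a one-step sketch with two scalar variables and plain SGD (lr = 0.1). SGD is swapped in for Adam so the update is easy to check by hand: w ← 3 − 0.1 · 6 = 2.4 and b ← 1 − 0.1 · 2 = 0.8. The variables and loss are illustrative assumptions.

```python
import tensorflow as tf

w = tf.Variable(3.0)
b = tf.Variable(1.0)
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

with tf.GradientTape() as tape:
    loss = w ** 2 + 2.0 * b        # dloss/dw = 2w = 6, dloss/db = 2
grads = tape.gradient(loss, [w, b])

# zip pairs each gradient with its variable: [(dw, w), (db, b)]
optimizer.apply_gradients(zip(grads, [w, b]))
print(w.numpy(), b.numpy())  # approximately 2.4 0.8
```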

The test labels `y` do not need `one_hot`, because they are compared directly with the predicted class indices.

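`argmax` returns `int64`, while the labels are `int32`, so cast the predictions to `int32` before comparing. The probabilities have shape `[b, 10]`, so take `argmax` along the second dimension (`axis=1`), which yields one class index per sample, shape `[b]`.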
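This sketch runs those steps on a made-up batch of 4 samples and 3 classes. It applies `argmax` to the logits directly. Softmax is monotonic, so this picks the same class as the script's `argmax` over probabilities. It also shows what the wrong axis would produce.

```python
import tensorflow as tf

logits = tf.constant([[0.1, 2.0, -1.0],
                      [3.0, 0.0, 0.5],
                      [0.2, 0.1, 0.9],
                      [1.0, 1.5, 0.0]])          # [b, classes], b = 4
y = tf.constant([1, 0, 1, 1], dtype=tf.int32)    # integer labels, not one-hot

pred64 = tf.argmax(logits, axis=1)     # best class per row: shape [b], dtype int64
pred = tf.cast(pred64, dtype=tf.int32) # tf.equal needs matching dtypes
correct = tf.cast(tf.equal(y, pred), dtype=tf.int32)
print(pred64.dtype.name, pred.numpy(), correct.numpy())  # int64 [1 0 2 1] [1 1 0 1]

wrong_axis = tf.argmax(logits, axis=0) # shape [classes]: best sample per class, not wanted
```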

`reduce_sum` returns a scalar tensor. Convert it with `int()` before adding it to `corr_num`.

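`x.shape` returns a `TensorShape`, which indexes like a **list** `[b, ...]`, so `x.shape[0]` is the batch size as an integer.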
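The sketch below accumulates accuracy across batches the way the script's test loop does. It uses made-up predictions and labels for 5 samples in batches of 2, so the last batch has only 1 sample. `int(tf.reduce_sum(...))` keeps `corr_num` a Python int, and `pred.shape[0]` adds each batch's true size, which is why the smaller final batch is counted correctly.

```python
import tensorflow as tf

# 5 samples, batch size 2 -> batches of 2, 2 and 1
labels = tf.constant([0, 1, 2, 1, 0], dtype=tf.int32)
preds = tf.constant([0, 1, 1, 1, 2], dtype=tf.int32)   # pretend predicted classes
db_test = tf.data.Dataset.from_tensor_slices((preds, labels)).batch(2)

corr_num, total_num = 0, 0
for pred, y in db_test:
    res = tf.cast(tf.equal(y, pred), dtype=tf.int32)
    corr_num += int(tf.reduce_sum(res))   # scalar tensor -> Python int
    total_num += pred.shape[0]            # TensorShape index -> Python int
acc = corr_num / total_num
print(corr_num, total_num, acc)  # 3 5 0.6
```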

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

def preprocess(x, y):

x = tf.cast(x, dtype=tf.float32) / 255.

y = tf.cast(y, dtype=tf.int32)

return x, y

(x, y), (x_test, y_test) = datasets.fashion_mnist.load_data()

print(x.shape, y.shape)

BATCH_SIZE = 128

# [[x1, y1], [x2, y2]....]

db = tf.data.Dataset.from_tensor_slices((x, y))

db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))

db = db.map(preprocess).shuffle(10000).batch(BATCH_SIZE)

db_test = db_test.map(preprocess).batch(BATCH_SIZE)

# train时,迭代的是原生db

db_iter = iter(db)

print(next(db_iter)[0].shape)

# squ 接收一个列表

model = Sequential([

layers.Dense(256, activation=tf.nn.relu), # [b, 784]@[784, 256] + [256] => [b, 256]

layers.Dense(128, activation=tf.nn.relu), # [b, 256]@[256, 128] + [128] => [b, 128]

layers.Dense(64, activation=tf.nn.relu), # [b, 128]@[128, 64] + [64] => [b, 64]

layers.Dense(32, activation=tf.nn.relu), # [b, 64]@[64, 32] + [32] => [b, 32]

layers.Dense(10) # logits [b, 32]@[32, 10] + [10]=> [b, 10]

])

model.build([None, 28 * 28])

model.summary() # 打印网络信息

optimizers = optimizers.Adam(learning_rate=1e-3)

def main():

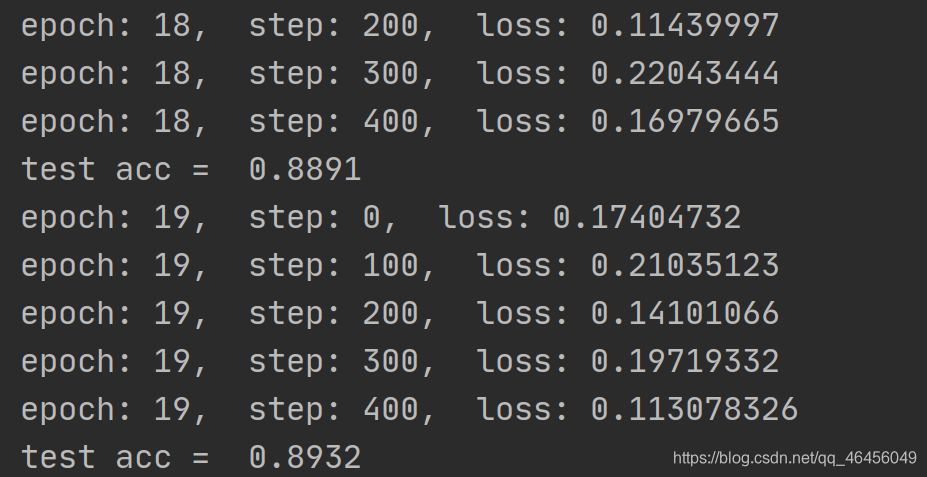

for epoch in range(1, 20):

for step, (x, y) in enumerate(db):

# [b, 28, 28] => [b, 784]

x = tf.reshape(x, [-1, 28 * 28])

with tf.GradientTape() as tape:

logits = model(x)

y = tf.one_hot(y, depth=10)

prob = tf.nn.softmax(logits)

# MSE => [b] 需要mean成标量才能运算

loss_mse = tf.reduce_mean(tf.losses.MSE(y, prob))

# cross_entryopy注意from_logits设置为True

loss_cros = tf.reduce_mean(tf.losses.categorical_crossentropy(y, logits, from_logits=True))

# 求gradient

grads = tape.gradient(loss_cros, model.trainable_variables)

# 此处需要zip,zip后[(w1_grad, w1),(w2_grad, w2),(w3_grad, w3)..]

optimizers.apply_gradients(zip(grads, model.trainable_variables))

if step % 100 == 0:

print("epoch: %s, step: %s, loss: %s" % (epoch, step, loss_cros.numpy()))

# test

# test集y不用作one_hot

corr_num = 0

total_num = 0

for (x, y) in db_test:

# x:[b, 28, 28] => [b, 784]

x = tf.reshape(x, [-1, 28*28])

logits = model(x)

prob = tf.nn.softmax(logits)

# prob:int64 [b, 10]

# 注意此处是在第二个维度求最大值

prob = tf.argmax(prob, axis=1)

prob = tf.cast(prob, dtype=tf.int32)

res = tf.cast(tf.equal(y, prob), dtype=tf.int32)

# reduce_sum求出来的时scalar

corr_num += int(tf.reduce_sum(res))

# shape返回的时列表[b, ..]

total_num += x.shape[0]

acc = corr_num / total_num

print("test acc = ", acc)

if __name__ == '__main__':

main()
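The script imports `metrics` but counts correct predictions by hand. One possible alternative is `tf.keras.metrics.Accuracy`, which accumulates the same correct/total ratio across `update_state` calls. In the sketch below, the two "batches" of labels and predicted class indices are made up for illustration:

```python
import tensorflow as tf
from tensorflow.keras import metrics

acc_meter = metrics.Accuracy()
# update_state(y_true, y_pred) with integer labels and predicted class indices
acc_meter.update_state(tf.constant([0, 1, 2]), tf.constant([0, 1, 1]))  # 2 of 3 correct
acc_meter.update_state(tf.constant([1, 0]), tf.constant([1, 2]))        # 1 of 2 correct
result = float(acc_meter.result())
print(result)  # approximately 0.6 (3 of 5)
acc_meter.reset_state()  # clear before the next epoch's evaluation
```

In the training script, this would mean calling `update_state(y, pred)` inside the test loop and `reset_state()` once per epoch. The manual `corr_num / total_num` version computes the same value.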