Contents:

1. Cross-entropy

2. Dropout

3. Optimization

4. Optimizers

1. Cross-entropy

Change the loss function to cross-entropy:

```python
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=prediction))
```
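Cross-entropy is expected to train faster for the following reason. With a softmax output, the gradient of cross-entropy with respect to the logits is simply `softmax(z) - y`. The quadratic cost, by contrast, is multiplied by the softmax Jacobian, which shrinks towards zero when the output saturates. The NumPy sketch below compares the two gradients for a single confidently wrong sample. The helper names and the example logits are illustrative assumptions; the real script works on batches of 10-class MNIST outputs.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

def quadratic_cost(y, z):
    # Per-sample version of tf.reduce_mean(tf.square(y - prediction))
    return np.mean((y - softmax(z)) ** 2)

def cross_entropy(y, z):
    # Per-sample version of softmax_cross_entropy_with_logits(labels=y, logits=z)
    return -np.sum(y * np.log(softmax(z)))

def grad_quadratic(y, z):
    a = softmax(z)
    dL_da = 2.0 * (a - y) / a.size          # derivative of the mean of squares
    jacobian = np.diag(a) - np.outer(a, a)  # d softmax(z) / dz (symmetric)
    return jacobian @ dL_da                 # chain rule back to the logits

def grad_cross_entropy(y, z):
    # softmax and log cancel, leaving a simple difference (y is one-hot)
    return softmax(z) - y

y = np.array([1.0, 0.0, 0.0])  # true class is 0
z = np.array([0.0, 0.0, 8.0])  # logits: the model is confidently wrong
print(np.linalg.norm(grad_quadratic(y, z)))      # tiny
print(np.linalg.norm(grad_cross_entropy(y, z)))  # about 1.41
```

For this sample, the cross-entropy gradient is more than a thousand times larger than the quadratic one. A confidently wrong output is therefore pushed back much harder.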

Comparing the results of the two runs:

Quadratic cost function:

```python
loss = tf.reduce_mean(tf.square(y - prediction))
```

```
Iter0,Testing Accuracy0.9259
Iter1,Testing Accuracy0.9291
Iter2,Testing Accuracy0.9302
Iter3,Testing Accuracy0.9307
Iter4,Testing Accuracy0.9309
Iter5,Testing Accuracy0.9314
Iter6,Testing Accuracy0.9309
Iter7,Testing Accuracy0.9308
Iter8,Testing Accuracy0.9307
Iter9,Testing Accuracy0.9308
Iter10,Testing Accuracy0.9297
Iter11,Testing Accuracy0.9297
Iter12,Testing Accuracy0.9296
Iter13,Testing Accuracy0.9302
Iter14,Testing Accuracy0.9301
Iter15,Testing Accuracy0.9296
Iter16,Testing Accuracy0.9303
Iter17,Testing Accuracy0.9309
Iter18,Testing Accuracy0.9314
Iter19,Testing Accuracy0.9311
Iter20,Testing Accuracy0.9314
```

Cross-entropy:

```python
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=prediction))
```

```
Iter0,Testing Accuracy0.9283
Iter1,Testing Accuracy0.9297
Iter2,Testing Accuracy0.9301
Iter3,Testing Accuracy0.93
Iter4,Testing Accuracy0.9299
Iter5,Testing Accuracy0.93
Iter6,Testing Accuracy0.93
Iter7,Testing Accuracy0.9296
Iter8,Testing Accuracy0.93
Iter9,Testing Accuracy0.9301
Iter10,Testing Accuracy0.9294
Iter11,Testing Accuracy0.9297
Iter12,Testing Accuracy0.9294
Iter13,Testing Accuracy0.9295
Iter14,Testing Accuracy0.9295
Iter15,Testing Accuracy0.9294
Iter16,Testing Accuracy0.929
Iter17,Testing Accuracy0.9293
Iter18,Testing Accuracy0.9287
Iter19,Testing Accuracy0.9289
Iter20,Testing Accuracy0.9287
```

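(In theory, cross-entropy should train better than the quadratic cost, but these two runs do not show it. The quadratic cost reaches 0.9314 test accuracy, while the cross-entropy run peaks at 0.9301. A likely reason is that `prediction` is already the output of `tf.nn.softmax`. Passing it as `logits` therefore applies softmax twice, which cancels the advantage cross-entropy is supposed to have.)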
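You can check numerically what happens when softmax probabilities are passed where `softmax_cross_entropy_with_logits` expects raw scores. The sketch below is a minimal NumPy re-implementation of the per-sample formula, not TensorFlow itself, and the example scores are assumptions. The second softmax only ever sees inputs in [0, 1]. As a result, even a perfect 10-class prediction cannot give the true class more than `e / (e + 9)` ≈ 0.23, and the loss cannot drop below about 1.46.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

def softmax_cross_entropy(labels, logits):
    # -sum(y * log(softmax(logits))): the per-sample formula the TF op computes
    return -np.sum(labels * np.log(softmax(logits)))

y = np.zeros(10)
y[3] = 1.0       # true class is 3
z = np.zeros(10)
z[3] = 10.0      # raw scores (logits): confidently correct
p = softmax(z)   # what `prediction` holds in the script

print(softmax_cross_entropy(y, z))  # logits passed in: loss close to 0
print(softmax_cross_entropy(y, p))  # probabilities passed in: loss stuck near 1.46
```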

2. Dropout
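Dropout randomly zeroes hidden activations during training. In TensorFlow 1.x, `tf.nn.dropout(x, keep_prob)` keeps each element with probability `keep_prob` and scales the kept elements by `1 / keep_prob`. The expected activation therefore stays the same, and no rescaling is needed at test time. Below is a minimal NumPy sketch of that behaviour. The `dropout` helper and its `rng` argument are illustrative and not part of the original script.

```python
import numpy as np

def dropout(x, keep_prob, rng):
    """Inverted dropout: keep each element with probability keep_prob, scale survivors by 1/keep_prob."""
    mask = rng.random(x.shape) < keep_prob  # True where the neuron is kept
    return np.where(mask, x / keep_prob, 0.0)

rng = np.random.default_rng(0)
activations = np.ones((1000, 500))  # stand-in for a hidden layer's output
print(dropout(activations, 1.0, rng).mean())  # exactly 1.0: nothing is dropped
print(dropout(activations, 0.7, rng).mean())  # about 1.0: ~30% zeroed, the rest scaled up
```

Because of the `1 / keep_prob` scaling, feeding `keep_prob: 1.0` switches dropout off completely. This is why accuracy is evaluated with that value.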

```python
# -*- coding:utf-8 -*-
# author: aihan time: 2019/1/21
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow info and warning logs

# TensorFlow 1.x API (tf.placeholder, tf.Session, tensorflow.examples.tutorials)
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# Size of each batch
batch_size = 100
# Total number of batches per epoch
n_batch = mnist.train.num_examples // batch_size

x = tf.placeholder(tf.float32, [None, 784])
y = tf.placeholder(tf.float32, [None, 10])
# Probability that each neuron is kept by dropout
keep_prob = tf.placeholder(tf.float32)

# Build the network: first hidden layer
W1 = tf.Variable(tf.truncated_normal([784, 500], stddev=0.1))
b1 = tf.Variable(tf.zeros([500]) + 0.1)
L1 = tf.nn.tanh(tf.matmul(x, W1) + b1)  # tanh activation
L1_drop = tf.nn.dropout(L1, keep_prob)

# Second hidden layer
W2 = tf.Variable(tf.truncated_normal([500, 500], stddev=0.1))
b2 = tf.Variable(tf.zeros([500]) + 0.1)
L2 = tf.nn.tanh(tf.matmul(L1_drop, W2) + b2)  # tanh activation
L2_drop = tf.nn.dropout(L2, keep_prob)

# Third hidden layer
W3 = tf.Variable(tf.truncated_normal([500, 500], stddev=0.1))
b3 = tf.Variable(tf.zeros([500]) + 0.1)
L3 = tf.nn.tanh(tf.matmul(L2_drop, W3) + b3)  # tanh activation
L3_drop = tf.nn.dropout(L3, keep_prob)

# Output layer: keep the raw scores (logits) for the loss
W4 = tf.Variable(tf.truncated_normal([500, 10], stddev=0.1))
b4 = tf.Variable(tf.zeros([10]) + 0.1)
logits = tf.matmul(L3_drop, W4) + b4
prediction = tf.nn.softmax(logits)

# Single-layer version without hidden layers:
# W = tf.Variable(tf.zeros([784, 10]))
# b = tf.Variable(tf.zeros([10]))
# prediction = tf.nn.softmax(tf.matmul(x, W) + b)

# Quadratic cost:
# loss = tf.reduce_mean(tf.square(y - prediction))

# Cross-entropy as the cost function works better; it takes the logits,
# because softmax is applied inside the op
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))

# Gradient descent
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

# Initialize variables
init = tf.global_variables_initializer()

# Per-sample results (True/False) stored in a boolean tensor;
# argmax returns the index of the largest value along axis 1
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
# Accuracy
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

with tf.Session() as sess:
    sess.run(init)
    for epoch in range(10):
        for batch in range(n_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            # keep_prob: 1.0 keeps every neuron (no dropout);
            # a value below 1.0, e.g. 0.7, enables dropout during training
            sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys, keep_prob: 1.0})

        # Always evaluate with keep_prob: 1.0
        test_acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
        train_acc = sess.run(accuracy, feed_dict={x: mnist.train.images, y: mnist.train.labels, keep_prob: 1.0})
        print("Iter" + str(epoch) + ",Testing Accuracy" + str(test_acc) + ",Training Accuracy" + str(train_acc))
```

Results:

3. Optimization

SGD

Momentum

NAG

Adagrad

RMSprop

Adadelta
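The six methods listed above differ only in how they turn the gradient into a step. Below is a minimal NumPy sketch of their textbook update rules, applied to a toy quadratic f(x, y) = x² + 10y². The objective, starting point (3, 1), step count and hyperparameters (`lr`, `gamma`, `rho`, `eps`) are assumptions chosen for illustration, not values from the MNIST experiments.

```python
import numpy as np

# Toy objective f(x, y) = x^2 + 10*y^2 (an assumption for illustration), minimum at (0, 0)
def f(theta):
    return theta[0] ** 2 + 10.0 * theta[1] ** 2

def grad(theta):
    return np.array([2.0 * theta[0], 20.0 * theta[1]])

def run(method, steps=500, lr=0.04, gamma=0.9, rho=0.9, eps=1e-6):
    """Minimise f from (3, 1) with one of the listed update rules; return the final f."""
    theta = np.array([3.0, 1.0])
    v = np.zeros(2)   # velocity (Momentum, NAG)
    G = np.zeros(2)   # running sum of squared gradients (Adagrad)
    Eg = np.zeros(2)  # moving average of squared gradients (RMSprop, Adadelta)
    Ed = np.zeros(2)  # moving average of squared updates (Adadelta)
    for _ in range(steps):
        # NAG evaluates the gradient at the look-ahead point theta - gamma * v
        g = grad(theta - gamma * v) if method == "nag" else grad(theta)
        if method == "sgd":
            theta = theta - lr * g
        elif method in ("momentum", "nag"):
            v = gamma * v + lr * g
            theta = theta - v
        elif method == "adagrad":
            G = G + g ** 2
            theta = theta - lr * g / np.sqrt(G + eps)
        elif method == "rmsprop":
            Eg = rho * Eg + (1 - rho) * g ** 2
            theta = theta - lr * g / np.sqrt(Eg + eps)
        elif method == "adadelta":
            # No learning rate: the step is RMS(previous updates) / RMS(gradients)
            Eg = rho * Eg + (1 - rho) * g ** 2
            delta = -np.sqrt(Ed + eps) / np.sqrt(Eg + eps) * g
            Ed = rho * Ed + (1 - rho) * delta ** 2
            theta = theta + delta
        else:
            raise ValueError("unknown method: " + method)
    return f(theta)

for name in ["sgd", "momentum", "nag", "adagrad", "rmsprop", "adadelta"]:
    lr = 0.5 if name == "adagrad" else 0.04
    print(name, run(name, lr=lr))
```

Adagrad is given a larger `lr` here because its effective step shrinks as the accumulated `G` grows. Adadelta ignores `lr` altogether and starts with very small steps that grow over time.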

4. Optimizers
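The script in this section uses `tf.train.AdamOptimizer`, which is not among the methods listed in section 3. Adam keeps two moving averages: one of the gradient, as Momentum does, and one of the squared gradient, as RMSprop does. It also applies a bias correction for the first steps. Below is a minimal NumPy sketch of the update rule as written in the Adam paper, run on a toy quadratic x² + 10y² that is assumed for illustration. TensorFlow 1.x defaults to `learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08`. Its implementation folds the bias correction into the learning rate, so `epsilon` enters slightly differently than in this sketch.

```python
import numpy as np

def adam(grad_fn, theta, steps, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run `steps` Adam updates from `theta` and return the final parameters."""
    m = np.zeros_like(theta)  # moving average of gradients (first moment)
    v = np.zeros_like(theta)  # moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)  # bias correction: m and v start at zero
        v_hat = v / (1 - beta2 ** t)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta

def toy_grad(theta):
    # Gradient of the toy objective x^2 + 10*y^2 (assumed for illustration)
    return np.array([2.0 * theta[0], 20.0 * theta[1]])

start = np.array([3.0, 1.0])
print(adam(toy_grad, start, steps=1, lr=0.1))    # about [2.9, 0.9]
print(adam(toy_grad, start, steps=500, lr=0.1))
```

On the first step, the bias correction makes the update almost exactly `lr` in each coordinate, whatever the gradient's scale.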

```python
# -*- coding:utf-8 -*-
# author: aihan time: 2019/1/22
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow info and warning logs

# TensorFlow 1.x API (tf.placeholder, tf.Session, tensorflow.examples.tutorials)
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

batch_size = 100
# Number of batches per epoch
n_batch = mnist.train.num_examples // batch_size

x = tf.placeholder(tf.float32, [None, 784])
y = tf.placeholder(tf.float32, [None, 10])

# Single-layer softmax regression
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))
logits = tf.matmul(x, W) + b
prediction = tf.nn.softmax(logits)

# Cross-entropy on the raw logits (softmax is applied inside the op)
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))

# train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

# Adam optimizer with learning rate 1e-2, i.e. 10 to the power -2
train_step = tf.train.AdamOptimizer(1e-2).minimize(loss)

init = tf.global_variables_initializer()

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

with tf.Session() as sess:
    sess.run(init)
    for epoch in range(21):
        for batch in range(n_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})

        acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print("Iter" + str(epoch) + ",Testing Accuracy" + str(acc))
```

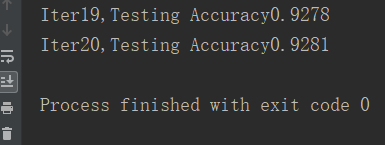

Results: