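- Learning rate: determines the size of each parameter update. If the learning rate is too large, the parameters being optimized oscillate around the minimum and fail to converge; if it is too small, they converge slowly.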

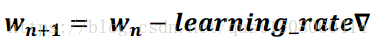

During training, the parameters are updated in the direction of gradient descent of the loss function. The update formula is:

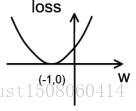

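Suppose the loss function is loss = (w + 1)^2. Its gradient, the derivative of loss with respect to w, is ∇ = 2w + 2. With an initial parameter value of 5 and a learning rate of 0.2, the parameter is updated as follows: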

Iteration 1: w = 5, updated to 5 - 0.2 * (2 * 5 + 2) = 2.6

Iteration 2: w = 2.6, updated to 2.6 - 0.2 * (2 * 2.6 + 2) = 1.16

Iteration 3: w = 1.16, updated to 1.16 - 0.2 * (2 * 1.16 + 2) = 0.296

Iteration 4: w = 0.296
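The hand calculation above can be checked with a few lines of plain Python. This is only a minimal sketch; the helper names `grad` and `gradient_descent` are made up for illustration and are not part of TensorFlow.

```python
def grad(w):
    """Derivative of loss = (w + 1)^2 with respect to w."""
    return 2 * w + 2


def gradient_descent(w0, learning_rate, steps):
    """Return the parameter values [w0, w1, ..., w_steps]."""
    history = [w0]
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)  # w_new = w_old - lr * gradient
        history.append(w)
    return history


# Same settings as the worked example: start at 5, learning rate 0.2.
history = gradient_descent(w0=5.0, learning_rate=0.2, steps=3)
for n, w in enumerate(history, start=1):
    print("iteration %d: w = %.3f, loss = %.4f" % (n, w, (w + 1) ** 2))
```

The four values in `history` correspond to the four iterations listed above (5, 2.6, 1.16, 0.296).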

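The graph of the loss function loss = (w + 1)^2 is:

As the graph shows, the loss reaches its minimum at the point (-1, 0), where the derivative of the loss is 0, so the final parameter value is w = -1. The code is as follows: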

```python
#coding:utf-8
# Let the loss function be loss = (w + 1)^2, with w initialized to 5.
# Backpropagation finds the optimal w, i.e. the w that minimizes loss.
import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.train.*)

w = tf.Variable(tf.constant(5, dtype=tf.float32))
loss = tf.square(w + 1)
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    for i in range(40):
        sess.run(train_step)
        w_val = sess.run(w)
        loss_val = sess.run(loss)
        print("After %s steps: w is %f, loss is %f." % (i, w_val, loss_val))
```

The output is as follows:

```
After 35 steps: w is -1.000000, loss is 0.000000.
After 36 steps: w is -1.000000, loss is 0.000000.
After 37 steps: w is -1.000000, loss is 0.000000.
After 38 steps: w is -1.000000, loss is 0.000000.
After 39 steps: w is -1.000000, loss is 0.000000.
```

If the learning rate in the code above is set to 1, the output is:

```
After 35 steps: w is 5.000000, loss is 36.000000.
After 36 steps: w is -7.000000, loss is 36.000000.
After 37 steps: w is 5.000000, loss is 36.000000.
After 38 steps: w is -7.000000, loss is 36.000000.
After 39 steps: w is 5.000000, loss is 36.000000.
```

If the learning rate is set to 0.001, the output is:

```
After 35 steps: w is 4.582785, loss is 31.167484.
After 36 steps: w is 4.571619, loss is 31.042938.
After 37 steps: w is 4.560476, loss is 30.918892.
After 38 steps: w is 4.549355, loss is 30.795341.
After 39 steps: w is 4.538256, loss is 30.672281.
```
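The three runs differ only in the learning rate, so the comparison can be reproduced without TensorFlow. The following is a rough sketch in plain Python, assuming the same loss, the same start value of 5 and 40 steps; the function name `final_w` is hypothetical.

```python
def final_w(learning_rate, steps=40, w0=5.0):
    """Run plain gradient descent on loss = (w + 1)^2 and return the last w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * (2 * w + 2)  # gradient of (w + 1)^2 is 2w + 2
    return w


# The three learning rates tried in the text.
for lr in (0.2, 1.0, 0.001):
    w = final_w(lr)
    print("lr = %-5s -> w = %f, loss = %f" % (lr, w, (w + 1) ** 2))
```

With a learning rate of 1, each update maps w to w - (2w + 2) = -w - 2, which is why the parameter jumps back and forth between 5 and -7 and the loss stays at 36.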

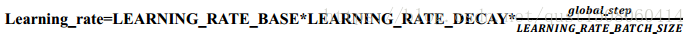

- Exponentially decaying learning rate: the learning rate is updated dynamically as the number of training steps grows.

The learning rate is computed as follows:
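Written with the constant names used in the code in this post, the decay that `tf.train.exponential_decay` is documented to compute can be sketched as:

$$
\text{learning\_rate} = \text{LEARNING\_RATE\_BASE} \times \text{LEARNING\_RATE\_DECAY}^{\,\text{global\_step} / \text{LEARNING\_RATE\_STEP}}
$$

When staircase decay is used, the exponent global_step / LEARNING_RATE_STEP is truncated to an integer before the power is taken.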

Expressed with TensorFlow functions:

```python
global_step = tf.Variable(0, trainable=False)

learning_rate = tf.train.exponential_decay(
    LEARNING_RATE_BASE,
    global_step,
    LEARNING_RATE_STEP,
    LEARNING_RATE_DECAY,
    staircase=True)  # or staircase=False
```

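Here LEARNING_RATE_BASE is the initial learning rate, LEARNING_RATE_DECAY is the decay rate, and global_step records the current number of training steps and is marked as non-trainable. LEARNING_RATE_STEP controls how often the learning rate is updated; it is usually set to the total number of samples in the dataset divided by the number of samples fed per batch. When staircase is True, global_step / LEARNING_RATE_STEP is truncated to an integer and the learning rate decays in discrete steps; when staircase is False, the learning rate follows a smooth, decreasing curve.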
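The difference between the two staircase settings is easier to see with numbers. Below is a minimal plain-Python stand-in for the schedule, not TensorFlow's own implementation; the function name `decayed_rate` and the decay interval of 10 steps are assumptions chosen only so that the two modes differ visibly.

```python
def decayed_rate(base, global_step, decay_steps, decay_rate, staircase=False):
    """Return base * decay_rate ** (global_step / decay_steps)."""
    if staircase:
        # Integer division: the rate only changes every decay_steps steps.
        exponent = global_step // decay_steps
    else:
        # True division: the rate shrinks a little on every step.
        exponent = global_step / float(decay_steps)
    return base * decay_rate ** exponent


# Hypothetical settings: base rate 0.1, decay 0.99 every 10 steps.
for step in (0, 5, 10, 15, 20):
    smooth = decayed_rate(0.1, step, 10, 0.99)
    stairs = decayed_rate(0.1, step, 10, 0.99, staircase=True)
    print("step %2d: smooth = %.6f, staircase = %.6f" % (step, smooth, stairs))
```

At steps that are multiples of the interval the two modes agree; in between, the staircase value stays flat while the smooth value keeps shrinking.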

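For example, the model below is not trained with a fixed learning rate but with an exponentially decaying one. The initial learning rate is set to 0.1, the decay rate to 0.99, and LEARNING_RATE_STEP to 1, so the learning rate is updated after every training step.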

```python
#coding:utf-8
# Let the loss function be loss = (w + 1)^2, with w initialized to 5.
# Backpropagation finds the optimal w, i.e. the w that minimizes loss.
# An exponentially decaying learning rate descends quickly in early iterations,
# so good convergence can be reached in fewer training steps.
import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.train.*)

LEARNING_RATE_BASE = 0.1    # initial learning rate
LEARNING_RATE_DECAY = 0.99  # learning rate decay rate
# Number of batches fed between learning rate updates;
# usually total number of samples / BATCH_SIZE
LEARNING_RATE_STEP = 1

# Counter of batches run so far; starts at 0 and is not trainable
global_step = tf.Variable(0, trainable=False)
# Define the exponentially decaying learning rate
learning_rate = tf.train.exponential_decay(
    LEARNING_RATE_BASE, global_step, LEARNING_RATE_STEP,
    LEARNING_RATE_DECAY, staircase=True)

w = tf.Variable(tf.constant(5, dtype=tf.float32))
loss = tf.square(w + 1)
train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
    loss, global_step=global_step)

with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    for i in range(40):
        sess.run(train_step)
        learning_rate_val = sess.run(learning_rate)
        global_step_val = sess.run(global_step)
        w_val = sess.run(w)
        loss_val = sess.run(loss)
        print("After %s steps: global_step is %f, w is %f, learning rate is %f, loss is %f"
              % (i, global_step_val, w_val, learning_rate_val, loss_val))
```

The output is as follows:

```
After 35 steps: global_step is 36.000000, w is -0.992297, learning rate is 0.069641, loss is 0.000059
After 36 steps: global_step is 37.000000, w is -0.993369, learning rate is 0.068945, loss is 0.000044
After 37 steps: global_step is 38.000000, w is -0.994284, learning rate is 0.068255, loss is 0.000033
After 38 steps: global_step is 39.000000, w is -0.995064, learning rate is 0.067573, loss is 0.000024
After 39 steps: global_step is 40.000000, w is -0.995731, learning rate is 0.066897, loss is 0.000018
```

The results show that the learning rate keeps decreasing as the number of training steps increases.
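To see how the decaying rate and the parameter update interact, the same run can be traced in plain Python. This is a sketch under one stated assumption: each update uses the rate computed from the value of global_step before it is incremented, which is consistent with the numbers printed above. The variable `next_lr` is introduced here only for illustration.

```python
LEARNING_RATE_BASE = 0.1
LEARNING_RATE_DECAY = 0.99
LEARNING_RATE_STEP = 1

w = 5.0
global_step = 0
for i in range(40):
    # Staircase decay: integer part of global_step / LEARNING_RATE_STEP.
    # Assumption: the rate is read before global_step is incremented.
    lr = LEARNING_RATE_BASE * LEARNING_RATE_DECAY ** (global_step // LEARNING_RATE_STEP)
    w -= lr * (2 * w + 2)  # gradient of (w + 1)^2 is 2w + 2
    global_step += 1       # one increment per parameter update

# Rate that the next update would use; this is the value reported after a step.
next_lr = LEARNING_RATE_BASE * LEARNING_RATE_DECAY ** (global_step // LEARNING_RATE_STEP)
print("global_step = %d, w = %f, learning rate = %f, loss = %f"
      % (global_step, w, next_lr, (w + 1) ** 2))
```

Small differences in the last digits compared with the TensorFlow run are possible, because TensorFlow computes in float32 while Python floats are double precision.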

TensorFlow Notes: Neural Network Optimization (Learning Rate)


Reposted from blog.csdn.net/qust1508060414/article/details/81268722
