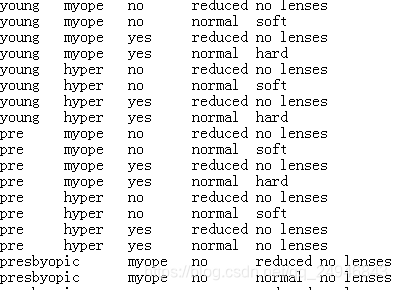

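The contact lenses dataset is a well-known dataset. It contains observations of patients' eye conditions together with the type of contact lenses their doctors recommended. The lens types are hard, soft, and no lenses (the patient should not wear contact lenses). The dataset is shown in the figure. The columns are:

- 'age': the patient's age.
- 'prescript': the spectacle prescription (myope or hyper).
- 'astigmatic': whether the patient is astigmatic.
- 'tearRate': the tear production rate.
- The fifth column holds the recommended lens type, which serves as the class label.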

1. Import the dataset and convert it into a list
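The loading code below strips each line and splits it on tabs. A minimal alternative sketch uses the standard-library csv module with a tab delimiter. Here an inline two-line string stands in for lenses.txt so the snippet runs without the file. The string `sample` is a hypothetical excerpt in the same layout.

```python
import csv
import io

# Hypothetical two-line excerpt of lenses.txt (same tab-separated layout)
sample = "young\tmyope\tno\treduced\tno lenses\nyoung\tmyope\tno\tnormal\tsoft\n"

# csv.reader splits each line on tabs; "if row" skips blank lines,
# which csv.reader returns as empty lists
rows = [row for row in csv.reader(io.StringIO(sample), delimiter='\t') if row]
print(rows)
# [['young', 'myope', 'no', 'reduced', 'no lenses'], ['young', 'myope', 'no', 'normal', 'soft']]
```

For the real file, the reader would wrap `open('lenses.txt', newline='')`, which is the mode the csv documentation recommends.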

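```python
# Read the tab-separated file: one patient per line, five fields per patient
with open('lenses.txt') as fr:
    lenses = [line.strip().split('\t') for line in fr.readlines()]

# Names of the four feature columns; the fifth column is the class label
lensesLabels = ['age', 'prescript', 'astigmatic', 'tearRate']

lenses
```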

Output:

```
[['young', 'myope', 'no', 'reduced', 'no lenses'],
 ['young', 'myope', 'no', 'normal', 'soft'],
 ['young', 'myope', 'yes', 'reduced', 'no lenses'],
 ['young', 'myope', 'yes', 'normal', 'hard'],
 ['young', 'hyper', 'no', 'reduced', 'no lenses'],
 ['young', 'hyper', 'no', 'normal', 'soft'],
 ['young', 'hyper', 'yes', 'reduced', 'no lenses'],
 ['young', 'hyper', 'yes', 'normal', 'hard'],
 ['pre', 'myope', 'no', 'reduced', 'no lenses'],
 ['pre', 'myope', 'no', 'normal', 'soft'],
 ['pre', 'myope', 'yes', 'reduced', 'no lenses'],
 ['pre', 'myope', 'yes', 'normal', 'hard'],
 ['pre', 'hyper', 'no', 'reduced', 'no lenses'],
 ['pre', 'hyper', 'no', 'normal', 'soft'],
 ['pre', 'hyper', 'yes', 'reduced', 'no lenses'],
 ['pre', 'hyper', 'yes', 'normal', 'no lenses'],
 ['presbyopic', 'myope', 'no', 'reduced', 'no lenses'],
 ['presbyopic', 'myope', 'no', 'normal', 'no lenses'],
 ['presbyopic', 'myope', 'yes', 'reduced', 'no lenses'],
 ['presbyopic', 'myope', 'yes', 'normal', 'hard'],
 ['presbyopic', 'hyper', 'no', 'reduced', 'no lenses'],
 ['presbyopic', 'hyper', 'no', 'normal', 'soft'],
 ['presbyopic', 'hyper', 'yes', 'reduced', 'no lenses'],
 ['presbyopic', 'hyper', 'yes', 'normal', 'no lenses']]
```

2. Compute the Shannon entropy of the original data
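The function below computes the Shannon entropy of the class labels, where $p_k$ is the fraction of rows that belong to class $k$. As a worked check against the 24 rows listed above (15 'no lenses', 5 'soft', 4 'hard'):

$$
H(D) = -\sum_{k} p_k \log_2 p_k
= -\left(\frac{15}{24}\log_2\frac{15}{24} + \frac{5}{24}\log_2\frac{5}{24} + \frac{4}{24}\log_2\frac{4}{24}\right)
\approx 0.4238 + 0.4715 + 0.4308 \approx 1.3261
$$

This agrees with the value the function returns.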

```python
# Compute the Shannon entropy of the original data
import numpy as np  # used later, in step 7
from math import log

def shannonEntropy(dataSet):
    num = len(dataSet)
    classCount = {}
    for a in dataSet:
        label = a[-1]  # the last column is the class label
        classCount[label] = classCount.get(label, 0) + 1
    shannon = 0.0
    for key in classCount:
        prob = float(classCount[key]) / num
        shannon += -prob * log(prob, 2)  # Shannon entropy: H = -sum(p * log2(p))
    return shannon

shannonEntropy(lenses)
```

Output: 1.3260875253642983

3. Split the dataset
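The comment in splitDataSet below warns against removing the feature column with del. A row taken from the dataset is the same list object as the row in the original data, so deleting from it changes the original data as well. The following is a minimal demonstration on two rows. The helpers drop_column_with_slice and drop_column_with_del are hypothetical and exist only for illustration.

```python
# Two rows in the lenses layout
dataSet = [['young', 'myope', 'no', 'reduced', 'no lenses'],
           ['pre', 'hyper', 'yes', 'normal', 'no lenses']]

def drop_column_with_slice(rows, i):
    # Slicing builds a new list for every row; the input rows are untouched
    return [row[:i] + row[i + 1:] for row in rows]

def drop_column_with_del(rows, i):
    # del works in place on the caller's own row lists
    out = []
    for row in rows:
        del row[i]
        out.append(row)
    return out

sliced = drop_column_with_slice(dataSet, 0)
print(dataSet[0])  # ['young', 'myope', 'no', 'reduced', 'no lenses']  (unchanged)

deleted = drop_column_with_del(dataSet, 0)
print(dataSet[0])  # ['myope', 'no', 'reduced', 'no lenses']  (original modified)
```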

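```python
# Split the dataset
def splitDataSet(dataSet, feature_index, feature_value):
    """Return the rows whose feature `feature_index` equals `feature_value`,
    with that feature column removed."""
    subDataSet = []
    for b in dataSet:
        if b[feature_index] == feature_value:
            # Build a new row by slicing instead of deleting with del:
            # del would modify the rows of the original dataset
            temp = b[:feature_index]
            temp.extend(b[feature_index + 1:])
            subDataSet.append(temp)
    return subDataSet
```

4. Select the root node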
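The function below picks the feature with the largest information gain. Information gain is the drop in Shannon entropy $H$ obtained by splitting the data $D$ on feature $a$, where $D^v$ is the subset of rows with value $v$ for $a$:

$$
\mathrm{Gain}(D, a) = H(D) - \sum_{v} \frac{|D^v|}{|D|} H(D^v)
$$

The worked example below uses tearRate. The 12 'reduced' rows are all 'no lenses', so $H = 0$ for that subset. The 12 'normal' rows contain 5 'soft', 4 'hard' and 3 'no lenses', which gives:

$$
H(D^{\text{normal}}) = -\left(\frac{5}{12}\log_2\frac{5}{12} + \frac{4}{12}\log_2\frac{4}{12} + \frac{3}{12}\log_2\frac{3}{12}\right) \approx 1.5546
$$

$$
\mathrm{Gain}(D, \text{tearRate}) \approx 1.3261 - \left(\frac{12}{24}\cdot 0 + \frac{12}{24}\cdot 1.5546\right) \approx 0.5488
$$

The same calculation gives about 0.3770 for astigmatic, 0.0395 for prescript and 0.0394 for age. tearRate (index 3) therefore becomes the root, which is consistent with the tree built in step 5.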

```python
# Select the root node: the feature with the largest information gain
def selectRootNode(dataSet):
    baseEntropy = shannonEntropy(dataSet)  # entropy before splitting
    numFeatures = len(dataSet[0]) - 1      # number of features (last column is the label)
    maxInfoGain = 0.0
    bestFeature = 0
    for i in range(numFeatures):
        featList = [example[i] for example in dataSet]
        uniqVals = set(featList)
        newEntropy = 0.0
        # Weighted entropy of the subsets produced by splitting on feature i
        for j in uniqVals:
            subDataSet = splitDataSet(dataSet, i, j)
            prob = len(subDataSet) / float(len(dataSet))
            newEntropy += prob * shannonEntropy(subDataSet)
        infoGain = baseEntropy - newEntropy  # information gain
        if infoGain > maxInfoGain:
            maxInfoGain = infoGain
            bestFeature = i
    return bestFeature
```

5. Build the tree structure
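The createTree function called below is not defined in this section. What follows is a minimal sketch of a recursive ID3 tree builder, written as an assumption rather than as the author's code. It works as follows:

- It stops when all rows share one class.
- It falls back to a majority vote when no features remain, using majorityCnt, a hypothetical helper.
- Otherwise, it splits on the feature chosen by selectRootNode and recurses into each subset.

Compact versions of the three helpers from steps 2 to 4 are repeated so the block runs on its own. The sketch copies the label list instead of deleting from it, so the caller's lensesLabels is left unchanged.

```python
from collections import Counter
from math import log

# Compact versions of the helpers from steps 2-4, so this block runs alone
def shannonEntropy(dataSet):
    n = len(dataSet)
    counts = Counter(row[-1] for row in dataSet)  # class label -> number of rows
    return -sum(c / n * log(c / n, 2) for c in counts.values())

def splitDataSet(dataSet, i, value):
    # Rows whose feature i equals value, with column i removed (new lists via slicing)
    return [row[:i] + row[i + 1:] for row in dataSet if row[i] == value]

def selectRootNode(dataSet):
    base = shannonEntropy(dataSet)
    bestGain, bestFeature = 0.0, 0
    for i in range(len(dataSet[0]) - 1):      # last column is the label
        newEntropy = 0.0
        for v in set(row[i] for row in dataSet):
            sub = splitDataSet(dataSet, i, v)
            newEntropy += len(sub) / len(dataSet) * shannonEntropy(sub)
        gain = base - newEntropy
        if gain > bestGain:
            bestGain, bestFeature = gain, i
    return bestFeature

# Hypothetical reconstruction of createTree (recursive ID3)
def majorityCnt(classList):
    # Most common class label; used when no features are left to split on
    return Counter(classList).most_common(1)[0][0]

def createTree(dataSet, labels):
    classList = [row[-1] for row in dataSet]
    if classList.count(classList[0]) == len(classList):
        return classList[0]                   # pure node: its class becomes a leaf
    if len(dataSet[0]) == 1:
        return majorityCnt(classList)         # only the label column remains
    best = selectRootNode(dataSet)
    bestLabel = labels[best]
    subLabels = labels[:best] + labels[best + 1:]  # new list; caller's labels unchanged
    myTree = {bestLabel: {}}
    for value in set(row[best] for row in dataSet):
        subset = splitDataSet(dataSet, best, value)
        myTree[bestLabel][value] = createTree(subset, subLabels)
    return myTree
```

On the 24 lenses rows with the labels ['age', 'prescript', 'astigmatic', 'tearRate'], this sketch produces the same nested dictionary as the output shown for this step.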


```python
# Feature names in column order, passed to createTree with the dataset
lensesLabels = ['age', 'prescript', 'astigmatic', 'tearRate']
myTree = createTree(lenses, lensesLabels)
myTree
```

Output:

```
{'tearRate': {'normal': {'astigmatic': {'no': {'age': {'young': 'soft',
       'pre': 'soft',
       'presbyopic': {'prescript': {'hyper': 'soft', 'myope': 'no lenses'}}}},
     'yes': {'prescript': {'hyper': {'age': {'young': 'hard',
         'pre': 'no lenses',
         'presbyopic': 'no lenses'}},
       'myope': 'hard'}}}},
  'reduced': 'no lenses'}}
```

6. Classify with the tree
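The classifier below descends the nested dictionary recursively, handling one feature per call. The same walk can be sketched as a loop. This version, classify_iter (a hypothetical name), uses the tree printed in step 5 as a literal. It returns a caller-supplied default when a feature value has no branch in the tree.

```python
def classify_iter(tree, featLabels, testVec, default=None):
    """Follow the branches of a nested-dict decision tree until a leaf is reached."""
    node = tree
    while isinstance(node, dict):
        feat = next(iter(node))                  # the single key names the feature tested here
        value = testVec[featLabels.index(feat)]  # this sample's value for that feature
        branches = node[feat]
        if value not in branches:
            return default                       # no branch for this value
        node = branches[value]
    return node                                  # a leaf holds the class label

# Tree printed in step 5, copied as a literal
myTree = {'tearRate': {'reduced': 'no lenses',
                       'normal': {'astigmatic': {
                           'no': {'age': {'young': 'soft', 'pre': 'soft',
                                          'presbyopic': {'prescript': {'hyper': 'soft',
                                                                       'myope': 'no lenses'}}}},
                           'yes': {'prescript': {'myope': 'hard',
                                                 'hyper': {'age': {'young': 'hard',
                                                                   'pre': 'no lenses',
                                                                   'presbyopic': 'no lenses'}}}}}}}}
labels = ['age', 'prescript', 'astigmatic', 'tearRate']

print(classify_iter(myTree, labels, ['young', 'myope', 'yes', 'normal']))                # hard
print(classify_iter(myTree, labels, ['young', 'myope', 'yes', 'unknown'], default='?'))  # ?
```

A loop avoids one function call per tree level. For a tree this shallow, the choice is mostly about readability.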

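```python
def classifier(myTree, featLabels, testVec):
    firstFeat = list(myTree.keys())[0]       # feature tested at this node
    secondDict = myTree[firstFeat]           # feature value -> subtree or class label
    featIndex = featLabels.index(firstFeat)  # position of that feature in testVec
    classLabel = None                        # stays None if the value has no branch
    for key in secondDict.keys():
        if testVec[featIndex] == key:
            if isinstance(secondDict[key], dict):
                # Internal node: keep descending
                classLabel = classifier(secondDict[key], featLabels, testVec)
            else:
                # Leaf: the class label
                classLabel = secondDict[key]
    return classLabel

classifier(myTree, ['age', 'prescript', 'astigmatic', 'tearRate'], ['young', 'myope', 'yes', 'normal'])
```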

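Output: 'hard'

7. Draw the tree. Here the tree is trained with scikit-learn and drawn with Graphviz and pydotplus. Because scikit-learn's DecisionTreeClassifier expects numeric feature values, the data must first be encoded as numbers.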
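The encoding below uses chained conditional expressions, which silently map any unexpected value to the last code. The following sketch performs the same mapping with lookup tables and raises KeyError on an unknown value instead. The names codes and encode are hypothetical.

```python
import numpy as np

# Value -> integer code for each feature column, in the same order as the
# mapping used in this step
codes = [
    {'young': 0, 'pre': 1, 'presbyopic': 2},  # age
    {'myope': 0, 'hyper': 1},                 # prescript
    {'no': 0, 'yes': 1},                      # astigmatic
    {'reduced': 0, 'normal': 1},              # tearRate
]

def encode(rows):
    # One output row per input row; the class label (last column) is ignored
    return np.array([[codes[j][row[j]] for j in range(len(codes))] for row in rows])

sample = [['young', 'myope', 'no', 'reduced', 'no lenses'],
          ['presbyopic', 'hyper', 'yes', 'normal', 'no lenses']]
print(encode(sample))
# [[0 0 0 0]
#  [2 1 1 1]]
```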

```python
# Encode each attribute as an integer:
# age: 'young'=0, 'pre'=1, 'presbyopic'=2; prescript: 'myope'=0, 'hyper'=1;
# astigmatic: 'no'=0, 'yes'=1; tearRate: 'reduced'=0, 'normal'=1
a = np.array([0 if line[0] == 'young' else 1 if line[0] == 'pre' else 2 for line in lenses])
b = np.array([0 if line[1] == 'myope' else 1 for line in lenses])
c = np.array([0 if line[2] == 'no' else 1 for line in lenses])
d = np.array([0 if line[3] == 'reduced' else 1 for line in lenses])
e = [a, b, c, d]
data = np.array(e).T  # one row per patient, one column per feature
data
```

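Output:

```
array([[0, 0, 0, 0],
       [0, 0, 0, 1],
       [0, 0, 1, 0],
       [0, 0, 1, 1],
       [0, 1, 0, 0],
       [0, 1, 0, 1],
       [0, 1, 1, 0],
       [0, 1, 1, 1],
       [1, 0, 0, 0],
       [1, 0, 0, 1],
       [1, 0, 1, 0],
       [1, 0, 1, 1],
       [1, 1, 0, 0],
       [1, 1, 0, 1],
       [1, 1, 1, 0],
       [1, 1, 1, 1],
       [2, 0, 0, 0],
       [2, 0, 0, 1],
       [2, 0, 1, 0],
       [2, 0, 1, 1],
       [2, 1, 0, 0],
       [2, 1, 0, 1],
       [2, 1, 1, 0],
       [2, 1, 1, 1]])
```

```python
# Draw the tree
from sklearn import tree
import pydotplus

# Note: DecisionTreeClassifier splits on the Gini criterion by default;
# criterion='entropy' would use information gain as in the steps above
clf = tree.DecisionTreeClassifier()
target = np.array([line[-1] for line in lenses])  # class labels
clf = clf.fit(data, target)

# Export the fitted tree in DOT format and render it to a PDF
# (write_pdf needs the Graphviz "dot" program to be installed)
dot_data = tree.export_graphviz(clf, out_file=None,
                                feature_names=['age', 'prescript', 'astigmatic', 'tearRate'])
graph = pydotplus.graph_from_dot_data(dot_data)
graph.write_pdf("lenses.pdf")

# Classify with scikit-learn's decision tree
clf.predict(np.array([0, 0, 0, 1]).reshape(1, -1))
```

Output: array(['soft'], dtype='<U9')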