def loadDataSet():
    # Six short posts, each already split into a list of words
    postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                   ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                   ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                   ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                   ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                   ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
    classVec = [0, 1, 0, 1, 0, 1]  # hand-assigned labels: 1 = abusive, 0 = normal
    return postingList, classVec

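def creatVocabList(dataSet):
    VocabSet = set()  # a set guarantees the vocabulary holds no duplicate words
    for word in dataSet:  # each element of dataSet is one document (a list of words)
        VocabSet = VocabSet | set(word)  # '|' is the union of two sets
    return list(VocabSet)  # return the vocabulary as a list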

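def setOfWords2Vec(vocabList, inputSet):  # set-of-words model
    # inputSet is the sample to encode, e.g. postingList[0]
    returnVec = [0] * len(vocabList)  # start from an all-zero vector
    for word in inputSet:
        if word in vocabList:
            # vocabList.index(word) is the position of word in vocabList;
            # set that position to 1 when the word is in the vocabulary
            returnVec[vocabList.index(word)] = 1
        else:
            print("the word %s is not in VocabList" % word)
    return returnVec

# Returns the document vector. Each element is 0 or 1, meaning the corresponding
# vocabulary word is absent from or present in the input document.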
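The spam test later in this post calls `bagOfWords2Vec`, but its body is not shown. A minimal sketch, assuming it differs from `setOfWords2Vec` only in counting occurrences instead of flagging presence (the usual bag-of-words model). Silently skipping unknown words is also an assumption:

```python
def bagOfWords2Vec(vocabList, inputSet):
    """Bag-of-words model (assumed body): count how often each vocabulary word occurs."""
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] += 1  # a count, not just a 0/1 flag
    return returnVec


vocab = ['dog', 'stupid', 'my']
print(bagOfWords2Vec(vocab, ['stupid', 'dog', 'stupid', 'cat']))  # [1, 2, 0]
```

With the set-of-words model the same input would give `[1, 1, 0]`, since a repeated word is only recorded once.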

# Training: compute the probabilities

from numpy import *

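def trainNB(trainMatrix, trainLabel):  # training data matrix and training labels
    numTrainDocs = len(trainMatrix)  # number of training documents
    numWords = len(trainMatrix[0])  # vocabulary size, i.e. the number of attributes
    # P(v_j), v in {1, 0}: prior probability of an abusive document.
    # The task is binary, so the other prior is 1 - pAbusive.
    pAbusive = sum(trainLabel) / float(numTrainDocs)
    p0Num = ones(numWords)  # per-word counts in class 0, starting at 1 so no probability is zero
    p1Num = ones(numWords)
    p0Denom = 2.0  # total word count in class 0, starting at 2 to match
    p1Denom = 2.0
    for i in range(numTrainDocs):  # for every training document
        if trainLabel[i] == 1:  # for each class
            p1Num += trainMatrix[i]  # add this document's word counts
            p1Denom += sum(trainMatrix[i])  # add to the class's total word count
        else:
            p0Num += trainMatrix[i]
            p0Denom += sum(trainMatrix[i])
    # For each class and each word, compute the conditional probability:
    # divide every count by the total number of words in that class
    # (array / float does this element-wise), then take the log.
    p1Vect = log(p1Num / p1Denom)
    p0Vect = log(p0Num / p0Denom)
    return p0Vect, p1Vect, pAbusive  # two log-probability vectors and one prior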
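Two details of `trainNB` are easy to miss: the counts start at 1 (with denominators at 2) so that a word never seen in a class does not get probability zero, and the function returns logs because a product of many small probabilities underflows. A small sketch of both effects, using made-up numbers and only the standard library:

```python
import math

# 1) Without smoothing, a word never seen in a class gets probability 0,
#    which would zero out the whole product for that class.
counts = [3, 0, 1]                  # made-up per-word counts for one class
total = sum(counts)
unsmoothed = [c / total for c in counts]
smoothed = [(c + 1) / (total + 2) for c in counts]  # same initial values as trainNB
print(unsmoothed)
print(smoothed)

# 2) Multiplying many small probabilities underflows to 0.0;
#    summing their logs stays finite.
probs = [1e-5] * 100
product = 1.0
for p in probs:
    product *= p
log_sum = sum(math.log(p) for p in probs)
print(product)   # 0.0
print(log_sum)
```

Because the log is monotonic, comparing the log scores of the two classes picks the same class as comparing the raw products would.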

'''
data, label = loadDataSet()
vocab = creatVocabList(data)
#print(vocab)
trainMat = []
for i in data:
    trainMat.append(setOfWords2Vec(vocab, i))
p0v, p1v, pAb = trainNB(trainMat, label)
#print(p0v)
#print(p1v)
#print(pAb)
'''

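# Testing: the naive Bayes classifier
# P(v_j) * (product of the P(a_i | v_j))

def classifyNB(Vect2classify, p0Vec, p1Vec, pAb):  # naive Bayes classifier
    # Vect2classify is the document to classify, already converted to a vector
    p1 = sum(Vect2classify * p1Vec) + log(pAb)  # log(ab) = log(a) + log(b)
    p0 = sum(Vect2classify * p0Vec) + log(1 - pAb)  # v_NB in log form; '*' multiplies element-wise
    if p1 > p0:  # pick the class with the larger score
        return 1
    else:
        return 0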
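Written out, the comparison in `classifyNB` is the naive Bayes rule in log form. The symbol $x_i$ is introduced here for illustration and stands for the $i$-th entry of `Vect2classify`; $P(v_1)$ is `pAb` and $P(v_0)$ is `1 - pAb`:

```latex
\begin{aligned}
v_{NB} &= \arg\max_{v_j \in \{0,1\}} \; P(v_j) \prod_{i} P(a_i \mid v_j)^{x_i} \\
       &= \arg\max_{v_j \in \{0,1\}} \; \Big[ \log P(v_j) + \sum_{i} x_i \log P(a_i \mid v_j) \Big]
\end{aligned}
```

The sum $\sum_i x_i \log P(a_i \mid v_j)$ is what `sum(Vect2classify * p1Vec)` and `sum(Vect2classify * p0Vec)` compute, since `p1Vec` and `p0Vec` already hold the logs.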

def testingNB():
    data, label = loadDataSet()
    Vocab = creatVocabList(data)
    trainMat = []
    for i in data:
        trainMat.append(setOfWords2Vec(Vocab, i))
    p0Vec, p1Vec, pAb = trainNB(trainMat, label)

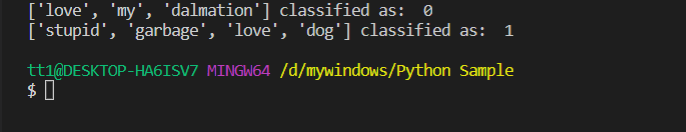

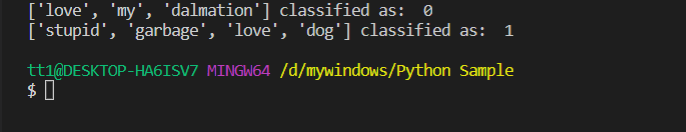

    test1 = ['love', 'my', 'dalmation']
    test2 = ['stupid', 'garbage', 'love', 'dog']
    testDoc1 = array(setOfWords2Vec(Vocab, test1))  # why use an array here? see note (a)
    testDoc2 = array(setOfWords2Vec(Vocab, test2))
    print(test1, 'classified as: ', classifyNB(testDoc1, p0Vec, p1Vec, pAb))
    print(test2, 'classified as: ', classifyNB(testDoc2, p0Vec, p1Vec, pAb))

testingNB()

from numpy import *

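# The helper functions below are reused here; their bodies are omitted in this listing.

def creatVocabList(dataSet): ...  # same as above

def bagOfWords2Vec(vocabList, inputSet): ...  # bag-of-words model; body not shown

def trainNB(trainMatrix, trainLabel): ...  # explained above

def classifyNB(Vect2classify, p0Vec, p1Vec, pAb): ...  # same as above

def textSeg(emailText): ...  # splits the email text into a word list; body not shown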
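`textSeg` is called by `spamTest`, but its body is not shown. A minimal sketch, assuming it splits the raw email text on non-word characters, lowercases the tokens, and drops tokens of two characters or fewer. All three choices are assumptions, not the original implementation:

```python
import re

def textSeg(emailText):
    """Hypothetical tokenizer: split on non-word characters, lowercase, drop short tokens."""
    tokens = re.split(r'\W+', emailText)
    return [tok.lower() for tok in tokens if len(tok) > 2]


print(textSeg('Hi Peter, buy CHEAP pills at http://example.com now!'))
# ['peter', 'buy', 'cheap', 'pills', 'http', 'example', 'com', 'now']
```

Dropping very short tokens also removes the empty strings that `re.split` leaves at the edges of the text.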

def spamTest():  # spam email test
    DocList = []  # one word list per email
    labelList = []  # class labels
    FullList = []  # all words from all emails in one flat list
    for i in range(1, 26):  # each folder holds 25 txt files
        emailText = open('email/spam/%d.txt' % i).read()
        wordList = textSeg(emailText)
        DocList.append(wordList)  # append vs. extend: explained in detail in the decision-tree part
        FullList.extend(wordList)
        labelList.append(1)  # hand-assigned label: spam
        emailText = open('email/ham/%d.txt' % i).read()
        wordList = textSeg(emailText)
        DocList.append(wordList)
        FullList.extend(wordList)
        labelList.append(0)  # hand-assigned label: ham
    Vocab = creatVocabList(DocList)  # build the vocabulary

    # Randomly split the samples: 50 in total, 10 held out for testing
    traingSetIndex = list(range(50))
    testSetIndex = []
    for i in range(10):
        # random position among the remaining training indices; the list shrinks each round
        index = int(random.uniform(0, len(traingSetIndex)))
        testSetIndex.append(traingSetIndex[index])  # move the chosen index into testSetIndex
        del traingSetIndex[index]  # and remove it from traingSetIndex
    trainMat = []
    trainLabel = []
    for i in traingSetIndex:
        trainMat.append(bagOfWords2Vec(Vocab, DocList[i]))
        trainLabel.append(labelList[i])
    p0Vec, p1Vec, pAb = trainNB(trainMat, trainLabel)

    errorcount = 0
    for i in testSetIndex:  # test on the held-out emails
        test = bagOfWords2Vec(Vocab, DocList[i])
        if classifyNB(test, p0Vec, p1Vec, pAb) != labelList[i]:
            errorcount += 1
    print('the error is: ', float(errorcount) / len(testSetIndex))
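The random hold-out step in `spamTest` can also be written with the standard library's `random.sample`, which draws distinct indices in one call. A sketch under that assumption; the helper name `holdOutSplit` and its `seed` parameter are hypothetical and not part of the original code:

```python
import random

def holdOutSplit(numSamples, numTest, seed=None):
    """Return (trainIndex, testIndex): disjoint index lists covering range(numSamples)."""
    rng = random.Random(seed)  # local generator, so a fixed seed makes the split repeatable
    testIndex = rng.sample(range(numSamples), numTest)  # distinct indices, no repeats
    testSet = set(testIndex)
    trainIndex = [i for i in range(numSamples) if i not in testSet]
    return trainIndex, testIndex


trainIndex, testIndex = holdOutSplit(50, 10, seed=0)
print(len(trainIndex), len(testIndex))  # 40 10
```

In a script that starts with `from numpy import *`, the name `random` refers to `numpy.random`, so the standard module would need to be imported under another name there (for example `import random as pyrandom`).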

From: Bayesian Learning -- MATLAB and Python Code Analysis (4)