1. Unit testing with MRUnit

Add the dependency:

<dependency>

<groupId>org.apache.mrunit</groupId>

<artifactId>mrunit</artifactId>

<version>1.1.0</version>

<classifier>hadoop2</classifier>

<scope>test</scope>

</dependency>

Define a record parser class that also validates the data:

public class NcdcRecordParser {

private static final int MISSING_TEMPERATURE = 9999;

private String year;

private int airTemperature;

private String quality;

public void parse(String record) {

// The year is at character offsets 15-18 (the end index of substring is exclusive)

year = record.substring(15, 19);

String airTemperatureString;

// Check the sign of the temperature

if (record.charAt(87) == '+') {

// Skip the leading '+'; the temperature digits are at offsets 88-91

airTemperatureString = record.substring(88, 92);

} else {

airTemperatureString = record.substring(87, 92);

}

airTemperature = Integer.parseInt(airTemperatureString);

// The quality code is the single character at offset 92

quality = record.substring(92, 93);

}

public void parse(Text record) {

parse(record.toString());

}

public boolean isValidTemperature() {

return airTemperature != MISSING_TEMPERATURE && quality.matches("[01459]");

}

public String getYear() {

return year;

}

public int getAirTemperature() {

return airTemperature;

}

}

The mapper class:

public class MaxTemperatureMapper

extends Mapper<LongWritable, Text, Text, IntWritable> {

private NcdcRecordParser parser = new NcdcRecordParser();

@Override

public void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

parser.parse(value);

if (parser.isValidTemperature()) {

context.write(new Text(parser.getYear()),

new IntWritable(parser.getAirTemperature()));

}

}

}

The mapper test class:

public class MaxTemperatureMapperTest {

// Negative temperature

@Test

public void processesValidRecord() throws IOException, InterruptedException {

Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +

// Year ^^^^

"99999V0203201N00261220001CN9999999N9-00111+99999999999");

// Temperature ^^^^^

new MapDriver<LongWritable, Text, Text, IntWritable>()

.withMapper(new MaxTemperatureMapper())

.withInput(new LongWritable(0), value)

.withOutput(new Text("1950"), new IntWritable(-11))

.runTest();

}

// Positive temperature

@Test

public void processesPositiveTemperatureRecord() throws IOException,

InterruptedException {

Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +

// Year ^^^^

"99999V0203201N00261220001CN9999999N9+00111+99999999999");

// Temperature ^^^^^

new MapDriver<LongWritable, Text, Text, IntWritable>()

.withMapper(new MaxTemperatureMapper())

.withInput(new LongWritable(0), value)

.withOutput(new Text("1950"), new IntWritable(11))

.runTest();

}

// Missing temperature (the sentinel value 9999), so no output is expected

@Test

public void ignoresMissingTemperatureRecord() throws IOException,

InterruptedException {

Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +

// Year ^^^^

"99999V0203201N00261220001CN9999999N9+99991+99999999999");

// Temperature ^^^^^

new MapDriver<LongWritable, Text, Text, IntWritable>()

.withMapper(new MaxTemperatureMapper())

.withInput(new LongWritable(0), value)

.runTest();

}

// Suspect quality code, so no output is expected

@Test

public void ignoresSuspectQualityRecord() throws IOException,

InterruptedException {

Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +

// Year ^^^^

"99999V0203201N00261220001CN9999999N9+00112+99999999999");

// Temperature ^^^^^

// Suspect quality ^

new MapDriver<LongWritable, Text, Text, IntWritable>()

.withMapper(new MaxTemperatureMapper())

.withInput(new LongWritable(0), value)

.runTest();

}

}

The reducer class:

public class MaxTemperatureReducer

extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

public void reduce(Text key, Iterable<IntWritable> values,

Context context)

throws IOException, InterruptedException {

int maxValue = Integer.MIN_VALUE;

for (IntWritable value : values) {

maxValue = Math.max(maxValue, value.get());

}

context.write(key, new IntWritable(maxValue));

}

}

The reducer test class:

public class MaxTemperatureReducerTest {

@Test

public void returnsMaximumIntegerInValues() throws IOException {

new ReduceDriver<Text, IntWritable, Text, IntWritable>()

.withReducer(new MaxTemperatureReducer())

.withInput(new Text("1950"),

Arrays.asList(new IntWritable(10), new IntWritable(5)))

.withOutput(new Text("1950"), new IntWritable(10))

.runTest();

}

}

2. Testing in a virtual machine environment
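The driver in this section registers MaxTemperatureReducer as the combiner as well as the reducer. That is only safe because taking a maximum is associative and commutative: the maximum of per-map-task maxima equals the maximum of all values. A minimal plain-Java sketch of that property follows; the class name `CombinerSketch` and the sample values are assumptions for illustration, and no Hadoop types are involved.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (no Hadoop types): why a max reducer can double as a combiner.
final class CombinerSketch {

    // Same fold as MaxTemperatureReducer.reduce, applied to plain ints.
    static int max(List<Integer> values) {
        int maxValue = Integer.MIN_VALUE;
        for (int value : values) {
            maxValue = Math.max(maxValue, value);
        }
        return maxValue;
    }

    public static void main(String[] args) {
        // Hypothetical outputs of two map tasks for the same year.
        List<Integer> firstMapOutput = Arrays.asList(0, 20, 10);
        List<Integer> secondMapOutput = Arrays.asList(25, 15);

        // Without a combiner the reducer sees all five values.
        int direct = max(Arrays.asList(0, 20, 10, 25, 15));

        // With a combiner each map task pre-aggregates, so the reducer sees two values.
        int combined = max(Arrays.asList(max(firstMapOutput), max(secondMapOutput)));

        System.out.println(direct + " " + combined); // prints "25 25"
    }
}
```

A function such as the mean does not have this property, so a reducer computing a mean could not be reused as a combiner in the same way.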

Implement a MapReduce driver using the Tool interface:

public class MaxTemperatureDriver extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

if (args.length != 2) {

System.err.printf("Usage: %s [generic options] <input> <output>\n",

getClass().getSimpleName());

ToolRunner.printGenericCommandUsage(System.err);

return -1;

}

// Job.getInstance replaces the Job constructor, which is deprecated in Hadoop 2
Job job = Job.getInstance(getConf(), "Max temperature");

job.setJarByClass(getClass());

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.setMapperClass(MaxTemperatureMapper.class);

job.setCombinerClass(MaxTemperatureReducer.class);

job.setReducerClass(MaxTemperatureReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

return job.waitForCompletion(true) ? 0 : 1;

}

public static void main(String[] args) throws Exception {

int exitCode = ToolRunner.run(new MaxTemperatureDriver(), args);

System.exit(exitCode);

}

}

Run it on the virtual machine:

./hadoop jar /usr/local/hadoop-1.0-SNAPSHOT.jar MaxTemperatureDriver file:///usr/local/sample.txt file:///usr/local/output

Verification:
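The tests in this part verify the job by skipping bookkeeping files whose names start with "_" and then comparing the remaining output file line by line against the expected content. A minimal sketch of that comparison in plain Java, using in-memory strings instead of files; the class `OutputComparisonSketch` and its methods are hypothetical helpers, not part of Hadoop or JUnit.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Plain-Java sketch of the verification idea: filter, then compare line by line.
final class OutputComparisonSketch {

    // Same rule as OutputLogFilter: names starting with "_" (such as _SUCCESS) are not job output.
    static boolean isOutputFile(String fileName) {
        return !fileName.startsWith("_");
    }

    // Returns the 1-based number of the first differing line, or 0 if both inputs match exactly.
    static int firstDifference(BufferedReader actual, BufferedReader expected) throws IOException {
        int lineNumber = 0;
        while (true) {
            lineNumber++;
            String actualLine = actual.readLine();
            String expectedLine = expected.readLine();
            if (actualLine == null && expectedLine == null) {
                return 0; // both ended at the same point
            }
            if (actualLine == null || !actualLine.equals(expectedLine)) {
                return lineNumber; // one side ended early or the lines differ
            }
        }
    }

    // Convenience overload for in-memory text.
    static int firstDifference(String actual, String expected) {
        try (BufferedReader a = new BufferedReader(new StringReader(actual));
             BufferedReader e = new BufferedReader(new StringReader(expected))) {
            return firstDifference(a, e);
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
    }

    public static void main(String[] args) {
        String expected = "1949\t111\n1950\t22\n";
        System.out.println(isOutputFile("_SUCCESS"));                     // prints "false"
        System.out.println(isOutputFile("part-r-00000"));                 // prints "true"
        System.out.println(firstDifference(expected, expected));          // prints "0"
        System.out.println(firstDifference("1949\t111\n", expected));     // prints "2"
    }
}
```

Unlike a loop that only walks the expected lines, this variant also reports the case where the actual output ends early without dereferencing a null line.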

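Run the test class locally and compare the job output with a local file (note: Hadoop must be configured on the local machine first):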

public class MaxTemperatureDriverTest {

public static class OutputLogFilter implements PathFilter {

public boolean accept(Path path) {

return !path.getName().startsWith("_");

}

}


@Test

public void test() throws Exception {

Configuration conf = new Configuration();

conf.set("fs.defaultFS", "file:///");

conf.set("mapreduce.framework.name", "local");

conf.setInt("mapreduce.task.io.sort.mb", 1);

Path input = new Path("input/ncdc/micro");

Path output = new Path("output");

FileSystem fs = FileSystem.getLocal(conf);

fs.delete(output, true); // delete old output

MaxTemperatureDriver driver = new MaxTemperatureDriver();

driver.setConf(conf);

int exitCode = driver.run(new String[] {

input.toString(), output.toString() });

assertThat(exitCode, is(0));

checkOutput(conf, output);

}


private void checkOutput(Configuration conf, Path output) throws IOException {

FileSystem fs = FileSystem.getLocal(conf);

Path[] outputFiles = FileUtil.stat2Paths(

fs.listStatus(output, new OutputLogFilter()));

assertThat(outputFiles.length, is(1));

BufferedReader actual = asBufferedReader(fs.open(outputFiles[0]));

BufferedReader expected = asBufferedReader(

getClass().getResourceAsStream("/expected.txt"));

String expectedLine;

while ((expectedLine = expected.readLine()) != null) {

assertThat(actual.readLine(), is(expectedLine));

}

assertThat(actual.readLine(), nullValue());

actual.close();

expected.close();

}

private BufferedReader asBufferedReader(InputStream in) throws IOException {

return new BufferedReader(new InputStreamReader(in));

}

}

Mini cluster test on the local machine:

public class MaxTemperatureDriverMiniTest extends ClusterMapReduceTestCase {

public static class OutputLogFilter implements PathFilter {

public boolean accept(Path path) {

return !path.getName().startsWith("_");

}

}

@Override

protected void setUp() throws Exception {

if (System.getProperty("test.build.data") == null) {

System.setProperty("test.build.data", "/tmp");

}

if (System.getProperty("hadoop.log.dir") == null) {

System.setProperty("hadoop.log.dir", "/tmp");

}

super.setUp();

}

// Not marked with @Test since ClusterMapReduceTestCase is a JUnit 3 test case

public void test() throws Exception {

Configuration conf = createJobConf();

Path localInput = new Path("input/ncdc/micro");

Path input = getInputDir();

Path output = getOutputDir();

// Copy input data into test HDFS

getFileSystem().copyFromLocalFile(localInput, input);

MaxTemperatureDriver driver = new MaxTemperatureDriver();

driver.setConf(conf);

int exitCode = driver.run(new String[] {

input.toString(), output.toString() });

assertThat(exitCode, is(0));

// Check the output is as expected

Path[] outputFiles = FileUtil.stat2Paths(

getFileSystem().listStatus(output, new OutputLogFilter()));

assertThat(outputFiles.length, is(1));

InputStream in = getFileSystem().open(outputFiles[0]);

BufferedReader reader = new BufferedReader(new InputStreamReader(in));

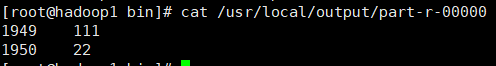

assertThat(reader.readLine(), is("1949\t111"));

assertThat(reader.readLine(), is("1950\t22"));

assertThat(reader.readLine(), nullValue());

reader.close();

}

}
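To see what the tests above check without a Hadoop installation, the parse, filter, and max-per-year steps can be mimicked in plain Java. The sketch below reuses the sample record and the column offsets from NcdcRecordParser; the class `LocalMaxTemperatureSketch`, the `record` helper, and the chosen temperatures are assumptions for illustration (picked so the result matches the two lines asserted in the mini cluster test).

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch: parse fixed-width records, drop invalid ones, keep the max per year.
final class LocalMaxTemperatureSketch {

    private static final int MISSING_TEMPERATURE = 9999;

    // Sample record from the mapper tests: year at offsets 15-18, sign at 87,
    // temperature digits at 88-91, quality code at 92.
    private static final String SAMPLE =
        "0043011990999991950051518004+68750+023550FM-12+0382"
        + "99999V0203201N00261220001CN9999999N9-00111+99999999999";

    // Hypothetical helper: overwrite year, signed temperature (5 chars) and quality in SAMPLE.
    static String record(String year, String signedTemperature, char quality) {
        return SAMPLE.substring(0, 15) + year + SAMPLE.substring(19, 87)
            + signedTemperature + quality + SAMPLE.substring(93);
    }

    // Records used by main: two years, one missing reading, one suspect reading.
    static List<String> sampleRecords() {
        return Arrays.asList(
            record("1950", "+0000", '1'),
            record("1950", "+0022", '1'),
            record("1950", "-0011", '1'),
            record("1949", "+0111", '1'),
            record("1949", "+0078", '1'),
            record("1950", "+9999", '1'),   // missing temperature, ignored
            record("1949", "+0200", '2'));  // suspect quality, ignored
    }

    // Same parsing and validity rules as NcdcRecordParser, then a max per year.
    static Map<String, Integer> maxByYear(List<String> records) {
        Map<String, Integer> result = new TreeMap<>(); // sorted by year, like reducer output
        for (String record : records) {
            String year = record.substring(15, 19);
            int start = record.charAt(87) == '+' ? 88 : 87; // skip a leading '+'
            int temperature = Integer.parseInt(record.substring(start, 92));
            String quality = record.substring(92, 93);
            if (temperature != MISSING_TEMPERATURE && quality.matches("[01459]")) {
                result.merge(year, temperature, Integer::max);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Prints one tab-separated line per year: "1949\t111" then "1950\t22".
        for (Map.Entry<String, Integer> entry : maxByYear(sampleRecords()).entrySet()) {
            System.out.println(entry.getKey() + "\t" + entry.getValue());
        }
    }
}
```

This only imitates the data flow in a single process; it does not exercise input splits, the shuffle, or the Hadoop configuration, which is what the local and mini cluster tests are for.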