在 Kaggle 上面的 Notebook 给可爱的学弟学妹们用于参考... 代码这个东西一定要自己多写,我一边听着林宥嘉的《想自由》,一边写出了大致的实现。K 近邻算法大概做的是一件什么事情呢?你去商店买衣服的时候,突然忘记了自己要买的衣服多大尺码比较合适(S/M/L/XL 这种)。这个时候你就要找几个身材和你差不多的几个店内顾客问一问了,结果你发现你这样的身材的人大多买的是 XL 的衣服,所以你最后告诉老板你也买 XL 的衣服,果然是机智聪明啊。

下面是解决该问题的思路重点:

- 你需要找几个身材和你相似的人?(K 的个数)

- 你是怎么判断其它人身材和你的相似程度的?(距离度量方式)

- 你最终参考的是最多被购买的尺码。(毕竟不同的人建议可能不同)

关于数据集的读入

MNIST 数据集可以在这里获取:THE MNIST DATABASE of handwritten digits . 你一定很好奇——为什么在 Kaggle 里面数据集是 CSV 格式,而在数据集官网提供的是四个压缩文件?这没什么好稀奇的,你只需要根据不同的数据格式采用不同的数据读取套路就好了,只要最后的数据格式的维度一致即可。

数据集已经解压

def load_mnist(path, kind='train'):

"""Load MNIST data from `path`"""

labels_path = os.path.join(path,'%s-labels-idx1-ubyte'% kind)

images_path = os.path.join(path,'%s-images-idx3-ubyte'% kind)

with open(labels_path,'rb') as lbpath:

labels = np.frombuffer(lbpath.read(), dtype=np.uint8,

offset=8)

with open(images_path,'rb') as imgpath:

images = np.frombuffer(imgpath.read(), dtype=np.uint8,

offset=16).reshape(len(labels), 784)

return images, labels数据集未解压

def load_mnist(path, kind='train'):

"""Load MNIST data from `path`"""

labels_path = os.path.join(path,

'%s-labels-idx1-ubyte.gz'

% kind)

images_path = os.path.join(path,

'%s-images-idx3-ubyte.gz'

% kind)

with gzip.open(labels_path, 'rb') as lbpath:

labels = np.frombuffer(lbpath.read(), dtype=np.uint8,

offset=8)

with gzip.open(images_path, 'rb') as imgpath:

images = np.frombuffer(imgpath.read(), dtype=np.uint8,

offset=16).reshape(len(labels), 784)

return images, labels数据集已经被转为 CSV 格式且无测试集标签(Kaggle)

# It will takes about 1 ~ 2 minutes (depends on CPU)

train_data = np.genfromtxt('../input/train.csv', delimiter=',',

skip_header=1).astype(np.dtype('uint8'))

X_train = train_data[:,1:]

y_train = train_data[:,:1]

X_test = np.genfromtxt('../input/test.csv', delimiter=',',

skip_header=1).astype(np.dtype('uint8'))检查数据导入是否顺利

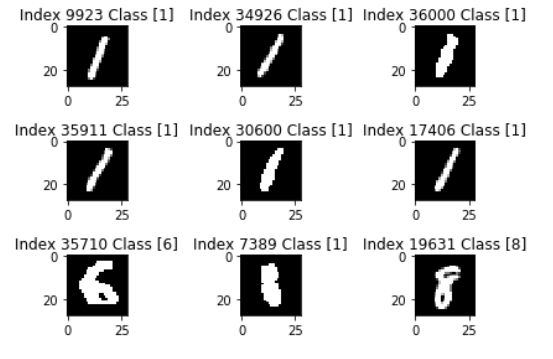

np.random.seed(0);

indices = list(np.random.randint(m_train, size=9))

for i in range(9):

plt.subplot(3,3,i + 1)

plt.imshow(X_train[indices[i]].reshape(28,28), cmap='gray', interpolation='none')

plt.title("Index {} Class {}".format(indices[i], y_train[indices[i]]))

plt.tight_layout()

定义距离度量

def euclidean_distance(vector1, vector2):

return np.sqrt(np.sum(np.power(vector1 - vector2, 2)))

def absolute_distance(vector1, vector2):

return np.sum(np.absolute(vector1 - vector2))找相似邻居(K Neighbours)

import operator

def get_neighbours(X_train, test_instance, k):

distances = []

neighbors = []

for i in range(0, X_train.shape[0]):

dist = euclidean_distance(X_train[i], test_instance)

distances.append((i, dist))

distances.sort(key=operator.itemgetter(1))

for x in range(k):

# print(distances[x])

neighbors.append(distances[x][0])

return neighbors得到投票最多的建议

def predictkNNClass(output, y_train):

classVotes = {}

for i in range(len(output)):

# print(output[i], y_train[output[i]])

if y_train[output[i]][0] in classVotes:

classVotes[y_train[output[i]][0]] += 1

else:

classVotes[y_train[output[i]][0]] = 1

sortedVotes = sorted(classVotes.items(), key=operator.itemgetter(1), reverse=True)

# print(sortedVotes)

return sortedVotes[0][0]拿实例来进行测试

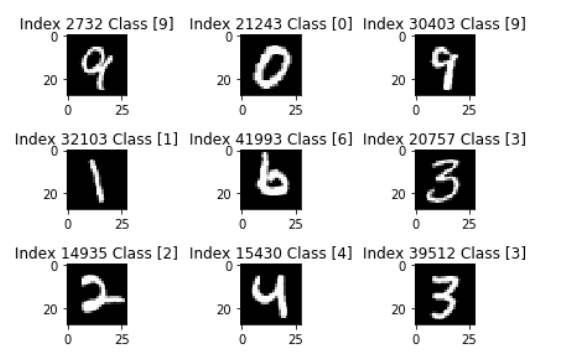

instance_num = 666

k = 9

plt.imshow(X_test[instance_num].reshape(28,28), cmap='gray', interpolation='none')

instance_neighbours = get_neighbours(X_train, X_test[instance_num], 9)

indices = instance_neighbours

for i in range(9):

plt.subplot(3,3,i + 1)

plt.imshow(X_train[indices[i]].reshape(28,28), cmap='gray', interpolation='none')

plt.title("Index {} Class {}".format(indices[i], y_train[indices[i]]))

plt.tight_layout()

predictkNNClass(instance_neighbours, y_train)