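For work I need to set up an HBase cluster again, so I am first going through the process once more on my own machine.

HBase requires a working Hadoop cluster. I covered pseudo-distributed and fully distributed installs in an earlier post on building a Hadoop cluster, which you can refer to.

Start ZooKeeper and the Hadoop cluster

If ZooKeeper and Hadoop have been added to your environment variables, run: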

zkServer.sh start

start-dfs.sh

start-yarn.sh

If the environment variables are not configured, run the scripts from ZooKeeper's bin directory and Hadoop's sbin directory instead.
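Before moving on, it can help to confirm that the ZooKeeper and Hadoop daemons are really up. The following is a minimal sketch rather than part of the original setup: missing_daemons is a hypothetical helper that reads jps output on stdin and prints any expected process that is absent. The process names are the ones jps normally reports for ZooKeeper, HDFS and YARN; adjust the list to the roles each node actually runs.

```shell
# Hypothetical helper: print every expected daemon that is missing
# from the jps output supplied on stdin.
missing_daemons() {
  md_output=$(cat)
  for md_name in "$@"; do
    # jps prints "<pid> <main class>"; compare the second column exactly.
    if ! printf '%s\n' "$md_output" | awk '{print $2}' | grep -qx -- "$md_name"; then
      printf '%s\n' "$md_name"
    fi
  done
}

# Example for a node that runs every role (assumption: adjust per node):
# jps | missing_daemons QuorumPeerMain NameNode DataNode ResourceManager NodeManager
```

An empty result means every listed daemon was found on that node.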

Configure HBase

I use Xshell, which lets me transfer the downloaded tarball straight into the root directory. Then extract it to /usr/local:

tar -xvf hbase-1.3.1-bin.tar.gz -C /usr/local

Go to HBase's conf directory:

cd /usr/local/hbase-1.3.1/conf

Edit hbase-env.sh and set:

export JAVA_HOME=/usr/local/jdk1.8.0_152 # path to the JDK on your own machine

export HBASE_MANAGES_ZK=false # do not use the ZooKeeper bundled with HBase

Edit hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://node01:9000/hbase</value>

<!-- HDFS host and port (the same address as fs.defaultFS); on some installs the HDFS port is 8020 -->

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- Changed after 0.98: earlier versions had no .port property, and the default port was 60000 -->

<property>

<name>hbase.master.port</name>

<value>16000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>node01:2181,node02:2181,node03:2181</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/usr/local/zookeeper-3.4.10/zkData</value>

</property>

</configuration>
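The hbase.zookeeper.quorum value is a comma-separated list of host:port pairs, which is easy to mistype by hand. As a small sketch (zk_quorum is a hypothetical helper, not an HBase tool), the string can be generated from the client port and the host names:

```shell
# Hypothetical helper: join hosts into "host:port,host:port,...".
zk_quorum() {
  zq_port=$1
  shift
  zq_result=""
  for zq_host in "$@"; do
    # Add a comma only when something has already been appended.
    zq_result="${zq_result:+$zq_result,}${zq_host}:${zq_port}"
  done
  printf '%s\n' "$zq_result"
}

zk_quorum 2181 node01 node02 node03
# prints node01:2181,node02:2181,node03:2181
```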

vi regionservers

node01

node02

node03

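Replace the jars

HBase depends on Hadoop and ZooKeeper, and the Hadoop and ZooKeeper jars it ships with do not match the installed versions, so they need to be replaced with the jars from the Hadoop and ZooKeeper versions you actually run.

The jars are in the lib directory.

Delete the following jars: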

rm -rf /usr/local/hbase-1.3.1/lib/hadoop-*

rm -rf /usr/local/hbase-1.3.1/lib/zookeeper-3.4.6.jar

New jars required:

hadoop-annotations-2.7.2.jar

hadoop-auth-2.7.2.jar

hadoop-client-2.7.2.jar

hadoop-common-2.7.2.jar

hadoop-hdfs-2.7.2.jar

hadoop-mapreduce-client-app-2.7.2.jar

hadoop-mapreduce-client-common-2.7.2.jar

hadoop-mapreduce-client-core-2.7.2.jar

hadoop-mapreduce-client-hs-2.7.2.jar

hadoop-mapreduce-client-hs-plugins-2.7.2.jar

hadoop-mapreduce-client-jobclient-2.7.2.jar

hadoop-mapreduce-client-jobclient-2.7.2-tests.jar

hadoop-mapreduce-client-shuffle-2.7.2.jar

hadoop-yarn-api-2.7.2.jar

hadoop-yarn-applications-distributedshell-2.7.2.jar

hadoop-yarn-applications-unmanaged-am-launcher-2.7.2.jar

hadoop-yarn-client-2.7.2.jar

hadoop-yarn-common-2.7.2.jar

hadoop-yarn-server-applicationhistoryservice-2.7.2.jar

hadoop-yarn-server-common-2.7.2.jar

hadoop-yarn-server-nodemanager-2.7.2.jar

hadoop-yarn-server-resourcemanager-2.7.2.jar

hadoop-yarn-server-tests-2.7.2.jar

hadoop-yarn-server-web-proxy-2.7.2.jar

zookeeper-3.4.5.jar

You can find the matching jars under the directories of your installed Hadoop and ZooKeeper, for example:

find /usr/local/hadoop-2.7.2/ -name "hadoop-annotations*"

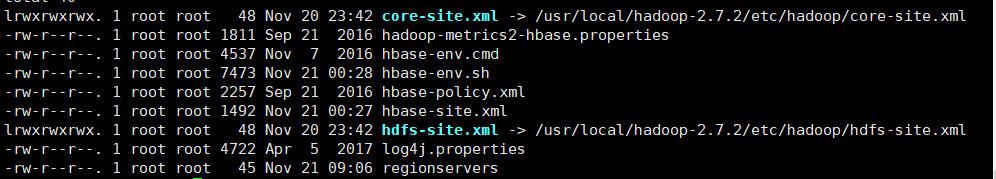
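Searching for each jar one at a time is tedious, so the lookup and the copy can be combined. This is only a sketch under assumptions: copy_matching_jars is a hypothetical helper, and the pattern in the example copies every jar that matches it, which may be more than the list above, so review the result afterwards.

```shell
# Hypothetical helper: copy every jar under SRC whose file name
# matches PATTERN into the directory DEST.
copy_matching_jars() {
  cj_src=$1
  cj_dest=$2
  cj_pattern=$3
  # The pattern stays quoted so that find, not the shell, expands the wildcard.
  find "$cj_src" -type f -name "$cj_pattern" -exec cp {} "$cj_dest"/ \;
}

# Example with the paths used in this post (adjust to your own install):
# copy_matching_jars /usr/local/hadoop-2.7.2 /usr/local/hbase-1.3.1/lib 'hadoop-*-2.7.2.jar'
```

If the same jar exists in several Hadoop subdirectories, later copies simply overwrite earlier ones.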

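Create symbolic links

A symbolic link works like a shortcut to the original file, so HBase reads the same core-site.xml and hdfs-site.xml that Hadoop uses.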

ln -s /usr/local/hadoop-2.7.2/etc/hadoop/core-site.xml /usr/local/hbase-1.3.1/conf/core-site.xml

ln -s /usr/local/hadoop-2.7.2/etc/hadoop/hdfs-site.xml /usr/local/hbase-1.3.1/conf/hdfs-site.xml
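The two ln commands can also be wrapped in a loop so the step is repeatable. A minimal sketch, assuming the same two file names as above; link_hadoop_conf is a hypothetical helper, and -f is added so an existing link is replaced instead of causing an error:

```shell
# Hypothetical helper: link core-site.xml and hdfs-site.xml from the
# Hadoop config directory into the HBase conf directory.
link_hadoop_conf() {
  lh_hadoop_conf=$1
  lh_hbase_conf=$2
  for lh_file in core-site.xml hdfs-site.xml; do
    # -s creates a symbolic link; -f replaces a link that already exists.
    ln -sf "$lh_hadoop_conf/$lh_file" "$lh_hbase_conf/$lh_file"
  done
}

# Example with the paths used in this post:
# link_hadoop_conf /usr/local/hadoop-2.7.2/etc/hadoop /usr/local/hbase-1.3.1/conf
```

Use absolute paths for the Hadoop directory, otherwise the links will not resolve from inside conf.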

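The links then appear in the conf directory.

Copy HBase to the other nodes

HBase has to be copied to every other machine in the cluster. I did the steps above on node01, so I copy HBase to node02 and node03: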

scp -r /usr/local/hbase-1.3.1/ node02:/usr/local/

scp -r /usr/local/hbase-1.3.1/ node03:/usr/local/
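With more nodes, the scp commands can be derived from the regionservers file instead of being typed one by one. The sketch below only prints the commands (a dry run) so they can be checked first; print_copy_commands is a hypothetical helper, and it assumes the file holds one host name per line.

```shell
# Hypothetical helper: print one scp command per host in a regionservers
# file, skipping blank lines and the local host.
print_copy_commands() {
  pc_file=$1
  pc_local=$2
  pc_dir=$3
  while IFS= read -r pc_host || [ -n "$pc_host" ]; do
    [ -z "$pc_host" ] && continue
    [ "$pc_host" = "$pc_local" ] && continue
    # Copy into the parent directory so the remote path matches the local one.
    printf 'scp -r %s %s:%s\n' "$pc_dir" "$pc_host" "$(dirname "$pc_dir")/"
  done < "$pc_file"
}

# Example with the paths used in this post:
# print_copy_commands /usr/local/hbase-1.3.1/conf/regionservers node01 /usr/local/hbase-1.3.1
```

Once the printed commands look right, they can be piped to sh to run them.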

Start and stop the services

Method 1

bin/hbase-daemon.sh start master # start HMaster

bin/hbase-daemon.sh start regionserver # start HRegionServer

Method 2

bin/start-hbase.sh # start

bin/stop-hbase.sh # stop

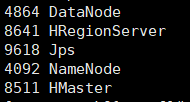

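Running jps should now show the HMaster and HRegionServer processes.

Open ip:16010 in a browser to view the HBase web UI.

Common problems

With method 1, the following error appears: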

org.apache.hadoop.hbase.ClockOutOfSyncException: org.apache.hadoop.hbase.ClockOutOfSyncException: Server ub9001,60020,1343694176934 has been rejected; Reported time is too far out of sync with master. Time difference of 410865ms > max allowed of 180000ms

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

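This happens because the clocks in the cluster are not synchronized. There are two solutions:

Solution 1: set up time synchronization across the cluster

Solution 2: raise hbase.master.maxclockskew (in milliseconds) to a value larger than the actual clock difference; the value below is an example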

<property>

<name>hbase.master.maxclockskew</name>

<value>180000</value>

<description>Time difference of regionserver from master</description>

</property>
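To decide between the two solutions it helps to know how far apart the clocks really are. A rough sketch, assuming second-level precision is enough: skew_ms and within_skew are hypothetical helpers that compare two epoch timestamps against a limit in milliseconds.

```shell
# Hypothetical helper: absolute difference between two epoch-second
# timestamps, expressed in milliseconds.
skew_ms() {
  sk_diff=$(( $1 - $2 ))
  [ "$sk_diff" -lt 0 ] && sk_diff=$(( -sk_diff ))
  printf '%s\n' "$(( sk_diff * 1000 ))"
}

# Hypothetical helper: succeed only if the skew is within the limit (ms).
within_skew() {
  [ "$(skew_ms "$1" "$2")" -le "$3" ]
}

# Example (assumes passwordless ssh from the master to node02):
# within_skew "$(date +%s)" "$(ssh node02 date +%s)" 180000 || echo "node02 clock is out of sync"
```

The ssh round trip adds a little delay of its own, so treat the result as an estimate rather than an exact measurement.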

After running start-hbase.sh, the following appears:

node01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node01: Error: A JNI error has occurred, please check your installation and try again

node01: Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FileSystem

node01: at java.lang.Class.getDeclaredMethods0(Native Method)

node01: at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)

node01: at java.lang.Class.privateGetMethodRecursive(Class.java:3048)

node01: at java.lang.Class.getMethod0(Class.java:3018)

node01: at java.lang.Class.getMethod(Class.java:1784)

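In this case, comment out the HBASE_MASTER_OPTS and HBASE_REGIONSERVER_OPTS lines in hbase-env.sh by putting # in front of them. Those lines set PermSize and MaxPermSize, which Java 8 no longer supports. If the NoClassDefFoundError for org/apache/hadoop/fs/FileSystem persists, check that the Hadoop jars were actually copied into HBase's lib directory after the old ones were deleted.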
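The same change can be scripted, which is handy when it has to be repeated on every node. This is a sketch under the assumption that the two lines look like the stock export lines that mention PermSize; comment_out_perm_opts is a hypothetical helper, and it is worth backing up hbase-env.sh before running it.

```shell
# Hypothetical helper: comment out uncommented export lines for
# HBASE_MASTER_OPTS / HBASE_REGIONSERVER_OPTS that mention PermSize.
comment_out_perm_opts() {
  co_file=$1
  co_tmp="$co_file.tmp"
  # Lines that already start with # do not match and are left untouched.
  sed -E '/PermSize/ s/^([[:space:]]*export HBASE_(MASTER|REGIONSERVER)_OPTS=)/# \1/' \
    "$co_file" > "$co_tmp" &&
    cat "$co_tmp" > "$co_file" &&
    rm -f "$co_tmp"
}

# Example with the path used in this post:
# comment_out_perm_opts /usr/local/hbase-1.3.1/conf/hbase-env.sh
```

Writing the result back with cat keeps the original file's permissions and ownership.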

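If output like the following appears after startup and there is no HMaster process, check that the cluster is up and that ZooKeeper and Hadoop are running normally.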

node02: starting regionserver, logging to /usr/local/hbase-1.3.1/bin/../logs/hbase-root-regionserver-spark02.out

node03: starting regionserver, logging to /usr/local/hbase-1.3.1/bin/../logs/hbase-root-regionserver-spark03.out

node01: starting regionserver, logging to /usr/local/hbase-1.3.1/bin/../logs/hbase-root-regionserver-spark01.out

node03: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node03: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

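When stopping, this message appears: stopping hbasecat: /tmp/hbase-root-master.pid: No such file or directory. The processes then have to be killed manually. This happens when there is no Master process or the pid file has been lost. If the pid file was lost, set export HBASE_PID_DIR=/usr/local/hadoop-2.7.2/pids in hbase-env.sh.

After starting the cluster, only one HRegionServer is online and the rest are dead. The log shows: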

2018-11-20 16:53:19,226 ERROR [main] regionserver.HRegionServerCommandLine: Region server exiting

java.lang.RuntimeException: HRegionServer Aborted

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:68)

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)

at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:2721)

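Setting up time synchronization across the cluster and then copying HBase from the master node to the other nodes again fixed it.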