Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/u012292754/article/details/84190612

1 Spark SQL Use Cases

- Ad-hoc querying of data in files

- Live SQL analytics over streaming data

- ETL capabilities alongside familiar SQL

- Interaction with external databases

- Scalable query performance with large clusters

2 Loading Data

- Load data directly into a DataFrame

- Load data into an RDD and transform it

- Load data from local or cloud storage

2.1 Starting the Spark Shell

[hadoop@node1 spark-2.1.3-bin-2.6.0-cdh5.7.0]$ ./bin/spark-shell --master local[2] --jars /home/hadoop/mysql-connector-java-5.1.44-bin.jar

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

18/11/17 20:17:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/11/17 20:17:12 WARN spark.SparkConf:

SPARK_WORKER_INSTANCES was detected (set to '1').

This is deprecated in Spark 1.0+.

Please instead use:

- ./spark-submit with --num-executors to specify the number of executors

- Or set SPARK_EXECUTOR_INSTANCES

- spark.executor.instances to configure the number of instances in the spark config.

Spark context Web UI available at http://192.168.30.131:4040

Spark context available as 'sc' (master = local[2], app id = local-1542457034249).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.1.3

/_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181)

Type in expressions to have them evaluated.

Type :help for more information.

scala>
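Outside the shell you have to create the `spark` and `sc` objects yourself. Below is a minimal sketch of a standalone equivalent. It assumes Spark 2.x is on the classpath. The object name `ShellEquivalent` and the app name `SparkSqlDemo` are hypothetical.

spark-shell also enables Hive support when the Hive classes are available, which is why a `HiveSessionState` error appears later. This sketch leaves Hive out.

```scala
import org.apache.spark.sql.SparkSession

object ShellEquivalent {
  // Builds a session comparable to `spark-shell --master local[2]`.
  def localSession(): SparkSession =
    SparkSession.builder()
      .appName("SparkSqlDemo")  // hypothetical application name
      .master("local[2]")       // local mode with 2 worker threads
      // The --jars flag corresponds to the "spark.jars" setting, e.g.
      // .config("spark.jars", "/home/hadoop/mysql-connector-java-5.1.44-bin.jar")
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = localSession()
    val sc = spark.sparkContext // the object the shell exposes as `sc`
    println(s"master = ${sc.master}, Spark version = ${spark.version}")
    spark.stop()
  }
}
```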

2.2 Loading Data

2.2.1 Loading Data as an RDD

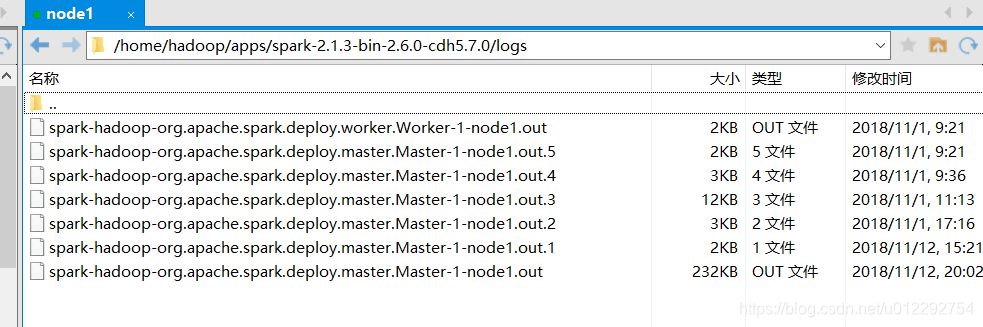

scala> val masterLog = sc.textFile("file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-node1.out")

masterLog: org.apache.spark.rdd.RDD[String] = file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-node1.out MapPartitionsRDD[1] at textFile at <console>:24

scala> val workerLog = sc.textFile("file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-node1.out")

workerLog: org.apache.spark.rdd.RDD[String] = file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-node1.out MapPartitionsRDD[3] at textFile at <console>:24

scala> val allLog = sc.textFile("file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/*out*")

allLog: org.apache.spark.rdd.RDD[String] = file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/logs/*out* MapPartitionsRDD[5] at textFile at <console>:24

scala> masterLog.count

res0: Long = 1372

scala> workerLog.count

res1: Long = 23

scala> allLog.count

scala> workerLog.collect

res3: Array[String] = Array(Spark Command: /usr/apps/jdk1.8.0_181-amd64/jre/bin/java -cp /home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/conf/:/home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://node1:7077, ========================================, Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties, 18/11/01 09:09:34 INFO Worker: Started daemon with process name: 1534@node1, 18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for TERM, 18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for HUP, 18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for INT, 18/11/01 09:09:34 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... u...

scala>

scala> workerLog.collect.foreach(println)

Spark Command: /usr/apps/jdk1.8.0_181-amd64/jre/bin/java -cp /home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/conf/:/home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://node1:7077

========================================

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

18/11/01 09:09:34 INFO Worker: Started daemon with process name: 1534@node1

18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for TERM

18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for HUP

18/11/01 09:09:34 INFO SignalUtils: Registered signal handler for INT

18/11/01 09:09:34 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/11/01 09:09:34 INFO SecurityManager: Changing view acls to: hadoop

18/11/01 09:09:34 INFO SecurityManager: Changing modify acls to: hadoop

18/11/01 09:09:34 INFO SecurityManager: Changing view acls groups to:

18/11/01 09:09:34 INFO SecurityManager: Changing modify acls groups to:

18/11/01 09:09:34 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

18/11/01 09:09:35 INFO Utils: Successfully started service 'sparkWorker' on port 32865.

18/11/01 09:09:35 INFO Worker: Starting Spark worker 192.168.30.131:32865 with 1 cores, 1024.0 MB RAM

18/11/01 09:09:35 INFO Worker: Running Spark version 2.1.3

18/11/01 09:09:35 INFO Worker: Spark home: /home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0

18/11/01 09:09:35 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

18/11/01 09:09:35 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://192.168.30.131:8081

18/11/01 09:09:35 INFO Worker: Connecting to master node1:7077...

18/11/01 09:09:35 INFO TransportClientFactory: Successfully created connection to node1/192.168.30.131:7077 after 88 ms (0 ms spent in bootstraps)

18/11/01 09:09:36 INFO Worker: Successfully registered with master spark://node1:7077

18/11/01 09:21:12 ERROR Worker: RECEIVED SIGNAL TERM
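You can try the same `textFile` calls without the cluster logs. Below is a minimal sketch that assumes a local SparkSession. The temporary files and their contents are hypothetical stand-ins for the master and worker `.out` logs. The sketch shows that `textFile` accepts either a single file or a glob such as `*out*`, and that `count` returns the number of lines.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}
import org.apache.spark.sql.SparkSession

object LoadLogsAsRdd {
  // Writes a small UTF-8 text file and returns its path.
  private def writeFile(p: Path, text: String): Path =
    Files.write(p, text.getBytes(StandardCharsets.UTF_8))

  def run(spark: SparkSession): (Long, Long, Long) = {
    val dir = Files.createTempDirectory("spark-logs").toAbsolutePath
    // Hypothetical stand-ins for the master and worker .out files.
    writeFile(dir.resolve("master-1.out"), "line 1\nline 2\nline 3\n")
    writeFile(dir.resolve("worker-1.out"), "line a\nline b\n")

    val sc = spark.sparkContext
    // The explicit file:// scheme forces the local file system,
    // even if the default file system is HDFS.
    val masterLog = sc.textFile(s"file://$dir/master-1.out")
    val workerLog = sc.textFile(s"file://$dir/worker-1.out")
    val allLog = sc.textFile(s"file://$dir/*out*") // glob matching both files

    workerLog.collect().foreach(println) // pulls every line to the driver
    (masterLog.count(), workerLog.count(), allLog.count())
  }
}
```

`collect` copies the whole RDD to the driver. It is fine for a 23-line worker log, but not for large inputs.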

The problem: data loaded as an RDD cannot be queried with SQL. It must first be converted to a DataFrame.

2.3 Converting an RDD to a DataFrame

https://spark.apache.org/docs/2.1.3/sql-programming-guide.html#programmatically-specifying-the-schema

scala> import org.apache.spark.sql.Row

import org.apache.spark.sql.Row

scala> val masterRDD = masterLog.map(x => Row(x))

masterRDD: org.apache.spark.rdd.RDD[org.apache.spark.sql.Row] = MapPartitionsRDD[8] at map at <console>:33

scala> import org.apache.spark.sql.types._

import org.apache.spark.sql.types._

scala> val schemaString = "line"

schemaString: String = line

scala> val fields = schemaString.split(" ").map(fieldName => StructField(fieldName, StringType, nullable = true))

fields: Array[org.apache.spark.sql.types.StructField] = Array(StructField(line,StringType,true))

scala> val schema = StructType(fields)

schema: org.apache.spark.sql.types.StructType = StructType(StructField(line,StringType,true))

scala> val masterDF = spark.createDataFrame(masterRDD, schema)

java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionState':

at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$reflect(SparkSession.scala:989)

at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:116)

at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:115)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:62)

at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:560)

at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:315)

... 50 elided

This throws an error. For the fix, see https://www.cnblogs.com/fly2wind/p/7704761.html. After applying it, the same call succeeds:

scala> val masterDF = spark.createDataFrame(masterRDD, schema)

18/11/17 20:56:34 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

masterDF: org.apache.spark.sql.DataFrame = [line: string]

scala> masterDF.printSchema

root

|-- line: string (nullable = true)

scala> masterDF.show

+--------------------+

| line|

+--------------------+

|Spark Command: /u...|

|=================...|

|18/11/17 20:47:36...|

|18/11/17 20:47:36...|

|18/11/17 20:47:36...|

|18/11/17 20:47:36...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

|18/11/17 20:47:37...|

+--------------------+

only showing top 20 rows

scala> masterDF.show(false)

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|line |

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|Spark Command: /usr/apps/jdk1.8.0_181-amd64/bin/java -cp /home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/conf/:/home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/jars/*:/home/hadoop/apps/hadoop-2.6.0-cdh5.7.0/etc/hadoop/ -Xmx1g org.apache.spark.deploy.master.Master --host node1 --port 7077 --webui-port 8080|

|======================================== |

|18/11/17 20:47:36 INFO master.Master: Started daemon with process name: 2345@node1 |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for TERM |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for HUP |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for INT |

|18/11/17 20:47:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing view acls to: hadoop |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing modify acls to: hadoop |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing view acls groups to: |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing modify acls groups to: |

|18/11/17 20:47:37 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set() |

|18/11/17 20:47:37 INFO util.Utils: Successfully started service 'sparkMaster' on port 7077. |

|18/11/17 20:47:37 INFO master.Master: Starting Spark master at spark://node1:7077 |

|18/11/17 20:47:37 INFO master.Master: Running Spark version 2.1.3 |

|18/11/17 20:47:37 INFO util.log: Logging initialized @1932ms |

|18/11/17 20:47:37 INFO server.Server: jetty-9.2.z-SNAPSHOT |

|18/11/17 20:47:37 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6c093ae{/app,null,AVAILABLE,@Spark} |

|18/11/17 20:47:37 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@8551861{/app/json,null,AVAILABLE,@Spark} |

|18/11/17 20:47:37 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@28d35df{/,null,AVAILABLE,@Spark} |

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

only showing top 20 rows
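The conversion above can be packaged as a small, self-contained sketch. It assumes a local SparkSession and uses a hypothetical in-memory `Seq[String]` in place of the log RDD.

- `withExplicitSchema` follows the transcript, using `Row` plus `StructType`.
- `withImplicits` is an alternative not used in the original. With `spark.implicits._` in scope, an `RDD[String]` can be turned into a one-column DataFrame with `toDF`.

```scala
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.{StringType, StructField, StructType}

object RddToDataFrame {
  // Programmatic schema, as in the transcript: one nullable string column "line".
  def withExplicitSchema(spark: SparkSession, lines: Seq[String]): DataFrame = {
    val rowRDD = spark.sparkContext.parallelize(lines).map(x => Row(x))
    val fields = "line".split(" ").map(name => StructField(name, StringType, nullable = true))
    spark.createDataFrame(rowRDD, StructType(fields))
  }

  // Alternative: let the implicit String encoder derive the schema.
  def withImplicits(spark: SparkSession, lines: Seq[String]): DataFrame = {
    import spark.implicits._
    spark.sparkContext.parallelize(lines).toDF("line")
  }
}
```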

2.4 Converting a DataFrame to a SQL Table

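If you are not comfortable with the DataFrame API, you can register the DataFrame as a SQL table (a temporary view) and query it with SQL.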

scala> masterDF.createOrReplaceTempView("master_logs")

scala> spark.sql("select * from master_logs limit 10").show(false)

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|line |

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|Spark Command: /usr/apps/jdk1.8.0_181-amd64/bin/java -cp /home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/conf/:/home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/jars/*:/home/hadoop/apps/hadoop-2.6.0-cdh5.7.0/etc/hadoop/ -Xmx1g org.apache.spark.deploy.master.Master --host node1 --port 7077 --webui-port 8080|

|======================================== |

|18/11/17 20:47:36 INFO master.Master: Started daemon with process name: 2345@node1 |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for TERM |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for HUP |

|18/11/17 20:47:36 INFO util.SignalUtils: Registered signal handler for INT |

|18/11/17 20:47:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing view acls to: hadoop |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing modify acls to: hadoop |

|18/11/17 20:47:37 INFO spark.SecurityManager: Changing view acls groups to: |

+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
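Below is a minimal sketch of querying a temporary view, assuming a local SparkSession. The sample lines are copied from the master log above, and the `LIKE` filter is only an illustration. The sketch also computes the same count with the DataFrame API, since both forms run on the same query engine.

```scala
import org.apache.spark.sql.SparkSession

object TempViewQuery {
  // Counts WARN lines twice: once with SQL, once with the DataFrame API.
  def warnCounts(spark: SparkSession, lines: Seq[String]): (Long, Long) = {
    import spark.implicits._
    val df = lines.toDF("line")
    df.createOrReplaceTempView("master_logs") // visible only in this SparkSession

    spark.sql("SELECT * FROM master_logs LIMIT 10").show(false)
    val viaSql = spark.sql("SELECT count(*) FROM master_logs WHERE line LIKE '%WARN%'")
      .first().getLong(0)
    val viaApi = df.filter($"line".contains("WARN")).count()
    (viaSql, viaApi)
  }
}
```

A view created with `createOrReplaceTempView` is tied to the session and disappears when the session ends.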

2.5 JSON / Parquet

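When loading JSON or Parquet, you do not need to specify a schema. Parquet files store their own schema, and for JSON Spark infers the schema from the data.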

scala> val usersDF = spark.read.format("parquet").load("file:///home/hadoop/apps/spark-2.1.3-bin-2.6.0-cdh5.7.0/examples/src/main/resources/users.parquet")

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

usersDF: org.apache.spark.sql.DataFrame = [name: string, favorite_color: string ... 1 more field]

scala> usersDF.printSchema

root

|-- name: string (nullable = true)

|-- favorite_color: string (nullable = true)

|-- favorite_numbers: array (nullable = true)

| |-- element: integer (containsNull = true)

scala> usersDF.show

18/11/17 22:49:52 WARN ParquetRecordReader: Can not initialize counter due to context is not a instance of TaskInputOutputContext, but is org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl

+------+--------------+----------------+

| name|favorite_color|favorite_numbers|

+------+--------------+----------------+

|Alyssa| null| [3, 9, 15, 20]|

| Ben| red| []|

+------+--------------+----------------+
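The example `users.parquet` ships only with the Spark distribution. The sketch below therefore writes a comparable file to a temporary directory and reads it back without supplying a schema. The `User` case class and its rows are hypothetical stand-ins modelled on the output above. The nullability flags reported for the array column may differ from those of the original file.

```scala
import java.nio.file.Files
import org.apache.spark.sql.{DataFrame, SparkSession}

object ParquetSchemaDemo {
  // Hypothetical stand-in for the rows in users.parquet.
  case class User(name: String, favorite_color: Option[String], favorite_numbers: Seq[Int])

  def roundTrip(spark: SparkSession): DataFrame = {
    import spark.implicits._
    val out = Files.createTempDirectory("users-parquet").resolve("users").toString
    Seq(User("Alyssa", None, Seq(3, 9, 15, 20)), User("Ben", Some("red"), Seq.empty))
      .toDF()
      .write.parquet(out)

    // No schema is passed: Parquet keeps it in the file metadata.
    val usersDF = spark.read.format("parquet").load(out)
    usersDF.printSchema()
    usersDF.show()
    usersDF
  }
}
```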

Or use SQL:

scala> usersDF.createOrReplaceTempView("users_info")

scala> spark.sql("select * from users_info where name='Ben'").show

18/11/17 22:53:02 WARN ParquetRecordReader: Can not initialize counter due to context is not a instance of TaskInputOutputContext, but is org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl

+----+--------------+----------------+

|name|favorite_color|favorite_numbers|

+----+--------------+----------------+

| Ben| red| []|

+----+--------------+----------------+
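For JSON the schema is inferred rather than stored. Below is a minimal sketch that assumes a local SparkSession and a hypothetical JSON Lines file (one object per line, the default layout `spark.read.json` expects) with the same fields as `users.parquet`. It registers the result as `users_info` and runs the same kind of query as above.

Inferred JSON columns come back in alphabetical order, and whole numbers are inferred as `long`.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.sql.{DataFrame, SparkSession}

object JsonSchemaDemo {
  def inferAndQuery(spark: SparkSession): DataFrame = {
    val file = Files.createTempFile("users", ".json")
    // Hypothetical data, one JSON object per line.
    val json =
      """{"name":"Alyssa","favorite_numbers":[3,9,15,20]}
        |{"name":"Ben","favorite_color":"red","favorite_numbers":[]}""".stripMargin
    Files.write(file, json.getBytes(StandardCharsets.UTF_8))

    val usersDF = spark.read.json(file.toUri.toString) // schema inferred from the data
    usersDF.printSchema()
    usersDF.createOrReplaceTempView("users_info")
    spark.sql("SELECT name, favorite_color FROM users_info WHERE name = 'Ben'")
  }
}
```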