Data download:

The data is the mushroom classification dataset from Kaggle: https://www.kaggle.com/uciml/mushroom-classification

It can also be downloaded here: https://github.com/ffzs/dataset/blob/master/mushrooms.csv

Data preparation:

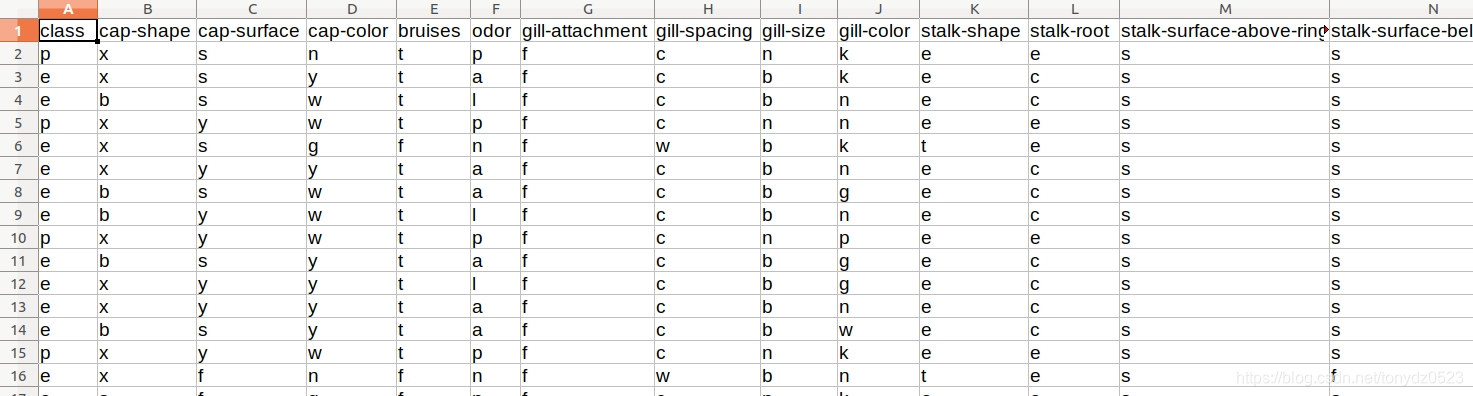

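This dataset is used to classify mushrooms as poisonous or edible. It has 22 features, all of which are encoded as characters, so they must be converted to numeric values before they can be used for machine learning.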
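To make the string-to-number conversion concrete, here is a minimal pure-Python sketch of frequency-based indexing, which is the idea behind Spark's `StringIndexer` with its default ordering (most frequent value gets index 0). The helper name `fit_string_index` and the sample column are hypothetical, and the alphabetical tie-break is a choice made for this sketch, not a statement about Spark's behavior in every version.

```python
from collections import Counter

def fit_string_index(values):
    """Map each distinct string to a float index, most frequent value first."""
    counts = Counter(values)
    # Sort by descending frequency; ties are broken alphabetically so the
    # result is deterministic (an assumption made for this sketch).
    ordered = sorted(counts, key=lambda v: (-counts[v], v))
    return {value: float(i) for i, value in enumerate(ordered)}

# Hypothetical sample of one categorical column.
cap_shape = ["x", "b", "x", "f", "x", "b"]
mapping = fit_string_index(cap_shape)
encoded = [mapping[v] for v in cap_shape]
print(mapping)
print(encoded)
```

One consequence worth keeping in mind: the resulting numbers are arbitrary category codes, not quantities, so linear models may read an ordering into them that does not exist.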

from pyspark.sql import SparkSession

from pyspark import SparkConf, SparkContext

spark = SparkSession.builder.master('local[1]').appName('learn_ml').getOrCreate()

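# Load the data

df0 = spark.read.csv('file:///home/ffzs/python-projects/learn_spark/mushrooms.csv', header=True, inferSchema=True, encoding='utf-8')

# Check for missing values

# df0.toPandas().isna().sum()

df0.toPandas().isna().values.any()

# False: there are no missing values

# First use StringIndexer to convert the strings to numeric indices, then assemble the features into a single vector column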

from pyspark.ml.feature import StringIndexer, VectorAssembler

old_columns_names = df0.columns

new_columns_names = [name+'-new' for name in old_columns_names]

for old_name, new_name in zip(old_columns_names, new_columns_names):

    indexer = StringIndexer(inputCol=old_name, outputCol=new_name)

    df0 = indexer.fit(df0).transform(df0)

vecAss = VectorAssembler(inputCols=new_columns_names[1:], outputCol='features')

df0 = vecAss.transform(df0)

# Rename the label column

df0 = df0.withColumnRenamed(new_columns_names[0], 'label')

# Create a new table containing only label and features

dfi = df0.select(['label', 'features'])

# Overview of the data

dfi.show(5, truncate=False)

+-----+------------------------------------------------------------------------------+

|label|features |

+-----+------------------------------------------------------------------------------+

|1.0 |(22,[1,3,4,7,8,9,10,19,20,21],[1.0,1.0,6.0,1.0,7.0,1.0,2.0,2.0,2.0,4.0]) |

|0.0 |(22,[1,2,3,4,8,9,10,19,20,21],[1.0,3.0,1.0,4.0,7.0,1.0,3.0,1.0,3.0,1.0]) |

|0.0 |(22,[0,1,2,3,4,8,9,10,19,20,21],[3.0,1.0,4.0,1.0,5.0,3.0,1.0,3.0,1.0,3.0,5.0])|

|1.0 |(22,[2,3,4,7,8,9,10,19,20,21],[4.0,1.0,6.0,1.0,3.0,1.0,2.0,2.0,2.0,4.0]) |

|0.0 |(22,[1,2,6,8,10,18,19,20,21],[1.0,1.0,1.0,7.0,2.0,1.0,1.0,4.0,1.0]) |

+-----+------------------------------------------------------------------------------+

only showing top 5 rows
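The `features` column above is printed in sparse form, `(size, [indices], [values])`: only non-zero positions are listed, and every other position is 0.0. The following small sketch expands the first row into a dense list; the helper name `sparse_to_dense` is hypothetical and exists only for illustration.

```python
def sparse_to_dense(size, indices, values):
    """Expand a (size, indices, values) sparse vector into a dense list."""
    dense = [0.0] * size
    for i, v in zip(indices, values):
        dense[i] = v
    return dense

# First row of the output shown above.
row = sparse_to_dense(
    22,
    [1, 3, 4, 7, 8, 9, 10, 19, 20, 21],
    [1.0, 1.0, 6.0, 1.0, 7.0, 1.0, 2.0, 2.0, 2.0, 4.0],
)
print(row)
```

Because index 0 goes to the most frequent category of each column, a missing position simply means that the row has the most common value for that feature.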

# Split the dataset into a training set and a test set

train_data, test_data = dfi.randomSplit([4.0, 1.0], 100)
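The weights `[4.0, 1.0]` do not need to sum to 1; they are normalized, so this is roughly an 80/20 split, and the second argument is the random seed. The sketch below illustrates the idea in plain Python: each row draws a uniform random number and lands in the split whose cumulative weight range contains it. The function `random_split` is a hypothetical illustration, not Spark's implementation, and like `randomSplit` it yields approximate rather than exact proportions.

```python
import random

def random_split(rows, weights, seed):
    """Assign each row to one split with probability proportional to its weight."""
    total = sum(weights)
    # Cumulative upper bounds of the normalized weights, e.g. [0.8, 1.0].
    bounds = []
    acc = 0.0
    for w in weights:
        acc += w / total
        bounds.append(acc)
    rng = random.Random(seed)
    splits = [[] for _ in weights]
    for row in rows:
        u = rng.random()
        for k, bound in enumerate(bounds):
            # The last split also catches any floating-point rounding gap.
            if u < bound or k == len(bounds) - 1:
                splits[k].append(row)
                break
    return splits

train_rows, test_rows = random_split(list(range(10000)), [4.0, 1.0], seed=100)
print(len(train_rows), len(test_rows))
```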

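Classification with the built-in classifiers:

Logistic regression, which supports both multinomial (softmax) and binomial logistic regression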

pyspark.ml.classification.LogisticRegression(featuresCol="features", labelCol="label", predictionCol="prediction", maxIter=100, regParam=0.0, elasticNetParam=0.0, tol=1e-6, fitIntercept=True, threshold=0.5, thresholds=None, probabilityCol="probability", rawPredictionCol="rawPrediction", standardization=True, weightCol=None, aggregationDepth=2, family="auto")

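Selected parameters

regParam: regularization parameter (>=0)

elasticNetParam: ElasticNet mixing parameter (alpha), in the range [0, 1]; alpha = 0 gives an L2 penalty and alpha = 1 gives an L1 penalty

fitIntercept: whether to fit an intercept term

standardization: whether to standardize the features before fitting the model

aggregationDepth: suggested depth for treeAggregate (>=2)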
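To show how `regParam` and `elasticNetParam` interact, here is a small sketch of the elastic-net penalty in the form given in the Spark ML documentation, `regParam * (alpha * ||w||_1 + (1 - alpha) / 2 * ||w||_2^2)`. The function name and the sample weights are hypothetical; this only evaluates the penalty term and is not how Spark fits the model.

```python
def elastic_net_penalty(weights, reg_param, elastic_net_param):
    """Elastic-net penalty: a mix of the L1 norm and half the squared L2 norm."""
    l1 = sum(abs(w) for w in weights)
    l2_squared = sum(w * w for w in weights)
    alpha = elastic_net_param
    return reg_param * (alpha * l1 + (1.0 - alpha) / 2.0 * l2_squared)

# Hypothetical coefficient vector.
w = [0.5, -2.0, 0.0]
pure_l2 = elastic_net_penalty(w, reg_param=0.01, elastic_net_param=0.0)
pure_l1 = elastic_net_penalty(w, reg_param=0.01, elastic_net_param=1.0)
print(pure_l2, pure_l1)
```

With the default `elasticNetParam=0.0`, the `regParam=0.01` used in the example that follows therefore applies a pure L2 penalty.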

from pyspark.ml.classification import LogisticRegression

blor = LogisticRegression(regParam=0.01)

blorModel = blor.fit(train_data)

result = blorModel.transform(test_data)

# Compute the accuracy

result.filter(result.label == result.prediction).count()/result.count()

# 0.9661954517516902
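Accuracy alone hides which kind of mistake the model makes, and for mushrooms a poisonous sample predicted as edible is the costly one. As a rough sketch, the function below derives accuracy, precision and recall from (label, prediction) pairs. It assumes label 1.0 is the poisonous class (StringIndexer gives index 0 to the most frequent label); the function name and the sample pairs are hypothetical.

```python
def binary_metrics(pairs, positive=1.0):
    """Compute accuracy, precision and recall from (label, prediction) pairs."""
    tp = fp = fn = tn = 0
    for label, prediction in pairs:
        if prediction == positive and label == positive:
            tp += 1
        elif prediction == positive:
            fp += 1
        elif label == positive:
            fn += 1
        else:
            tn += 1
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Hypothetical (label, prediction) pairs, not taken from the real run.
pairs = [(1.0, 1.0), (1.0, 0.0), (0.0, 0.0), (0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
accuracy, precision, recall = binary_metrics(pairs)
print(accuracy, precision, recall)
```

On a real result DataFrame the pairs could be obtained with something like `result.select('label', 'prediction').collect()`, at the cost of pulling the rows to the driver.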

Decision tree

pyspark.ml.classification.DecisionTreeClassifier(featuresCol='features', labelCol='label', predictionCol='prediction', probabilityCol='probability', rawPredictionCol='rawPrediction', maxDepth=5, maxBins=32, minInstancesPerNode=1, minInfoGain=0.0, maxMemoryInMB=256, cacheNodeIds=False, checkpointInterval=10, impurity='gini', seed=None)

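Selected parameters

checkpointInterval: checkpoint interval (>=1), or -1 to disable checkpointing; for example, 10 means the cache is checkpointed every 10 iterations

fit(dataset, params=None) method

impurity: criterion used to compute information gain; options: "entropy", "gini"

maxBins: maximum number of bins used to discretize continuous features; must be >=2 and >= the number of categories in any categorical feature

maxDepth: maximum depth of the tree

minInfoGain: minimum information gain required to split a node

minInstancesPerNode: minimum number of instances per node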
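The `impurity` and `minInfoGain` parameters are easier to reason about with the formulas in front of you. The sketch below computes Gini impurity, entropy (base 2) and the information gain of a candidate split from class counts. The function names and the example counts are hypothetical; this is the textbook definition, not Spark's internal code.

```python
import math

def gini(counts):
    """Gini impurity: 1 - sum of squared class proportions."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)

def entropy(counts):
    """Entropy in bits: -sum(p * log2(p)) over non-empty classes."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)

def info_gain(parent, left, right, impurity):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = sum(parent)
    weighted = sum(left) / n * impurity(left) + sum(right) / n * impurity(right)
    return impurity(parent) - weighted

# Hypothetical class counts [edible, poisonous] before and after a split.
parent, left, right = [50, 50], [40, 10], [10, 40]
print(gini(parent), entropy(parent))
print(info_gain(parent, left, right, gini))
```

A split is only kept when its gain exceeds `minInfoGain`, so raising that parameter prunes weak splits.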

from pyspark.ml.classification import DecisionTreeClassifier

dt = DecisionTreeClassifier(maxDepth=5)

dtModel = dt.fit(train_data)

result = dtModel.transform(test_data)

# accuracy

result.filter(result.label == result.prediction).count()/result.count()

# 1.0

Gradient boosting tree (GBT)

pyspark.ml.classification.GBTClassifier(featuresCol='features', labelCol='label', predictionCol='prediction', maxDepth=5, maxBins=32, minInstancesPerNode=1, minInfoGain=0.0, maxMemoryInMB=256, cacheNodeIds=False, checkpointInterval=10, lossType='logistic', maxIter=20, stepSize=0.1, seed=None, subsamplingRate=1.0)

Selected parameters

checkpointInterval: same as DecisionTreeClassifier

fit(dataset, params=None) method

lossType: loss function that GBT minimizes; options: logistic

maxBins: same as DecisionTreeClassifier

maxDepth: same as DecisionTreeClassifier

maxIter: maximum number of boosting iterations (>=0)

minInfoGain: same as DecisionTreeClassifier

minInstancesPerNode: same as DecisionTreeClassifier

stepSize: step size (learning rate) applied at each iteration

subsamplingRate: same as RandomForestClassifier
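To give a feel for how `maxIter` and `stepSize` work together, here is a deliberately simplified boosting sketch. Note the substitutions: it uses squared error and one-dimensional decision stumps instead of the logistic loss and full decision trees that GBTClassifier uses, and all names and data are hypothetical. Each iteration fits a stump to the current residuals and adds its output scaled by the step size.

```python
def fit_stump(xs, residuals):
    """Find the threshold split on x that minimizes squared error on the residuals."""
    best = None
    # Skip the largest value so the right-hand side is never empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm = sum(left) / len(left)
        rm = sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def boost(xs, ys, max_iter, step_size):
    """Additive model: each round adds step_size * stump fitted to the residuals."""
    preds = [0.0] * len(xs)
    for _ in range(max_iter):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        preds = [p + step_size * stump(x) for p, x in zip(preds, xs)]
    return preds

# Hypothetical toy data: a step function.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
mse_by_step = {}
for step in (0.1, 0.5):
    preds = boost(xs, ys, max_iter=20, step_size=step)
    mse_by_step[step] = sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)
print(mse_by_step)
```

A smaller step size needs more iterations to reach the same training error, which is why the two parameters are usually tuned together.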

from pyspark.ml.classification import GBTClassifier

gbt = GBTClassifier(maxDepth=5)

gbtModel = gbt.fit(train_data)

result = gbtModel.transform(test_data)

# accuracy

result.filter(result.label == result.prediction).count()/result.count()

# 1.0

Random forest

pyspark.ml.classification.RandomForestClassifier(featuresCol='features', labelCol='label', predictionCol='prediction', probabilityCol='probability', rawPredictionCol='rawPrediction', maxDepth=5, maxBins=32, minInstancesPerNode=1, minInfoGain=0.0, maxMemoryInMB=256, cacheNodeIds=False, checkpointInterval=10, impurity='gini', numTrees=20, featureSubsetStrategy='auto', seed=None, subsamplingRate=1.0)

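Selected parameters

checkpointInterval: same as DecisionTreeClassifier

featureSubsetStrategy: number of features to consider for splits at each tree node; options: "auto", "all", "onethird", "sqrt", "log2", "(0.0-1.0]", "[1-n]"

fit(dataset, params=None) method

impurity: same as DecisionTreeClassifier

maxBins: same as DecisionTreeClassifier

maxDepth: same as DecisionTreeClassifier

minInfoGain: same as DecisionTreeClassifier

numTrees: number of trees to train

subsamplingRate: fraction of the training data used to train each decision tree, in the range (0, 1]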
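The `featureSubsetStrategy` options translate into a concrete number of candidate features per node. The sketch below shows one plausible mapping for a classifier with 22 features. For classification, "auto" means "all" when `numTrees` is 1 and "sqrt" otherwise, as described in the Spark documentation; rounding up to the next integer is an assumption of this sketch, so verify it against your Spark version. The function name is hypothetical.

```python
import math

def features_per_node(strategy, num_features, num_trees):
    """Resolve a featureSubsetStrategy string to a feature count (classification)."""
    if strategy == "auto":
        strategy = "all" if num_trees == 1 else "sqrt"
    if strategy == "all":
        return num_features
    if strategy == "sqrt":
        return math.ceil(math.sqrt(num_features))  # rounding up is an assumption
    if strategy == "log2":
        return max(1, math.ceil(math.log2(num_features)))
    if strategy == "onethird":
        return math.ceil(num_features / 3.0)
    try:
        # "[1-n]": an explicit number of features, capped at the total.
        return min(int(strategy), num_features)
    except ValueError:
        # "(0.0-1.0]": a fraction of the features.
        return math.ceil(float(strategy) * num_features)

for s in ("auto", "all", "sqrt", "log2", "onethird", "0.5", "3"):
    print(s, features_per_node(s, num_features=22, num_trees=10))
```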

from pyspark.ml.classification import RandomForestClassifier

rf = RandomForestClassifier(numTrees=10, maxDepth=5)

rfModel = rf.fit(train_data)

result = rfModel.transform(test_data)

# accuracy

result.filter(result.label == result.prediction).count()/result.count()

# 1.0

Naive Bayes

pyspark.ml.classification.NaiveBayes(featuresCol='features', labelCol='label', predictionCol='prediction', probabilityCol='probability', rawPredictionCol='rawPrediction', smoothing=1.0, modelType='multinomial', thresholds=None, weightCol=None)

Selected parameters

modelType: options: multinomial and bernoulli

smoothing: smoothing parameter; should be >=0, default 1.0
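The effect of `smoothing` can be seen in a few lines. The sketch below applies additive (Laplace) smoothing to per-class feature totals, as in the textbook multinomial Naive Bayes estimate `(count + smoothing) / (total + smoothing * n_features)`. The function name and the counts are hypothetical; Spark works with the logarithms of these quantities internally.

```python
def smoothed_feature_probs(feature_totals, smoothing):
    """Estimate P(feature | class) from summed feature values with additive smoothing."""
    total = sum(feature_totals)
    n_features = len(feature_totals)
    return [(c + smoothing) / (total + smoothing * n_features) for c in feature_totals]

# Hypothetical per-class totals for three features.
totals = [3.0, 0.0, 1.0]
unsmoothed = smoothed_feature_probs(totals, smoothing=0.0)
smoothed = smoothed_feature_probs(totals, smoothing=1.0)
print(unsmoothed)
print(smoothed)
```

Without smoothing, a feature never seen with a class gets probability zero and vetoes that class outright. Keep in mind that the multinomial model reads feature values as non-negative counts, whereas the features here are category indices.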

from pyspark.ml.classification import NaiveBayes

nb = NaiveBayes()

nbModel = nb.fit(train_data)

result = nbModel.transform(test_data)

#accuracy

result.filter(result.label == result.prediction).count()/result.count()

#0.9231714812538414

Support vector machine (SVM)

pyspark.ml.classification.LinearSVC(featuresCol='features', labelCol='label', predictionCol='prediction', maxIter=100, regParam=0.0, tol=1e-06, rawPredictionCol='rawPrediction', fitIntercept=True, standardization=True, threshold=0.0, weightCol=None, aggregationDepth=2)

from pyspark.ml.classification import LinearSVC

svm = LinearSVC(maxIter=10, regParam=0.01)

svmModel = svm.fit(train_data)

result = svmModel.transform(test_data)

# accuracy

result.filter(result.label == result.prediction).count()/result.count()

# 0.9797172710510141
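LinearSVC fits a linear decision function by minimizing the hinge loss with L2 regularization, and it predicts class 1 when the raw score exceeds `threshold` (0.0 by default). The following sketch spells out both pieces; the function names and the sample scores are hypothetical, and labels are mapped from {0, 1} to {-1, +1} as in the usual formulation.

```python
def hinge_loss(label, raw_score):
    """Hinge loss max(0, 1 - y * f(x)) with the 0/1 label mapped to -1/+1."""
    y = 2.0 * label - 1.0
    return max(0.0, 1.0 - y * raw_score)

def predict(raw_score, threshold=0.0):
    """Predict 1.0 when the raw score is above the threshold, else 0.0."""
    return 1.0 if raw_score > threshold else 0.0

# Hypothetical raw scores w.x + b for a few samples.
print(hinge_loss(1.0, 2.0))   # correct and outside the margin: no loss
print(hinge_loss(1.0, 0.5))   # correct but inside the margin: small loss
print(hinge_loss(0.0, 0.5))   # wrong side of the boundary: larger loss
print(predict(0.5), predict(-0.1))
```

Samples that are classified correctly with a margin of at least 1 contribute nothing to the loss, so only points near or beyond the boundary shape the model.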