Copyright notice: knowledge is meant to be shared! https://blog.csdn.net/weixin_36171533/article/details/82790968

Resource Metrics API and Custom Metrics API

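Resource metrics: metrics-server

Custom metrics: Prometheus together with k8s-prometheus-adapter

Since Kubernetes 1.8, resource usage is exposed through a dedicated resource metrics API.

Kubernetes cannot consume Prometheus metrics directly. k8s-prometheus-adapter has to translate them into the custom metrics API first.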

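The new-generation architecture:

Core metrics pipeline:

This pipeline is made up of the kubelet, metrics-server, and the API that the API server exposes for them (an APIService). It provides cumulative CPU usage, real-time memory usage, pod resource usage and container disk usage.

Monitoring pipeline:

This pipeline collects all kinds of metrics from the system and serves them to end users, storage systems and the HPA. It covers the core metrics plus many non-core metrics, which Kubernetes itself cannot interpret.

metrics-server: an APIService. It is registered with the main API server through API aggregation and serves the metrics.k8s.io API group.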
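The aggregation can be checked directly. The sketch below asks whether the APIService that metrics-server registers reports itself as Available. It assumes the APIService is named v1beta1.metrics.k8s.io, which is the name used by the addon manifests, and that kubectl is already configured for the cluster. `apiservice_available` is a hypothetical helper name.

```shell
# Hypothetical helper: print the "Available" condition of an APIService.
# Usage: apiservice_available v1beta1.metrics.k8s.io   -> prints True or False
apiservice_available() {
  local name="${1:-v1beta1.metrics.k8s.io}"
  # The jsonpath filter selects the condition whose type is "Available".
  kubectl get apiservice "$name" \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}'
  echo
}
```

The same check works for v1beta1.custom.metrics.k8s.io once k8s-prometheus-adapter is installed.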

Uninstall the components from the previous lab (Heapster, InfluxDB and Grafana):

[root@master influxdb]# ls

grafana.yaml heapster-rbac.yaml heapster.yaml influxdb.yaml

[root@master influxdb]# kubectl delete -f ./

deployment.apps "monitoring-grafana" deleted

service "monitoring-grafana" deleted

clusterrolebinding.rbac.authorization.k8s.io "heapster" deleted

serviceaccount "heapster" deleted

deployment.apps "heapster" deleted

service "heapster" deleted

deployment.apps "monitoring-influxdb" deleted

service "monitoring-influxdb" deleted

[root@master influxdb]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 14d

kubernetes-dashboard NodePort 10.108.38.237 <none> 443:31619/TCP 4d

Kubernetes metrics-server reference documentation:

https://github.com/kubernetes-incubator/metrics-server

YAML manifests used:

https://github.com/kubernetes-incubator/metrics-server/tree/master/deploy/1.8%2B

or

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server (recommended)

Download the six files (they are applied after the edits below):

for file in auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml; do
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/metrics-server/$file
done

##########################

Note: the following changes are required:

##########################

metrics-server-deployment.yaml (comment out the original --source line and add the new one):

# - --source=kubernetes.summary_api:''

- --source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true
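If you prefer not to edit the file by hand, the change can be scripted. This is a minimal sketch. It assumes the Deployment still contains the exact text `--source=kubernetes.summary_api:''` and it uses GNU `sed -i`. Unlike the manual edit, it replaces the value in place instead of keeping a commented-out copy. `patch_source_flag` is a hypothetical helper name.

```shell
# Hypothetical helper: point metrics-server's --source flag at the kubelet's
# HTTPS port (10250) and skip certificate verification, as described above.
# Usage: patch_source_flag metrics-server-deployment.yaml
patch_source_flag() {
  local file="$1"
  # In a sed replacement "&" means "the matched text", so it is escaped as "\&".
  local new='--source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true\&kubeletPort=10250\&insecure=true'
  sed -i "s|--source=kubernetes.summary_api:''|${new}|" "$file"
}
```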

resource-reader.yaml

  resources:
  - pods
  - nodes
  - namespaces
  - nodes/stats   # added
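You can confirm that the extra nodes/stats permission took effect by asking the API server whether the metrics-server service account may read that subresource. This sketch makes three assumptions: the service account is metrics-server in kube-system (as in the addon manifests), the RBAC objects have already been applied, and your kubectl supports `auth can-i --subresource`. The helper name is hypothetical.

```shell
# Hypothetical helper: can the metrics-server service account read nodes/stats?
# Prints "yes" or "no" (kubectl also exits non-zero on "no").
can_read_node_stats() {
  local sa="${1:-system:serviceaccount:kube-system:metrics-server}"
  kubectl auth can-i get nodes --subresource=stats --as="$sa"
}
```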

Apply them:

kubectl apply -f ./

[root@master metrics]# kubectl api-versions

admissionregistration.k8s.io/v1beta1

apiextensions.k8s.io/v1beta1

apiregistration.k8s.io/v1

apiregistration.k8s.io/v1beta1

apps/v1

apps/v1beta1

apps/v1beta2

authentication.k8s.io/v1

authentication.k8s.io/v1beta1

authorization.k8s.io/v1

authorization.k8s.io/v1beta1

autoscaling/v1

autoscaling/v2beta1

batch/v1

batch/v1beta1

certificates.k8s.io/v1beta1

crd.projectcalico.org/v1

events.k8s.io/v1beta1

extensions/v1beta1

metrics.k8s.io/v1beta1 # the metrics API group; its presence means the installation succeeded

networking.k8s.io/v1

policy/v1beta1

rbac.authorization.k8s.io/v1

rbac.authorization.k8s.io/v1beta1

scheduling.k8s.io/v1beta1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1
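You do not have to scan the whole list by eye; you can filter it for one group/version instead. The sketch below is minimal, and `has_api_group` is a hypothetical helper name. The same call also works later for custom.metrics.k8s.io/v1beta1.

```shell
# Hypothetical helper: succeed (exit 0) if the given group/version is served.
# Usage: has_api_group metrics.k8s.io/v1beta1 && echo registered
has_api_group() {
  # -x matches the whole line, -F treats the argument as a fixed string.
  kubectl api-versions | grep -qxF -- "$1"
}
```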

[root@master metrics]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

canal-98mcn 3/3 Running 0 3d

canal-gnp5r 3/3 Running 3 3d

coredns-78fcdf6894-27npt 1/1 Running 3 15d

coredns-78fcdf6894-mbg8n 1/1 Running 3 15d

etcd-master 1/1 Running 3 15d

kube-apiserver-master 1/1 Running 3 15d

kube-controller-manager-master 1/1 Running 3 15d

kube-flannel-ds-amd64-6ws6q 1/1 Running 1 3d

kube-flannel-ds-amd64-mg9sm 1/1 Running 3 3d

kube-flannel-ds-amd64-sq9wj 1/1 Running 0 3d

kube-proxy-g9n4d 1/1 Running 3 15d

kube-proxy-wrqt8 1/1 Running 2 15d

kube-proxy-x7vc2 1/1 Running 1 15d

kube-scheduler-master 1/1 Running 3 15d

kubernetes-dashboard-767dc7d4d-cj75v 1/1 Running 0 1d

metrics-server-v0.2.1-5cf9489f67-vzdvt 2/2 Running 0 1h # this status is normal

##########################

On the master, open a new terminal and start a proxy to the API server on port 8080:

[root@master ~]# kubectl proxy --port=8080

Starting to serve on 127.0.0.1:8080
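kubectl proxy is convenient for curl. The same endpoints can also be fetched without a proxy through `kubectl get --raw`, which reuses kubectl's own credentials. The sketch below is small, and the helper name is hypothetical.

```shell
# Hypothetical helper: fetch a path under the resource metrics API.
# Usage: metrics_raw nodes
#        metrics_raw namespaces/kube-system/pods
metrics_raw() {
  kubectl get --raw "/apis/metrics.k8s.io/v1beta1/${1:-nodes}"
}
```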

The data is returned successfully:

[root@master metrics]# curl http://localhost:8080/apis/metrics.k8s.io/v1beta1/nodes

{

"kind": "NodeMetricsList",

"apiVersion": "metrics.k8s.io/v1beta1",

"metadata": {

"selfLink": "/apis/metrics.k8s.io/v1beta1/nodes"

},

"items": [

{

"metadata": {

"name": "master",

"selfLink": "/apis/metrics.k8s.io/v1beta1/nodes/master",

"creationTimestamp": "2018-09-20T05:10:31Z"

},

"timestamp": "2018-09-20T05:10:00Z",

"window": "1m0s",

"usage": {

"cpu": "68m",

"memory": "1106256Ki"

}

},

{

"metadata": {

"name": "node1",

"selfLink": "/apis/metrics.k8s.io/v1beta1/nodes/node1",

"creationTimestamp": "2018-09-20T05:10:31Z"

},

"timestamp": "2018-09-20T05:10:00Z",

"window": "1m0s",

"usage": {

"cpu": "29m",

"memory": "478428Ki"

}

},

{

"metadata": {

"name": "node2",

"selfLink": "/apis/metrics.k8s.io/v1beta1/nodes/node2",

"creationTimestamp": "2018-09-20T05:10:31Z"

},

"timestamp": "2018-09-20T05:10:00Z",

"window": "1m0s",

"usage": {

"cpu": "36m",

"memory": "416112Ki"

}

}

]

}

Test with kubectl top (note: it can take a little while before metrics become available):

[root@master metrics]# kubectl top nodes

error: metrics not available yet

[root@master metrics]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 67m 6% 1078Mi 62%

node1 28m 2% 469Mi 53%

node2 34m 3% 403Mi 46%

######################################################
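The numbers from kubectl top and from the raw API agree once the units are converted. The API reports memory in Ki and CPU in millicores (m), and kubectl top expresses each percentage relative to the node's allocatable capacity. The helpers below reproduce that arithmetic with integers. The allocatable values used in the usage note are assumptions (about 1000m CPU and roughly 1.7Gi memory for the master would fit its 6% and 62%); they were not read from this cluster.

```shell
# Hypothetical helpers for the unit arithmetic behind `kubectl top nodes`.

# Convert a Ki quantity such as 1106256Ki to whole Mi (integer division).
ki_to_mi() {
  local ki="${1%Ki}"          # strip the "Ki" suffix
  echo $(( ki / 1024 ))
}

# Convert a CPU quantity in millicores such as 68m to a plain integer.
milli() {
  echo "${1%m}"
}

# Integer percentage of usage over allocatable (both in the same unit).
pct() {
  echo $(( $1 * 100 / $2 ))
}
```

For example, `ki_to_mi 1106256Ki` prints 1080, close to the 1078Mi that kubectl top reported a little later. `pct "$(milli 67m)" 1000` prints 6, which matches the master's CPU% if its allocatable CPU is 1000m.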

Custom metrics: Prometheus and k8s-prometheus-adapter

Note: Kubernetes cannot interpret Prometheus metrics directly. k8s-prometheus-adapter converts them into an API that Kubernetes understands.

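k8s-prometheus-adapter does not scrape the nodes itself. Prometheus collects the data from the nodes, and the adapter queries Prometheus.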

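Prometheus is a very powerful system that exposes a RESTful API.

k8s-prometheus-adapter turns the results of PromQL queries into the Custom Metrics API. In other words, the Custom Metrics API is served by k8s-prometheus-adapter.

Each node keeps its own container logs locally, for example on node1: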

[root@node1 ~]# cd /var/log/containers/

[root@node1 containers]# ls

canal-gnp5r_kube-system_calico-node-147ea4221691816daec058f339ff00955671d8e447ebe80e55db567cfe048b0b.log

canal-gnp5r_kube-system_calico-node-920a4bc03378bb20c9cedad09371f7ca54b7a3a5bbbae9f110398e15dbf2fcb9.log

canal-gnp5r_kube-system_install-cni-19934c343c79ef6e14486f6cdd90c373f53e05af6a7591fab985842ae79fcc40.log

canal-gnp5r_kube-system_install-cni-cc52f958f08409bb0c7dafbd8c246dcf4fe9e7c1291f98d00b3827ebf34f5973.log

canal-gnp5r_kube-system_kube-flannel-0089fda34bbe4bf8d13cd66d5a80fa8282b4528d7ab40b467d68891d7bfbca29.log

canal-gnp5r_kube-system_kube-flannel-c42d355765d21ad0b45a4867790bdb78005058dad90dcd76c6836ffe85570af5.log

kube-flannel-ds-amd64-6ws6q_kube-system_install-cni-c7399a596f6c6903c6bcc34fd1c770791dc21421ed8da6929cb44fd4baaceee6.log

kube-flannel-ds-amd64-6ws6q_kube-system_kube-flannel-95a5f4c7038c7ef20d999b4863e410fd9479913adc146674d9d02c4f467b6671.log

kube-flannel-ds-amd64-6ws6q_kube-system_kube-flannel-c524d27b095f2b9f05bca5d8ba01b3adfa752e4572d02b2ad8f774f211fa979f.log

kube-proxy-x7vc2_kube-system_kube-proxy-50ec6393d30b1e0288780d013a0107a5f68ef75d2a6cf2e6722dd37d86da875a.log

kube-proxy-x7vc2_kube-system_kube-proxy-8949de853f87ec4780c1cde0cac69a34e537180adb8278a03bf50b32718d7843.log

Prometheus installation manifests:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

Use Ma Ge's (马哥) modified version of the Prometheus manifests:

git clone https://github.com/jesssecat/k8s-prom.git

Installation steps:

1. Create the namespace

[root@master k8s-prom]# kubectl apply -f namespace.yaml

namespace/prom created

2. Install node_exporter

[root@master k8s-prom]# cd node_exporter/

[root@master node_exporter]# ls

node-exporter-ds.yaml node-exporter-svc.yaml

[root@master node_exporter]# kubectl apply -f ./

daemonset.apps/prometheus-node-exporter created

service/prometheus-node-exporter created

Verify that node_exporter is running:

[root@master node_exporter]# kubectl get pods -n prom

NAME READY STATUS RESTARTS AGE

prometheus-node-exporter-4xnhj 1/1 Running 0 2m

prometheus-node-exporter-5z6gd 1/1 Running 0 2m

prometheus-node-exporter-6dwnk 1/1 Running 0 2m

3. Install Prometheus

[root@master k8s-prom]# ls

k8s-prometheus-adapter kube-state-metrics namespace.yaml node_exporter podinfo prometheus README.md

[root@master k8s-prom]# cd prometheus/

[root@master prometheus]# ls

prometheus-cfg.yaml prometheus-deploy.yaml prometheus-rbac.yaml prometheus-svc.yaml

[root@master prometheus]# kubectl apply -f ./

configmap/prometheus-config created

deployment.apps/prometheus-server created

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

Check the status:

[root@master prometheus]# kubectl get all -n prom

NAME READY STATUS RESTARTS AGE

pod/prometheus-node-exporter-4xnhj 1/1 Running 0 6m

pod/prometheus-node-exporter-5z6gd 1/1 Running 0 6m

pod/prometheus-node-exporter-6dwnk 1/1 Running 0 6m

pod/prometheus-server-65f5d59585-jr7lx 0/1 Pending 0 2m # not started

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 2m

service/prometheus-node-exporter ClusterIP None <none> 9100/TCP 6m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-node-exporter 3 3 3 3 3 <none> 6m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus-server 1 1 1 0 2m

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-server-65f5d59585 1 1 0 2m

Note:

service/prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 2m

Prometheus listens on port 9090 inside the cluster, and the NodePort Service exposes it externally on port 30090.

Find out why the pod is Pending:

[root@master prometheus]# kubectl describe pod/prometheus-server-65f5d59585-jr7lx -n prom

Name: prometheus-server-65f5d59585-jr7lx

Namespace: prom

Priority: 0

PriorityClassName: <none>

Node: <none>

Labels: app=prometheus

component=server

pod-template-hash=2191815141

Annotations: prometheus.io/scrape=false

Status: Pending

IP:

Controlled By: ReplicaSet/prometheus-server-65f5d59585

Containers:

prometheus:

Image: prom/prometheus:v2.2.1

Port: 9090/TCP

Host Port: 0/TCP

Command:

prometheus

--config.file=/etc/prometheus/prometheus.yml

--storage.tsdb.path=/prometheus

--storage.tsdb.retention=720h

Limits:

memory: 2Gi

Requests:

memory: 2Gi

Environment: <none>

Mounts:

/etc/prometheus/prometheus.yml from prometheus-config (rw)

/prometheus/ from prometheus-storage-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from prometheus-token-rfqgx (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

prometheus-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: prometheus-config

Optional: false

prometheus-storage-volume:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

prometheus-token-rfqgx:

Type: Secret (a volume populated by a Secret)

SecretName: prometheus-token-rfqgx

Optional: false

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 4s (x92 over 5m) default-scheduler 0/3 nodes are available: 3 Insufficient memory.

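The last line says that none of the three nodes has enough memory. The container sets a 2Gi memory limit and no request, so the request defaults to the limit (2Gi), and the scheduler cannot find a node with that much allocatable memory.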
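Before changing the manifest, you can compare what each node can actually hand out with the 2Gi request, and list only the scheduling failures. The sketch below uses kubectl's custom-columns output and an events field selector. The helper names are hypothetical.

```shell
# Hypothetical helpers for diagnosing "Insufficient memory".

# List each node's allocatable CPU and memory (what the scheduler can hand out).
node_allocatable() {
  kubectl get nodes \
    -o custom-columns=NAME:.metadata.name,CPU:.status.allocatable.cpu,MEMORY:.status.allocatable.memory
}

# Show only the scheduling failures in a namespace (default: prom).
failed_scheduling() {
  kubectl get events -n "${1:-prom}" --field-selector reason=FailedScheduling
}
```

If every node reports less allocatable memory than the request, the pod stays Pending until the request is lowered or removed. Judging from the kubectl top percentages earlier, the nodes here have roughly 1 to 1.7Gi each, although that is only an estimate.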

Lower the memory requirement by commenting out the resources block in the Deployment:

[root@master prometheus]# cat prometheus-deploy.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: prom
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1
        imagePullPolicy: Always
        command:
          - prometheus
          - --config.file=/etc/prometheus/prometheus.yml
          - --storage.tsdb.path=/prometheus
          - --storage.tsdb.retention=720h
        ports:
        - containerPort: 9090
          protocol: TCP
        #resources:         # commented out
        #  limits:          # commented out
        #    memory: 2Gi    # commented out
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
        - name: prometheus-config
          configMap:
            name: prometheus-config
            items:
              - key: prometheus.yml
                path: prometheus.yml
                mode: 0644
        - name: prometheus-storage-volume
          emptyDir: {}

Apply it again:

kubectl apply -f prometheus-deploy.yaml

[root@master prometheus]# kubectl get pods -n prom

NAME READY STATUS RESTARTS AGE

prometheus-node-exporter-4xnhj 1/1 Running 0 14m

prometheus-node-exporter-5z6gd 1/1 Running 0 14m

prometheus-node-exporter-6dwnk 1/1 Running 0 14m

prometheus-server-7c8554cf-9qfgs 1/1 Running 0 1m # the server is running now
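Removing the limit works, but it leaves Prometheus with no memory bound at all. An alternative is to keep a smaller request and limit using `kubectl set resources`, which updates the Deployment and triggers a new rollout. The sketch below uses illustrative values: 256Mi and 1Gi are assumptions, not tuned numbers. The helper name is hypothetical.

```shell
# Hypothetical helper: give prometheus-server a smaller memory request/limit
# instead of deleting the resources block. The defaults are illustrative only.
# Usage: shrink_prometheus_memory 256Mi 1Gi
shrink_prometheus_memory() {
  local request="${1:-256Mi}" limit="${2:-1Gi}"
  kubectl -n prom set resources deployment prometheus-server \
    --requests=memory="$request" --limits=memory="$limit"
}
```

Size both values to your own retention period and number of scrape targets.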

Check the Prometheus logs:

[root@master prometheus]# kubectl logs prometheus-server-7c8554cf-9qfgs -n prom

level=info ts=2018-09-20T05:56:40.885481171Z caller=main.go:220 msg="Starting Prometheus" version="(version=2.2.1, branch=HEAD, revision=bc6058c81272a8d938c05e75607371284236aadc)"

level=info ts=2018-09-20T05:56:40.885724431Z caller=main.go:221 build_context="(go=go1.10, user=root@149e5b3f0829, date=20180314-14:15:45)"

level=info ts=2018-09-20T05:56:40.88574749Z caller=main.go:222 host_details="(Linux 3.10.0-862.el7.x86_64 #1 SMP Fri Apr 20 16:44:24 UTC 2018 x86_64 prometheus-server-7c8554cf-9qfgs (none))"

level=info ts=2018-09-20T05:56:40.885759306Z caller=main.go:223 fd_limits="(soft=65536, hard=65536)"

level=info ts=2018-09-20T05:56:40.888318801Z caller=main.go:504 msg="Starting TSDB ..."

level=info ts=2018-09-20T05:56:40.916004469Z caller=web.go:382 component=web msg="Start listening for connections" address=0.0.0.0:9090

level=info ts=2018-09-20T05:56:40.924972171Z caller=main.go:514 msg="TSDB started"

level=info ts=2018-09-20T05:56:40.925004887Z caller=main.go:588 msg="Loading configuration file" filename=/etc/prometheus/prometheus.yml

level=info ts=2018-09-20T05:56:40.926337579Z caller=kubernetes.go:191 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"

level=info ts=2018-09-20T05:56:40.926946893Z caller=kubernetes.go:191 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"

level=info ts=2018-09-20T05:56:40.92744512Z caller=kubernetes.go:191 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"

level=info ts=2018-09-20T05:56:40.928130239Z caller=kubernetes.go:191 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"

level=info ts=2018-09-20T05:56:40.928784939Z caller=kubernetes.go:191 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"

level=info ts=2018-09-20T05:56:40.929518073Z caller=main.go:491 msg="Server is ready to receive web requests." # the web UI is ready

Open the web UI in a browser:

http://192.168.68.30:30090/graph

The page loads normally.
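Besides the graph page, Prometheus answers PromQL queries through its HTTP API, and that API is also what k8s-prometheus-adapter queries. The sketch below uses curl and is minimal. The NodePort address comes from this lab, and the helper name is hypothetical. The query `up` returns one series per scrape target, with the value 1 when that target is reachable.

```shell
# Hypothetical helper: run an instant PromQL query against Prometheus's HTTP API.
# Usage: prom_query up
#        prom_query 'count(up == 1)'
PROM_URL="${PROM_URL:-http://192.168.68.30:30090}"

prom_query() {
  # -G turns the --data-urlencode parameter into a GET query string.
  curl -sG "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}
```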

4. Deploy kube-state-metrics

[root@master kube-state-metrics]# ls

kube-state-metrics-deploy.yaml kube-state-metrics-rbac.yaml kube-state-metrics-svc.yaml

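What it does: kube-state-metrics watches the API server and exposes the state of Kubernetes objects (Deployments, Pods, Nodes and so on) as metrics in Prometheus format, so that Prometheus can scrape them.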
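Once it is running, you can inspect its output through the API server's service proxy. This sketch assumes the Service is kube-state-metrics in the prom namespace on port 8080, as listed in this step. It filters for one metric family, kube_deployment_status_replicas_available. The helper name is hypothetical.

```shell
# Hypothetical helper: fetch kube-state-metrics' /metrics through the API server
# service proxy and keep only the lines of one metric family.
# Usage: ksm_metric kube_deployment_status_replicas_available
ksm_metric() {
  kubectl get --raw "/api/v1/namespaces/prom/services/kube-state-metrics:8080/proxy/metrics" \
    | grep -- "^$1"
}
```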

[root@master kube-state-metrics]# kubectl apply -f ./

deployment.apps/kube-state-metrics created

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

service/kube-state-metrics created

Check the status:

[root@master kube-state-metrics]# kubectl get all -n prom

NAME READY STATUS RESTARTS AGE

pod/kube-state-metrics-58dffdf67d-mwfz9 1/1 Running 0 1m

pod/prometheus-node-exporter-4xnhj 1/1 Running 0 24m

pod/prometheus-node-exporter-5z6gd 1/1 Running 0 24m

pod/prometheus-node-exporter-6dwnk 1/1 Running 0 24m

pod/prometheus-server-7c8554cf-9qfgs 1/1 Running 0 11m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-state-metrics ClusterIP 10.105.236.184 <none> 8080/TCP 1m

service/prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 20m

service/prometheus-node-exporter ClusterIP None <none> 9100/TCP 24m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-node-exporter 3 3 3 3 3 <none> 24m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/kube-state-metrics 1 1 1 1 1m

deployment.apps/prometheus-server 1 1 1 1 20m

NAME DESIRED CURRENT READY AGE

replicaset.apps/kube-state-metrics-58dffdf67d 1 1 1 1m

replicaset.apps/prometheus-server-65f5d59585 0 0 0 20m

replicaset.apps/prometheus-server-7c8554cf 1 1 1 11m

5. Deploy k8s-prometheus-adapter

[root@master k8s-prom]# cd k8s-prometheus-adapter/

[root@master k8s-prometheus-adapter]# ls

custom-metrics-apiserver-auth-delegator-cluster-role-binding.yaml custom-metrics-apiserver-service.yaml

custom-metrics-apiserver-auth-reader-role-binding.yaml custom-metrics-apiservice.yaml

custom-metrics-apiserver-deployment.yaml custom-metrics-cluster-role.yaml

custom-metrics-apiserver-resource-reader-cluster-role-binding.yaml custom-metrics-resource-reader-cluster-role.yaml

custom-metrics-apiserver-service-account.yaml hpa-custom-metrics-cluster-role-binding.yaml

Note:

custom-metrics-apiserver-deployment.yaml mounts its serving certificate from a Secret:

secret:
  secretName: cm-adapter-serving-certs   # the Secret created below must use this name

Generate the certificate.

In a new terminal:

[root@master ~]# cd /etc/kubernetes/pki/

[root@master pki]# ls

apiserver.crt apiserver.key ca.crt dashboard.crt etcd front-proxy-client.crt jesse.csr sa.pub

apiserver-etcd-client.crt apiserver-kubelet-client.crt ca.key dashboard.csr front-proxy-ca.crt front-proxy-client.key jesse.key

apiserver-etcd-client.key apiserver-kubelet-client.key ca.srl dashboard.key front-proxy-ca.key jesse.crt sa.key

[root@master pki]# (umask 077; openssl genrsa -out serving.key 2048)

Generating RSA private key, 2048 bit long modulus

..+++

..........................................+++

e is 65537 (0x10001)

[root@master pki]# ls

apiserver.crt apiserver.key ca.crt dashboard.crt etcd front-proxy-client.crt jesse.csr sa.pub

apiserver-etcd-client.crt apiserver-kubelet-client.crt ca.key dashboard.csr front-proxy-ca.crt front-proxy-client.key jesse.key serving.key

apiserver-etcd-client.key apiserver-kubelet-client.key ca.srl dashboard.key front-proxy-ca.key jesse.crt sa.key

Create a certificate signing request and sign it with the cluster CA (valid for 3650 days):

[root@master pki]# openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"

[root@master pki]# openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key -CAcreateserial -out serving.crt -days 3650

Signature ok

subject=/CN=serving

Getting CA Private Key

This produces serving.key, serving.csr and serving.crt.
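Before putting the files into a Secret, you can check that serving.crt really chains to the cluster CA and carries the expected subject. The sketch below uses the standard openssl `verify` and `x509` subcommands. It assumes you run it in /etc/kubernetes/pki. The helper name is hypothetical.

```shell
# Hypothetical helper: check that a certificate was signed by the given CA,
# then print its subject and expiry date.
# Usage (in /etc/kubernetes/pki): check_serving_cert ca.crt serving.crt
check_serving_cert() {
  local ca="$1" cert="$2"
  openssl verify -CAfile "$ca" "$cert" || return 1
  openssl x509 -in "$cert" -noout -subject -enddate
}
```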

Create the Secret:

[root@master pki]# kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n prom

secret/cm-adapter-serving-certs created

Check that it exists in the prom namespace:

[root@master pki]# kubectl get secret -n prom

NAME TYPE DATA AGE

cm-adapter-serving-certs Opaque 2 1m # created successfully

default-token-h6ljh kubernetes.io/service-account-token 3 1h

kube-state-metrics-token-dbq2z kubernetes.io/service-account-token 3 39m

prometheus-token-rfqgx kubernetes.io/service-account-token 3 58m

6. Install k8s-prometheus-adapter

[root@master k8s-prometheus-adapter]# ls

custom-metrics-apiserver-auth-delegator-cluster-role-binding.yaml custom-metrics-apiserver-service.yaml

custom-metrics-apiserver-auth-reader-role-binding.yaml custom-metrics-apiservice.yaml

custom-metrics-apiserver-deployment.yaml custom-metrics-cluster-role.yaml

custom-metrics-apiserver-resource-reader-cluster-role-binding.yaml custom-metrics-resource-reader-cluster-role.yaml

custom-metrics-apiserver-service-account.yaml hpa-custom-metrics-cluster-role-binding.yaml

[root@master k8s-prometheus-adapter]# kubectl apply -f ./

clusterrolebinding.rbac.authorization.k8s.io/custom-metrics:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/custom-metrics-auth-reader created

deployment.apps/custom-metrics-apiserver created

clusterrolebinding.rbac.authorization.k8s.io/custom-metrics-resource-reader created

serviceaccount/custom-metrics-apiserver created

service/custom-metrics-apiserver created

apiservice.apiregistration.k8s.io/v1beta1.custom.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/custom-metrics-server-resources created

clusterrole.rbac.authorization.k8s.io/custom-metrics-resource-reader created

clusterrolebinding.rbac.authorization.k8s.io/hpa-controller-custom-metrics created

Check the result:

[root@master k8s-prometheus-adapter]# kubectl get all -n prom

NAME READY STATUS RESTARTS AGE

pod/custom-metrics-apiserver-5f6b4d857d-m42z2 0/1 Error 2 1m # failed

pod/kube-state-metrics-58dffdf67d-mwfz9 1/1 Running 0 42m

pod/prometheus-node-exporter-4xnhj 1/1 Running 0 1h

pod/prometheus-node-exporter-5z6gd 1/1 Running 0 1h

pod/prometheus-node-exporter-6dwnk 1/1 Running 0 1h

pod/prometheus-server-7c8554cf-9qfgs 1/1 Running 0 52m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/custom-metrics-apiserver ClusterIP 10.103.178.155 <none> 443/TCP 1m

service/kube-state-metrics ClusterIP 10.105.236.184 <none> 8080/TCP 42m

service/prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 1h

service/prometheus-node-exporter ClusterIP None <none> 9100/TCP 1h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-node-exporter 3 3 3 3 3 <none> 1h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/custom-metrics-apiserver 1 1 1 0 1m

deployment.apps/kube-state-metrics 1 1 1 1 42m

deployment.apps/prometheus-server 1 1 1 1 1h

NAME DESIRED CURRENT READY AGE

replicaset.apps/custom-metrics-apiserver-5f6b4d857d 1 1 0 1m

replicaset.apps/kube-state-metrics-58dffdf67d 1 1 1 42m

replicaset.apps/prometheus-server-65f5d59585 0 0 0 1h

replicaset.apps/prometheus-server-7c8554cf 1 1 1 52m

Check the error log:

kubectl logs pod/custom-metrics-apiserver-5f6b4d857d-m42z2 -n prom

panic: unknown flag: --rate-interval # the error

goroutine 1 [running]:

main.main()

/go/src/github.com/directxman12/k8s-prometheus-adapter/cmd/adapter/adapter.go:41 +0x13b
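You can confirm that the Deployment really passes the rejected flag by printing the container arguments with jsonpath and searching them. This sketch assumes the flags are set in the first container's args field; if the manifest uses command instead, query .command. The helper name is hypothetical.

```shell
# Hypothetical helper: succeed if a Deployment's first container passes a flag.
# Usage: deploy_uses_flag custom-metrics-apiserver --rate-interval && echo "flag present"
deploy_uses_flag() {
  local name="$1" flag="$2" ns="${3:-prom}"
  kubectl -n "$ns" get deployment "$name" \
    -o jsonpath='{.spec.template.spec.containers[0].args}' | grep -qF -- "$flag"
}
```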

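The adapter's configuration has changed, and the old manifest passes a flag that the current adapter no longer accepts. Fetch the current manifest from the author's GitHub repository instead: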

https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/deploy/manifests/custom-metrics-apiserver-deployment.yaml

Back up the original file by renaming it:

[root@master k8s-prometheus-adapter]# mv custom-metrics-apiserver-deployment.yaml{,.bak}

Download the new file:

wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-apiserver-deployment.yaml

Edit the file:

vim custom-metrics-apiserver-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: custom-metrics-apiserver
  name: custom-metrics-apiserver
  namespace: prom   # change the namespace to prom

Also download the adapter's ConfigMap:

wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-config-map.yaml

vim custom-metrics-config-map.yaml

Change its namespace:

apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
  namespace: prom
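Both namespace edits can also be made with sed. This is a minimal sketch that rewrites every `namespace:` value in a manifest to prom. It assumes the files use plain `namespace: <name>` lines and GNU `sed -i`. It also changes namespaces inside subjects or bindings, so review the file afterwards. `set_namespace` is a hypothetical helper name.

```shell
# Hypothetical helper: set every "namespace:" field in a manifest to the given value.
# Usage: set_namespace custom-metrics-apiserver-deployment.yaml prom
#        set_namespace custom-metrics-config-map.yaml prom
set_namespace() {
  local file="$1" ns="${2:-prom}"
  # Keep the original indentation and key (group 1); replace only the value.
  sed -i -E "s/^([[:space:]]*namespace:[[:space:]]*).*/\1${ns}/" "$file"
}
```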

###############################

Delete the old Deployment and create it again:

[root@master k8s-prometheus-adapter]# kubectl delete -f custom-metrics-apiserver-deployment.yaml

deployment.apps "custom-metrics-apiserver" deleted

[root@master k8s-prometheus-adapter]# kubectl apply -f custom-metrics-apiserver-deployment.yaml

deployment.apps/custom-metrics-apiserver created

[root@master k8s-prometheus-adapter]# kubectl apply -f custom-metrics-config-map.yaml

configmap/adapter-config created

###################################

Check that it was created successfully:

[root@master k8s-prometheus-adapter]# kubectl get all -n prom

NAME READY STATUS RESTARTS AGE

pod/custom-metrics-apiserver-65f545496-crxtl 1/1 Running 0 1m # running

pod/kube-state-metrics-58dffdf67d-mwfz9 1/1 Running 0 1h

pod/prometheus-node-exporter-4xnhj 1/1 Running 0 1h

pod/prometheus-node-exporter-5z6gd 1/1 Running 0 1h

pod/prometheus-node-exporter-6dwnk 1/1 Running 0 1h

pod/prometheus-server-7c8554cf-9qfgs 1/1 Running 0 1h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/custom-metrics-apiserver ClusterIP 10.103.178.155 <none> 443/TCP 32m

service/kube-state-metrics ClusterIP 10.105.236.184 <none> 8080/TCP 1h

service/prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 1h

service/prometheus-node-exporter ClusterIP None <none> 9100/TCP 1h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-node-exporter 3 3 3 3 3 <none> 1h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/custom-metrics-apiserver 1 1 1 1 1m

deployment.apps/kube-state-metrics 1 1 1 1 1h

deployment.apps/prometheus-server 1 1 1 1 1h

NAME DESIRED CURRENT READY AGE

replicaset.apps/custom-metrics-apiserver-65f545496 1 1 1 1m

replicaset.apps/kube-state-metrics-58dffdf67d 1 1 1 1h

replicaset.apps/prometheus-server-65f5d59585 0 0 0 1h

replicaset.apps/prometheus-server-7c8554cf 1 1 1 1h

Check that the new API group is registered with kubectl api-versions:

[root@master k8s-prometheus-adapter]# kubectl api-versions

admissionregistration.k8s.io/v1beta1

apiextensions.k8s.io/v1beta1

apiregistration.k8s.io/v1

apiregistration.k8s.io/v1beta1

apps/v1

apps/v1beta1

apps/v1beta2

authentication.k8s.io/v1

authentication.k8s.io/v1beta1

authorization.k8s.io/v1

authorization.k8s.io/v1beta1

autoscaling/v1

autoscaling/v2beta1

batch/v1

batch/v1beta1

certificates.k8s.io/v1beta1

crd.projectcalico.org/v1

custom.metrics.k8s.io/v1beta1 # registered successfully; custom metrics are served through this group

events.k8s.io/v1beta1

extensions/v1beta1

metrics.k8s.io/v1beta1

networking.k8s.io/v1

policy/v1beta1

rbac.authorization.k8s.io/v1

rbac.authorization.k8s.io/v1beta1

scheduling.k8s.io/v1beta1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

Test it:

[root@master k8s-prometheus-adapter]# curl http://localhost:8080/apis/custom.metrics.k8s.io/

{

"kind": "APIGroup",

"apiVersion": "v1",

"name": "custom.metrics.k8s.io",

"versions": [

{

"groupVersion": "custom.metrics.k8s.io/v1beta1",

"version": "v1beta1"

}

],

"preferredVersion": {

"groupVersion": "custom.metrics.k8s.io/v1beta1",

"version": "v1beta1"

}

}

[root@master k8s-prometheus-adapter]# curl http://localhost:8080/apis/custom.metrics.k8s.io/v1beta1/

{

"kind": "APIResourceList",

"apiVersion": "v1",

"groupVersion": "custom.metrics.k8s.io/v1beta1",

"resources": []

}

###########
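An empty "resources" list means the adapter is not exposing any custom metric yet. Once it does, you can list the metric names without jq by extracting the "name" fields from the APIResourceList. This rough sketch relies on simple text matching rather than a real JSON parser. The helper name is hypothetical.

```shell
# Hypothetical helper: list the metric names served by the custom metrics API.
# Text matching only: it assumes each resource carries a "name" field, as in an
# APIResourceList, and that the names contain no double quotes.
custom_metric_names() {
  kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 \
    | grep -o '"name": *"[^"]*"' \
    | sed -E 's/"name": *"([^"]*)"/\1/'
}
```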

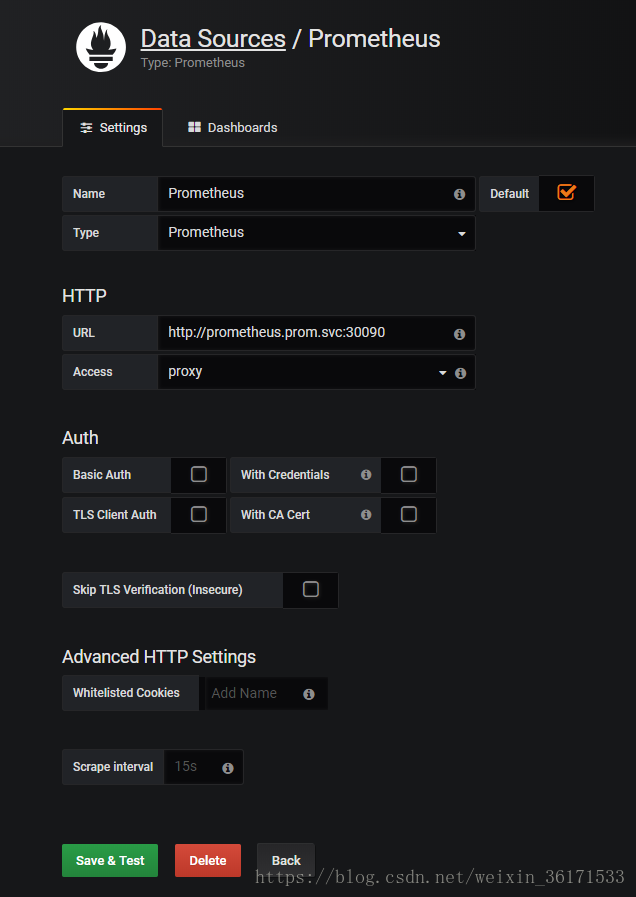

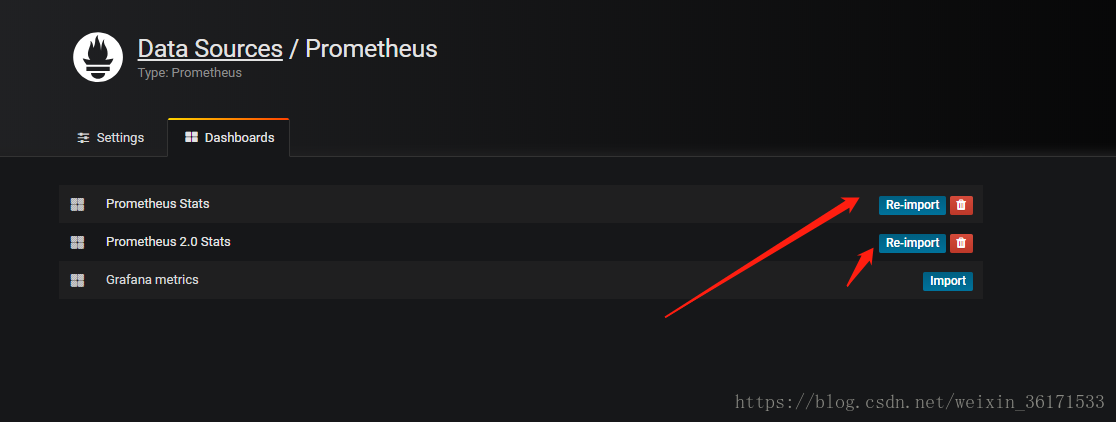

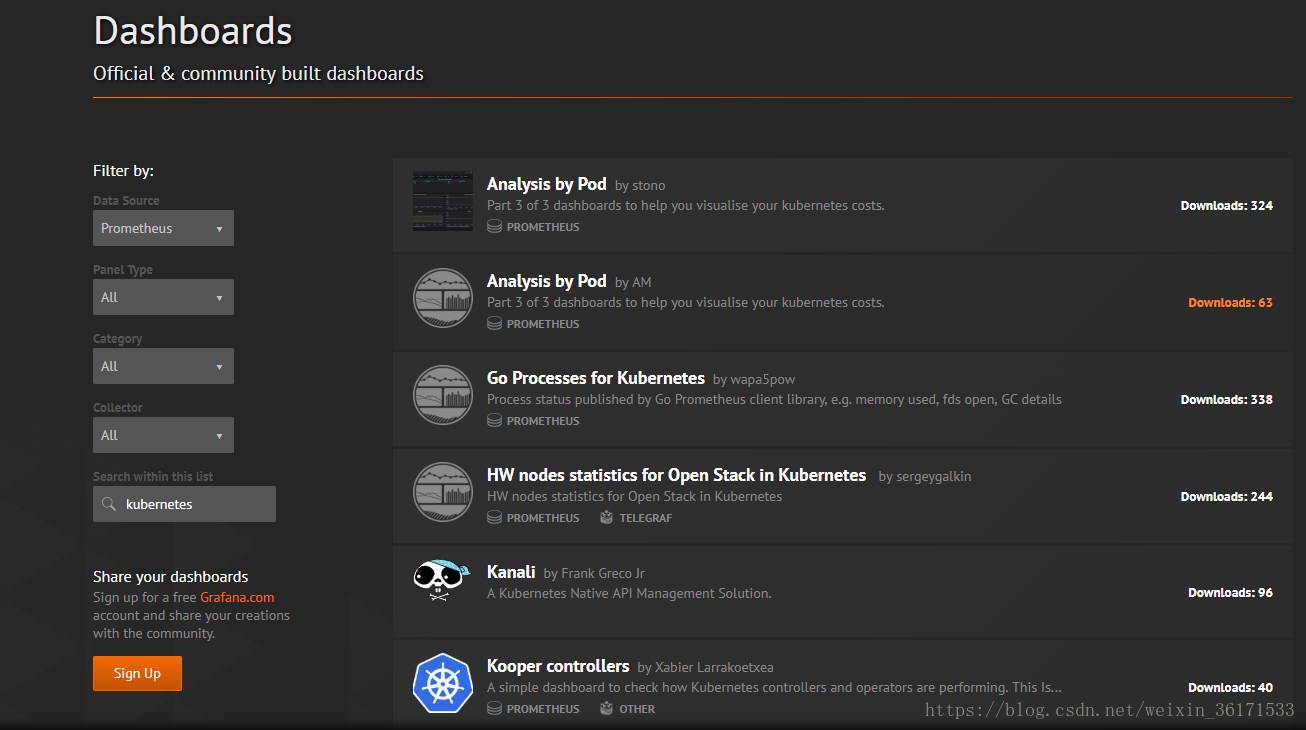

Install Grafana:

[root@master k8s-prom]# cat grafana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: prom
spec:
  replicas: 1
  selector:             # added
    matchLabels:        # added
      task: monitoring  # added
      k8s-app: grafana  # added
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        #- name: INFLUXDB_HOST
        #  value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: prom
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: NodePort   # added

kubectl apply -f grafana.yaml

[root@master k8s-prom]# kubectl get pods -n prom

NAME READY STATUS RESTARTS AGE

custom-metrics-apiserver-65f545496-crxtl 1/1 Running 0 37m

kube-state-metrics-58dffdf67d-mwfz9 1/1 Running 0 1h

monitoring-grafana-ffb4d59bd-bbff5 1/1 Running 0 3m

prometheus-node-exporter-4xnhj 1/1 Running 0 2h

prometheus-node-exporter-5z6gd 1/1 Running 0 2h

prometheus-node-exporter-6dwnk 1/1 Running 0 2h

prometheus-server-7c8554cf-9qfgs 1/1 Running 0 1h

Check the Service:

[root@master k8s-prom]# kubectl get svc -n prom

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

custom-metrics-apiserver ClusterIP 10.103.178.155 <none> 443/TCP 1h

kube-state-metrics ClusterIP 10.105.236.184 <none> 8080/TCP 1h

monitoring-grafana NodePort 10.104.15.0 <none> 80:32024/TCP 5m

prometheus NodePort 10.100.160.51 <none> 9090:30090/TCP 2h

prometheus-node-exporter ClusterIP None <none> 9100/TCP 2h

Grafana is now reachable at http://192.168.68.30:32024/
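You do not have to read the NodePort off the Service table; the URL can be assembled with jsonpath instead. In the sketch below, the node address 192.168.68.30 is this lab's master, and the helper name is hypothetical.

```shell
# Hypothetical helper: print the external URL of a NodePort Service.
# Usage: nodeport_url monitoring-grafana prom 192.168.68.30
nodeport_url() {
  local svc="$1" ns="$2" host="$3" port
  port=$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "http://${host}:${port}/"
}
```

The same call with `prometheus` as the Service name gives the Prometheus UI address on port 30090.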