I. Background

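Earlier versions relied on heapster to collect resource metrics before they could be viewed, but heapster is now being deprecated.

Starting with k8s v1.8, a new feature was introduced: resource metrics are exposed through the API.

With heapster, resource metrics were gathered by heapster itself over its own collection path, bypassing the apiserver. Kubernetes later introduced the resource metrics API (Metrics API), so resource metrics can now be read directly from the k8s API without going through any other channel.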

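metrics-server: it is itself an API server that serves the core Metrics API, much as the k8s component kube-apiserver serves many API groups. It is not part of k8s itself, however; it runs as a Pod hosted on top of k8s.

To let users consume the API in metrics-server seamlessly, this kind of extension API has to be aggregated alongside the core API groups by the aggregator,

so that it can be treated as if it were part of the core API and shows up directly in the output of kubectl api-versions.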

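metrics-server collects its data from the Summary API that the kubelet on each node serves on port 10250. It gathers the core resource metrics for Nodes and Pods, mainly memory and CPU usage, and keeps them in memory only, which is why kubectl top cannot show historical resource data. Other resource metrics are collected by prometheus.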
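To make the data flow above more concrete, here is a minimal sketch of pulling CPU and memory figures out of a kubelet Summary API document. The field names (`usageNanoCores`, `workingSetBytes`, `podRef`) follow the kubelet `/stats/summary` output as I understand it; the `SAMPLE` document, node name, and pod name are invented for illustration, and `summarize` is a hypothetical helper, not part of metrics-server.

```python
from typing import Any, Dict

# Invented sample shaped like a kubelet /stats/summary response (heavily trimmed).
SAMPLE: Dict[str, Any] = {
    "node": {
        "nodeName": "node01",  # hypothetical node name
        "cpu": {"usageNanoCores": 250_000_000},
        "memory": {"workingSetBytes": 1_073_741_824},
    },
    "pods": [
        {
            "podRef": {"name": "demo-pod", "namespace": "default"},  # hypothetical pod
            "containers": [
                {
                    "name": "app",
                    "cpu": {"usageNanoCores": 50_000_000},
                    "memory": {"workingSetBytes": 52_428_800},
                }
            ],
        }
    ],
}


def summarize(summary: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a Summary API document to CPU cores and memory MiB per node and pod."""
    node = summary["node"]
    result: Dict[str, Any] = {
        "node": node["nodeName"],
        "cpu_cores": node["cpu"]["usageNanoCores"] / 1e9,
        "memory_mib": node["memory"]["workingSetBytes"] / 2**20,
        "pods": [],
    }
    for pod in summary.get("pods", []):
        containers = pod.get("containers", [])
        result["pods"].append(
            {
                "name": pod["podRef"]["name"],
                "namespace": pod["podRef"]["namespace"],
                # A pod's usage is the sum over its containers.
                "cpu_cores": sum(c["cpu"]["usageNanoCores"] for c in containers) / 1e9,
                "memory_mib": sum(c["memory"]["workingSetBytes"] for c in containers) / 2**20,
            }
        )
    return result


if __name__ == "__main__":
    print(summarize(SAMPLE))
```

Because only the latest sample per node and pod is kept, nothing in this shape of data can answer "what was the usage an hour ago", which matches the limitation of kubectl top described above.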

Many k8s components depend on the resource metrics API, for example kubectl top and hpa; without a resource metrics API endpoint they cannot work.
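As a rough illustration of what clients such as kubectl top read, the sketch below builds the request paths under the `metrics.k8s.io/v1beta1` group and converts the quantity strings found in responses into plain numbers. The helper names are hypothetical, and the parsers deliberately handle only a few common suffixes, so treat this as a simplified sketch rather than a full Kubernetes quantity parser.

```python
from typing import Optional

METRICS_API = "/apis/metrics.k8s.io/v1beta1"


def metrics_path(resource: str, namespace: Optional[str] = None) -> str:
    """Build the Metrics API path for node or pod metrics."""
    if resource == "nodes":
        return f"{METRICS_API}/nodes"
    if resource == "pods":
        if namespace:
            return f"{METRICS_API}/namespaces/{namespace}/pods"
        return f"{METRICS_API}/pods"
    raise ValueError(f"unsupported resource: {resource}")


def cpu_to_millicores(quantity: str) -> float:
    """Convert a CPU quantity such as '250m', '500000000n' or '2' to millicores."""
    suffixes = {"n": 1e-6, "u": 1e-3, "m": 1.0}
    if quantity[-1] in suffixes:
        return float(quantity[:-1]) * suffixes[quantity[-1]]
    return float(quantity) * 1000.0  # bare number means whole cores


def memory_to_mib(quantity: str) -> float:
    """Convert a memory quantity such as '512Mi' or '1048576Ki' to MiB."""
    binary = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}
    for suffix, factor in binary.items():
        if quantity.endswith(suffix):
            return float(quantity[: -len(suffix)]) * factor / 2**20
    return float(quantity) / 2**20  # bare number means bytes


if __name__ == "__main__":
    print(metrics_path("pods", "kube-system"))
    print(cpu_to_millicores("250m"), memory_to_mib("512Mi"))
```

On a cluster where the API is being served, the same paths can be fetched with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`.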

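Resource metrics: metrics-server

Custom metrics: prometheus, k8s-prometheus-adapter

The new-generation architecture:

- Core metrics pipeline: made up of kubelet, metrics-server, and the API exposed by the API server; it covers cumulative CPU usage, real-time memory usage, Pod resource usage, and container disk usage;

- Monitoring pipeline: collects all kinds of metrics from the system and provides them to end users, storage systems, and the HPA. It includes the core metrics plus many non-core metrics; non-core metrics cannot be interpreted by k8s itself.

metrics-server is an API server that collects CPU usage, memory usage, and so on.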

II. metrics-server

(1) Remove the resources created for heapster in the previous section:

    [root@master metrics]# pwd
    /root/manifests/metrics
    [root@master metrics]# kubectl delete -f ./
    deployment.apps "monitoring-grafana" deleted
    service "monitoring-grafana" deleted
    clusterrolebinding.rbac.authorization.k8s.io "heapster" deleted
    serviceaccount "heapster" deleted
    deployment.apps "heapster" deleted
    service "heapster" deleted
    deployment.apps "monitoring-influxdb" deleted
    service "monitoring-influxdb" deleted
    pod "pod-demo" deleted

metrics-server has its own project on GitHub, and the kubernetes addons directory also carries yaml manifests for the metrics-server add-on;

here we use the yaml from the kubernetes repository:

metrics-server on kubernetes: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server

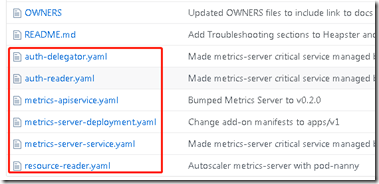

Download the following files:

    [root@master metrics-server]# pwd
    /root/manifests/metrics/metrics-server
    [root@master metrics-server]# ls
    auth-delegator.yaml  auth-reader.yaml  metrics-apiservice.yaml  metrics-server-deployment.yaml  metrics-server-service.yaml  resource-reader.yaml

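A few things need to be changed:

The metrics-server image has now been upgraded to metrics-server-amd64:v0.3.1; the previous version was v0.2.1, and the startup arguments differ somewhat between the two.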

    [root@master metrics-server]# vim resource-reader.yaml
    ...
    ...
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - namespaces
      - nodes/stats   # add this line
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - deployments
    ...
    ...

    [root@master metrics-server]# vim metrics-server-deployment.yaml
    ...
    ...
          containers:
          - name: metrics-server
            image: k8s.gcr.io/metrics-server-amd64:v0.3.1   # change the image (it can be pulled from Aliyun and re-tagged)
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-insecure-tls   # add this line
            - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP   # add this line
            # These are needed for GKE, which doesn't support secure communication yet.
            # Remove these lines for non-GKE clusters, and when GKE supports token-based auth.
            #- --kubelet-port=10255
            #- --deprecated-kubelet-completely-insecure=true
            ports:
            - containerPort: 443
              name: https
              protocol: TCP
          - name: metrics-server-nanny
            image: k8s.gcr.io/addon-resizer:1.8.4   # change the image (it can be pulled from Aliyun and re-tagged)
            resources:
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 5m
                memory: 50Mi
    ...
    ...
            # Edit the startup arguments of the metrics-server-nanny container; the result looks like this:
            volumeMounts:
            - name: metrics-server-config-volume
              mountPath: /etc/config
            command:
              - /pod_nanny
              - --config-dir=/etc/config
              - --cpu=80m
              - --extra-cpu=0.5m
              - --memory=80Mi
              - --extra-memory=8Mi
              - --threshold=5
              - --deployment=metrics-server-v0.3.1
              - --container=metrics-server
              - --poll-period=300000
              - --estimator=exponential
              # Specifies the smallest cluster (defined in number of nodes)
              # resources will be scaled to.
              #- --minClusterSize={{ metrics_server_min_cluster_size }}
    ...
    ...

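
The nanny arguments above describe a base amount plus an extra amount per node. As a back-of-the-envelope sketch, the linear form of that sizing rule can be written as follows. Two assumptions to note: `nanny_target` is a hypothetical helper, and it models only the plain "base + extra × nodes" rule; the manifest uses `--estimator=exponential`, which as far as I understand steps the node count up in larger increments to avoid frequent updates, and `--threshold` is not modeled either. The three-node cluster size is taken from the outputs shown in this article.

```python
def nanny_target(base: float, extra_per_node: float, nodes: int) -> float:
    """Linear sizing rule: base amount plus a per-node increment."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return base + extra_per_node * nodes


if __name__ == "__main__":
    nodes = 3  # cluster size seen in this article's outputs
    cpu_m = nanny_target(80, 0.5, nodes)   # --cpu=80m, --extra-cpu=0.5m
    mem_mi = nanny_target(80, 8, nodes)    # --memory=80Mi, --extra-memory=8Mi
    print(f"cpu: {cpu_m}m, memory: {mem_mi}Mi")
```

With the linear rule, a three-node cluster would be sized at roughly 81.5m CPU and 104Mi memory for the metrics-server container; the exponential estimator may choose larger values.
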
    # Create the resources
    [root@master metrics-server]# kubectl apply -f ./
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    serviceaccount/metrics-server created
    configmap/metrics-server-config created
    deployment.apps/metrics-server-v0.3.1 created
    service/metrics-server created

    # Check: the pod is up
    [root@master metrics-server]# kubectl get pods -n kube-system |grep metrics-server
    metrics-server-v0.3.1-7d8bf87b66-8v2w9   2/2   Running   0   9m37s

    [root@master ~]# kubectl api-versions |grep metrics
    metrics.k8s.io/v1beta1
    [root@master ~]# kubectl top nodes
    Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

    # Logs of the two containers in the pod follow
    [root@master ~]# kubectl logs metrics-server-v0.3.1-7d8bf87b66-8v2w9 -c metrics-server -n kube-system
    I0327 07:06:47.082938 1 serving.go:273] Generated self-signed cert (apiserver.local.config/certificates/apiserver.crt, apiserver.local.config/certificates/apiserver.key)
    [restful] 2019/03/27 07:06:59 log.go:33: [restful/swagger] listing is available at https://:443/swaggerapi
    [restful] 2019/03/27 07:06:59 log.go:33: [restful/swagger] https://:443/swaggerui/ is mapped to folder /swagger-ui/
    I0327 07:06:59.783549 1 serve.go:96] Serving securely on [::]:443

    [root@master ~]# kubectl logs metrics-server-v0.3.1-7d8bf87b66-8v2w9 -c metrics-server-nanny -n kube-system
    ERROR: logging before flag.Parse: I0327 07:06:40.684552 1 pod_nanny.go:65] Invoked by [/pod_nanny --config-dir=/etc/config --cpu=80m --extra-cpu=0.5m --memory=80Mi --extra-memory=8Mi --threshold=5 --deployment=metrics-server-v0.3.1 --container=metrics-server --poll-period=300000 --estimator=exponential]
    ERROR: logging before flag.Parse: I0327 07:06:40.684806 1 pod_nanny.go:81] Watching namespace: kube-system, pod: metrics-server-v0.3.1-7d8bf87b66-8v2w9, container: metrics-server.
    ERROR: logging before flag.Parse: I0327 07:06:40.684829 1 pod_nanny.go:82] storage: MISSING, extra_storage: 0Gi
    ERROR: logging before flag.Parse: I0327 07:06:40.689926 1 pod_nanny.go:109] cpu: 80m, extra_cpu: 0.5m, memory: 80Mi, extra_memory: 8Mi
    ERROR: logging before flag.Parse: I0327 07:06:40.689970 1 pod_nanny.go:138] Resources: [{Base:{i:{value:80 scale:-3} d:{Dec:<nil>} s:80m Format:DecimalSI} ExtraPerNode:{i:{value:5 scale:-4} d:{Dec:<nil>} s: Format:DecimalSI} Name:cpu} {Base:{i:{value:83886080 scale:0} d:{Dec:<nil>} s: Format:BinarySI} ExtraPerNode:{i:{value:8388608 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Name:memory}]

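Unfortunately, although the pod is up, resource metrics still cannot be retrieved.

As a beginner without much experience, I searched online for a while but could not solve it.

The logs are posted above; if anyone has run into this before, I would be grateful for any pointers!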
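One place that may be worth checking for a ServiceUnavailable error like this is the status of the aggregated APIService object itself, since it records whether the apiserver considers the backing service reachable. The sketch below reads the `Available` condition from the JSON of an APIService. It is not a diagnosis of this particular cluster: `apiservice_availability` is a hypothetical helper, and the `SAMPLE` object, including its reason and message, is invented to show the shape of the data.

```python
from typing import Any, Dict, Tuple

# Invented sample shaped like `kubectl get apiservice <name> -o json` (trimmed).
SAMPLE: Dict[str, Any] = {
    "metadata": {"name": "v1beta1.metrics.k8s.io"},
    "status": {
        "conditions": [
            {
                "type": "Available",
                "status": "False",
                "reason": "FailedDiscoveryCheck",        # illustrative value
                "message": "example message goes here",  # illustrative value
            }
        ]
    },
}


def apiservice_availability(apiservice: Dict[str, Any]) -> Tuple[bool, str]:
    """Return (is_available, detail) based on the APIService 'Available' condition."""
    for cond in apiservice.get("status", {}).get("conditions", []):
        if cond.get("type") == "Available":
            ok = cond.get("status") == "True"
            detail = f"{cond.get('reason', '')}: {cond.get('message', '')}"
            return ok, detail
    return False, "no Available condition reported"


if __name__ == "__main__":
    print(apiservice_availability(SAMPLE))
```

The real object can be dumped with `kubectl get apiservice v1beta1.metrics.k8s.io -o json`; when the condition is not `True`, its reason and message usually say more than the error printed by kubectl top.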

III. prometheus

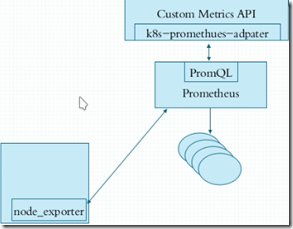

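metrics-server can only monitor CPU and memory; other metrics, such as user-defined ones, are out of its reach. That is where another component, prometheus, comes in.

node_exporter is the agent that runs on each node;

PromQL is the query language, playing a role similar to SQL statements for querying data;

k8s-prometheus-adapter: k8s cannot consume the metrics held in prometheus directly, so k8s-prometheus-adapter is needed to expose them as an API;

kube-state-metrics generates metrics about the state of k8s objects;

prometheus add-on in the kubernetes repository: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

MaGe's prometheus project: https://github.com/ikubernetes/k8s-prom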

1. Clone the project and deploy node_exporter:

    [root@master metrics]# git clone https://github.com/iKubernetes/k8s-prom.git
    [root@master metrics]# cd k8s-prom/
    [root@master k8s-prom]# ls
    k8s-prometheus-adapter  kube-state-metrics  namespace.yaml  node_exporter  podinfo  prometheus  README.md

    # Create a namespace called prom
    [root@master k8s-prom]# kubectl apply -f namespace.yaml
    namespace/prom created

    # Deploy node_exporter
    [root@master k8s-prom]# cd node_exporter/
    [root@master node_exporter]# ls
    node-exporter-ds.yaml  node-exporter-svc.yaml
    [root@master node_exporter]# kubectl apply -f ./
    daemonset.apps/prometheus-node-exporter created
    service/prometheus-node-exporter created

    [root@master ~]# kubectl get pods -n prom
    NAME                             READY   STATUS    RESTARTS   AGE
    prometheus-node-exporter-5tfbz   1/1     Running   0          107s
    prometheus-node-exporter-6rl8k   1/1     Running   0          107s
    prometheus-node-exporter-rkx47   1/1     Running   0          107s

Deploy prometheus:

    [root@master k8s-prom]# cd prometheus/

    # prometheus-deploy.yaml contains a memory restriction; if the nodes do not have enough memory, that rule can be removed
    [root@master ~]# kubectl describe pods prometheus-server-76dc8df7b-75vbp -n prom
    0/3 nodes are available: 1 node(s) had taints that the pod didn't tolerate, 2 Insufficient memory.

    [root@master prometheus]# kubectl apply -f ./
    configmap/prometheus-config created
    deployment.apps/prometheus-server created
    clusterrole.rbac.authorization.k8s.io/prometheus created
    serviceaccount/prometheus created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus created
    service/prometheus created

    # List all resources in the prom namespace
    [root@master ~]# kubectl get all -n prom
    NAME                                     READY   STATUS    RESTARTS   AGE
    pod/prometheus-node-exporter-5tfbz       1/1     Running   0          15m
    pod/prometheus-node-exporter-6rl8k       1/1     Running   0          15m
    pod/prometheus-node-exporter-rkx47       1/1     Running   0          15m
    pod/prometheus-server-556b8896d6-cztlk   1/1     Running   0          3m5s    # the pod is up

    NAME                               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    service/prometheus                 NodePort    10.99.240.192   <none>        9090:30090/TCP   9m55s
    service/prometheus-node-exporter   ClusterIP   None            <none>        9100/TCP         15m

    NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
    daemonset.apps/prometheus-node-exporter   3         3         3       3            3           <none>          15m

    NAME                                READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/prometheus-server   1/1     1            1           3m5s

    NAME                                           DESIRED   CURRENT   READY   AGE
    replicaset.apps/prometheus-server-556b8896d6   1         1         1       3m5s

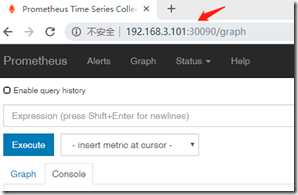

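Because the service is of type NodePort, it can be reached directly from outside the cluster:

In a browser, open: http://192.168.3.102:30090

192.168.3.102 is the address of any one of the worker nodes (not the master).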
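Besides the web UI, the same NodePort also serves the Prometheus HTTP API, which is where PromQL expressions are sent. The following is a small sketch, under the assumption that the address above is reachable from where the script runs: it builds an instant-query URL for `/api/v1/query` and unpacks a vector result. The helper names are hypothetical, the `SAMPLE_RESPONSE` is invented to show the response shape, and `up` is used as the expression because Prometheus records it for every scrape target.

```python
from typing import Any, Dict, List, Tuple
from urllib.parse import urlencode


def instant_query_url(base_url: str, promql: str) -> str:
    """Build the URL for an instant PromQL query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def vector_values(response: Dict[str, Any]) -> List[Tuple[Dict[str, str], float]]:
    """Turn a 'vector' query response into (labels, value) pairs."""
    if response.get("status") != "success":
        raise ValueError(f"query failed: {response.get('error', 'unknown error')}")
    data = response["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"unexpected result type: {data['resultType']}")
    # Each sample is [unix_timestamp, "value-as-string"].
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


# Invented response, shaped like the reply to an instant query for `up`.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "instance": "192.168.3.102:9100"}, "value": [1553670000, "1"]}
        ],
    },
}

if __name__ == "__main__":
    print(instant_query_url("http://192.168.3.102:30090", "up"))
    print(vector_values(SAMPLE_RESPONSE))
```

The generated URL can be fetched with any HTTP client, for example `curl`, and the JSON body passed to `vector_values`.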