Course link: https://coding.imooc.com/class/169.html

For the derivation of the least squares method, see the linked blog post.
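For reference, the closed-form result that the `fit` methods below compute can be written as follows. This is a summary read off the code, not a reproduction of the linked derivation; the notation is assumed here, with $\bar{x}$ and $\bar{y}$ denoting the sample means and $m$ the number of training points.

$$
a = \frac{\sum_{i=1}^{m} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{m} (x_i - \bar{x})^2}, \qquad b = \bar{y} - a\,\bar{x}
$$

The fitted line is then $\hat{y} = a x + b$. Note the plus sign in the line equation, even though $b$ itself is defined with a minus sign.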

Code implementation (based on Bobo's implementation; for Bobo's original source code, click here):

# -*- encoding: utf-8 -*-

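"""
Simple linear regression.

Two mistakes I made while implementing SimpleLineRegession1:

1. Wrote deno = (x - x_mean) ** 2 instead of deno += (x - x_mean) ** 2.
   Note that deno accumulates the results over all samples.

2. Wrote the line equation as self.a_ * x - self.b_ instead of
   self.a_ * x + self.b_. b is computed as b = y_mean - a * x_mean,
   but the equation itself is y = a*x + b.
"""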

import numpy as np

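class SimpleLineRegession1(object):
    """
    Simple linear regression implemented without vectorization.
    """

    def __init__(self):
        """
        Variables computed during fitting are named with a trailing underscore.
        """
        self.a_ = None  # slope of the line
        self.b_ = None  # intercept of the line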

    def fit(self, X_train, y_train):
        """
        Train the model.

        :param X_train: 1-D array of training inputs
        :param y_train: 1-D array of training targets
        :return: None
        """
        assert X_train.ndim == 1 and y_train.ndim == 1, 'X and y must be 1-D'
        assert len(X_train) == len(y_train), 'X and y must have the same number of samples'

        x_mean = np.mean(X_train)
        y_mean = np.mean(y_train)

        num = 0.0   # numerator
        deno = 0.0  # denominator
        for x, y in zip(X_train, y_train):
            num += (x - x_mean) * (y - y_mean)
            deno += (x - x_mean) ** 2

        self.a_ = num / deno
        self.b_ = y_mean - self.a_ * x_mean

    def _predict(self, x):
        """
        Predict the result for a single x using the line equation y = a*x + b.

        :param x: a single input value
        :return: the predicted value
        """
        return self.a_ * x + self.b_

    def predict(self, X_test):
        """
        Predict for X_test, which must be 1-D data.

        :param X_test: 1-D array of inputs
        :return: 1-D array of predictions
        """
        assert X_test.ndim == 1, 'X_test must be a 1-D array'
        assert self.a_ is not None and self.b_ is not None, 'call fit before predict'

        y_predict = [self._predict(x) for x in X_test]
        return np.array(y_predict)

    def __repr__(self):
        return 'SimpleLineRegession1(a=%s, b=%s)' % (self.a_, self.b_)

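class SimpleLineRegession2(object):
    """
    Simple linear regression implemented with vectorization.
    """

    def __init__(self):
        """
        Variables computed during fitting are named with a trailing underscore.
        """
        self.a_ = None  # slope of the line
        self.b_ = None  # intercept of the line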

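    def fit(self, X_train, y_train):
        """
        Train the model.

        :param X_train: 1-D array of training inputs
        :param y_train: 1-D array of training targets
        :return: None
        """
        assert X_train.ndim == 1 and y_train.ndim == 1, 'X and y must be 1-D'
        assert len(X_train) == len(y_train), 'X and y must have the same number of samples'

        x_mean = np.mean(X_train)
        y_mean = np.mean(y_train)

        # The two sums from the loop version, written as dot products
        self.a_ = (X_train - x_mean).dot(y_train - y_mean) / (X_train - x_mean).dot(X_train - x_mean)
        self.b_ = y_mean - self.a_ * x_mean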

    def _predict(self, x):
        """
        Predict the result for a single x using the line equation y = a*x + b.

        :param x: a single input value
        :return: the predicted value
        """
        return self.a_ * x + self.b_

    def predict(self, X_test):
        """
        Predict for X_test, which must be 1-D data.

        :param X_test: 1-D array of inputs
        :return: 1-D array of predictions
        """
        assert X_test.ndim == 1, 'X_test must be a 1-D array'
        assert self.a_ is not None and self.b_ is not None, 'call fit before predict'

        y_predict = [self._predict(x) for x in X_test]
        return np.array(y_predict)

    def __repr__(self):
        return 'SimpleLineRegession2(a=%s, b=%s)' % (self.a_, self.b_)

Test code:

import numpy as np

from timeit import timeit

import matplotlib.pyplot as plt

from simplelinerregression import SimpleLineRegession1, SimpleLineRegession2

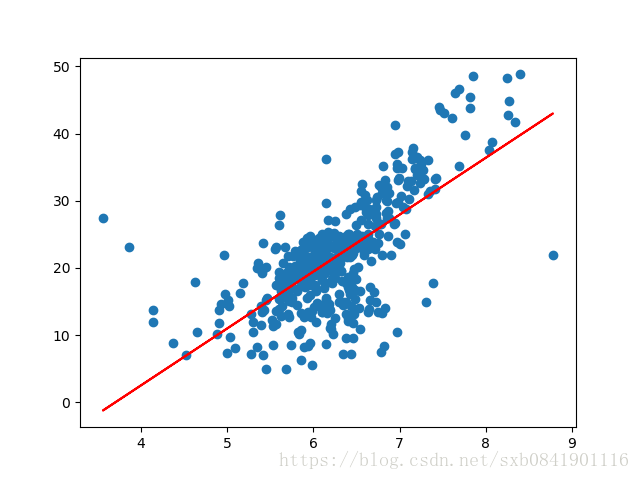

x = np.random.randint(1, 6, 10000) + np.random.normal(size=10000)

y = 0.8 * x + 0.4 + np.random.normal(size=len(x))

def test_reg1():
    reg1 = SimpleLineRegession1()
    reg1.fit(x, y)
    reg1.predict(x)
    print(reg1)


def test_reg2():
    reg2 = SimpleLineRegession2()
    reg2.fit(x, y)
    reg2.predict(x)
    print(reg2)

def draw_graph():
    x = np.array([1., 2., 3., 4., 5.])
    y = np.array([1., 3., 2., 3.0, 5.0])

    # Plot the sample points
    plt.scatter(x, y, color='green')
    plt.axis([0, 6, 0, 6])

    reg1 = SimpleLineRegession1()
    reg1.fit(x, y)
    y_predict = reg1.predict(x)

    # Plot the fitted line, labelled with its equation
    line_mark = 'y=%sx+%s' % (np.round(reg1.a_, 2), np.round(reg1.b_, 2))
    plt.plot(x, y_predict, color='red', label=line_mark)
    plt.legend()
    plt.show()

if __name__ == '__main__':
    print(timeit('test_reg1()', "from __main__ import test_reg1", number=3))
    print(timeit('test_reg2()', "from __main__ import test_reg2", number=3))
    draw_graph()

Results:

In this run, SimpleLineRegession2 is clearly much more efficient than SimpleLineRegession1: the 3 timed calls took about 0.013 s in total versus about 0.041 s, roughly a 3x speedup. Both versions produce the same a and b up to floating-point rounding.

SimpleLineRegession1(a=0.8018889242367586, b=0.39478340695596614)
SimpleLineRegession1(a=0.8018889242367586, b=0.39478340695596614)
SimpleLineRegession1(a=0.8018889242367586, b=0.39478340695596614)
0.0413969199446
SimpleLineRegession2(a=0.8018889242367646, b=0.39478340695594794)
SimpleLineRegession2(a=0.8018889242367646, b=0.39478340695594794)
SimpleLineRegession2(a=0.8018889242367646, b=0.39478340695594794)
0.0128730256884
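To reproduce the comparison without the class file, here is a minimal self-contained sketch of the same two computations. The helper names `fit_loop` and `fit_vectorized`, the fixed random seed, and the cross-check against `np.polyfit` are additions for illustration and are not part of the original code; Python 3 is assumed.

```python
import numpy as np
from timeit import timeit


def fit_loop(x, y):
    """Least-squares slope and intercept using an explicit Python loop."""
    x_mean, y_mean = np.mean(x), np.mean(y)
    num = 0.0   # running sum of (x - x_mean) * (y - y_mean)
    deno = 0.0  # running sum of (x - x_mean) ** 2
    for xi, yi in zip(x, y):
        num += (xi - x_mean) * (yi - y_mean)
        deno += (xi - x_mean) ** 2
    a = num / deno
    return a, y_mean - a * x_mean


def fit_vectorized(x, y):
    """Same estimate, with the two sums written as dot products."""
    x_mean, y_mean = np.mean(x), np.mean(y)
    dx = x - x_mean
    a = dx.dot(y - y_mean) / dx.dot(dx)
    return a, y_mean - a * x_mean


# Fixed seed is an assumption, used only to make the run repeatable
rng = np.random.RandomState(0)
x = rng.randint(1, 6, 10000) + rng.normal(size=10000)
y = 0.8 * x + 0.4 + rng.normal(size=len(x))

a_loop, b_loop = fit_loop(x, y)
a_vec, b_vec = fit_vectorized(x, y)
a_ref, b_ref = np.polyfit(x, y, 1)  # degree-1 fit returns [slope, intercept]

# The loop and vectorized versions should agree with each other and with polyfit
print(np.allclose([a_loop, b_loop], [a_vec, b_vec]))
print(np.allclose([a_vec, b_vec], [a_ref, b_ref]))

# Time 3 calls of each, mirroring number=3 in the test code above
print(timeit(lambda: fit_loop(x, y), number=3))
print(timeit(lambda: fit_vectorized(x, y), number=3))
```

The absolute timings depend on the machine and the NumPy version, so only the ratio between the two is meaningful. The vectorized version is faster because the summation runs inside NumPy instead of in a Python-level loop.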