一、运动目标检测的方法:

(l)帧差法

基本原理就是在图像序列相邻两帧或三帧间采用基于像素的时间差分通过闭值化来提取出图像中的运动区域。帧差法仅仅做运动检测。网上经常有人做个运动检测,再找个轮廓,拟合个椭圆就说跟踪了,并没有建立帧与帧之间目标联系的,没有判断目标产生和目标消失的都不能算是跟踪吧。

首先,将相邻帧图像对应像素值相减得到差分图像,然后对差分图像二值化,在环境亮度变化不大的情况下,如果对应像素值变化小于事先确定的阈值时,可以认为此处为背景像素:如果图像区域的像素值变化很大,可以认为这是由于图像中运动物体引起的,将这些区域标记为前景像素,利用标记的像素区域可以确定运动目标在图像中的位置。

由于相邻两帧间的时间间隔非常短,用前一帧图像作为当前帧的背景模型具有较好的实时性,其背景不积累,且更新速度快、算法简单、计算量小。算法的不足在于对环境噪声较为敏感,阈值的选择相当关键,选择过低不足以抑制图像中的噪声,过高则忽略了图像中有用的变化。对于比较大的、颜色一致的运动目标,有可能在目标内部产生空洞,无法完整地提取运动目标。

(2)光流法

光流法的主要任务就是计算光流场,即在适当的平滑性约束条件下,根据图像序列的时空梯度估算运动场,通过分析运动场的变化对运动目标和场景进行检测与分割。

通常有基于全局光流场和特征点光流场两种方法。

最经典的全局光流场计算方法是L-K(Lueas&Kanada)法和H-S(Hom&Schunck)法,得到全局光流场后通过比较运动目标与背景之间的运动差异对运动目标进行光流分割,缺点是计算量大。特征点光流法通过特征匹配求特征点处的流速,具有计算量小、快速灵活的特点,但稀疏的光流场很难精确地提取运动目标的形状。

总的来说,光流法不需要预先知道场景的任何信息,就能够检测到运动对象,可处理背景运动的情况,但噪声、多光源、阴影和遮挡等因素会对光流场分布的计算结果造成严重影响;而且光流法计算复杂,很难实现实时处理。

(3)背景减除法

基本思想是利用背景的参数模型来近似背景图像的像素值,将当前帧与背景图像进行差分比较实现对运动区域的检测,其中区别较大的像素区域被认为是运动区域,而区别较小的像素区域被认为是背景区域。

背景减除法必须要有背景图像,并且背景图像必须是随着光照或外部环境的变化而实时更新的,因此背景减除法的关键是背景建模及其更新。针对如何建立对于不同场景的动态变化均具有自适应性的背景模型,减少动态场景变化对运动分割的影响,研究人员已提出了许多背景建模算法,但总的来讲可以概括为非回归递推和回归递推两类。非回归背景建模算法是动态的利用从某一时刻开始到当前一段时间内存储的新近观测数据作为样本来进行背景建模。非回归背景建模方法有最简单的帧间差分、中值滤波方法、Toyama等利用缓存的样本像素来估计背景模型的线性滤波器、Elg~al等提出的利用一段时间的历史数据来计算背景像素密度的非参数模型等。回归算法在背景估计中无需维持保存背景估计帧的缓冲区,它们是通过回归的方式基于输入的每一帧图像来更新某个时刻的背景模型。这类方法包括广泛应用的线性卡尔曼滤波法、Stauffe:与Grimson提出的混合高斯模型等。

在opencv中有个BackgroundSubtractorMOG2函数,是以高斯混合模型为基础的背景/前景分割算法,但算法只实现了检测部分。这个算法的一个特点是它为每一个像素选择一个合适数目的高斯分布,其对由于亮度等发生变化引起的场景变化产生更好的适应。

混合高斯模型算法原理,不重复赘述:

https://download.csdn.net/download/m0_37407756/10733651

题外话:该函数在opencv3.0与opencv2.中的使用方法区别:

在opencv2.4版本中,只要加了#include "opencv2/opencv.hpp"头文件,主要的调用代码如下:

int main()

{

VideoCapture video("11.mp4");

Mat frame, mask, thresholdImage, output;

int frameNum = 1;

if (!video.isOpened())

cout << "fail to open!" << endl;

long totalFrameNumber = video.get(CV_CAP_PROP_FRAME_COUNT);

video>>frame;

BackgroundSubtractorMOG bgSubtractor(20, 10, 0.5, false);

while (true){

if (totalFrameNumber == frameNum)

break;

video >> frame;

++frameNum;

bgSubtractor(frame, mask, 0.001);

imshow("mask",mask);

waitKey(10);

}

return 0;

}

在opencv3.0中,使用方式不一样,而且3.0中还少了BackgroundSubtractorMOG函数,只有BackgroundSubtractorMOG2,我的使用代码如下,vs2017+opencv3.4.

int main( int argc, char** argv)

{

VideoCapture video("11.mp4"); //读取文件,注意路径格式,VideoCapture video(0); 表示使用编号为0的摄像头"11.mp4"

int frameNum = 1;

Mat frame, mask, thresholdImage, output;

if (!video.isOpened())

cout << "fail to open!" << endl;

//cout<<video.isOpened();

double totalFrameNumber = video.get(CAP_PROP_FRAME_COUNT);

video >> frame;

Ptr<BackgroundSubtractorMOG2> bgsubtractor = createBackgroundSubtractorMOG2();

bgsubtractor->setVarThreshold(20);

while (true) {

if (totalFrameNumber == frameNum)

break;

video >> frame;

++frameNum;

//bgSubtractor(frame, mask, 0.001);

bgsubtractor->apply(frame, mask, 0.01);

imshow("mask", mask);

waitKey(10);

}

return 0;

}该函数的参数可以参阅博客:https://blog.csdn.net/m0_37901643/article/details/72841289

改进后的BackgroundSubtractorMOG2主要有两点改进点:

(1)增加阴影检测功能(2)算法效率有较大提升。

后者意义不言而喻,前者的意义在于如果不使用一些方法检测得到阴影,那么它有可能被识别为前景物体,导致前景物体得到了错误的形状,从而对后续处理(譬如跟踪)产生不好的影响。

BackgroundSubtractorMOG2中用到的阴影检测原理应该来自于《The Sakbot System for Moving Object Detection and Tracking》,原理大致总结一下:作者在HSV空间中检测阴影(原因在于该颜色空间与人眼感知的更为接近,且对阴影产生的亮度变化更为敏感),原理是作者通过实验发现,阴影覆盖的区域像素点的亮度会降低(V减小),且H和S也会衰减,这算是一个经验性的结论,不过貌似还比较有效果。不过另外一点坑是,opencv在实现该部分内容时,还是在RGB颜色空间中,代码看上去应该还是借鉴了上面的思想。

该函数的源码:

#include "precomp.hpp"

namespace cv

{

/*

Interface of Gaussian mixture algorithm from:

"Improved adaptive Gausian mixture model for background subtraction"

Z.Zivkovic

International Conference Pattern Recognition, UK, August, 2004

http://www.zoranz.net/Publications/zivkovic2004ICPR.pdf

Advantages:

-fast - number of Gausssian components is constantly adapted per pixel.

-performs also shadow detection (see bgfg_segm_test.cpp example)

*/

// default parameters of gaussian background detection algorithm

// 这部分的参数建议把《Improved adaptive Gausian mixture model for background subtraction》读一遍,大部分的就基本理解了

static const int defaultHistory2 = 500; // Learning rate; alpha = 1/defaultHistory2

static const float defaultVarThreshold2 = 4.0f*4.0f;

static const int defaultNMixtures2 = 5; // maximal number of Gaussians in mixture

static const float defaultBackgroundRatio2 = 0.9f; // threshold sum of weights for background test

static const float defaultVarThresholdGen2 = 3.0f*3.0f;

static const float defaultVarInit2 = 15.0f; // initial variance for new components

static const float defaultVarMax2 = 5*defaultVarInit2;

static const float defaultVarMin2 = 4.0f;

// additional parameters

static const float defaultfCT2 = 0.05f; // complexity reduction prior constant 0 - no reduction of number of components

static const unsigned char defaultnShadowDetection2 = (unsigned char)127; // value to use in the segmentation mask for shadows, set 0 not to do shadow detection

static const float defaultfTau = 0.5f; // Tau - shadow threshold, see the paper for explanation

// 注意这个结构体后面没有用到,不用管它

struct GaussBGStatModel2Params

{

//image info

int nWidth;

int nHeight;

int nND;//number of data dimensions (image channels)

bool bPostFiltering;//defult 1 - do postfiltering - will make shadow detection results also give value 255

double minArea; // for postfiltering

bool bInit;//default 1, faster updates at start

/////////////////////////

//very important parameters - things you will change

////////////////////////

float fAlphaT;

//alpha - speed of update - if the time interval you want to average over is T

//set alpha=1/T. It is also usefull at start to make T slowly increase

//from 1 until the desired T

float fTb;

//Tb - threshold on the squared Mahalan. dist. to decide if it is well described

//by the background model or not. Related to Cthr from the paper.

//This does not influence the update of the background. A typical value could be 4 sigma

//and that is Tb=4*4=16;

/////////////////////////

//less important parameters - things you might change but be carefull

////////////////////////

float fTg;

//Tg - threshold on the squared Mahalan. dist. to decide

//when a sample is close to the existing components. If it is not close

//to any a new component will be generated. I use 3 sigma => Tg=3*3=9.

//Smaller Tg leads to more generated components and higher Tg might make

//lead to small number of components but they can grow too large

float fTB;//1-cf from the paper

//TB - threshold when the component becomes significant enough to be included into

//the background model. It is the TB=1-cf from the paper. So I use cf=0.1 => TB=0.

//For alpha=0.001 it means that the mode should exist for approximately 105 frames before

//it is considered foreground

float fVarInit;

float fVarMax;

float fVarMin;

//initial standard deviation for the newly generated components.

//It will will influence the speed of adaptation. A good guess should be made.

//A simple way is to estimate the typical standard deviation from the images.

//I used here 10 as a reasonable value

float fCT;//CT - complexity reduction prior

//this is related to the number of samples needed to accept that a component

//actually exists. We use CT=0.05 of all the samples. By setting CT=0 you get

//the standard Stauffer&Grimson algorithm (maybe not exact but very similar)

//even less important parameters

int nM;//max number of modes - const - 4 is usually enough

//shadow detection parameters

bool bShadowDetection;//default 1 - do shadow detection

unsigned char nShadowDetection;//do shadow detection - insert this value as the detection result

float fTau;

// Tau - shadow threshold. The shadow is detected if the pixel is darker

//version of the background. Tau is a threshold on how much darker the shadow can be.

//Tau= 0.5 means that if pixel is more than 2 times darker then it is not shadow

//See: Prati,Mikic,Trivedi,Cucchiarra,"Detecting Moving Shadows...",IEEE PAMI,2003.

};

// 定义了高斯模型中有关权重和方差的结构体

struct GMM

{

float weight;

float variance;

};

// shadow detection performed per pixel

// should work for rgb data, could be usefull for gray scale and depth data as well

// See: Prati,Mikic,Trivedi,Cucchiarra,"Detecting Moving Shadows...",IEEE PAMI,2003.

static CV_INLINE bool

detectShadowGMM(const float* data, int nchannels, int nmodes,

const GMM* gmm, const float* mean,

float Tb, float TB, float tau)

{

// 输入的是像素,函数判断非背景的像素是前景还是阴影

float tWeight = 0;

// check all the components marked as background:

for( int mode = 0; mode < nmodes; mode++, mean += nchannels )

{

GMM g = gmm[mode];

float numerator = 0.0f;

float denominator = 0.0f;

for( int c = 0; c < nchannels; c++ )

{

numerator += data[c] * mean[c];

denominator += mean[c] * mean[c];// 使用高斯分布中的均值计算得到近似的不受到前景影响的“背景”

}

// no division by zero allowed

if( denominator == 0 )

return false;

// 大前提是该像素“颜色”相对于“背景”有所衰减

// if tau < a < 1 then also check the color distortion

if( numerator <= denominator && numerator >= tau*denominator )

{

float a = numerator / denominator;

float dist2a = 0.0f;

for( int c = 0; c < nchannels; c++ )

{

float dD= a*mean[c] - data[c];

dist2a += dD*dD;

}

// 没看懂,感觉像是作者的经验公式

if (dist2a < Tb*g.variance*a*a)

return true;

};

tWeight += g.weight;

if( tWeight > TB )

return false;

};

return false;

}

//update GMM - the base update function performed per pixel

//

//"Efficient Adaptive Density Estimapion per Image Pixel for the Task of Background Subtraction"

//Z.Zivkovic, F. van der Heijden

//Pattern Recognition Letters, vol. 27, no. 7, pages 773-780, 2006.

//

//The algorithm similar to the standard Stauffer&Grimson algorithm with

//additional selection of the number of the Gaussian components based on:

//

//"Recursive unsupervised learning of finite mixture models "

//Z.Zivkovic, F.van der Heijden

//IEEE Trans. on Pattern Analysis and Machine Intelligence, vol.26, no.5, pages 651-656, 2004

//http://www.zoranz.net/Publications/zivkovic2004PAMI.pdf

// 通过该结构体实现算法的并行计算,可以百度下parallel_for_大致了解下

struct MOG2Invoker : ParallelLoopBody

{

MOG2Invoker(const Mat& _src, Mat& _dst,

GMM* _gmm, float* _mean,

uchar* _modesUsed,

int _nmixtures, float _alphaT,

float _Tb, float _TB, float _Tg,

float _varInit, float _varMin, float _varMax,

float _prune, float _tau, bool _detectShadows,

uchar _shadowVal)

{

src = &_src;// 原图

dst = &_dst;

gmm0 = _gmm;

mean0 = _mean;

modesUsed0 = _modesUsed;

nmixtures = _nmixtures;

alphaT = _alphaT;

Tb = _Tb;

TB = _TB;

Tg = _Tg;

varInit = _varInit;

varMin = MIN(_varMin, _varMax);

varMax = MAX(_varMin, _varMax);

prune = _prune;

tau = _tau;

detectShadows = _detectShadows;

shadowVal = _shadowVal;

cvtfunc = src->depth() != CV_32F ? getConvertFunc(src->depth(), CV_32F) : 0;

}

void operator()(const Range& range) const

{

int y0 = range.start, y1 = range.end;// 每个并行计算单元的输入是一行图像

int ncols = src->cols, nchannels = src->channels();

AutoBuffer<float> buf(src->cols*nchannels);

float alpha1 = 1.f - alphaT;

float dData[CV_CN_MAX];

for( int y = y0; y < y1; y++ )

{

const float* data = buf;

if( cvtfunc )

cvtfunc( src->ptr(y), src->step, 0, 0, (uchar*)data, 0, Size(ncols*nchannels, 1), 0);// 转换为1XN的图像,N为原图的列数

else

data = src->ptr<float>(y);

float* mean = mean0 + ncols*nmixtures*nchannels*y;

GMM* gmm = gmm0 + ncols*nmixtures*y;

uchar* modesUsed = modesUsed0 + ncols*y;

uchar* mask = dst->ptr(y);

// 遍历1XN图像的每个像素

for( int x = 0; x < ncols; x++, data += nchannels, gmm += nmixtures, mean += nmixtures*nchannels )

{

//calculate distances to the modes (+ sort)

//here we need to go in descending order!!!

bool background = false;//return value -> true - the pixel classified as background

//internal:

bool fitsPDF = false;//if it remains zero a new GMM mode will be added

int nmodes = modesUsed[x], nNewModes = nmodes;//current number of modes in GMM

float totalWeight = 0.f;

float* mean_m = mean;

//////

//go through all modes

// 1.计算是否符合当前混合模型

for( int mode = 0; mode < nmodes; mode++, mean_m += nchannels )

{

float weight = alpha1*gmm[mode].weight + prune;//need only weight if fit is found

int swap_count = 0;

////

//fit not found yet

if( !fitsPDF )

{

//check if it belongs to some of the remaining modes

float var = gmm[mode].variance;

//calculate difference and distance

float dist2;

if( nchannels == 3 )

{

dData[0] = mean_m[0] - data[0];

dData[1] = mean_m[1] - data[1];

dData[2] = mean_m[2] - data[2];

dist2 = dData[0]*dData[0] + dData[1]*dData[1] + dData[2]*dData[2];

}

else

{

dist2 = 0.f;

for( int c = 0; c < nchannels; c++ )

{

dData[c] = mean_m[c] - data[c];

dist2 += dData[c]*dData[c];

}

}

//background? - Tb - usually larger than Tg

if( totalWeight < TB && dist2 < Tb*var )

background = true;

//check fit

if( dist2 < Tg*var )

{

/////

//belongs to the mode

fitsPDF = true;

//update distribution

//update weight

weight += alphaT;

float k = alphaT/weight;

//update mean

for( int c = 0; c < nchannels; c++ )

mean_m[c] -= k*dData[c];

//update variance

float varnew = var + k*(dist2-var);

//limit the variance

varnew = MAX(varnew, varMin);

varnew = MIN(varnew, varMax);

gmm[mode].variance = varnew;

//sort

//all other weights are at the same place and

//only the matched (iModes) is higher -> just find the new place for it

for( int i = mode; i > 0; i-- )

{

//check one up

if( weight < gmm[i-1].weight )

break;

swap_count++;

//swap one up

std::swap(gmm[i], gmm[i-1]);

for( int c = 0; c < nchannels; c++ )

std::swap(mean[i*nchannels + c], mean[(i-1)*nchannels + c]);

}

//belongs to the mode - bFitsPDF becomes 1

/////

}

}//!bFitsPDF)

//check prune

if( weight < -prune )// 保证下一次运算中模型的权值非负

{

weight = 0.0;

nmodes--;// 丢弃掉该模型

}

gmm[mode-swap_count].weight = weight;//update weight by the calculated value

totalWeight += weight;

}

//go through all modes

//////

//renormalize weights

// 2.权重归一化

totalWeight = 1.f/totalWeight;

for( int mode = 0; mode < nmodes; mode++ )

{

gmm[mode].weight *= totalWeight;

}

nmodes = nNewModes;

//make new mode if needed and exit

// 3.根据情况增加新的高斯分布

if( !fitsPDF )

{

// replace the weakest or add a new one

int mode = nmodes == nmixtures ? nmixtures-1 : nmodes++;

if (nmodes==1)

gmm[mode].weight = 1.f;

else

{

gmm[mode].weight = alphaT;

// renormalize all other weights

for( int i = 0; i < nmodes-1; i++ )

gmm[i].weight *= alpha1;

}

// init

for( int c = 0; c < nchannels; c++ )

mean[mode*nchannels + c] = data[c];

gmm[mode].variance = varInit;

//sort

//find the new place for it

for( int i = nmodes - 1; i > 0; i-- )

{

// check one up

if( alphaT < gmm[i-1].weight )

break;

// swap one up

std::swap(gmm[i], gmm[i-1]);

for( int c = 0; c < nchannels; c++ )

std::swap(mean[i*nchannels + c], mean[(i-1)*nchannels + c]);

}

}

//set the number of modes

modesUsed[x] = uchar(nmodes);// 更新GMM中实际使用的模型个数

// 4.如果不是背景,根据参数确定是否继续进行阴影判断

mask[x] = background ? 0 :

detectShadows && detectShadowGMM(data, nchannels, nmodes, gmm, mean, Tb, TB, tau) ?

shadowVal : 255;

}

}

}

const Mat* src;

Mat* dst;

GMM* gmm0;

float* mean0;

uchar* modesUsed0;

int nmixtures;

float alphaT, Tb, TB, Tg;

float varInit, varMin, varMax, prune, tau;

bool detectShadows;

uchar shadowVal;

BinaryFunc cvtfunc;

};

BackgroundSubtractorMOG2::BackgroundSubtractorMOG2()

{

frameSize = Size(0,0);

frameType = 0;

nframes = 0;

history = defaultHistory2;

varThreshold = defaultVarThreshold2;

bShadowDetection = 1;

nmixtures = defaultNMixtures2;

backgroundRatio = defaultBackgroundRatio2;

fVarInit = defaultVarInit2;

fVarMax = defaultVarMax2;

fVarMin = defaultVarMin2;

varThresholdGen = defaultVarThresholdGen2;

fCT = defaultfCT2;

nShadowDetection = defaultnShadowDetection2;

fTau = defaultfTau;

}

BackgroundSubtractorMOG2::BackgroundSubtractorMOG2(int _history, float _varThreshold, bool _bShadowDetection)

{

frameSize = Size(0,0);

frameType = 0;

nframes = 0;

history = _history > 0 ? _history : defaultHistory2;

varThreshold = (_varThreshold>0)? _varThreshold : defaultVarThreshold2;

bShadowDetection = _bShadowDetection;

nmixtures = defaultNMixtures2;

backgroundRatio = defaultBackgroundRatio2;

fVarInit = defaultVarInit2;

fVarMax = defaultVarMax2;

fVarMin = defaultVarMin2;

varThresholdGen = defaultVarThresholdGen2;

fCT = defaultfCT2;

nShadowDetection = defaultnShadowDetection2;

fTau = defaultfTau;

}

BackgroundSubtractorMOG2::~BackgroundSubtractorMOG2()

{

}

void BackgroundSubtractorMOG2::initialize(Size _frameSize, int _frameType)

{

frameSize = _frameSize;

frameType = _frameType;

nframes = 0;

int nchannels = CV_MAT_CN(frameType);

CV_Assert( nchannels <= CV_CN_MAX );

// for each gaussian mixture of each pixel bg model we store ...

// the mixture weight (w),

// the mean (nchannels values) and

// the covariance

bgmodel.create( 1, frameSize.height*frameSize.width*nmixtures*(2 + nchannels), CV_32F );

//make the array for keeping track of the used modes per pixel - all zeros at start

bgmodelUsedModes.create(frameSize,CV_8U);

bgmodelUsedModes = Scalar::all(0);

}

void BackgroundSubtractorMOG2::operator()(InputArray _image, OutputArray _fgmask, double learningRate)

{

Mat image = _image.getMat();

bool needToInitialize = nframes == 0 || learningRate >= 1 || image.size() != frameSize || image.type() != frameType;

if( needToInitialize )

initialize(image.size(), image.type());

_fgmask.create( image.size(), CV_8U );

Mat fgmask = _fgmask.getMat();

++nframes;

learningRate = learningRate >= 0 && nframes > 1 ? learningRate : 1./min( 2*nframes, history );

CV_Assert(learningRate >= 0);

// 并行计算,应该是分为了image.rows个计算单元,所以每个计算单元只计算每一行的“图像”

parallel_for_(Range(0, image.rows),

MOG2Invoker(image, fgmask,

(GMM*)bgmodel.data,

(float*)(bgmodel.data + sizeof(GMM)*nmixtures*image.rows*image.cols),

bgmodelUsedModes.data, nmixtures, (float)learningRate,

(float)varThreshold,

backgroundRatio, varThresholdGen,

fVarInit, fVarMin, fVarMax, float(-learningRate*fCT), fTau,

bShadowDetection, nShadowDetection));

}

// 可以调用该函数得到视频中每一帧的不受前景物体存在影响的近似背景图像

void BackgroundSubtractorMOG2::getBackgroundImage(OutputArray backgroundImage) const

{

int nchannels = CV_MAT_CN(frameType);

CV_Assert(nchannels == 1 || nchannels == 3);

Mat meanBackground(frameSize, CV_MAKETYPE(CV_8U, nchannels), Scalar::all(0));

int firstGaussianIdx = 0;

const GMM* gmm = (GMM*)bgmodel.data;

const float* mean = reinterpret_cast<const float*>(gmm + frameSize.width*frameSize.height*nmixtures);

std::vector<float> meanVal(nchannels, 0.f);

for(int row=0; row<meanBackground.rows; row++)

{

for(int col=0; col<meanBackground.cols; col++)

{

int nmodes = bgmodelUsedModes.at<uchar>(row, col);

float totalWeight = 0.f;

for(int gaussianIdx = firstGaussianIdx; gaussianIdx < firstGaussianIdx + nmodes; gaussianIdx++)

{

GMM gaussian = gmm[gaussianIdx];

size_t meanPosition = gaussianIdx*nchannels;

for(int chn = 0; chn < nchannels; chn++)

{

meanVal[chn] += gaussian.weight * mean[meanPosition + chn];// 核心代码,就是利用多个高斯分布的权值、均值的加权和作为背景像素值

}

totalWeight += gaussian.weight;

if(totalWeight > backgroundRatio)

break;

}

float invWeight = 1.f/totalWeight;

switch(nchannels)

{

case 1:

meanBackground.at<uchar>(row, col) = (uchar)(meanVal[0] * invWeight);

meanVal[0] = 0.f;

break;

case 3:

Vec3f& meanVec = *reinterpret_cast<Vec3f*>(&meanVal[0]);

meanBackground.at<Vec3b>(row, col) = Vec3b(meanVec * invWeight);

meanVec = 0.f;

break;

}

firstGaussianIdx += nmixtures;

}

}

meanBackground.copyTo(backgroundImage);

}

}

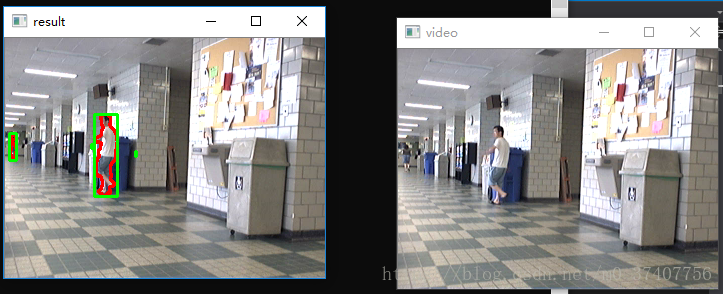

经过实验可以看出,GMM是分离前景和背景,输出为前景二值掩码。 要经过一些形态学运算,找轮廓后会得到检测部分,即目标检测。结果如下图:

到现在目标检测算是初步完成了。