Copyright notice: this is an original article by the blogger and may not be reproduced without permission. https://blog.csdn.net/u014281392/article/details/81229260

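Two data files have already been cleaned and processed:

- application_{train|test}.csv: detailed client information

- bureau.csv: the clients' historical credit reports

Below, the features from these two datasets are merged and a model is trained with LightGBM (Light Gradient Boosting Machine). Using only the client data, the earlier prediction scored 0.734; this time the clients' credit report information is added as well.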

```python
import gc
import warnings

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import lightgbm as lgb
from sklearn.model_selection import KFold
from sklearn.metrics import roc_auc_score

warnings.filterwarnings('ignore')

# Jupyter magic: only valid inside a notebook
%matplotlib inline
```

Load data

```python
train_data = pd.read_csv('data/no_select_train.csv')
test_data = pd.read_csv('data/no_select_test.csv')
bureau_data = pd.read_csv('data/bureau_features.csv')

bureau_data.shape  # (305811, 94)
train_data.shape   # (307511, 268)
test_data.shape    # (48744, 267)
```
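The training table has 268 columns and the test table 267; the only difference should be TARGET. One-hot encoded columns can easily drift apart between the two files, so a quick check before merging and training is cheap insurance. A minimal sketch on toy frames; `columns_aligned` is a hypothetical helper, not part of the original notebook:

```python
import pandas as pd

# Toy stand-ins for the real files (made-up values): the training table
# has exactly one extra column, TARGET.
train = pd.DataFrame({"SK_ID_CURR": [1, 2], "AMT_CREDIT": [1000.0, 2000.0], "TARGET": [0, 1]})
test = pd.DataFrame({"SK_ID_CURR": [3], "AMT_CREDIT": [1500.0]})


def columns_aligned(train_df, test_df, target="TARGET"):
    """True if train and test share the same feature columns apart from the target."""
    extra_in_train = set(train_df.columns) - set(test_df.columns)
    extra_in_test = set(test_df.columns) - set(train_df.columns)
    return extra_in_train == {target} and not extra_in_test


print(columns_aligned(train, test))  # True
```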

What is new this time is the clients' historical credit records.
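bureau_features.csv was prepared beforehand and its construction is not shown in this post. Since the left join further down keeps exactly 307511 training rows, the file holds at most one row per SK_ID_CURR, so bureau.csv (one row per past credit) must have been aggregated per client. A minimal sketch of that kind of aggregation, assuming groupby statistics and a `<column>_<stat>` naming scheme (consistent with names such as CREDIT_DAY_OVERDUE_min in the zero-importance list below, but the author's exact statistics are an assumption):

```python
import pandas as pd

# Toy stand-in for bureau.csv: several past credits per client (made-up values).
bureau = pd.DataFrame({
    "SK_ID_CURR": [100001, 100001, 100002],
    "CREDIT_DAY_OVERDUE": [0, 10, 0],
    "AMT_CREDIT_SUM": [5000.0, 7000.0, 3000.0],
})

# One row per client: count, mean, max and min of every other column.
agg = bureau.groupby("SK_ID_CURR").agg(["count", "mean", "max", "min"])

# Flatten the (column, stat) MultiIndex into names like CREDIT_DAY_OVERDUE_min.
agg.columns = [f"{col}_{stat}" for col, stat in agg.columns]
agg = agg.reset_index()
print(agg)
```

The result can then be left-joined onto the application table on SK_ID_CURR, which is what the merge step below does with the prepared file.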

Build Model

```python
def model(features, test_features, n_folds=10):
    # Keep the ID columns
    train_ids = features['SK_ID_CURR']
    test_ids = test_features['SK_ID_CURR']

    # Labels as a 1-D Series (LightGBM expects a vector, not a one-column DataFrame)
    labels = features['TARGET'].astype(int)

    # Drop the ID and TARGET columns
    features = features.drop(['SK_ID_CURR', 'TARGET'], axis=1)
    test_features = test_features.drop(['SK_ID_CURR'], axis=1)

    # Feature names
    feature_names = list(features.columns)

    # n_folds-fold cross-validation (10 by default)
    k_fold = KFold(n_splits=n_folds, shuffle=True, random_state=50)

    # Test-set predictions, averaged over the folds
    test_predictions = np.zeros(test_features.shape[0])
    # Out-of-fold (validation) predictions
    out_of_fold = np.zeros(features.shape[0])
    # Feature importances, averaged over the folds
    feature_importance_values = np.zeros(len(feature_names))

    # Best score of each fold
    valid_scores = []
    train_scores = []

    count = 0
    for train_indices, valid_indices in k_fold.split(features):
        # KFold yields positional indices, so use .iloc (.loc only works with a default RangeIndex)
        train_features, train_labels = features.iloc[train_indices], labels.iloc[train_indices]
        valid_features, valid_labels = features.iloc[valid_indices], labels.iloc[valid_indices]

        # Create the model.
        # Note: subsample (bagging) only takes effect when subsample_freq > 0.
        clf = lgb.LGBMClassifier(n_estimators=10000, objective='binary',
                                 class_weight='balanced', learning_rate=0.05,
                                 reg_alpha=0.1, reg_lambda=0.1,
                                 subsample=0.8, n_jobs=-1, random_state=50)

        # Train the model. LightGBM 4.0 removed early_stopping_rounds/verbose from fit();
        # the equivalent callbacks are used instead.
        clf.fit(train_features, train_labels, eval_metric='auc',
                eval_set=[(valid_features, valid_labels), (train_features, train_labels)],
                eval_names=['valid', 'train'], categorical_feature='auto',
                callbacks=[lgb.early_stopping(100), lgb.log_evaluation(200)])

        # Best iteration found by early stopping
        best_iteration = clf.best_iteration_

        # Test-set predictions: average over the folds
        test_predictions += clf.predict_proba(test_features, num_iteration=best_iteration)[:, 1] / n_folds
        # Out-of-fold predictions for this fold's validation rows
        out_of_fold[valid_indices] = clf.predict_proba(valid_features, num_iteration=best_iteration)[:, 1]
        # Feature importances
        feature_importance_values += clf.feature_importances_ / n_folds

        # Record the best scores
        valid_scores.append(clf.best_score_['valid']['auc'])
        train_scores.append(clf.best_score_['train']['auc'])

        # Free memory
        del clf, train_features, valid_features
        gc.collect()

        count += 1
        print("%d_fold is over" % count)

    # Submission dataframe
    submission = pd.DataFrame({'SK_ID_CURR': test_ids, 'TARGET': test_predictions})
    # Feature importances
    feature_importances = pd.DataFrame({'feature': feature_names, 'importance': feature_importance_values})

    # Overall validation score: AUC over all out-of-fold predictions pooled together
    valid_auc = roc_auc_score(labels, out_of_fold)

    # Overall scores (train: mean of the fold scores)
    valid_scores.append(valid_auc)
    train_scores.append(np.mean(train_scores))

    # Dataframe of the per-fold and overall scores
    fold_names = list(range(n_folds)) + ['overall']
    metrics = pd.DataFrame({'fold': fold_names,
                            'train': train_scores,
                            'valid': valid_scores})

    return submission, metrics, feature_importances
```

Feature aggregation

Left join

```python
train_data = train_data.merge(bureau_data, on='SK_ID_CURR', how='left')
train_data.shape  # (307511, 361)
```

```python
train_data.TARGET.value_counts()
```

```
0    282686
1     24825
Name: TARGET, dtype: int64
```

With a left join, the number of samples stays the same (307511 rows).
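The value counts above show a strong class imbalance (282686 vs. 24825), which is why the model passes `class_weight='balanced'`. In LightGBM's scikit-learn wrapper this mode weights each class by n_samples / (n_classes * count), the same formula scikit-learn uses. Plugging in the counts of the full training set (inside each fold the numbers shift slightly, the ratio barely changes):

```python
# Class counts from train_data.TARGET.value_counts()
counts = {0: 282686, 1: 24825}
n_samples = sum(counts.values())
n_classes = len(counts)

# 'balanced' weighting: n_samples / (n_classes * count_of_class)
weights = {cls: n_samples / (n_classes * c) for cls, c in counts.items()}

print(n_samples)                                     # 307511
print({k: round(v, 4) for k, v in weights.items()})  # {0: 0.5439, 1: 6.1936}
```

Each default (TARGET = 1) therefore counts roughly 11.4 times as much as a repaid loan, so both classes contribute the same total weight to the loss.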

```python
test_data = test_data.merge(bureau_data, on='SK_ID_CURR', how='left')
test_data.shape  # (48744, 360)
```
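The difference between the left join used here and the inner join tried later can be seen on toy frames. `indicator=True` adds a `_merge` column that counts clients without a bureau record, and `validate="one_to_one"` makes pandas raise an error if duplicate keys would silently multiply rows. A minimal sketch with made-up data; LightGBM handles the resulting NaNs natively, so they do not need imputing:

```python
import pandas as pd

# Toy frames (made-up values): client 3 has no bureau record.
app = pd.DataFrame({"SK_ID_CURR": [1, 2, 3], "TARGET": [0, 1, 0]})
bureau_feats = pd.DataFrame({"SK_ID_CURR": [1, 2], "CREDIT_DAY_OVERDUE_max": [0, 30]})

# Left join: every application row is kept; missing bureau features become NaN.
left = app.merge(bureau_feats, on="SK_ID_CURR", how="left",
                 validate="one_to_one", indicator=True)
# Inner join: only clients present in both tables are kept.
inner = app.merge(bureau_feats, on="SK_ID_CURR", how="inner")

print(len(left), len(inner))                         # 3 2
print((left["_merge"] == "left_only").sum())         # 1
print(left["CREDIT_DAY_OVERDUE_max"].isna().sum())   # 1
```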

```python
submit5, metrics, feature_importance = model(train_data, test_data, n_folds=10)
```

```
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811087 valid's auc: 0.776033
[400] train's auc: 0.84353 valid's auc: 0.776933
Early stopping, best iteration is:
[405] train's auc: 0.844392 valid's auc: 0.77704
1_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811909 valid's auc: 0.763234
[400] train's auc: 0.844175 valid's auc: 0.763789
Early stopping, best iteration is:
[310] train's auc: 0.830712 valid's auc: 0.763865
2_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811204 valid's auc: 0.771043
[400] train's auc: 0.844348 valid's auc: 0.772213
Early stopping, best iteration is:
[375] train's auc: 0.840635 valid's auc: 0.772462
3_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811886 valid's auc: 0.772251
[400] train's auc: 0.84428 valid's auc: 0.773033
Early stopping, best iteration is:
[411] train's auc: 0.845925 valid's auc: 0.773179
4_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811444 valid's auc: 0.772315
Early stopping, best iteration is:
[277] train's auc: 0.825443 valid's auc: 0.773191
5_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.810696 valid's auc: 0.779534
[400] train's auc: 0.844023 valid's auc: 0.780508
Early stopping, best iteration is:
[321] train's auc: 0.832286 valid's auc: 0.781193
6_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.810864 valid's auc: 0.776371
[400] train's auc: 0.84338 valid's auc: 0.777393
Early stopping, best iteration is:
[447] train's auc: 0.850437 valid's auc: 0.777718
7_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811775 valid's auc: 0.76751
[400] train's auc: 0.844592 valid's auc: 0.76855
Early stopping, best iteration is:
[385] train's auc: 0.842334 valid's auc: 0.768839
8_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811555 valid's auc: 0.773908
[400] train's auc: 0.844837 valid's auc: 0.776798
Early stopping, best iteration is:
[438] train's auc: 0.850265 valid's auc: 0.776938
9_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.812462 valid's auc: 0.76771
[400] train's auc: 0.845181 valid's auc: 0.768348
Early stopping, best iteration is:
[393] train's auc: 0.844196 valid's auc: 0.768489
10_fold is over
```
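The log shows early stopping at work: training continues while the validation AUC keeps improving, stops once it has not improved for 100 rounds, and keeps the best iteration (for example [405] in the first fold). A minimal pure-Python sketch of that rule on a made-up score sequence; LightGBM's own callback additionally handles several metrics and validation sets, which this sketch ignores:

```python
def early_stopping_best_iteration(valid_scores, patience):
    """Return (best_iteration, stop_iteration), 1-based, for a higher-is-better metric.

    Training stops once `patience` rounds have passed without beating
    the best score seen so far.
    """
    best_score = float("-inf")
    best_iter = 0
    for i, score in enumerate(valid_scores, start=1):
        if score > best_score:
            best_score, best_iter = score, i
        elif i - best_iter >= patience:
            return best_iter, i
    return best_iter, len(valid_scores)


# Made-up validation AUCs: best at round 4, then slowly degrading.
scores = [0.70, 0.72, 0.75, 0.76, 0.759, 0.758, 0.757, 0.756]
print(early_stopping_best_iteration(scores, patience=3))  # (4, 7)
```

With `n_estimators=10000` as a generous upper bound, early stopping is what actually decides the number of trees in each fold (between 277 and 447 in the run above).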

```python
submit5.head()
```

| | SK_ID_CURR | TARGET |
|---|---|---|
| 0 | 100001 | 0.264254 |
| 1 | 100005 | 0.554692 |
| 2 | 100013 | 0.220868 |
| 3 | 100028 | 0.252361 |
| 4 | 100038 | 0.705160 |

```python
metrics
```

| | fold | train | valid |
|---|---|---|---|
| 0 | 0 | 0.844392 | 0.777040 |
| 1 | 1 | 0.830712 | 0.763865 |
| 2 | 2 | 0.840635 | 0.772462 |
| 3 | 3 | 0.845925 | 0.773179 |
| 4 | 4 | 0.825443 | 0.773191 |
| 5 | 5 | 0.832286 | 0.781193 |
| 6 | 6 | 0.850437 | 0.777718 |
| 7 | 7 | 0.842334 | 0.768839 |
| 8 | 8 | 0.850265 | 0.776938 |
| 9 | 9 | 0.844196 | 0.768489 |
| 10 | overall | 0.840663 | 0.773266 |

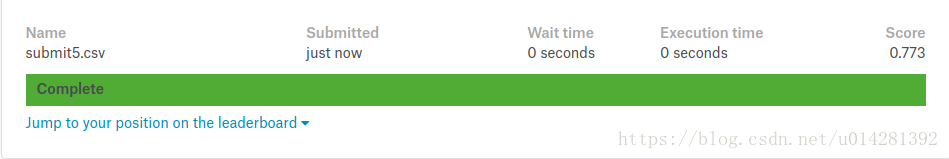

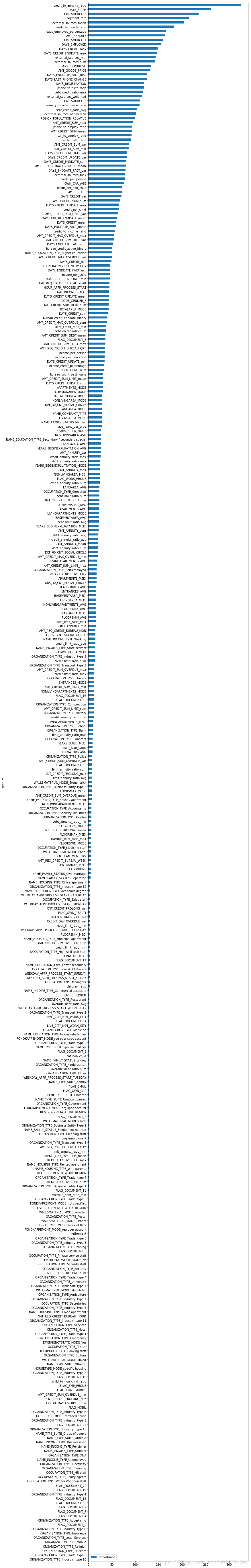
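In the metrics table, the train value of the overall row is the mean of the ten fold scores, but the valid value is `roc_auc_score` computed once over all out-of-fold predictions pooled together. The two are not the same quantity: AUC only looks at ranking, and pooling mixes predictions from fold models whose probabilities may be on different scales. Here they happen to be close (the mean of the ten fold valid AUCs is about 0.77329, the pooled value 0.773266). A small numpy sketch with made-up numbers, using the pairwise (Mann-Whitney) form of AUC instead of scikit-learn, shows how far apart they can drift:

```python
import numpy as np


def auc(y_true, y_score):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 1/2."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score, dtype=float)
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    diff = pos[:, None] - neg[None, :]  # every positive/negative pair
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size


# Two folds of made-up out-of-fold predictions; fold B's model
# outputs systematically higher probabilities than fold A's.
y_a, p_a = np.array([0, 0, 1, 1]), np.array([0.1, 0.3, 0.2, 0.4])
y_b, p_b = np.array([0, 0, 1, 1]), np.array([0.6, 0.8, 0.7, 0.9])

fold_aucs = [auc(y_a, p_a), auc(y_b, p_b)]
pooled = auc(np.concatenate([y_a, y_b]), np.concatenate([p_a, p_b]))
print(np.mean(fold_aucs), pooled)  # 0.75 0.625
```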

```python
submit5.to_csv('submit5.csv', index=False)
```

Feature importance

```python
feature_importance = feature_importance.sort_values(by='importance')
feature_importance = feature_importance.set_index('feature')
feature_importance.plot(kind='barh', figsize=(10, 100))
```

Features with importance == 0

```python
feature_importance = feature_importance.reset_index()

# Features with importance == 0
weak_importance_features = list(feature_importance[feature_importance['importance'] == 0]['feature'])
weak_importance_features
```

```
['ORGANIZATION_TYPE_Industry: type 10',
 'FLAG_DOCUMENT_21',
 'FLAG_DOCUMENT_20',
 'FLAG_DOCUMENT_19',
 'child_to_non_child_ratio',
 'FLAG_DOCUMENT_17',
 'EMERGENCYSTATE_MODE_No',
 'ORGANIZATION_TYPE_Industry: type 13',
 'FLAG_DOCUMENT_12',
 'FLAG_DOCUMENT_10',
 'ORGANIZATION_TYPE_XNA',
 'FLAG_DOCUMENT_7',
 'FLAG_DOCUMENT_5',
 'FLAG_DOCUMENT_4',
 'FLAG_DOCUMENT_2',
 'ORGANIZATION_TYPE_Trade: type 1',
 'ORGANIZATION_TYPE_Industry: type 4',
 'ORGANIZATION_TYPE_Industry: type 6',
 'ORGANIZATION_TYPE_Religion',
 'ORGANIZATION_TYPE_Industry: type 8',
 'NAME_TYPE_SUITE_Group of people',
 'CREDIT_DAY_OVERDUE_min',
 'ORGANIZATION_TYPE_Mobile',
 'CNT_CREDIT_PROLONG_min',
 'OCCUPATION_TYPE_IT staff',
 'OCCUPATION_TYPE_HR staff',
 'ORGANIZATION_TYPE_Advertising',
 'ORGANIZATION_TYPE_Cleaning',
 'FLAG_MOBIL',
 'FLAG_EMP_PHONE',
 'NAME_INCOME_TYPE_Pensioner',
 'NAME_INCOME_TYPE_Student',
 'FLAG_CONT_MOBILE',
 'NAME_INCOME_TYPE_Businessman',
 'AMT_CREDIT_SUM_OVERDUE_min']
```
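Many of these zero-importance columns are flags or rare one-hot categories (FLAG_DOCUMENT_*, ORGANIZATION_TYPE_*), which are plausibly constant or almost constant in the training data, so the trees never find a useful split on them. A complementary, model-free check is to look at the share of each column's most frequent value before training. A minimal sketch; `near_constant_columns` and the 0.99 threshold are illustrative choices, not from the original post:

```python
import pandas as pd


def near_constant_columns(df, threshold=0.99):
    """Columns whose most frequent value (NaN counted as a value) covers >= threshold of the rows."""
    cols = []
    for col in df.columns:
        top_share = df[col].value_counts(normalize=True, dropna=False).iloc[0]
        if top_share >= threshold:
            cols.append(col)
    return cols


# Toy data: FLAG_A is always 1, FLAG_B is 1 in 995 of 1000 rows, AMT varies.
toy = pd.DataFrame({
    "FLAG_A": [1] * 1000,
    "FLAG_B": [1] * 995 + [0] * 5,
    "AMT": range(1000),
})
print(near_constant_columns(toy))  # ['FLAG_A', 'FLAG_B']
```

A near-constant column can still carry signal (a rare flag may be very predictive when it is set), so this is a hint for inspection rather than an automatic drop rule.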

Drop weak features

```python
train_data = train_data.drop(weak_importance_features, axis=1)
test_data = test_data.drop(weak_importance_features, axis=1)
```

Train the model again

```python
submit5_1, metrics, feature_importance = model(train_data, test_data, n_folds=10)
```

```
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811087 valid's auc: 0.776033
[400] train's auc: 0.84353 valid's auc: 0.776933
Early stopping, best iteration is:
[405] train's auc: 0.844392 valid's auc: 0.77704
1_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811909 valid's auc: 0.763234
[400] train's auc: 0.844175 valid's auc: 0.763789
Early stopping, best iteration is:
[310] train's auc: 0.830712 valid's auc: 0.763865
2_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811204 valid's auc: 0.771043
[400] train's auc: 0.844348 valid's auc: 0.772213
Early stopping, best iteration is:
[375] train's auc: 0.840635 valid's auc: 0.772462
3_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811886 valid's auc: 0.772251
[400] train's auc: 0.84428 valid's auc: 0.773033
Early stopping, best iteration is:
[411] train's auc: 0.845925 valid's auc: 0.773179
4_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811444 valid's auc: 0.772315
Early stopping, best iteration is:
[277] train's auc: 0.825443 valid's auc: 0.773191
5_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.810696 valid's auc: 0.779534
[400] train's auc: 0.844023 valid's auc: 0.780508
Early stopping, best iteration is:
[321] train's auc: 0.832286 valid's auc: 0.781193
6_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.810864 valid's auc: 0.776371
[400] train's auc: 0.84338 valid's auc: 0.777393
Early stopping, best iteration is:
[447] train's auc: 0.850437 valid's auc: 0.777718
7_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811775 valid's auc: 0.76751
[400] train's auc: 0.844592 valid's auc: 0.76855
Early stopping, best iteration is:
[385] train's auc: 0.842334 valid's auc: 0.768839
8_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.811555 valid's auc: 0.773908
[400] train's auc: 0.844837 valid's auc: 0.776798
Early stopping, best iteration is:
[438] train's auc: 0.850265 valid's auc: 0.776938
9_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.812462 valid's auc: 0.76771
[400] train's auc: 0.845181 valid's auc: 0.768348
Early stopping, best iteration is:
[475] train's auc: 0.855547 valid's auc: 0.768638
10_fold is over
```

```python
submit5_1.head()
```

| | SK_ID_CURR | TARGET |
|---|---|---|
| 0 | 100001 | 0.264469 |
| 1 | 100005 | 0.554822 |
| 2 | 100013 | 0.220767 |
| 3 | 100028 | 0.252001 |
| 4 | 100038 | 0.705017 |

```python
submit5_1.to_csv('submit5_1.csv', index=False)
metrics
```

| | fold | train | valid |
|---|---|---|---|
| 0 | 0 | 0.844392 | 0.777040 |
| 1 | 1 | 0.830712 | 0.763865 |
| 2 | 2 | 0.840635 | 0.772462 |
| 3 | 3 | 0.845925 | 0.773179 |
| 4 | 4 | 0.825443 | 0.773191 |
| 5 | 5 | 0.832286 | 0.781193 |
| 6 | 6 | 0.850437 | 0.777718 |
| 7 | 7 | 0.842334 | 0.768839 |
| 8 | 8 | 0.850265 | 0.776938 |
| 9 | 9 | 0.855547 | 0.768638 |
| 10 | overall | 0.841798 | 0.773265 |

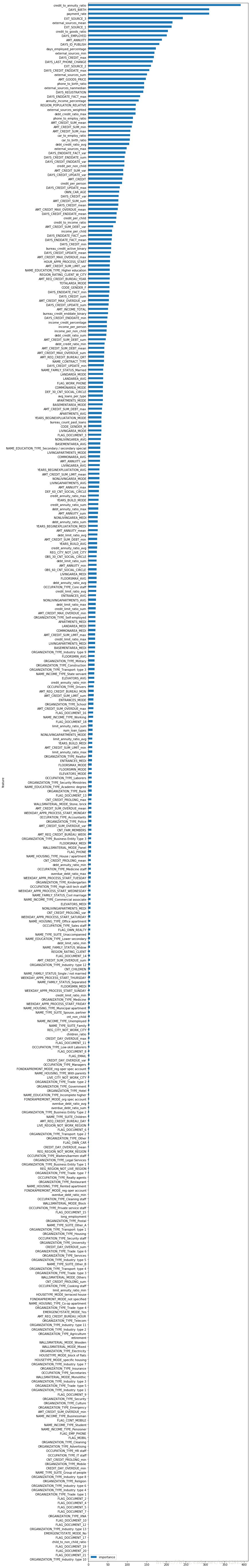
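Comparing the two metrics tables fold by fold shows how little dropping the zero-importance features changed: only the last fold moved. This is largely expected, since split importance is a count averaged over the folds, so a feature with zero importance was never used for a split in any fold. Using the valid AUCs copied from the two tables:

```python
import numpy as np

# Per-fold validation AUCs copied from the two metrics tables
valid_all = np.array([0.777040, 0.763865, 0.772462, 0.773179, 0.773191,
                      0.781193, 0.777718, 0.768839, 0.776938, 0.768489])
valid_dropped = np.array([0.777040, 0.763865, 0.772462, 0.773179, 0.773191,
                          0.781193, 0.777718, 0.768839, 0.776938, 0.768638])

diff = valid_dropped - valid_all
changed = np.flatnonzero(diff)   # indices of folds whose score changed
print(changed)                   # [9]
print(round(float(diff[9]), 6))  # 0.000149
```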

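```python
# The returned frame is unsorted with a numeric index: sort and index by name before plotting
(feature_importance.sort_values(by='importance')
                   .set_index('feature')
                   .plot(kind='barh', figsize=(10, 100)))
```

(Figure: feature importance)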


Feature aggregation (inner join)

```python
train_data = pd.read_csv('data/no_select_train.csv')
test_data = pd.read_csv('data/no_select_test.csv')
bureau_data = pd.read_csv('data/bureau_features.csv')

# Inner join for the training data: only clients with bureau records are kept
train_data = train_data.merge(bureau_data, on='SK_ID_CURR', how='inner')
# The test data is still left-joined so that every test client gets a prediction
test_data = test_data.merge(bureau_data, on='SK_ID_CURR', how='left')

train_data.shape  # (263491, 361)
```
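The size of the cut follows directly from the two shapes. Keep in mind that with the inner join the cross-validation AUC is measured only on clients that have a bureau history, so it is not directly comparable with the left-join runs.

```python
n_train_left = 307511   # training rows after the left join (unchanged)
n_train_inner = 263491  # training rows after the inner join

lost = n_train_left - n_train_inner
print(lost, f"{lost / n_train_left:.1%}")  # 44020 14.3%
```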

```python
submit6, metrics, feature_importance = model(train_data, test_data, n_folds=10)
```

```
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822727 valid's auc: 0.770619
[400] train's auc: 0.859328 valid's auc: 0.77186
Early stopping, best iteration is:
[323] train's auc: 0.846435 valid's auc: 0.77214
1_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822 valid's auc: 0.776964
Early stopping, best iteration is:
[247] train's auc: 0.83182 valid's auc: 0.777768
2_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822354 valid's auc: 0.779729
[400] train's auc: 0.858779 valid's auc: 0.780386
Early stopping, best iteration is:
[311] train's auc: 0.843865 valid's auc: 0.78096
3_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822429 valid's auc: 0.77889
[400] train's auc: 0.859017 valid's auc: 0.779426
Early stopping, best iteration is:
[368] train's auc: 0.853733 valid's auc: 0.779905
4_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822714 valid's auc: 0.776522
Early stopping, best iteration is:
[185] train's auc: 0.819432 valid's auc: 0.776777
5_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822052 valid's auc: 0.781852
[400] train's auc: 0.858622 valid's auc: 0.782497
Early stopping, best iteration is:
[394] train's auc: 0.857795 valid's auc: 0.782713
6_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.822871 valid's auc: 0.766198
Early stopping, best iteration is:
[288] train's auc: 0.840463 valid's auc: 0.76693
7_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.82312 valid's auc: 0.764778
Early stopping, best iteration is:
[297] train's auc: 0.841792 valid's auc: 0.765418
8_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.821681 valid's auc: 0.783672
[400] train's auc: 0.859008 valid's auc: 0.785079
Early stopping, best iteration is:
[406] train's auc: 0.859982 valid's auc: 0.785162
9_fold is over
Training until validation scores don't improve for 100 rounds.
[200] train's auc: 0.823228 valid's auc: 0.771831
[400] train's auc: 0.859472 valid's auc: 0.772901
Early stopping, best iteration is:
[385] train's auc: 0.857328 valid's auc: 0.773237
10_fold is over
```

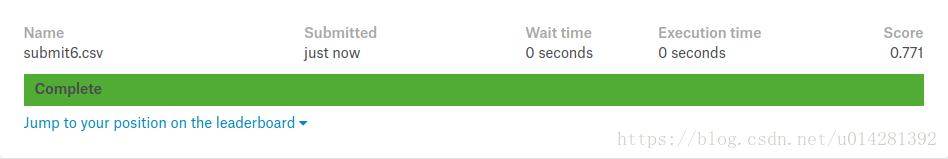

```python
submit6.to_csv('submit6.csv', index=False)
metrics
```

| | fold | train | valid |
|---|---|---|---|
| 0 | 0 | 0.846435 | 0.772140 |
| 1 | 1 | 0.831820 | 0.777768 |
| 2 | 2 | 0.843865 | 0.780960 |
| 3 | 3 | 0.853733 | 0.779905 |
| 4 | 4 | 0.819432 | 0.776777 |
| 5 | 5 | 0.857795 | 0.782713 |
| 6 | 6 | 0.840463 | 0.766930 |
| 7 | 7 | 0.841792 | 0.765418 |
| 8 | 8 | 0.859982 | 0.785162 |
| 9 | 9 | 0.857328 | 0.773237 |
| 10 | overall | 0.845265 | 0.776006 |

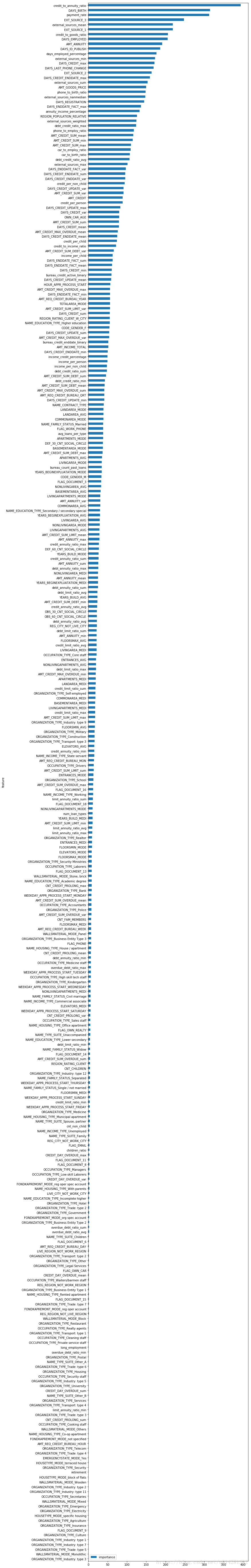

Feature importance

```python
feature_importance = feature_importance.sort_values(by='importance')
feature_importance = feature_importance.set_index('feature')
feature_importance.plot(kind='barh', figsize=(10, 100))
```

Summary:

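With a left join, the number of training samples stays the same, but samples that have no records in bureau.csv end up with many missing values. Even so, after adding the clients' credit report history, the score rose from 0.723 to 0.773. Dropping the features with zero importance did not hurt the model's performance, but it did not improve it either. With an inner join, the training data loses more than 40,000 samples, and this does affect the result: the score dropped by 0.002, although the overall cross-validation AUC on the reduced training set was higher (0.776 vs. 0.773). Next, more data will be added: the clients' cash and POS consumption data in POS_CASH_balance.csv.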