Copyright notice: this is an original article by the blogger and may not be reposted without the blogger's permission. https://blog.csdn.net/lulujiang1996/article/details/81667720

ID3:

The key code is shown below (for the complete code, see: https://blog.csdn.net/lulujiang1996/article/details/81191571)

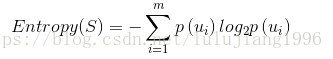

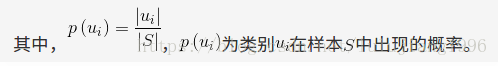

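1. Information gain = entropy of the whole data set - conditional entropy given a feature

2. Select the feature with the largest information gain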
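The two steps above correspond to the standard textbook definitions, sketched here for reference. The notation is my own assumption and may not match the images: $D$ is the data set, $p_k$ the proportion of samples in class $k$, $A$ a feature, and $D_v$ the subset of $D$ where $A = v$.

```latex
\begin{align}
H(D) &= -\sum_{k} p_k \log_2 p_k \\
H(D \mid A) &= \sum_{v} \frac{|D_v|}{|D|}\, H(D_v) \\
\mathrm{Gain}(D, A) &= H(D) - H(D \mid A) \\
A^{*} &= \arg\max_{A}\ \mathrm{Gain}(D, A)
\end{align}
```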

ID3 Python implementation

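    def chooseBestFeature(df):
        # Entropy of the whole data set (the last column is the class label)
        baseEntropy = shannonEnt(df)
        # Information gain of every feature, indexed by column position
        infoGain = []
        # Number of candidate features (every column except the label)
        numFeatures = len(df.columns) - 1
        # Iterate over the features
        for i in range(numFeatures):
            newEntropy = 0.0
            # Split the data set on each distinct value of feature i
            # and accumulate the conditional entropy
            for value in set(df.iloc[:, i]):
                subSet = splitDataset(df, i, value)
                # P(x): fraction of samples that take this value
                prob = len(subSet) / float(len(df))
                # P1*H(x1) + P2*H(x2) + ... + Pn*H(xn)
                newEntropy += prob * shannonEnt(subSet)
            # Information gain of feature i
            infogain = baseEntropy - newEntropy
            infoGain.append(infogain)
        # Pick the feature with the largest information gain
        bestInfoGain = max(infoGain)
        bestFeature = infoGain.index(bestInfoGain)
        return bestFeature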
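The function above relies on `shannonEnt` and `splitDataset`, which live in the linked post and are not shown here. The sketch below is only my guess at what they might look like, assuming a pandas DataFrame whose last column is the class label. The `infoGain` helper and the toy data are hypothetical and exist only to make the example runnable.

```python
from math import log

import pandas as pd


def shannonEnt(df):
    """Shannon entropy (base 2) of the class labels in the last column."""
    total = float(len(df))
    ent = 0.0
    for count in df.iloc[:, -1].value_counts():
        prob = count / total
        ent -= prob * log(prob, 2)
    return ent


def splitDataset(df, axis, value):
    """Rows whose feature at column position `axis` equals `value`."""
    return df[df.iloc[:, axis] == value]


def infoGain(df, axis):
    """Information gain obtained by splitting on the feature at `axis`."""
    newEntropy = 0.0
    for value in set(df.iloc[:, axis]):
        subSet = splitDataset(df, axis, value)
        newEntropy += len(subSet) / float(len(df)) * shannonEnt(subSet)
    return shannonEnt(df) - newEntropy


# Hypothetical toy data: the last column is the class label.
toy = pd.DataFrame({
    "outlook": ["sunny", "sunny", "rain", "rain"],
    "windy": ["yes", "no", "yes", "no"],
    "class": ["no", "no", "yes", "yes"],
})

# One information gain per feature column, in column order.
gains = [infoGain(toy, i) for i in range(len(toy.columns) - 1)]
print(gains)
```

Here `outlook` separates the two classes perfectly, so its gain equals the full 1 bit of entropy, while `windy` leaves both subsets evenly mixed and gains nothing.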

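ID3 sklearn implementation (sklearn's DecisionTreeClassifier is an optimized CART that only makes binary splits; criterion='entropy' gives it ID3's information-gain criterion, not a literal ID3):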

    from sklearn import datasets
    import numpy as np
    from sklearn.model_selection import train_test_split
    from sklearn import tree
    from sklearn.metrics import accuracy_score

    # Fix the global NumPy seed so the train/test split is reproducible
    np.random.seed(0)
    iris = datasets.load_iris()
    data_x = iris.data
    data_y = iris.target
    # Hold out 30% of the samples for testing
    train_data, test_data, train_y, test_y = train_test_split(data_x, data_y, test_size=0.3)
    # criterion='entropy' chooses splits by information gain
    clf = tree.DecisionTreeClassifier(criterion='entropy')
    # Fit on the training split only, so the test accuracy is not inflated
    clf.fit(train_data, train_y)
    y_pre = clf.predict(test_data)
    y_prob = clf.predict_proba(test_data)
    print(accuracy_score(test_y, y_pre))
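To see what the fitted tree actually learned, it can help to print its rules. The sketch below uses `sklearn.tree.export_text`; the `max_depth=2` and `random_state=0` settings are my own arbitrary choices to keep the printout short and repeatable, not part of the code above.

```python
from sklearn import datasets, tree

iris = datasets.load_iris()

# A shallow tree keeps the printout short; max_depth=2 is an arbitrary choice.
clf = tree.DecisionTreeClassifier(criterion='entropy', max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# export_text renders the learned splits as indented plain text.
rules = tree.export_text(clf, feature_names=list(iris.feature_names))
print(rules)
```

Every rule in the printout is a binary threshold test of the form `feature <= value`, which is how sklearn's trees split continuous features.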

C4.5

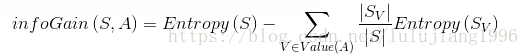

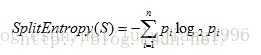

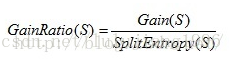

The information gain ratio replaces the information gain:

Split information

Information gain ratio
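For reference, the standard definitions behind these two labels are sketched below. The notation is an assumption on my part and may differ from the images: $D$ is the data set, $A$ a feature, $D_v$ the subset of $D$ where $A = v$, and $\mathrm{Gain}(D, A)$ the information gain used by ID3.

```latex
\begin{align}
\mathrm{SplitInfo}(D, A) &= -\sum_{v} \frac{|D_v|}{|D|} \log_2 \frac{|D_v|}{|D|} \\
\mathrm{GainRatio}(D, A) &= \frac{\mathrm{Gain}(D, A)}{\mathrm{SplitInfo}(D, A)}
\end{align}
```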

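(A bit more explanation: the split information is just an entropy, computed over the feature's own values instead of over the class labels. With that in mind the gain ratio is easy to understand.

When we split on a feature that has many distinct values, the conditional entropy is very small, so the information gain is very large. But the split information of such a feature is also very large, so the gain ratio does not grow the way the raw gain does.)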
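The effect described above can be checked on a small example. This is a minimal sketch using only the standard library; the function names and the toy data (a unique `row_id` per sample versus a two-valued `colour`) are hypothetical and chosen just to illustrate the point.

```python
from collections import Counter
from math import log2


def entropy(values):
    """Shannon entropy (base 2) of a list of discrete values."""
    total = len(values)
    return -sum(c / total * log2(c / total) for c in Counter(values).values())


def gain_and_ratio(feature, labels):
    """Return (information gain, gain ratio) for one discrete feature."""
    total = len(labels)
    cond = 0.0
    for v in set(feature):
        subset = [y for x, y in zip(feature, labels) if x == v]
        cond += len(subset) / total * entropy(subset)
    gain = entropy(labels) - cond
    # Split information is the entropy of the feature's own values.
    split_info = entropy(feature)
    return gain, (gain / split_info if split_info > 0 else 0.0)


labels = ["no", "no", "no", "no", "yes", "yes", "yes", "yes"]
row_id = [1, 2, 3, 4, 5, 6, 7, 8]                  # unique value per sample
colour = ["r", "r", "r", "r", "g", "g", "g", "g"]  # only two values

id_gain, id_ratio = gain_and_ratio(row_id, labels)
col_gain, col_ratio = gain_and_ratio(colour, labels)
print(id_gain, id_ratio)
print(col_gain, col_ratio)
```

Both features reach the same information gain because each one yields pure subsets, so ID3 cannot tell them apart. The gain ratio divides by the split information, which is three times larger for `row_id` (eight values) than for `colour` (two values), so the many-valued feature is penalized.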

C4.5 Python implementation

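    from math import log

    def splitRate(df, i):
        # Split information: entropy of the value distribution of feature i
        splitInfo = 0.0
        for count in df.iloc[:, i].value_counts():
            prob = count / float(len(df))
            splitInfo -= prob * log(prob, 2)
        return splitInfo

    def chooseBestFeature(df):
        # Entropy of the whole data set (the last column is the class label)
        baseEntropy = shannonEnt(df)
        # Gain ratio of every feature, indexed by column position
        infoGain = []
        # Number of candidate features (every column except the label)
        numFeatures = len(df.columns) - 1
        # Iterate over the features
        for i in range(numFeatures):
            newEntropy = 0.0
            # Split the data set on each distinct value of feature i
            # and accumulate the conditional entropy
            for value in set(df.iloc[:, i]):
                subSet = splitDataset(df, i, value)
                # P(x): fraction of samples that take this value
                prob = len(subSet) / float(len(df))
                # P1*H(x1) + P2*H(x2) + ... + Pn*H(xn)
                newEntropy += prob * shannonEnt(subSet)
            # Information gain of feature i
            infogain = baseEntropy - newEntropy
            # Gain ratio: this step is the difference from ID3
            splitInfo = splitRate(df, i)
            # A single-valued feature has zero split information; score it 0
            infoRate = infogain / splitInfo if splitInfo > 0 else 0.0
            infoGain.append(infoRate)
        # Pick the feature with the largest gain ratio
        bestInfoGain = max(infoGain)
        bestFeature = infoGain.index(bestInfoGain)
        return bestFeature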

I'm still too much of a beginner: I could not find a C4.5 implementation in sklearn.

CART

CART uses the Gini index instead of Shannon entropy to measure how good a split of the data set is.

Python implementation:

    # Compute the Gini index of a data set
    def calGini(df):
        numEntries = len(df)
        labelCounts = {}
        for currentLabel in df['class']:
            # Count the number of samples of each class label
            if currentLabel not in labelCounts:
                labelCounts[currentLabel] = 0
            labelCounts[currentLabel] += 1
        gini = 1.0
        # 1 - p1^2 - p2^2 - ...
        for label in labelCounts:
            prop = float(labelCounts[label]) / numEntries
            gini -= prop * prop
        return gini

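    # Choose the feature whose split gives the smallest weighted Gini index
    # (a lower Gini index means purer subsets)
    def chooseBestFeature(df):
        # Weighted Gini index of every feature, indexed by column position
        Gini = []
        # Number of candidate features (every column except the label)
        numFeatures = len(df.columns) - 1
        # Iterate over the features
        for i in range(numFeatures):
            weightedGini = 0.0
            # Split the data set on each distinct value of feature i
            # and accumulate the Gini index of each subset
            for value in set(df.iloc[:, i]):
                subSet = splitDataset(df, i, value)
                # P(x): fraction of samples that take this value
                prob = len(subSet) / float(len(df))
                weightedGini += prob * calGini(subSet)
            Gini.append(weightedGini)
        # Pick the feature with the smallest weighted Gini index
        bestGini = min(Gini)
        bestFeature = Gini.index(bestGini)
        return bestFeature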
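As a quick sanity check of the Gini criterion, here is a small self-contained sketch in plain Python. The helper names and toy data are hypothetical. It picks the feature with the smallest size-weighted Gini impurity, on the assumption that lower impurity means purer subsets.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions."""
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())


def weighted_gini(feature, labels):
    """Size-weighted Gini impurity of the subsets produced by one feature."""
    total = len(labels)
    result = 0.0
    for v in set(feature):
        subset = [y for x, y in zip(feature, labels) if x == v]
        result += len(subset) / total * gini(subset)
    return result


labels = ["no", "no", "yes", "yes"]
features = {
    "outlook": ["sunny", "sunny", "rain", "rain"],  # separates the classes
    "windy": ["yes", "no", "yes", "no"],            # uninformative
}

scores = {name: weighted_gini(col, labels) for name, col in features.items()}
# The best feature is the one with the lowest weighted impurity.
best = min(scores, key=scores.get)
print(scores, best)
```

`outlook` produces two pure subsets (weighted Gini 0), while `windy` leaves both subsets evenly mixed (weighted Gini 0.5), so `outlook` is selected.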

sklearn implementation:

    from sklearn import datasets
    import numpy as np
    from sklearn.model_selection import train_test_split
    from sklearn import tree
    from sklearn.metrics import accuracy_score

    # Fix the global NumPy seed so the train/test split is reproducible
    np.random.seed(0)
    iris = datasets.load_iris()
    data_x = iris.data
    data_y = iris.target
    # Hold out 30% of the samples for testing
    train_data, test_data, train_y, test_y = train_test_split(data_x, data_y, test_size=0.3)
    # criterion='gini' chooses splits by the Gini index (sklearn's default)
    clf = tree.DecisionTreeClassifier(criterion='gini')
    # Fit on the training split only, so the test accuracy is not inflated
    clf.fit(train_data, train_y)
    y_pre = clf.predict(test_data)
    y_prob = clf.predict_proba(test_data)
    print(accuracy_score(test_y, y_pre))
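Since the two sklearn snippets differ only in the `criterion` argument, they can be compared side by side on the same split. The sketch below does that; passing `random_state=0` to both the split and the classifier is my own choice to make the run repeatable.

```python
from sklearn import datasets, tree
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()
train_x, test_x, train_y, test_y = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0)

# Train one tree per criterion on the same training split
# and score each on the same held-out test split.
results = {}
for criterion in ('entropy', 'gini'):
    clf = tree.DecisionTreeClassifier(criterion=criterion, random_state=0)
    clf.fit(train_x, train_y)
    results[criterion] = accuracy_score(test_y, clf.predict(test_x))

print(results)
```

On a data set as small and clean as iris the two criteria usually end up with very similar accuracy, so the choice between them matters little here.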