版权声明:本文为博主原创文章,转载请注明出处:http://blog.csdn.net/w18756901575 https://blog.csdn.net/w18756901575/article/details/78615835

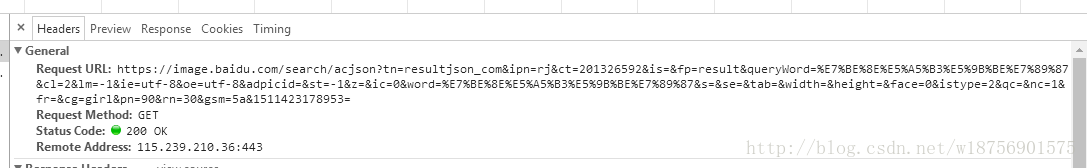

百度图片抓包数据:

参数详情:

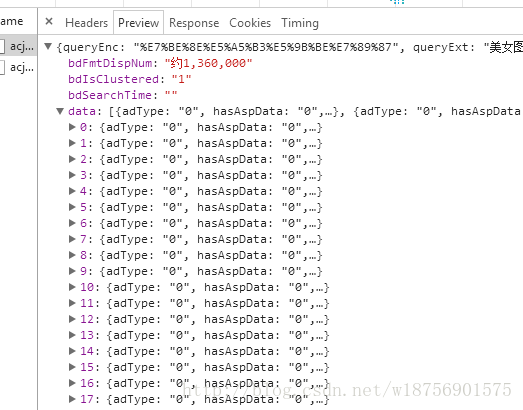

数据解析:

from urllib import request, parse

from http import cookiejar

import re

import time

# 1.提取数据

def main(text,start,length):

hx = hex(start)

s = str(hx)[2:len(hx)]

reqMessage = {

"tn": "resultjson_com",

"ipn": "rj",

"ct": "201326592",

"is": "",

"fp": "result",

"queryWord": text,

"cl": "2",

"lm": "-1",

"ie": "utf-8",

"oe": "utf-8",

"adpicid": "",

"st": "",

"z": "",

"ic": "",

"word": text,

"s": "",

"se": "",

"tab": "",

"width": "",

"height": "",

"face": "",

"istype": "",

"qc": "",

"nc": "",

"fr": "",

"cg": "head",

"pn": str(start),

"rn": str(length),

"gsm": s,

"1511330964840": ""

};

cookie=cookiejar.CookieJar()

cookie_support = request.HTTPCookieProcessor(cookie)

opener = request.build_opener(cookie_support, request.HTTPHandler)

request.install_opener(opener)

reqData = parse.urlencode(reqMessage)

req = request.Request("http://image.baidu.com/search/acjson?" + reqData, headers={

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36"})

data = request.urlopen(req).read();

rm = re.compile(r'"thumbURL":"[\w/\\:.,;=&]*"')

list = re.findall(rm, data.decode())

index = start+1

result=False

for thumbURL in list:

url = thumbURL[12:len(thumbURL) - 1]

downImg(url, "F:/file/baidu/" + str(index) + ".jpg")

index += 1

result=True

return result

# 下载图片

def downImg(url, path):

print(url)

req=request.Request(url,headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36",

"Referer":"http://image.baidu.com/search/acjson"})

data= request.urlopen(req).read()

file=open(path,"wb")

file.write(data)

file.close()

pass

a=0

while a!=-1:

result= main("美女图片", a*30, 30)

print("暂停中...")

a += 1

if result==False :

a=-1

time.sleep(10)

pass

print("执行完成")信息不多没有什么太多的事情,需要注意的就是下载图片时请求头需要添加User-Agent以及Referer,否则百度会拒绝访问,另外百度的图片只能访问一次,访问一次过后图片链接立即失效.还有抓取的数据以及时间限制,一次性爬取的数量有限.