I. Introduction

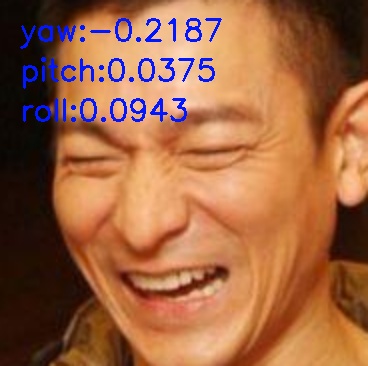

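This post records how to estimate head pose from the facial keypoints detected by MTCNN. The idea is fairly simple. MTCNN is a face detection algorithm that also outputs five keypoints: the left eye, the right eye, the nose tip, the left mouth corner, and the right mouth corner. Once the five 2D keypoints of a face in the image are available, OpenCV's pose estimation (the listing in Section III calls cv::solvePnP rather than the POSIT routine) finds the rotation and translation that project five template 3D keypoints, defined in the world coordinate system, onto those five 2D keypoints. The head pose angles are then derived from the estimated transformation: Yaw (shaking the head; left positive, right negative), Pitch (nodding; up negative, down positive), and Roll (tilting the head sideways; left negative, right positive).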
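As a brief aside, the projection that the pose solver inverts can be written with the standard pinhole camera model. The symbols below ($K$, $R$, $t$, $s_i$, $f$, $c_x$, $c_y$) are notation introduced here for illustration and do not appear in the original code:

```latex
s_i \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}
= K \left( R \begin{bmatrix} X_i \\ Y_i \\ Z_i \end{bmatrix} + t \right),
\qquad
K = \begin{bmatrix} f & 0 & c_x \\ 0 & f & c_y \\ 0 & 0 & 1 \end{bmatrix},
\qquad i = 1, \dots, 5
```

Here $(X_i, Y_i, Z_i)$ is a template 3D keypoint, $(u_i, v_i)$ is the matching detected 2D keypoint, and $s_i$ is the depth of the point in the camera frame. Pose estimation looks for the rotation $R$ and translation $t$ that satisfy this relation as closely as possible for all five pairs; yaw, pitch and roll are then read off $R$.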

II. Mtcnn-light

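Many open-source MTCNN implementations are available online. This post uses the light version, which is faster at the cost of slightly lower model accuracy. To output the five keypoints, an overloaded function void findFace(Mat &image , vector<struct Bbox> &resBox ); is added to the original src/mtcnn.cpp.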
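To make the shape of the detector output concrete, here is a minimal standard-library sketch of how the five keypoints can be read from one detection. `BboxSketch` is a hypothetical stand-in that models only the fields this post uses (`exist`, `x1`, `y1`, `x2`, `y2`, `ppoint`); the real `Bbox` may contain more. `Landmarks` and `landmarksInBox` are illustrative names as well. The layout assumed here (five x values followed by five y values, and x/y swapped in the box corners) follows the way the listing in Section III indexes the struct.

```cpp
#include <array>
#include <cassert>
#include <utility>

// Hypothetical stand-in for the detector's Bbox; only the fields used in
// this post are modelled.
struct BboxSketch {
    bool exist = false;     // whether this detection is valid
    int x1 = 0, y1 = 0;     // first corner: x1 holds the y value, y1 holds the x value
    int x2 = 0, y2 = 0;     // opposite corner, same swapped convention
    float ppoint[10] = {};  // five x coordinates followed by five y coordinates
};

using Landmarks = std::array<std::pair<double, double>, 5>;

// Return the five landmarks as (x, y) pairs relative to the box's top-left corner.
Landmarks landmarksInBox(const BboxSketch& box) {
    assert(box.exist);
    const int left = box.y1;  // y1 stores the x coordinate
    const int top = box.x1;   // x1 stores the y coordinate
    Landmarks out{};
    for (int i = 0; i < 5; ++i) {
        out[i].first = static_cast<double>(box.ppoint[i]) - left;
        out[i].second = static_cast<double>(box.ppoint[i + 5]) - top;
    }
    return out;
}
```

Shifting the landmarks into the box's own coordinate frame matters because the pose is later estimated on the cropped face image, not on the full frame.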

III. Head Pose Estimation

The head pose estimation code is adapted from https://blog.csdn.net/zzyy0929/article/details/78323363
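For intuition about the camera internals used in the listing that follows, here is a minimal standard-library sketch of the forward pinhole projection with approximated intrinsics: the focal length is taken as the image width and the principal point as the image center. The names `Vec3`, `Mat3`, `Pixel`, `Intrinsics`, `approxIntrinsics` and `project` are illustrative and belong neither to OpenCV nor to the original code; the rotation and translation passed in are assumed to be known.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;
struct Pixel { double u, v; };
struct Intrinsics { double f, cx, cy; };

// Approximate intrinsics from the image size alone (no calibration):
// focal length = image width in pixels, principal point = image center.
Intrinsics approxIntrinsics(int width, int height) {
    return {static_cast<double>(width), width / 2.0, height / 2.0};
}

// Project a 3D model point: camera-frame point c = R * P + t,
// then u = f * c.x / c.z + cx and v = f * c.y / c.z + cy.
Pixel project(const Vec3& P, const Mat3& R, const Vec3& t, const Intrinsics& k) {
    Vec3 c{};
    for (int r = 0; r < 3; ++r) {
        c[r] = R[r][0] * P[0] + R[r][1] * P[1] + R[r][2] * P[2] + t[r];
    }
    assert(c[2] != 0.0);  // the point must not lie in the camera plane
    return {k.f * c[0] / c[2] + k.cx, k.f * c[1] / c[2] + k.cy};
}
```

Pose estimation runs this mapping in reverse: given the pixels and the model points, it searches for the rotation and translation that reproduce them.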

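    #include "network.h"
    #include "mtcnn.h"
    #include <time.h>
    #include <cmath>    // std::sqrt, std::asin, std::atan2
    #include <cstdio>   // formatted output of the angle labels
    #include <iostream>
    #include <fstream>
    #include <vector>
    #include <map>
    #include "opencv2/opencv.hpp"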

    // Convert a 3x3 rotation matrix to yaw/pitch/roll (in radians) via a quaternion,
    // then draw the three angles on an enlarged copy of the face crop.
    void rot2Euler(cv::Mat faceImg, const cv::Mat& rotation3_3)
    {
        cv::resize(faceImg, faceImg, cv::Size(faceImg.cols*2, faceImg.rows*2));

        // cv::Mat::at is 0-based, so a 3x3 matrix is indexed with 0..2.
        // Quaternion (q0, q1, q2, q3) = (w, x, y, z) from the rotation matrix.
        double q0 = std::sqrt(1 + rotation3_3.at<double>(0,0) + rotation3_3.at<double>(1,1) + rotation3_3.at<double>(2,2)) / 2;
        double q1 = (rotation3_3.at<double>(2,1) - rotation3_3.at<double>(1,2)) / (4*q0);
        double q2 = (rotation3_3.at<double>(0,2) - rotation3_3.at<double>(2,0)) / (4*q0);
        double q3 = (rotation3_3.at<double>(1,0) - rotation3_3.at<double>(0,1)) / (4*q0);

        double yaw = std::asin(2*(q0*q2 + q1*q3));
        double pitch = std::atan2(2*(q0*q1 - q2*q3), q0*q0 - q1*q1 - q2*q2 + q3*q3);
        double roll = std::atan2(2*(q0*q3 - q1*q2), q0*q0 + q1*q1 - q2*q2 - q3*q3);
        std::cout << "yaw:" << yaw << " pitch:" << pitch << " roll:" << roll << std::endl;

        // Draw the angles; snprintf keeps the label inside the buffer.
        char ch[32];
        std::snprintf(ch, sizeof(ch), "yaw:%0.4f", yaw);
        cv::putText(faceImg, ch, cv::Point(20,40), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(255,23,0), 2, 3);
        std::snprintf(ch, sizeof(ch), "pitch:%0.4f", pitch);
        cv::putText(faceImg, ch, cv::Point(20,80), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(255,23,0), 2, 3);
        std::snprintf(ch, sizeof(ch), "roll:%0.4f", roll);
        cv::putText(faceImg, ch, cv::Point(20,120), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(255,23,0), 2, 3);
        cv::imshow("faceImg", faceImg);
    }

    // Estimate the head pose from five 2D landmarks given relative to the face crop.
    void headPosEstimate(const cv::Mat& faceImg, const std::vector<cv::Point2d>& facial5Pts)
    {
        // 3D model points
        std::vector<cv::Point3d> model_points;
        model_points.push_back(cv::Point3d(-165.0, 170.0, -115.0));  // Left eye
        model_points.push_back(cv::Point3d( 165.0, 170.0, -115.0));  // Right eye
        model_points.push_back(cv::Point3d(0.0, 0.0, 0.0));          // Nose tip
        model_points.push_back(cv::Point3d(-150.0, -150.0, -125.0)); // Left mouth corner
        model_points.push_back(cv::Point3d(150.0, -150.0, -125.0));  // Right mouth corner

        // Camera internals: approximate the focal length by the image width,
        // use the image center as the principal point, and assume no lens distortion.
        double focal_length = faceImg.cols;
        cv::Point2d center = cv::Point2d(faceImg.cols/2, faceImg.rows/2);
        cv::Mat camera_matrix = (cv::Mat_<double>(3,3) << focal_length, 0, center.x, 0, focal_length, center.y, 0, 0, 1);
        cv::Mat dist_coeffs = cv::Mat::zeros(4, 1, cv::DataType<double>::type);

        cv::Mat rotation_vector;
        cv::Mat translation_vector;
        // Note: depending on the OpenCV version, the default iterative solver may require
        // at least 6 points for a non-planar model; passing cv::SOLVEPNP_EPNP as the
        // method flag is one alternative to try if this call is rejected.
        cv::solvePnP(model_points, facial5Pts, camera_matrix, dist_coeffs, rotation_vector, translation_vector);

        /* This block only projects a line out of the nose tip for visualization
        std::vector<cv::Point3d> nose_end_point3D;
        std::vector<cv::Point2d> nose_end_point2D;
        nose_end_point3D.push_back(cv::Point3d(0,0,1000.0));
        cv::projectPoints(nose_end_point3D, rotation_vector, translation_vector, camera_matrix, dist_coeffs, nose_end_point2D);
        //std::cout << "Rotation Vector " << std::endl << rotation_vector << std::endl;
        //std::cout << "Translation Vector" << std::endl << translation_vector << std::endl;
        cv::Mat temp(faceImg);
        cv::line(temp, facial5Pts[2], nose_end_point2D[0], cv::Scalar(255,0,0), 2);
        cv::imshow("vvvvvvvv", temp);
        cv::waitKey(1); */

        // Rotation vector -> 3x3 rotation matrix -> Euler angles.
        cv::Mat rotation3_3;
        cv::Rodrigues(rotation_vector, rotation3_3);
        rot2Euler(faceImg.clone(), rotation3_3);
    }

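    // Crop every detected face and estimate its head pose.
    void showheadPost(const cv::Mat& img, std::vector<struct Bbox>& resBox)
    {
        for (std::vector<struct Bbox>::iterator it = resBox.begin(); it != resBox.end(); ++it) {
            if (!it->exist) continue;

            // Note: it->y1 is the x coordinate of the first corner and it->x1 is its y coordinate.
            int it_x1 = it->y1;
            int it_y1 = it->x1;
            int it_x2 = it->y2;
            int it_y2 = it->x2;

            // Clip the box to the image so that the crop cannot go out of bounds.
            cv::Rect roi(it_x1, it_y1, it_x2 - it_x1, it_y2 - it_y1);
            roi &= cv::Rect(0, 0, img.cols, img.rows);
            if (roi.area() <= 0) continue;
            const cv::Mat faceImg = img(roi);

            // The five facial points, relative to the crop's origin (0,0).
            std::vector<cv::Point2d> face5Pts;
            for (int i = 0; i < 5; ++i) {
                face5Pts.push_back(cv::Point2d(it->ppoint[i] - roi.x, it->ppoint[i + 5] - roi.y));
            }
            headPosEstimate(faceImg, face5Pts);
        }
    }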

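    int main()
    {
        cv::Mat img = cv::imread("ldh.jpg");
        if (img.empty()) {
            std::cerr << "Failed to load ldh.jpg" << std::endl;
            return 1;
        }
        mtcnn find(img.rows, img.cols);
        std::vector<struct Bbox> resBox;
        find.findFace(img, resBox);
        showheadPost(img, resBox);
        cv::waitKey(0);
        return 0;
    }

IV. Experimental Results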

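Two images were loaded for the experiment, with the results shown below. The estimated angles can be used to gauge how far a face is turned to the side, although when the estimation fails the computed values come out as NaN.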
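One possible way to guard against the NaN values mentioned above is sketched here using only the standard library. It is not the conversion used in the listing: instead of going through a quaternion, it reads the angles directly from the rotation matrix entries, which for a proper rotation matrix factored as R = Rx(pitch) * Ry(yaw) * Rz(roll) gives the same angles without dividing by `q0`. `Mat3`, `EulerAngles`, `eulerFromRotation` and `allFinite` are illustrative names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
struct EulerAngles { double yaw, pitch, roll; };  // radians

// Read yaw/pitch/roll straight from the matrix entries (0-based indices).
EulerAngles eulerFromRotation(const Mat3& R) {
    // Clamp so that rounding error cannot push the asin argument
    // outside [-1, 1], which would produce NaN.
    const double s = std::max(-1.0, std::min(1.0, R[0][2]));
    EulerAngles e;
    e.yaw = std::asin(s);
    e.pitch = std::atan2(-R[1][2], R[2][2]);
    e.roll = std::atan2(-R[0][1], R[0][0]);
    return e;
}

// True when all three angles are usable numbers (neither NaN nor infinite).
bool allFinite(const EulerAngles& e) {
    return std::isfinite(e.yaw) && std::isfinite(e.pitch) && std::isfinite(e.roll);
}
```

A caller could check `allFinite` before drawing the angles and skip the face otherwise, so that a failed estimate is reported as a failure rather than displayed as NaN.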