https://github.com/happynear/caffe-windows

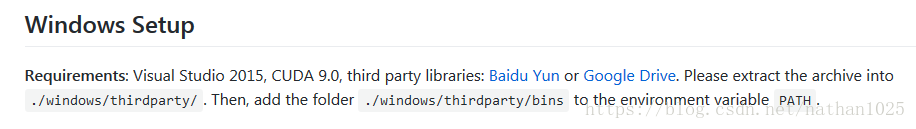

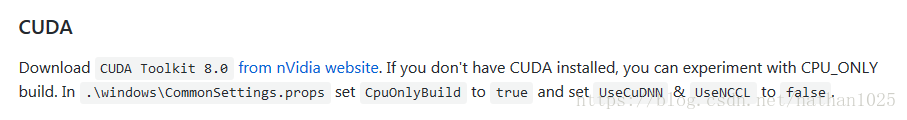

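1. First, choose CUDA 9.0 and cuDNN 7.0.5, even though this conflicts with the screenshot, which shows CUDA 8.0.

2. Only libcaffe.lib is needed.

3. Copy classification.cpp into the new project and split it into a .h header and a new .cpp file.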

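4. Dependencies:

4.1 Copy the caffe folder under caffe-windows/include into the new project.

4.2 Copy \caffe-windows\windows\thirdparty into the new project.

4.3 Copy the CUDA 9.0 folder from the C: drive into the new project and rename it to cuda.

4.4 Create a caffe_lib folder and put the lib built from caffe-windows into it.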
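
Before configuring the project, it can help to confirm that the copied folders ended up where the later settings expect them. The following is a minimal sketch, assuming C++17 (`std::filesystem`) and assuming the caffe headers land in `include/caffe` (inferred from the `./include` entry in 5.1, not stated in step 4.1). `ExpectedFolders` and `MissingFolders` are hypothetical helper names.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Folders the new project should contain after steps 4.1-4.4.
// "include/caffe" is an assumption based on the "./include" entry in 5.1.
std::vector<std::string> ExpectedFolders() {
  return {"include/caffe", "thirdparty", "cuda", "caffe_lib"};
}

// Return the expected folders that do not exist under project_root.
std::vector<std::string> MissingFolders(const fs::path& project_root) {
  assert(!project_root.empty());
  std::vector<std::string> missing;
  for (const std::string& name : ExpectedFolders()) {
    std::error_code ec;  // non-throwing overload: errors count as "missing"
    if (!fs::is_directory(project_root / name, ec)) missing.push_back(name);
  }
  return missing;
}
```

Calling `MissingFolders(".")` from the project directory returns an empty vector when all four folders are in place.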

5. Project configuration

5.1 Include directories (mainly thirdparty and the caffe directory from caffe-windows)

./include;./thirdparty;./thirdparty/Protobuf/include;./thirdparty/Boost;./thirdparty/OpenBLAS/include;./cuda/include;./thirdparty/GLog/include;./thirdparty/GFlags/Include;./caffe_lib;./thirdparty\OpenCV;./thirdparty\OpenCV\include;./thirdparty\OpenCV\include\opencv;./thirdparty\OpenCV\include\opencv2
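
Long semicolon-separated lists that mix `/` and `\` are easy to get wrong, because the same directory can appear twice without looking identical. The sketch below splits such a list, normalizes the separators, and reports repeated entries. `NormalizeDir`, `SplitDirs`, and `FindDuplicateDirs` are hypothetical helper names, and the comparison is case-sensitive, which is a simplification for Windows paths.

```cpp
#include <cassert>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Normalize one entry: use '/' everywhere and drop trailing '/'.
std::string NormalizeDir(std::string dir) {
  assert(!dir.empty());  // SplitDirs skips empty entries before calling this
  for (char& c : dir) {
    if (c == '\\') c = '/';
  }
  while (dir.size() > 1 && dir.back() == '/') dir.pop_back();
  return dir;
}

// Split a semicolon-separated list into normalized entries.
std::vector<std::string> SplitDirs(const std::string& list) {
  std::vector<std::string> dirs;
  std::stringstream stream(list);
  std::string entry;
  while (std::getline(stream, entry, ';')) {
    if (!entry.empty()) dirs.push_back(NormalizeDir(entry));
  }
  return dirs;
}

// Return each normalized entry that occurs more than once (reported once).
std::vector<std::string> FindDuplicateDirs(const std::string& list) {
  std::set<std::string> seen;
  std::set<std::string> reported;
  std::vector<std::string> duplicates;
  for (const std::string& dir : SplitDirs(list)) {
    if (!seen.insert(dir).second && reported.insert(dir).second) {
      duplicates.push_back(dir);
    }
  }
  return duplicates;
}
```

Pasting the include or library directory string into `FindDuplicateDirs` gives a quick list of entries worth removing.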

5.2 Library directories (mainly thirdparty, cuda, and caffe_lib)

./thirdparty/Boost/lib64-msvc-14.0;./thirdparty/Protobuf/lib;./cuda\lib\x64;./thirdparty\GFlags\Lib;./thirdparty\GLog\lib;./thirdparty\LEVELDB\lib;./thirdparty\NCCL\lib;./thirdparty/OpenCV\x64\vc14\lib;./thirdparty\HDF5\lib;./thirdparty\LMDB\lib;./thirdparty\OpenBLAS\lib;./caffe_lib

5.3 Additional dependencies (thirdparty, cuda, and libcaffe)

glog.lib;libopenblas.dll.a;zlib.lib;szip.lib;libcaffe.lib;gflags_nothreadsd.lib;libboost_chrono-vc140-mt-1_65_1.lib;libboost_chrono-vc140-mt-gd-1_65_1.lib;libboost_date_time-vc140-mt-1_65_1.lib;libboost_date_time-vc140-mt-gd-1_65_1.lib;libboost_filesystem-vc140-mt-1_65_1.lib;libboost_filesystem-vc140-mt-gd-1_65_1.lib;libboost_python-vc140-mt-1_65_1.lib;libboost_python-vc140-mt-gd-1_65_1.lib;libboost_python3-vc140-mt-1_65_1.lib;libboost_python3-vc140-mt-gd-1_65_1.lib;libboost_system-vc140-mt-1_65_1.lib;libboost_system-vc140-mt-gd-1_65_1.lib;libboost_thread-vc140-mt-1_65_1.lib;libboost_thread-vc140-mt-gd-1_65_1.lib;libprotobuf.lib;libprotobuf-lite.lib;libprotoc.lib;cublas.lib;cudart.lib;cuda.lib;cufft.lib;curand.lib;cudnn.lib;gflags.lib;gflagsd.lib;gflags_nothreads.lib;hdf5.lib;hdf5_hl.lib;Shlwapi.lib;LevelDb.lib;lmdb.lib;opencv_world310.lib;

6. Additional header file: "layerhead.h"

namespace caffe

{

extern INSTANTIATE_CLASS(InputLayer);

//REGISTER_LAYER_CLASS(Input);

extern INSTANTIATE_CLASS(InnerProductLayer);

//REGISTER_LAYER_CLASS(InnerProduct);

extern INSTANTIATE_CLASS(DropoutLayer);

//REGISTER_LAYER_CLASS(Dropout);

extern INSTANTIATE_CLASS(ConvolutionLayer);

REGISTER_LAYER_CLASS(Convolution);

extern INSTANTIATE_CLASS(BatchNormLayer);

//REGISTER_LAYER_CLASS(BatchNorm);

extern INSTANTIATE_CLASS(ReLULayer);

REGISTER_LAYER_CLASS(ReLU);

extern INSTANTIATE_CLASS(PoolingLayer);

REGISTER_LAYER_CLASS(Pooling);

extern INSTANTIATE_CLASS(LRNLayer);

REGISTER_LAYER_CLASS(LRN);

extern INSTANTIATE_CLASS(SoftmaxLayer);

REGISTER_LAYER_CLASS(Softmax);

extern INSTANTIATE_CLASS(EltwiseLayer);

//REGISTER_LAYER_CLASS(Eltwise);

extern INSTANTIATE_CLASS(PowerLayer);

//REGISTER_LAYER_CLASS(Power);

extern INSTANTIATE_CLASS(SplitLayer);

//REGISTER_LAYER_CLASS(Split);

extern INSTANTIATE_CLASS(ScaleLayer);

//REGISTER_LAYER_CLASS(Scale);

extern INSTANTIATE_CLASS(BiasLayer);

//REGISTER_LAYER_CLASS(Bias);

extern INSTANTIATE_CLASS(ReshapeLayer);

//extern INSTANTIATE_CLASS(ROIPoolingLayer);

}
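
A likely reason this header is needed: Caffe layers register themselves through static objects, and when libcaffe.lib is linked statically the linker can drop object files that nothing references, so those registrations never run and net creation fails with an unknown layer type error. The sketch below illustrates that self-registration pattern in isolation. `Layer`, `LayerRegistry`, and `Registrar` are hypothetical stand-ins, not Caffe's actual classes or macros.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-in for a layer base class (not Caffe's Layer).
struct Layer {
  virtual ~Layer() {}
  virtual std::string type() const = 0;
};

class LayerRegistry {
 public:
  typedef std::function<std::unique_ptr<Layer>()> Creator;

  // Function-local static avoids static-initialization-order problems.
  static std::map<std::string, Creator>& Table() {
    static std::map<std::string, Creator> table;
    return table;
  }

  static void Add(const std::string& type, Creator creator) {
    assert(Table().count(type) == 0);  // each type may be registered once
    Table()[type] = creator;
  }

  // Returns nullptr for a type that was never registered.
  static std::unique_ptr<Layer> Create(const std::string& type) {
    auto it = Table().find(type);
    if (it == Table().end()) return nullptr;
    return it->second();
  }
};

// The constructor runs during static initialization, but only if the object
// file that defines the registrar is actually linked into the executable.
struct Registrar {
  Registrar(const std::string& type, LayerRegistry::Creator creator) {
    LayerRegistry::Add(type, creator);
  }
};

struct ReLULayer : Layer {
  std::string type() const override { return "ReLU"; }
};

// Plays the role that a REGISTER_LAYER_CLASS(ReLU) line plays in the header.
static Registrar g_relu_registrar("ReLU", [] {
  return std::unique_ptr<Layer>(new ReLULayer());
});
```

In this sketch `LayerRegistry::Create("ReLU")` succeeds because the registrar lives in the same translation unit as the caller, while an unregistered name such as `"Pooling"` yields `nullptr`. Registering the same type twice trips the assertion, which may be why some `REGISTER_LAYER_CLASS` lines in the header are commented out.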

7. Test code

//std::cout << "" << std::endl;

// Initialize the deep learning classifier

cv::Mat img(32, 32, CV_8UC3);

string resnet_model_file = "models/ResNet56/res56_cifar_train_test.prototxt";

string resnet_trained_file = "models/ResNet56/cifar10_res56_iter_120000.caffemodel";

string resnet_mean_file = "models/ResNet56/mean.binaryproto";

string resnet_label_file = "models/ResNet56/labels.txt";

Classifier classifier(resnet_model_file, resnet_trained_file, resnet_mean_file, resnet_label_file);

std::cout << ""<<std::endl;

img = cv::imread("0.jpg");

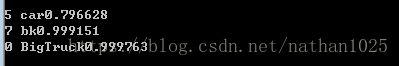

std::vector<Prediction> predictions = classifier.Classify(img);

IplImage Ip_img = IplImage(img);

string str1 = predictions[0].first;

string str2;

std::cout << str1 << " " << predictions[0].second << std::endl;
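
The test code reads only `predictions[0]`, the best (label, score) pair. In Caffe's stock classification.cpp example, `Prediction` is a `std::pair<string, float>` and the results come back sorted by score. The sketch below shows one way such a ranking can be produced from raw scores, assuming the split-out `Classifier` keeps that typedef. `TopN` is a hypothetical helper, not the function used inside `Classify`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Same shape as the Prediction typedef in Caffe's classification.cpp example.
typedef std::pair<std::string, float> Prediction;

// Return the n highest-scoring (label, score) pairs, best first.
std::vector<Prediction> TopN(const std::vector<std::string>& labels,
                             const std::vector<float>& scores,
                             std::size_t n) {
  assert(labels.size() == scores.size());
  std::vector<Prediction> all;
  for (std::size_t i = 0; i < scores.size(); ++i) {
    all.push_back(std::make_pair(labels[i], scores[i]));
  }
  n = std::min(n, all.size());  // never ask for more than we have
  std::partial_sort(all.begin(), all.begin() + n, all.end(),
                    [](const Prediction& a, const Prediction& b) {
                      return a.second > b.second;  // descending by score
                    });
  all.resize(n);
  return all;
}
```

With labels `{"airplane", "automobile", "bird"}` and made-up scores `{0.1f, 0.7f, 0.2f}`, `TopN(labels, scores, 2)` returns the `automobile` entry first and the `bird` entry second.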

8. Results