Classic Convolutional Neural Networks: AlexNet

1. Key innovations

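Uses ReLU, Dropout, LRN and more:

- ReLU: mitigates the vanishing-gradient problem that Sigmoid causes in deep networks (the derivative of Sigmoid is at most 0.25, while the derivative of ReLU is 1 for every positive input)

- Dropout: randomly drops (zeroes) a fraction of the neurons during training, which reduces overfitting

- Max pooling: max pooling avoids the blurring effect of average pooling; the stride is smaller than the pooling window, so neighboring windows overlap, which enriches the features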
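A minimal NumPy sketch of the first two points, under stated assumptions: it compares how much the Sigmoid and ReLU derivatives shrink a gradient across 10 stacked layers, and implements dropout. The dropout here is the common "inverted" variant, which scales surviving units by 1/(1-p) during training; the AlexNet paper instead multiplies outputs by 0.5 at test time. All function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)              # peaks at 0.25 when x = 0

def relu(x):
    return np.maximum(x, 0.0)

def relu_grad(x):
    return (np.asarray(x) > 0).astype(np.float64)   # 1 for x > 0, else 0

# Factor contributed by the activations alone across 10 layers (input 1.0 at every layer)
depth = 10
print(sigmoid_grad(1.0) ** depth)     # about 8.6e-08: vanishes geometrically
print(relu_grad(1.0) ** depth)        # 1.0: no shrinkage for active units

def dropout(x, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability p, scale survivors by 1/(1-p)."""
    if not training:
        return x                      # no rescaling needed at test time
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= p   # keep each unit with probability 1 - p
    return x * keep / (1.0 - p)

h = np.ones(8)
print(dropout(h, rng=np.random.default_rng(0)))   # entries are 0.0 or 2.0
```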

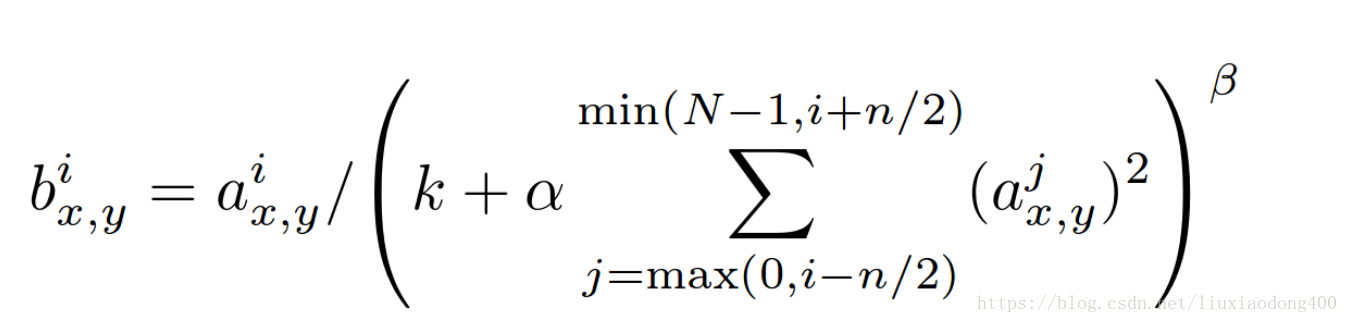

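LRN layer: creates a competition mechanism among the activities of neighboring neurons, so that large responses become relatively larger while neurons with small responses are suppressed, which improves generalization.

Here k, n, α and β are hyperparameters; the paper uses k=2, n=5, α=10^-4 and β=0.75. The TensorFlow code in section 3 instead uses bias=1.0 (i.e. k=1) and alpha=2e-05 with depth_radius=2 (i.e. n=5): these are the values of the Caffe reference model, whose LRN divides α=10^-4 by n=5.

i indexes the kernel (channel), (x, y) is the position in the output map of the i-th kernel, and N is the total number of kernels in the layer.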

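The formula says: at position (x, y) of kernel i, the ReLU output is divided by (k + α × the sum of the squared ReLU outputs, at the same position, of the n adjacent kernels centered on kernel i, clipped to the range 0 to N-1)^β, and the quotient is the normalized output.

CUDA acceleration: the network is trained on GPUs with CUDA (the paper splits it across two GPUs).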
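Written out as in the paper, the LRN formula is

$$b^i_{x,y} = \frac{a^i_{x,y}}{\left(k + \alpha \sum_{j=\max(0,\, i-n/2)}^{\min(N-1,\, i+n/2)} \left(a^j_{x,y}\right)^2\right)^{\beta}}$$

A minimal NumPy sketch of it, assuming channels are the last axis and using n//2 for n/2; `lrn` is a hypothetical helper. TensorFlow's `tf.nn.local_response_normalization(a, depth_radius=n//2, bias=k, alpha=alpha, beta=beta)` computes the same expression.

```python
import numpy as np

def lrn(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Local response normalization across channels (paper's defaults).
    a: ReLU outputs with channels last, shape (..., N)."""
    N = a.shape[-1]
    sq = a.astype(np.float64) ** 2
    b = np.empty_like(a, dtype=np.float64)
    for i in range(N):
        lo = max(0, i - n // 2)          # first neighboring kernel
        hi = min(N - 1, i + n // 2)      # last neighboring kernel
        denom = (k + alpha * sq[..., lo:hi + 1].sum(axis=-1)) ** beta
        b[..., i] = a[..., i] / denom
    return b

a = np.maximum(np.random.default_rng(0).standard_normal((4, 4, 8)), 0)  # fake ReLU output
b = lrn(a)
print(b.shape)   # (4, 4, 8)
```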

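- Data augmentation: the paper performs PCA on the RGB pixel values of the training images and adds to each image the principal components, each multiplied by its eigenvalue and by a Gaussian random variable with mean 0 and standard deviation 0.1; this lowers the top-1 error rate by over 1%.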
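A minimal NumPy sketch of this PCA color augmentation, assuming float RGB images of shape (num, H, W, 3); `rgb_pca` and `pca_color_augment` are hypothetical helpers, and the random training set is a stand-in for real data. One RGB offset is drawn per image and added to every pixel.

```python
import numpy as np

def rgb_pca(images):
    """Eigen-decompose the 3x3 covariance of all RGB pixel values."""
    pixels = images.reshape(-1, 3).astype(np.float64)
    cov = np.cov(pixels, rowvar=False)        # 3x3 RGB covariance
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigvecs[:, i] is the i-th component p_i
    return eigvals, eigvecs

def pca_color_augment(image, eigvals, eigvecs, std=0.1, rng=None):
    """Add [p1 p2 p3] @ [a1*l1, a2*l2, a3*l3] to every pixel, with a_i ~ N(0, std^2)."""
    rng = np.random.default_rng() if rng is None else rng
    alphas = rng.normal(0.0, std, size=3)
    delta = eigvecs @ (alphas * eigvals)      # one RGB offset for the whole image
    return image + delta                      # broadcast over H and W

rng = np.random.default_rng(0)
train = rng.random((8, 32, 32, 3))            # stand-in for a training set
vals, vecs = rgb_pca(train)
aug = pca_color_augment(train[0], vals, vecs, rng=rng)
print(aug.shape)   # (32, 32, 3)
```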

2. Network architecture

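Size formulas (W: input width or height, K: kernel or pooling window size, P: padding, S: stride; the division is rounded down):

1. Convolution:

$$W_{out} = \left\lfloor \frac{W - K + 2P}{S} \right\rfloor + 1$$

2. Pooling:

$$W_{out} = \left\lfloor \frac{W - K}{S} \right\rfloor + 1$$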
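The two formulas as small Python helpers (hypothetical names), checked against the first layers computed below; integer division `//` performs the rounding down.

```python
def conv_out(size, kernel, stride, padding=0):
    """Convolution output size: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1

def pool_out(size, kernel, stride):
    """Pooling output size (no padding): floor((W - K) / S) + 1."""
    return (size - kernel) // stride + 1

print(conv_out(227, 11, 4))     # 55
print(pool_out(55, 3, 2))       # 27
print(conv_out(27, 5, 1, 2))    # 27: padding 2 keeps the size of a 5x5 stride-1 conv
```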

AlexNet has 8 trainable layers (not counting the pooling and LRN layers): 5 convolutional layers and 3 fully connected layers.
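A quick parameter count for these 8 layers, assuming the ungrouped kernel shapes listed in this section (e.g. 5×5×96 for conv2). With group=2, as in the pretrained weights used in section 3, conv2, conv4 and conv5 have half as many weights. The count shows that fc6 alone holds most of the parameters.

```python
# (layer, inputs per output unit = kernel volume or input size, output units)
LAYERS = [
    ("conv1", 11 * 11 * 3, 96),
    ("conv2", 5 * 5 * 96, 256),
    ("conv3", 3 * 3 * 256, 384),
    ("conv4", 3 * 3 * 384, 384),
    ("conv5", 3 * 3 * 384, 256),
    ("fc6", 6 * 6 * 256, 4096),
    ("fc7", 4096, 4096),
    ("fc8", 4096, 1000),
]

def count_params(layers):
    """Weights (fan_in * fan_out) plus one bias per output unit, per layer."""
    return {name: fan_in * fan_out + fan_out for name, fan_in, fan_out in layers}

params = count_params(LAYERS)
for name, n in params.items():
    print(f"{name}: {n:,}")
print(f"total: {sum(params.values()):,}")   # total: 62,378,344
```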

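Input image: 227×227×3

Kernel size: 11×11×3, stride 4, 96 kernels in total

Calculation: (227-11+0)/4+1=55

Activation: ReLU

C1: 55×55×96

Normalization (LRN), n=5

LRN1: 55×55×96

Pooling size: 3×3, stride 2 (the stride is smaller than the pooling window, so windows overlap)

Calculation: (55-3)/2+1=27

MAXPOOL1: 27×27×96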
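To make the first-layer arithmetic concrete, a minimal NumPy sketch of C1, ReLU and the overlapping 3×3/stride-2 max pooling, using random input and random weights rather than the pretrained ones. LRN1 is skipped since it does not change the shape. It assumes NumPy >= 1.20 for `sliding_window_view`; `conv2d_valid` and `max_pool` are hypothetical helpers.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20

def conv2d_valid(x, w, stride):
    """Naive VALID convolution. x: (H, W, C_in); w: (K, K, C_in, C_out), TF layout."""
    k = w.shape[0]
    # All KxK windows, keeping every `stride`-th one: (H_out, W_out, C_in, K, K)
    patches = sliding_window_view(x, (k, k), axis=(0, 1))[::stride, ::stride]
    # Contract over (C_in, K, K) -> (H_out, W_out, C_out)
    return np.tensordot(patches, w.transpose(2, 0, 1, 3), axes=([2, 3, 4], [0, 1, 2]))

def max_pool(x, k, stride):
    """Max pooling with a kxk window; windows overlap when stride < k."""
    windows = sliding_window_view(x, (k, k), axis=(0, 1))[::stride, ::stride]
    return windows.max(axis=(3, 4))

rng = np.random.default_rng(0)
img = rng.random((227, 227, 3), dtype=np.float32)                  # input image
w1 = rng.standard_normal((11, 11, 3, 96), dtype=np.float32) * 0.01  # random, not pretrained
z1 = conv2d_valid(img, w1, stride=4)
c1 = np.maximum(z1, 0)              # ReLU
p1 = max_pool(c1, k=3, stride=2)    # LRN1 skipped: shape-preserving
print(c1.shape, p1.shape)           # (55, 55, 96) (27, 27, 96)
```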

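Kernel size: 5×5×96, stride 1, padding 2, 256 kernels in total

Calculation: (27-5+2×2)/1+1=27

Activation: ReLU

C2: 27×27×256

Normalization (LRN), n=5

LRN2: 27×27×256

Pooling size: 3×3, stride 2 (the stride is smaller than the pooling window)

Calculation: (27-3)/2+1=13

MAXPOOL2: 13×13×256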
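The pretrained weights loaded in section 3 use `group=2` for conv2 (see the `conv` helper there), mirroring the original two-GPU split: each half of the 256 kernels sees only 48 of the 96 input channels, so the stored kernel is 5×5×48×256. A minimal NumPy sketch of such a grouped convolution with padding 2, using random weights; `conv2d` is a hypothetical helper and NumPy >= 1.20 is assumed.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20

def conv2d(x, w, stride=1, pad=0, group=1):
    """Naive grouped convolution.
    x: (H, W, C_in); w: (K, K, C_in // group, C_out), TF-style layout."""
    x = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))    # zero-pad height and width
    k = w.shape[0]
    patches = sliding_window_view(x, (k, k), axis=(0, 1))[::stride, ::stride]
    outputs = []
    # Split input channels (axis 2 of patches) and output channels (axis 3 of w)
    for p, wg in zip(np.split(patches, group, axis=2), np.split(w, group, axis=3)):
        outputs.append(np.tensordot(p, wg.transpose(2, 0, 1, 3), axes=([2, 3, 4], [0, 1, 2])))
    return np.concatenate(outputs, axis=-1)

rng = np.random.default_rng(0)
x = rng.random((27, 27, 96), dtype=np.float32)                  # MAXPOOL1 output
w2 = rng.standard_normal((5, 5, 48, 256), dtype=np.float32) * 0.01
c2 = conv2d(x, w2, stride=1, pad=2, group=2)
print(c2.shape)                          # (27, 27, 256)
print(w2.size, "vs", 5 * 5 * 96 * 256)   # 307200 vs 614400 weights
```

A grouped convolution is equivalent to an ordinary one whose full 5×5×96×256 kernel is block-diagonal across the two groups, which is why it needs half the weights.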

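Kernel size: 3×3×256, stride 1, padding 1, 384 kernels in total

Calculation: (13-3+1×2)/1+1=13

Activation: ReLU

C3: 13×13×384

Kernel size: 3×3×384, stride 1, padding 1, 384 kernels in total

Calculation: (13-3+1×2)/1+1=13

Activation: ReLU

C4: 13×13×384

Kernel size: 3×3×384, stride 1, padding 1, 256 kernels in total

Calculation: (13-3+1×2)/1+1=13

Activation: ReLU

C5: 13×13×256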

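Pooling size: 3×3, stride 2 (the stride is smaller than the pooling window)

Calculation: (13-3)/2+1=6

MAXPOOL3: 6×6×256

4096 neurons in total; each neuron is connected to the entire 6×6×256 input, which is equivalent to convolving it with a 6×6×256 kernel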
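The equivalence in the last line can be checked numerically: a convolution with no padding whose kernel covers the whole 6×6×256 input has exactly one output position, and it equals a fully connected layer applied to the flattened input with the same weights. A small NumPy sketch with random weights and only 16 output units (instead of 4096) to keep it light:

```python
import numpy as np

rng = np.random.default_rng(0)
pool3 = rng.random((6, 6, 256))                     # MAXPOOL3 output
units = 16                                          # 4096 in AlexNet; fewer for the demo
kernel = rng.standard_normal((6, 6, 256, units))    # one 6x6x256 kernel per neuron

# (a) convolution with a 6x6x256 kernel and no padding: a single output position
conv_out = np.tensordot(pool3, kernel, axes=([0, 1, 2], [0, 1, 2]))

# (b) fully connected layer on the flattened input, reusing the same weights;
#     both reshapes use row-major order, so rows of W line up with pool3.reshape(-1)
W = kernel.reshape(-1, units)                       # (9216, units)
fc_out = pool3.reshape(-1) @ W

print(np.allclose(conv_out, fc_out))                # True
```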

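FC1: 4096 neurons

4096 neurons in total, fully connected to FC1

Dropout

FC2: 4096 neurons

1000 neurons in total, fully connected to FC2

Dropout

FC3: 1000 neurons, followed by a softmax over the 1000 classes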
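The whole walk-through above can be reproduced from the size formulas. In this sketch `SPEC` is an assumed encoding of the layers listed above (LRN omitted because it does not change shapes); it prints each layer's output shape and the flattened size fed to FC1.

```python
# (name, kind, kernel, stride, padding, output channels) for each layer above
SPEC = [
    ("C1", "conv", 11, 4, 0, 96),
    ("MAXPOOL1", "pool", 3, 2, 0, 96),
    ("C2", "conv", 5, 1, 2, 256),
    ("MAXPOOL2", "pool", 3, 2, 0, 256),
    ("C3", "conv", 3, 1, 1, 384),
    ("C4", "conv", 3, 1, 1, 384),
    ("C5", "conv", 3, 1, 1, 256),
    ("MAXPOOL3", "pool", 3, 2, 0, 256),
]

def trace(size=227):
    """Return (name, height, width, channels) after each layer."""
    rows = []
    for name, _kind, k, s, p, c in SPEC:
        # One formula covers both; pooling layers simply have p = 0
        size = (size - k + 2 * p) // s + 1
        rows.append((name, size, size, c))
    return rows

rows = trace()
for name, h, w, c in rows:
    print(f"{name}: {h}x{w}x{c}")
_, h, w, c = rows[-1]
flat = h * w * c
print("flattened:", flat)          # flattened: 9216
for name, units in [("FC1", 4096), ("FC2", 4096), ("FC3", 1000)]:
    print(f"{name}: {units}")
```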

3. Code

```python
import numpy as np
import tensorflow as tf

# Pretrained BVLC AlexNet weights: a pickled dict mapping layer name -> [weights, biases].
# NumPy >= 1.16.3 needs allow_pickle=True to load pickled objects.
net_data = np.load("bvlc-alexnet.npy", encoding="latin1", allow_pickle=True).item()


def conv(input, kernel, biases, k_h, k_w, c_o, s_h, s_w, padding="VALID", group=1):
    '''
    From https://github.com/ethereon/caffe-tensorflow

    2-D convolution with optional grouping: for group > 1 the input channels and the
    kernels are split into `group` parts, convolved separately, then concatenated.
    '''
    c_i = input.get_shape()[-1]
    assert c_i % group == 0
    assert c_o % group == 0
    convolve = lambda i, k: tf.nn.conv2d(i, k, [1, s_h, s_w, 1], padding=padding)

    if group == 1:
        conv = convolve(input, kernel)
    else:
        # TensorFlow >= 1.0 argument order: tf.split(value, num_splits, axis), tf.concat(values, axis)
        input_groups = tf.split(input, group, 3)
        kernel_groups = tf.split(kernel, group, 3)
        output_groups = [convolve(i, k) for i, k in zip(input_groups, kernel_groups)]
        conv = tf.concat(output_groups, 3)
    return tf.reshape(tf.nn.bias_add(conv, biases), [-1] + conv.get_shape().as_list()[1:])


def AlexNet(features, feature_extract=False):
    """
    Builds an AlexNet model, loads pretrained weights.

    features: float tensor of shape [batch, 227, 227, 3] (NHWC).
    Returns the fc7 features if feature_extract is True, else class probabilities.
    """
    # conv1
    # conv(11, 11, 96, 4, 4, padding='VALID', name='conv1')
    k_h = 11
    k_w = 11
    c_o = 96
    s_h = 4
    s_w = 4
    conv1W = tf.Variable(net_data["conv1"][0])
    conv1b = tf.Variable(net_data["conv1"][1])
    # VALID padding, as in the Caffe definition above: (227 - 11) / 4 + 1 = 55
    conv1_in = conv(features, conv1W, conv1b, k_h, k_w, c_o, s_h, s_w, padding="VALID", group=1)
    conv1 = tf.nn.relu(conv1_in)

    # lrn1
    # lrn(2, 2e-05, 0.75, name='norm1')
    radius = 2
    alpha = 2e-05
    beta = 0.75
    bias = 1.0
    lrn1 = tf.nn.local_response_normalization(conv1, depth_radius=radius, alpha=alpha, beta=beta, bias=bias)

    # maxpool1
    # max_pool(3, 3, 2, 2, padding='VALID', name='pool1')
    k_h = 3
    k_w = 3
    s_h = 2
    s_w = 2
    padding = 'VALID'
    maxpool1 = tf.nn.max_pool(lrn1, ksize=[1, k_h, k_w, 1], strides=[1, s_h, s_w, 1], padding=padding)

    # conv2
    # conv(5, 5, 256, 1, 1, group=2, name='conv2')
    k_h = 5
    k_w = 5
    c_o = 256
    s_h = 1
    s_w = 1
    group = 2
    conv2W = tf.Variable(net_data["conv2"][0])
    conv2b = tf.Variable(net_data["conv2"][1])
    conv2_in = conv(maxpool1, conv2W, conv2b, k_h, k_w, c_o, s_h, s_w, padding="SAME", group=group)
    conv2 = tf.nn.relu(conv2_in)

    # lrn2
    # lrn(2, 2e-05, 0.75, name='norm2')
    radius = 2
    alpha = 2e-05
    beta = 0.75
    bias = 1.0
    lrn2 = tf.nn.local_response_normalization(conv2, depth_radius=radius, alpha=alpha, beta=beta, bias=bias)

    # maxpool2
    # max_pool(3, 3, 2, 2, padding='VALID', name='pool2')
    k_h = 3
    k_w = 3
    s_h = 2
    s_w = 2
    padding = 'VALID'
    maxpool2 = tf.nn.max_pool(lrn2, ksize=[1, k_h, k_w, 1], strides=[1, s_h, s_w, 1], padding=padding)

    # conv3
    # conv(3, 3, 384, 1, 1, name='conv3')
    k_h = 3
    k_w = 3
    c_o = 384
    s_h = 1
    s_w = 1
    group = 1
    conv3W = tf.Variable(net_data["conv3"][0])
    conv3b = tf.Variable(net_data["conv3"][1])
    conv3_in = conv(maxpool2, conv3W, conv3b, k_h, k_w, c_o, s_h, s_w, padding="SAME", group=group)
    conv3 = tf.nn.relu(conv3_in)

    # conv4
    # conv(3, 3, 384, 1, 1, group=2, name='conv4')
    k_h = 3
    k_w = 3
    c_o = 384
    s_h = 1
    s_w = 1
    group = 2
    conv4W = tf.Variable(net_data["conv4"][0])
    conv4b = tf.Variable(net_data["conv4"][1])
    conv4_in = conv(conv3, conv4W, conv4b, k_h, k_w, c_o, s_h, s_w, padding="SAME", group=group)
    conv4 = tf.nn.relu(conv4_in)

    # conv5
    # conv(3, 3, 256, 1, 1, group=2, name='conv5')
    k_h = 3
    k_w = 3
    c_o = 256
    s_h = 1
    s_w = 1
    group = 2
    conv5W = tf.Variable(net_data["conv5"][0])
    conv5b = tf.Variable(net_data["conv5"][1])
    conv5_in = conv(conv4, conv5W, conv5b, k_h, k_w, c_o, s_h, s_w, padding="SAME", group=group)
    conv5 = tf.nn.relu(conv5_in)

    # maxpool5
    # max_pool(3, 3, 2, 2, padding='VALID', name='pool5')
    k_h = 3
    k_w = 3
    s_h = 2
    s_w = 2
    padding = 'VALID'
    maxpool5 = tf.nn.max_pool(conv5, ksize=[1, k_h, k_w, 1], strides=[1, s_h, s_w, 1], padding=padding)

    # fc6, 4096
    fc6W = tf.Variable(net_data["fc6"][0])
    fc6b = tf.Variable(net_data["fc6"][1])
    # Flatten 6x6x256 -> 9216 features
    flat5 = tf.reshape(maxpool5, [-1, int(np.prod(maxpool5.get_shape().as_list()[1:]))])
    fc6 = tf.nn.relu(tf.matmul(flat5, fc6W) + fc6b)

    # fc7, 4096
    fc7W = tf.Variable(net_data["fc7"][0])
    fc7b = tf.Variable(net_data["fc7"][1])
    fc7 = tf.nn.relu(tf.matmul(fc6, fc7W) + fc7b)
    # Inference only: dropout (used on fc6/fc7 during training) is not applied here.

    if feature_extract:
        return fc7

    # fc8, 1000
    fc8W = tf.Variable(net_data["fc8"][0])
    fc8b = tf.Variable(net_data["fc8"][1])
    logits = tf.matmul(fc7, fc8W) + fc8b
    probabilities = tf.nn.softmax(logits)
    return probabilities
```