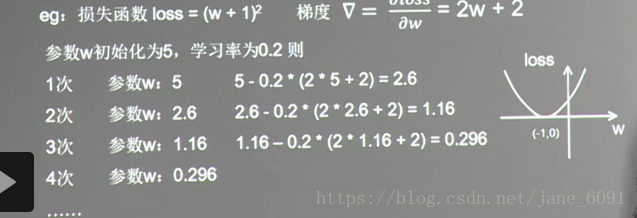

学习率表示为每次参数更新的幅度

wn+1(更新后的参数)=wn(当前参数)-learning_rate*损失函数的导数

例子:

得到loss函数斜率最小的点。

不知道为什么不直接计算梯度令其为0,是不是因为可能局部最小点。。

#tf_3_5.py

#设损失函数loss=(w+1)^2,令w初值为5,反向传播求最优w

import tensorflow as tf

w=tf.Variable(tf.constant(5,dtype=tf.float32))

loss = tf.square(w+1)

train_step=tf.train.GradientDescentOptimizer(0.2).minimize(loss)

with tf.Session() as sess:

init_op=tf.global_variables_initializer()

sess.run(init_op)

for i in range(10):

sess.run(train_step)

w_val=sess.run(w)

loss_val=sess.run(loss)

print("w is",w_val,"loss is ",loss_val)学习率的设置:

如果令learning_rate=1,振荡不收敛,为0.001时学习率小了收敛速度太慢,要迭代太多次

所以最好选择合适的学习率,既不会收敛振荡也不会收敛太慢。。(根据实际情况)

指数衰减学习率

跟batchsize有关

learning_rate=learning_rate_base*learning_rate_decay(衰减率=总样本数/batch_size)

global_step=tf.Variable(0,trainable=False)

learning_rate=tf.train.exponential_decay(LEARNING_RATE_BASE,global_step,LEARNING_RATE_STEP,LEARNING_RATE_DECAY,staircase=True)

#tf_3_6.py

#使用指数衰减的学习率,在迭代初期得到较高的下降速度,可以在较小的训练轮数下取得更有收敛度的值

import tensorflow as tf

LEARNINT_RATE_BASE=0.1

LEARNING_RATE_DECAY=0.99

LEARNING_RATE_STEP=2#喂入多少轮batchsize后,更新一次学习率

#当前轮数

global_step=tf.Variable(0,trainable=False)

#指数衰减的学习率

learning_rate=tf.train.exponential_decay(LEARNINT_RATE_BASE,global_step,LEARNING_RATE_STEP,LEARNING_RATE_DECAY,staircase=True)

w=tf.Variable(tf.constant(5,dtype=tf.float32))

loss=tf.square(w+1)

train_step=tf.train.GradientDescentOptimizer(learning_rate).minimize(loss,global_step=global_step)

with tf.Session() as sess:

init_op=tf.global_variables_initializer()

sess.run(init_op)

for i in range(40):

sess.run(train_step)

learning_rate_val=sess.run(learning_rate)

global_step_val=sess.run(global_step)

w_val=sess.run(w)

loss_val=sess.run(loss)

print("w is",w_val,"learning_rate_val is",learning_rate_val,"global_step_val is",global_step_val,"loss_val is",loss_val)