Preface

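My previous article, "Applying Spark SQL with MySQL in Practice" (《spark sql 在mysql的应用实践》), briefly described how we use Spark SQL in our business scenarios, the problems we ran into during development, and how we allocate cluster queues. This article focuses on Dataset caching in Spark: its parameters, its basic principles, and a brief walk through the relevant source code.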

cache

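In real development, the same Dataset is often used in many places. Every time an action is triggered, the operators that produce that Dataset are computed again, so the same work is repeated and execution becomes inefficient. Caching persists the Dataset in memory or on disk so that later actions can reuse it, which improves execution efficiency.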
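A minimal pure-Scala sketch of the recomputation problem (this is not Spark code; LazyDataset, computeCount and its two "actions" are hypothetical names): every action reruns the computation unless the result has been cached, so two actions on an uncached dataset compute twice, while a cached one computes only once.

```scala
// Hypothetical model of a lazily evaluated dataset; this is not Spark's API.
final class LazyDataset[T](compute: () => Seq[T]) {
  // How many times the underlying computation has actually run.
  var computeCount: Int = 0
  private var cacheEnabled: Boolean = false
  private var cached: Option[Seq[T]] = None

  // Only marks the dataset for caching; nothing is computed yet (cache is lazy).
  def cache(): this.type = { cacheEnabled = true; this }

  // Every action goes through here: reuse the cached result or rerun the computation.
  private def materialize(): Seq[T] = cached match {
    case Some(data) => data
    case None =>
      computeCount += 1
      val data = compute()
      if (cacheEnabled) cached = Some(data) // the first action fills the cache
      data
  }

  // Two "actions" that both need the full result.
  def count(): Long = materialize().size.toLong
  def collect(): Seq[T] = materialize()
}

val uncached = new LazyDataset[Int](() => (1 to 5).map(_ * 2))
uncached.count()
uncached.collect()

val cachedDs = new LazyDataset[Int](() => (1 to 5).map(_ * 2)).cache()
cachedDs.count()
cachedDs.collect()
```

In Spark the equivalent habit is calling cache() on a Dataset before running several actions, such as count() and collect(), on it.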

There are two ways to cache: cache() and persist().

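```scala
/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def cache(): this.type = persist()

/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)
```

As you can see, cache() simply calls persist() and always uses the default storage level MEMORY_ONLY, while persist(newLevel: StorageLevel) lets you choose another storage level as needed. Note that this snippet comes from RDD; for a Dataset, the no-argument cache() and persist() default to MEMORY_AND_DISK instead.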
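The delegation between these overloads can be modelled in a few lines. This is a hypothetical sketch (Persistable and the Level objects are made-up names, not Spark classes) that also imitates one RDD rule: once a storage level has been assigned, asking for a different one throws UnsupportedOperationException.

```scala
// Hypothetical model of RDD-style persist/cache delegation; not Spark's classes.
sealed trait Level
case object NONE extends Level
case object MEMORY_ONLY extends Level
case object MEMORY_AND_DISK extends Level

final class Persistable {
  private var _level: Level = NONE
  def storageLevel: Level = _level

  // Records the requested level; refuses to switch to a different level later.
  def persist(newLevel: Level): this.type = {
    if (_level != NONE && _level != newLevel)
      throw new UnsupportedOperationException(
        "Cannot change storage level after it was already assigned a level")
    _level = newLevel
    this
  }

  // The no-argument overloads only delegate, mirroring RDD.persist() and RDD.cache().
  def persist(): this.type = persist(MEMORY_ONLY)
  def cache(): this.type = persist()
}
```

Calling persist with the level that is already set is harmless; only a change of level is rejected.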

Parameters

```scala
/**
 * :: DeveloperApi ::
 * Flags for controlling the storage of an RDD. Each StorageLevel records whether to use memory,
 * or ExternalBlockStore, whether to drop the RDD to disk if it falls out of memory or
 * ExternalBlockStore, whether to keep the data in memory in a serialized format, and whether
 * to replicate the RDD partitions on multiple nodes.
 *
 * The [[org.apache.spark.storage.StorageLevel$]] singleton object contains some static constants
 * for commonly useful storage levels. To create your own storage level object, use the
 * factory method of the singleton object (`StorageLevel(...)`).
 */
@DeveloperApi
class StorageLevel private(
    private var _useDisk: Boolean,
    private var _useMemory: Boolean,
    private var _useOffHeap: Boolean,
    private var _deserialized: Boolean,
    private var _replication: Int = 1)
  extends Externalizable {
  // ...
}
```

From the source, a storage level is described by the following fields:

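- useDisk: store the data on disk (external storage).

- useMemory: store the data in memory.

- useOffHeap: store the data in off-heap memory. Off-heap memory is allocated outside the Java virtual machine's heap and is managed directly by the operating system rather than by the JVM. This keeps the heap small and reduces the impact of garbage collection on the application. Off-heap memory can still be used heavily and can still cause OOM errors; it is referenced through DirectByteBuffer objects that live on the heap, which avoids copying data back and forth between the heap and off-heap memory.

- deserialized: whether the data is kept in deserialized form (as Java objects) rather than as serialized bytes. Serialization turns an object into a sequence of bytes, and deserialization is the process of restoring the object from those bytes. Serialization is a mechanism for persisting objects: an object and its fields can be saved and then reconstructed directly by deserializing it.

- replication: the number of replicas (copies kept on multiple nodes; defaults to 1).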
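To see how the five fields combine, here is a simplified stand-in. Level and describe are hypothetical; only the flag values of the sample constants are copied from Spark's StorageLevel object shown next. A _SER level differs from its plain counterpart only in deserialized, and a _2 level only in replication.

```scala
// Simplified stand-in for StorageLevel's five fields; not Spark's class.
final case class Level(
    useDisk: Boolean,
    useMemory: Boolean,
    useOffHeap: Boolean,
    deserialized: Boolean,
    replication: Int = 1) {

  // Hypothetical human-readable summary (not the format of Spark's own description).
  def describe: String = {
    val where = Seq(
      if (useMemory && useOffHeap) Some("off-heap memory")
      else if (useMemory) Some("on-heap memory")
      else None,
      if (useDisk) Some("disk") else None
    ).flatten
    val placement = if (where.isEmpty) "not stored" else where.mkString(" + ")
    val format = if (deserialized) "deserialized objects" else "serialized bytes"
    s"$placement, $format, ${replication}x"
  }
}

// Flag values copied from Spark's predefined constants.
val MEMORY_ONLY = Level(false, true, false, true)
val MEMORY_ONLY_SER = Level(false, true, false, false)
val MEMORY_AND_DISK_2 = Level(true, true, false, true, 2)
val OFF_HEAP = Level(true, true, true, false, 1)
```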

In addition, the StorageLevel companion object predefines the commonly used storage levels:

```scala
/**
 * Various [[org.apache.spark.storage.StorageLevel]] defined and utility functions for creating
 * new storage levels.
 */
object StorageLevel {
  val NONE = new StorageLevel(false, false, false, false)
  val DISK_ONLY = new StorageLevel(true, false, false, false)
  val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)
  val MEMORY_ONLY = new StorageLevel(false, true, false, true)
  val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)
  val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)
  val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)
  val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)
  val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)
  val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)
  val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)
  val OFF_HEAP = new StorageLevel(true, true, true, false, 1)
  // ...
}
```

Dataset and Block

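Dataset caching is managed by Spark's storage module and carried out by the BlockManager. Logically, a cached Dataset is stored in units of blocks: after processing, each partition of the Dataset maps to exactly one block (whose BlockId has the form rdd_RDD-ID_PARTITION-ID). Depending on the storage level, a block can be stored on disk, in on-heap memory, or in off-heap memory. In the implementation, the BlockManager's MemoryStore uses a LinkedHashMap to manage all block instances held in on-heap and off-heap storage memory. Only after all blocks of a Dataset have been removed does the driver JVM release its reference to the Dataset object.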
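The block naming can be illustrated with a tiny helper. RddBlockRef is a hypothetical class that only reproduces the rdd_RDD-ID_PARTITION-ID format described above; Spark's own implementation is the RDDBlockId class, which this sketch does not use.

```scala
// Hypothetical helper reproducing the "rdd_<rddId>_<partitionId>" block naming.
final case class RddBlockRef(rddId: Int, partitionId: Int) {
  def name: String = s"rdd_${rddId}_${partitionId}"
}

object RddBlockRef {
  private val Pattern = """rdd_(\d+)_(\d+)""".r

  // Parses a block name back into its ids; None for names of other block kinds.
  def parse(name: String): Option[RddBlockRef] = name match {
    case Pattern(rdd, part) => Some(RddBlockRef(rdd.toInt, part.toInt))
    case _                  => None
  }
}

// One block per partition: a Dataset backed by RDD 7 with 3 partitions.
val blocks = (0 until 3).map(p => RddBlockRef(7, p).name)
```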

```scala
/**
 * Component of the [[BlockManager]] which tracks metadata for blocks and manages block locking.
 *
 * The locking interface exposed by this class is readers-writer lock. Every lock acquisition is
 * automatically associated with a running task and locks are automatically released upon task
 * completion or failure.
 *
 * This class is thread-safe.
 */
private[storage] class BlockInfoManager extends Logging {

  private type TaskAttemptId = Long

  /**
   * Used to look up metadata for individual blocks. Entries are added to this map via an atomic
   * set-if-not-exists operation ([[lockNewBlockForWriting()]]) and are removed
   * by [[removeBlock()]].
   */
  @GuardedBy("this")
  private[this] val infos = new mutable.HashMap[BlockId, BlockInfo]

  // ...
}
```

Here infos is a plain HashMap holding per-block metadata, while the LinkedHashMap mentioned above is the entries map inside the MemoryStore. In both cases, adding and removing entries indirectly records when memory for a block is acquired and released. Entries are added and removed by the following two methods:

```scala
/**
 * Attempt to acquire the appropriate lock for writing a new block.
 *
 * This enforces the first-writer-wins semantics. If we are the first to write the block,
 * then just go ahead and acquire the write lock. Otherwise, if another thread is already
 * writing the block, then we wait for the write to finish before acquiring the read lock.
 *
 * @return true if the block did not already exist, false otherwise. If this returns false, then
 *         a read lock on the existing block will be held. If this returns true, a write lock on
 *         the new block will be held.
 */
def lockNewBlockForWriting(
    blockId: BlockId,
    newBlockInfo: BlockInfo): Boolean = synchronized {
  logTrace(s"Task $currentTaskAttemptId trying to put $blockId")
  lockForReading(blockId) match {
    case Some(info) =>
      // Block already exists. This could happen if another thread races with us to compute
      // the same block. In this case, just keep the read lock and return.
      false
    case None =>
      // Block does not yet exist or is removed, so we are free to acquire the write lock
      infos(blockId) = newBlockInfo
      lockForWriting(blockId)
      true
  }
}

/**
 * Removes the given block and releases the write lock on it.
 *
 * This can only be called while holding a write lock on the given block.
 */
def removeBlock(blockId: BlockId): Unit = synchronized {
  logTrace(s"Task $currentTaskAttemptId trying to remove block $blockId")
  infos.get(blockId) match {
    case Some(blockInfo) =>
      if (blockInfo.writerTask != currentTaskAttemptId) {
        throw new IllegalStateException(
          s"Task $currentTaskAttemptId called remove() on block $blockId without a write lock")
      } else {
        infos.remove(blockId)
        blockInfo.readerCount = 0
        blockInfo.writerTask = BlockInfo.NO_WRITER
        writeLocksByTask.removeBinding(currentTaskAttemptId, blockId)
      }
    case None =>
      throw new IllegalArgumentException(
        s"Task $currentTaskAttemptId called remove() on non-existent block $blockId")
  }
  notifyAll()
}
```

You may have noticed that adding a new block takes the write lock (or, if the block already exists, a read lock on it). In fact, block metadata is accessed under a readers-writer lock to improve performance under concurrency: each executor runs its tasks on a thread pool, so many threads read and write partitions (that is, blocks) at the same time. To keep this access both thread-safe and fast, BlockInfoManager combines a readers-writer lock with Guava's ConcurrentHashMultiset, which counts the read locks each task holds.
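The first-writer-wins rule can be sketched with a plain Java monitor. ToyBlockLocks, Info and their methods are hypothetical and heavily simplified compared with BlockInfoManager: readers are counted but not tracked per task, and there is no automatic release on task completion. The sketch does reuse the invariant checked by BlockInfo (never readers and a writer at the same time).

```scala
import scala.collection.mutable

// Hypothetical model of "first writer wins, readers wait for the writer"; not Spark code.
final class ToyBlockLocks {
  private val NoWriter = -1L

  private final class Info {
    var readers: Int = 0
    var writer: Long = -1L // -1 means "no writer", like BlockInfo.NO_WRITER
  }

  private val infos = mutable.HashMap.empty[String, Info]

  // Waits while a task holds the write lock, then takes a read lock.
  // Returns false if the block does not exist.
  def lockForReading(id: String): Boolean = synchronized {
    while (infos.get(id).exists(_.writer != NoWriter)) wait()
    infos.get(id) match {
      case Some(info) => info.readers += 1; true
      case None       => false
    }
  }

  // true: the caller created the block and holds its write lock.
  // false: the block already existed and the caller now holds a read lock.
  def lockNewBlockForWriting(id: String, task: Long): Boolean = synchronized {
    if (lockForReading(id)) false
    else {
      val info = new Info
      info.writer = task
      infos(id) = info
      true
    }
  }

  // Releases the caller's write lock (or one read lock) and wakes up waiting threads.
  def unlock(id: String, task: Long): Unit = synchronized {
    infos.get(id).foreach { info =>
      if (info.writer == task) info.writer = NoWriter
      else if (info.readers > 0) info.readers -= 1
      // Same invariant as BlockInfo.checkInvariants.
      assert(info.readers == 0 || info.writer == NoWriter)
    }
    notifyAll()
  }

  def readerCount(id: String): Int = synchronized {
    infos.get(id).map(_.readers).getOrElse(0)
  }
}
```

A second task that races to compute the same block blocks inside lockNewBlockForWriting until the first writer unlocks, and then comes back with false and a read lock instead of recomputing the block.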

Executor source:

```scala
/**
 * Spark executor, backed by a threadpool to run tasks.
 *
 * This can be used with Mesos, YARN, and the standalone scheduler.
 * An internal RPC interface is used for communication with the driver,
 * except in the case of Mesos fine-grained mode.
 */
private[spark] class Executor(
    executorId: String,
    executorHostname: String,
    env: SparkEnv,
    userClassPath: Seq[URL] = Nil,
    isLocal: Boolean = false)
  extends Logging {

  logInfo(s"Starting executor ID $executorId on host $executorHostname")

  // Application dependencies (added through SparkContext) that we've fetched so far on this node.
  // Each map holds the master's timestamp for the version of that file or JAR we got.
  private val currentFiles: HashMap[String, Long] = new HashMap[String, Long]()
  private val currentJars: HashMap[String, Long] = new HashMap[String, Long]()

  private val EMPTY_BYTE_BUFFER = ByteBuffer.wrap(new Array[Byte](0))

  private val conf = env.conf

  // No ip or host:port - just hostname
  Utils.checkHost(executorHostname, "Expected executed slave to be a hostname")
  // must not have port specified.
  assert (0 == Utils.parseHostPort(executorHostname)._2)

  // Make sure the local hostname we report matches the cluster scheduler's name for this host
  Utils.setCustomHostname(executorHostname)

  if (!isLocal) {
    // Setup an uncaught exception handler for non-local mode.
    // Make any thread terminations due to uncaught exceptions kill the entire
    // executor process to avoid surprising stalls.
    Thread.setDefaultUncaughtExceptionHandler(SparkUncaughtExceptionHandler)
  }

  // Start worker thread pool
  private val threadPool = ThreadUtils.newDaemonCachedThreadPool("Executor task launch worker")
  private val executorSource = new ExecutorSource(threadPool, executorId)

  // ...
}
```

BlockInfo source:

```scala
/**
 * Tracks metadata for an individual block.
 *
 * Instances of this class are _not_ thread-safe and are protected by locks in the
 * [[BlockInfoManager]].
 *
 * @param level the block's storage level. This is the requested persistence level, not the
 *              effective storage level of the block (i.e. if this is MEMORY_AND_DISK, then this
 *              does not imply that the block is actually resident in memory).
 * @param classTag the block's [[ClassTag]], used to select the serializer
 * @param tellMaster whether state changes for this block should be reported to the master. This
 *                   is true for most blocks, but is false for broadcast blocks.
 */
private[storage] class BlockInfo(
    val level: StorageLevel,
    val classTag: ClassTag[_],
    val tellMaster: Boolean) {

  /**
   * The size of the block (in bytes)
   */
  def size: Long = _size
  def size_=(s: Long): Unit = {
    _size = s
    checkInvariants()
  }
  private[this] var _size: Long = 0

  /**
   * The number of times that this block has been locked for reading.
   */
  def readerCount: Int = _readerCount
  def readerCount_=(c: Int): Unit = {
    _readerCount = c
    checkInvariants()
  }
  private[this] var _readerCount: Int = 0

  /**
   * The task attempt id of the task which currently holds the write lock for this block, or
   * [[BlockInfo.NON_TASK_WRITER]] if the write lock is held by non-task code, or
   * [[BlockInfo.NO_WRITER]] if this block is not locked for writing.
   */
  def writerTask: Long = _writerTask
  def writerTask_=(t: Long): Unit = {
    _writerTask = t
    checkInvariants()
  }
  private[this] var _writerTask: Long = BlockInfo.NO_WRITER

  private def checkInvariants(): Unit = {
    // A block's reader count must be non-negative:
    assert(_readerCount >= 0)
    // A block is either locked for reading or for writing, but not for both at the same time:
    assert(_readerCount == 0 || _writerTask == BlockInfo.NO_WRITER)
  }

  checkInvariants()
}
```

The cache process

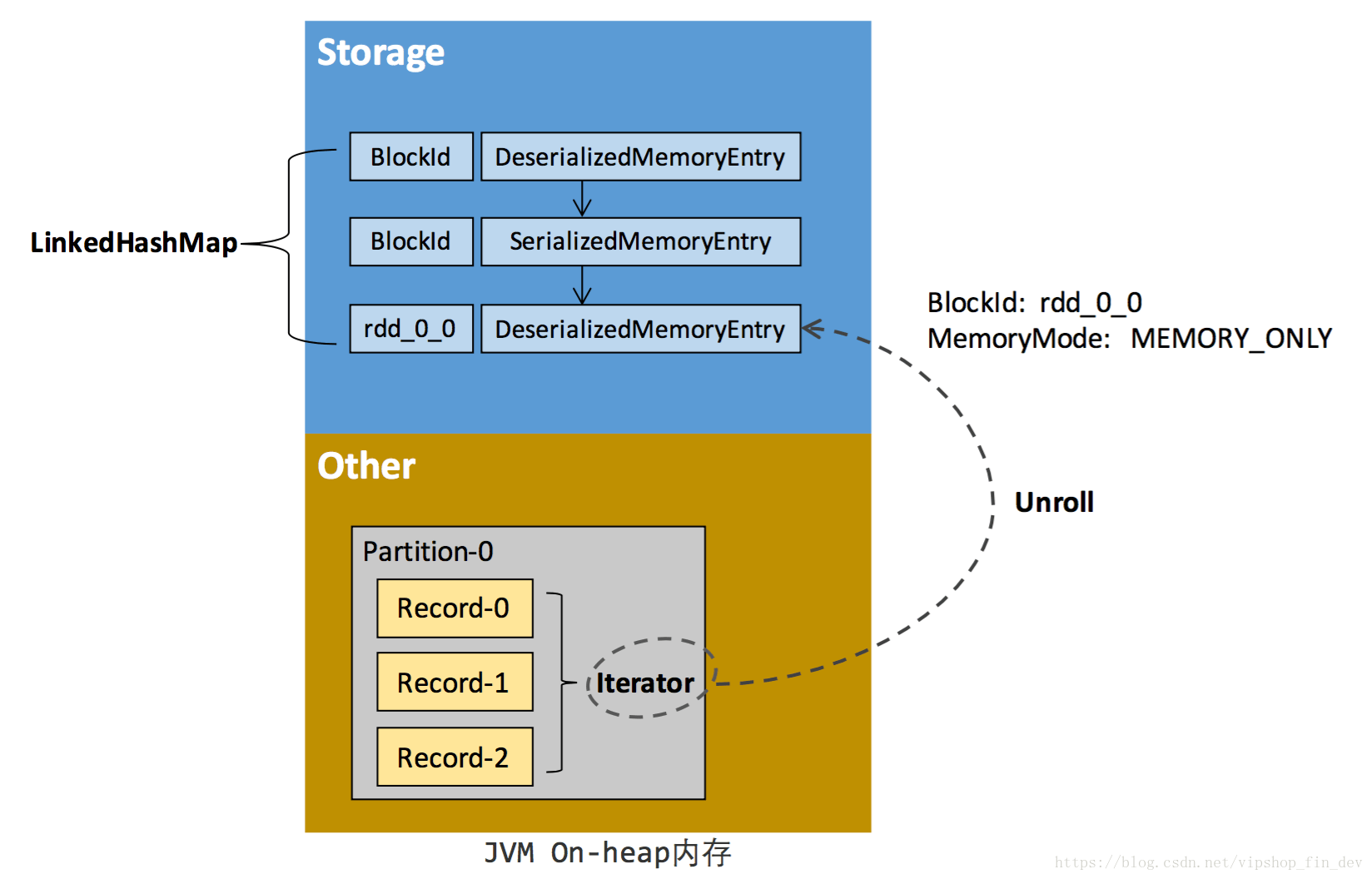

The relationship between a Dataset and the BlockManager is shown in the figure above:

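- Before a Dataset is cached in memory, we read every record of each of its partitions. These record object instances logically occupy the "other" portion of the JVM's on-heap memory, and the records of one partition are not stored contiguously. After the RDD is cached in storage memory, each partition is converted into a block, and its records occupy one contiguous region of on-heap or off-heap storage memory. Spark calls this process of turning a partition's non-contiguous storage into contiguous storage "unrolling" (Unroll).

- A block has two storage formats, serialized and deserialized, and which one is used depends on the RDD's storage level. A deserialized block is represented by the DeserializedMemoryEntry data structure, which keeps all object instances in an array; a serialized block is represented by SerializedMemoryEntry, which keeps the binary data in byte buffers (ByteBuffer).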
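The two block formats can be contrasted with a small sketch. ToyDeserializedEntry, ToySerializedEntry and store are hypothetical stand-ins (Spark's real entry classes have different fields), and plain Java serialization via ObjectOutputStream is used instead of Spark's configured serializer. The deserialized form keeps an array of live objects, while the serialized form keeps one byte buffer that has to be deserialized on every read.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}
import java.nio.ByteBuffer

// Hypothetical stand-ins for the two memory-entry shapes; not Spark's classes.
sealed trait ToyMemoryEntry { def records: Seq[String] }

// Deserialized: an array holding the object instances themselves.
final case class ToyDeserializedEntry(values: Array[String]) extends ToyMemoryEntry {
  def records: Seq[String] = values.toSeq
}

// Serialized: one contiguous byte buffer; every read pays for deserialization.
final case class ToySerializedEntry(buffer: ByteBuffer) extends ToyMemoryEntry {
  def records: Seq[String] = {
    val bytes = new Array[Byte](buffer.remaining())
    buffer.duplicate().get(bytes) // duplicate() leaves this entry's position untouched
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes))
    try in.readObject().asInstanceOf[Array[String]].toSeq finally in.close()
  }
}

// Stores a partition's records in the format selected by the `deserialized` flag.
def store(partition: Iterator[String], deserialized: Boolean): ToyMemoryEntry =
  if (deserialized) ToyDeserializedEntry(partition.toArray)
  else {
    val bytesOut = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(bytesOut)
    try out.writeObject(partition.toArray) finally out.close()
    ToySerializedEntry(ByteBuffer.wrap(bytesOut.toByteArray))
  }
```

The trade-off matches the storage levels: the serialized form is usually more compact, while the deserialized form is faster to read.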

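Because there is no guarantee that storage memory can hold all the data in the Iterator at once, the task performing the unroll must request enough unroll space from the MemoryManager to reserve it temporarily. If there is not enough space the unroll fails; otherwise it continues. For a serialized partition, the required unroll space can be computed by simple accumulation and requested in one go. For a deserialized partition, space has to be requested incrementally while iterating over the records: each time a record is read, the required unroll space is estimated by sampling and requested, and if space runs out the process can stop and release the unroll space it already holds. If the unroll eventually succeeds, the unroll space held by the current partition is converted into regular storage space for the cached RDD, as shown in the figure below: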

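Because all tasks in an Executor share a limited amount of storage memory, when a new block needs to be cached but the remaining space is insufficient and cannot be obtained dynamically (by borrowing from execution memory), old blocks in the LinkedHashMap must be evicted. If an evicted block's storage level also includes disk, the block is written to disk; otherwise it is simply deleted. Blocks are evicted in least-recently-used (LRU) order until there is enough space for the new block.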
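The eviction policy can be sketched with java.util.LinkedHashMap in access order, the same kind of map the MemoryStore uses. ToyMemoryStore, ToyEntry and the spilledToDisk/dropped lists are hypothetical bookkeeping for illustration. The sketch ignores borrowing from execution memory, and it also omits a rule that Spark applies: blocks belonging to the RDD that is currently being cached are not chosen for eviction.

```scala
import java.util.{LinkedHashMap => JLinkedHashMap}
import scala.collection.mutable.ArrayBuffer

// Hypothetical LRU model of storage-memory eviction; not Spark's MemoryStore.
final case class ToyEntry(size: Long, useDisk: Boolean)

final class ToyMemoryStore(capacity: Long) {
  // accessOrder = true: get/put move a key to the tail, so the head is the LRU entry.
  private val entries = new JLinkedHashMap[String, ToyEntry](16, 0.75f, true)
  private var used = 0L
  val spilledToDisk = ArrayBuffer.empty[String] // evicted, but the level includes disk
  val dropped = ArrayBuffer.empty[String]       // evicted and simply deleted

  def get(id: String): Option[ToyEntry] = Option(entries.get(id))

  // Assumes `id` is not already stored. Returns false if the block can never fit.
  def put(id: String, entry: ToyEntry): Boolean = {
    if (entry.size > capacity) return false
    val it = entries.entrySet().iterator()
    // Evict from the head (least recently used) until the new block fits.
    while (used + entry.size > capacity && it.hasNext) {
      val victim = it.next()
      it.remove()
      used -= victim.getValue.size
      if (victim.getValue.useDisk) spilledToDisk += victim.getKey
      else dropped += victim.getKey
    }
    entries.put(id, entry)
    used += entry.size
    true
  }

  // Block ids ordered from least to most recently used.
  def blockIds: List[String] = {
    val ids = List.newBuilder[String]
    val it = entries.keySet().iterator()
    while (it.hasNext) ids += it.next()
    ids.result()
  }
}
```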

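The cache process above shows that caching rearranges memory once, on-heap or off-heap: the non-contiguous storage of the original partition becomes one contiguous region, and data spills to disk only when memory cannot hold a new block. As a result, reads on a cached Dataset are more efficient, and its partitions no longer have to be recomputed for every action.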
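The record-by-record unroll reservation for a deserialized partition, described earlier, can be sketched as follows. ToyStorageMemory, unroll and the result types are hypothetical, and each record's size is supplied by the caller instead of being estimated by sampling as Spark does. On failure the partial reservation is released; on success it is kept as the block's storage space.

```scala
// Hypothetical shared pool of storage memory; not Spark's MemoryManager.
final class ToyStorageMemory(val capacity: Long) {
  private var used = 0L
  def acquire(bytes: Long): Boolean = synchronized {
    if (bytes <= capacity - used) { used += bytes; true } else false
  }
  def release(bytes: Long): Unit = synchronized { used -= bytes }
  def usedBytes: Long = synchronized(used)
}

sealed trait UnrollResult
final case class Unrolled(values: Vector[String], bytes: Long) extends UnrollResult
final case class UnrollFailed(recordsSeen: Int) extends UnrollResult

// Reserves space one record at a time while copying records into a contiguous buffer.
def unroll(records: Iterator[String], sizeOf: String => Long, mem: ToyStorageMemory): UnrollResult = {
  var reserved = 0L
  var seen = 0
  val buffer = Vector.newBuilder[String]
  while (records.hasNext) {
    val record = records.next()
    seen += 1
    val need = sizeOf(record)
    if (!mem.acquire(need)) {
      mem.release(reserved) // give back the partial reservation
      return UnrollFailed(seen)
    }
    reserved += need
    buffer += record
  }
  // On success the reservation is kept and becomes the cached block's storage.
  Unrolled(buffer.result(), reserved)
}
```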

Removing the cache

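For a cached Dataset, executors drop old partitions in least-recently-used (LRU) order as they run. If you are sure the data will no longer be used, you can call dataset.unpersist() to release the memory. However, this only sends a "remove RDD blocks" message to the driver's and executors' message queues; the removal is not executed immediately (for a Dataset, the no-argument unpersist() is non-blocking, while unpersist(blocking = true) waits until the blocks are removed). Avoid removing a large number of RDDs or Datasets at the same time, which can congest the message queue.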
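The asynchronous behaviour can be illustrated with a small model in which removal requests go through a single background worker. ToyBlockRegistry and its removeRdd method are hypothetical names that only loosely echo the removeRdd(rddId, blocking) call in the source that follows; here the "message queue" is just a one-thread executor.

```scala
import java.util.concurrent.{ConcurrentHashMap, Executors, ThreadFactory}
import java.util.function.Predicate
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// Hypothetical model of asynchronous block removal; not Spark's BlockManagerMaster.
final class ToyBlockRegistry {
  // One daemon worker stands in for the queue between the driver and the executors.
  private val pool = Executors.newSingleThreadExecutor(new ThreadFactory {
    def newThread(r: Runnable): Thread = { val t = new Thread(r); t.setDaemon(true); t }
  })
  private implicit val ec: ExecutionContext = ExecutionContext.fromExecutor(pool)

  val blocks: java.util.Set[String] = ConcurrentHashMap.newKeySet[String]()

  // Enqueues the removal and returns at once; with blocking = true it also waits.
  def removeRdd(rddId: Int, blocking: Boolean): Future[Unit] = {
    val prefix = s"rdd_${rddId}_"
    val done = Future {
      blocks.removeIf(new Predicate[String] {
        def test(id: String): Boolean = id.startsWith(prefix)
      })
      ()
    }
    if (blocking) Await.result(done, 10.seconds)
    done
  }

  def shutdown(): Unit = pool.shutdown()
}
```

With blocking = false the caller continues while the blocks may still be present, which is why many simultaneous unpersist calls simply pile up in the queue.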

```scala
// SparkContext.scala
/**
 * Unpersist an RDD from memory and/or disk storage
 */
private[spark] def unpersistRDD(rddId: Int, blocking: Boolean = true) {
  env.blockManager.master.removeRdd(rddId, blocking)
  persistentRdds.remove(rddId)
  listenerBus.post(SparkListenerUnpersistRDD(rddId))
}
```

That is my understanding of Dataset caching, compiled from the references below. Comments and corrections are welcome.

赖泽坤 @vip.fcs

References:

1. https://www.ibm.com/developerworks/cn/analytics/library/ba-cn-apache-spark-memory-management/index.html?ca=drs-&utm_source=tuicool&utm_medium=referral

2. https://www.cnblogs.com/starwater/p/6841807.html

3. https://blog.csdn.net/yu0_zhang0/article/details/80424609