Background:

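There is a Spring Boot project that exposes a RESTful interface. Application teams and other departments call this interface to push data, and the data it receives is written to a directory on local disk. Only one log file is produced per day for each business type (data is split by business, one file per type).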

Requirements:

Log data format:

2018-06-28.16:46:38.374 {"bizKey":"DXADXHZWEXTGCFQB","sign":"F068492CC90210167E275977FFBE5A0C","logStr":"{\"id\":\"1004392469380538370\",\"acode\":\"CLICK\",\"app\":\"4.3.4\",\"apprunid\":\"5DB78A8FE3AE_1528272457316\",\"ctime\":\"2018-06-25 13:32:17\",\"create_time\":\"2018-06-25 13:32:17\",\"nt\":\"WIFI\",\"platform\":\"0\",\"r\":\"\",\"stime\":\"2018-06-25 13:32:17\",\"phone\":\"18519087423\",\"p1\":\"SQHomePageViewController\",\"p2\":\"SQRecommendViewController\",\"p3\":\"\",\"android_id\":\"94e3fcc30e90edf2\",\"auid\":\"7da009b4b7878c179d65e20e4d1d589d\",\"idfa\":\"\",\"imei\":\"860797036717585\",\"imsi\":\"460000864499542\",\"install_id\":\"7da009b4b7878c179d65e20e4d1d589d_1515973088836\",\"mac\":\"\",\"wmac\":\"90:ad:f7:86:28:f0\",\"pcode\":\"\",\"uid\":\"\",\"property\":\"22C25529510391525315227D\",\"ar\":\"\",\"at\":\"291366\",\"deviceid\":\"\",\"lat\":\"38.92499077690972\",\"lg\":\"121.65503987630208\",\"os\":\"Android\",\"os_ver\":\"7.0\",\"brand\":\"HUAWEI\",\"xh\":\"HUAWEI NXT-AL10\",\"coordinate\":\"GAODE\",\"city\":\"大连市\",\"trigger_man_phone\":\"18018990297\",\"app_code\":\"1002\",\"cookie\":\"\",\"serialno\":\"CJL5T16217005509\"}","logType":"log","warehouseTableName":"user_tab"}

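The above shows the log data format. Flume collects these logs in real time. bizKey identifies which business a log line belongs to, and each line is sent to a different Kafka topic according to its bizKey value.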
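Each line therefore has two parts: a timestamp in the form yyyy-MM-dd.HH:mm:ss.SSS, then a space, then a JSON envelope whose logStr field carries the actual event as an escaped JSON string. The sketch below, assuming exactly that layout, splits a line and pulls out bizKey and the unescaped logStr. The class name LogLine and its methods are hypothetical, and the regex-based extraction stands in for a real JSON parser: it only understands the \" and \\ escapes that appear in the sample.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: splits one raw log line into timestamp + JSON envelope.
final class LogLine {
    // Same pattern the generator's getCurrDate() uses
    static final DateTimeFormatter TS = DateTimeFormatter.ofPattern("yyyy-MM-dd.HH:mm:ss.SSS");
    // "bizKey":"<value>" in the envelope (assumes no whitespace around the colon)
    static final Pattern BIZ_KEY = Pattern.compile("\"bizKey\":\"([^\"]*)\"");
    // "logStr":"<escaped JSON>": any char except " and \, or a backslash escape
    static final Pattern LOG_STR = Pattern.compile("\"logStr\":\"((?:[^\"\\\\]|\\\\.)*)\"");

    final LocalDateTime time;
    final String payload;

    private LogLine(LocalDateTime time, String payload) {
        this.time = time;
        this.payload = payload;
    }

    // The timestamp is everything before the first space; the JSON is everything after it
    static LogLine parse(String raw) {
        int sp = raw.indexOf(' ');
        if (sp < 0) {
            throw new IllegalArgumentException("no timestamp separator: " + raw);
        }
        return new LogLine(LocalDateTime.parse(raw.substring(0, sp), TS), raw.substring(sp + 1));
    }

    // Value of the top-level bizKey field, or null if absent
    String bizKey() {
        Matcher m = BIZ_KEY.matcher(payload);
        return m.find() ? m.group(1) : null;
    }

    // logStr with one level of escaping removed; handles \" and \\ only
    String innerLog() {
        Matcher m = LOG_STR.matcher(payload);
        if (!m.find()) {
            return null;
        }
        String s = m.group(1);
        StringBuilder out = new StringBuilder(s.length());
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            if (c == '\\' && i + 1 < s.length()) {
                c = s.charAt(++i); // keep the escaped character, drop the backslash
            }
            out.append(c);
        }
        return out.toString();
    }
}
```

Splitting on the first space is safe here because the timestamp itself contains no spaces, while fields inside the JSON (such as ctime) do.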

Flume agent configuration (logcollect.conf):

```properties
# Name the components on this agent
# Source name
logcollect.sources = taildir-source
# Channel names
logcollect.channels = risk-channel user-channel
# Sink names
logcollect.sinks = risk-sink user-sink

# Describe/configure the source
# Source type
logcollect.sources.taildir-source.type = TAILDIR
# JSON file recording the inode, absolute path and last read position of each tailed file
logcollect.sources.taildir-source.positionFile = /home/bigdata/flume/partition/patition.json
# File group: files whose new content is tailed. A single file can be given by its full path
# (e.g. solar-venus-data.log); for several files use a file-name regex such as
# /u01/solar-venus/log/data/.*.log. Only the file-name part may be a regex, so the directory
# must be the one the log is written to (the test below writes /home/bigdata/flume/data/data.log).
logcollect.sources.taildir-source.filegroups = f1
logcollect.sources.taildir-source.filegroups.f1 = /data/.*.log
# Channels the source writes to
logcollect.sources.taildir-source.channels = user-channel risk-channel

# Interceptor
logcollect.sources.taildir-source.interceptors = interceptor
# Interceptor type: regex extractor (regex_extractor)
logcollect.sources.taildir-source.interceptors.interceptor.type = regex_extractor
# Capture KCAELBDDCYOXSLGR or DXADXHZWEXTGCFQB from the line. This does not filter:
# lines that do not match pass through without the header.
logcollect.sources.taildir-source.interceptors.interceptor.regex = .*(KCAELBDDCYOXSLGR|DXADXHZWEXTGCFQB).*
logcollect.sources.taildir-source.interceptors.interceptor.serializers = s1
# Store capture group 1 in the event header "key"
logcollect.sources.taildir-source.interceptors.interceptor.serializers.s1.name = key

# Selector
# Multiplexing selector: route each event by the value of its "key" header
logcollect.sources.taildir-source.selector.type = multiplexing
logcollect.sources.taildir-source.selector.header = key
# Lines containing KCAELBDDCYOXSLGR go to risk-channel
logcollect.sources.taildir-source.selector.mapping.KCAELBDDCYOXSLGR = risk-channel
# Lines containing DXADXHZWEXTGCFQB go to user-channel
logcollect.sources.taildir-source.selector.mapping.DXADXHZWEXTGCFQB = user-channel

# Describe/configure the channels
# risk-channel: in-memory channel
logcollect.channels.risk-channel.type = memory
# Maximum number of events stored in the channel
logcollect.channels.risk-channel.capacity=10000
# Percentage of buffer between byteCapacity and the estimated total size of all events
# in the channel, to account for header data (a percentage; Flume's default is 20)
logcollect.channels.risk-channel.byteCapacityBufferPercentage=20

# user-channel
logcollect.channels.user-channel.type = memory
logcollect.channels.user-channel.capacity=10000
logcollect.channels.user-channel.byteCapacityBufferPercentage=20

# Describe/configure the sinks
# risk-sink: sink type (a custom sink class)
logcollect.sinks.risk-sink.type = com.sqyc.bigdata.sink.SolarKafkaSink
# Kafka topic
logcollect.sinks.risk-sink.kafka.topic = risk_test
logcollect.sinks.risk-sink.kafka.bootstrap.servers = <Kafka cluster address>
logcollect.sinks.risk-sink.kafka.flumeBatchSize = 2000
logcollect.sinks.risk-sink.kafka.producer.acks = 1
# Channel the sink reads from
logcollect.sinks.risk-sink.channel = risk-channel

# user-sink
logcollect.sinks.user-sink.type = com.sqyc.bigdata.sink.SolarKafkaSink
logcollect.sinks.user-sink.kafka.topic = user_test
logcollect.sinks.user-sink.kafka.bootstrap.servers = <Kafka cluster address>
logcollect.sinks.user-sink.kafka.flumeBatchSize = 2000
logcollect.sinks.user-sink.kafka.producer.acks = 1
logcollect.sinks.user-sink.channel = user-channel
```

Create the topics:

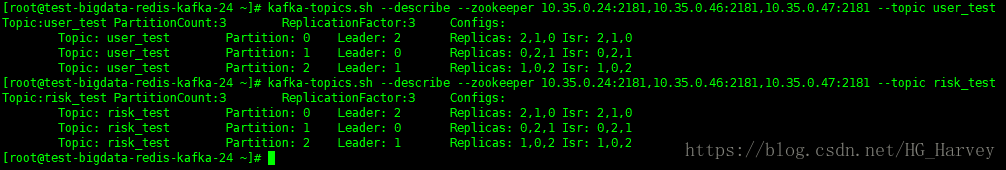

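```shell
kafka-topics.sh --create --zookeeper <ZooKeeper addresses, comma-separated> --replication-factor 3 --partitions 3 --topic user_test
kafka-topics.sh --create --zookeeper <ZooKeeper addresses, comma-separated> --replication-factor 3 --partitions 3 --topic risk_test
```

View topic details:

```shell
kafka-topics.sh --describe --zookeeper <ZooKeeper addresses, comma-separated> --topic topicName
```

(The --zookeeper option matches the Kafka version used here; newer Kafka releases take --bootstrap-server with a broker address instead.)

Create a project to simulate log generation: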

代码:

package com.sqyc.bigdata.log;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.text.SimpleDateFormat;

import java.util.Date;

/**

* @description 模拟生成日志

* @author huhanwei

*/

public class LogGenerate {

private static final Logger logger = LoggerFactory.getLogger(LogGenerate.class);

public static void main(String[] args) throws InterruptedException {

logger.info("----------------------------------------> log generate start...");

for (int i = 1; i<= 100000; i++) {

Thread.sleep(200);

System.out.println(getData());

}

logger.info("----------------------------------------> log generate end...");

}

private static String getData() {

String msg = "";

int num = (int)(Math.random() * 3) + 1;

switch (num) {

case 1:

msg = getCurrDate() + " " + "{\"bizKey\":\"KCAELBDDCYOXSLGR\",\"sign\":\"F038492CC90210167E275977FFBE5A0C\"," +

"\"logStr\":\"{\\\"id\\\":\\\"1003392469380538370\\\",\\\"acode\\\":\\\"CLICK\\\",\\\"app\\\":\\\"4.3.4\\\",\\\"apprunid\\\":\\\"5DB78A8FE3AE_1528272457316\\\",\\\"ctime\\\":\\\"2018-06-25 13:32:17\\\",\\\"create_time\\\":\\\"2018-06-25 13:32:17\\\",\\\"nt\\\":\\\"WIFI\\\",\\\"platform\\\":\\\"0\\\",\\\"r\\\":\\\"\\\",\\\"stime\\\":\\\"2018-06-25 13:32:17\\\",\\\"phone\\\":\\\"18519087423\\\",\\\"p1\\\":\\\"SQHomePageViewController\\\",\\\"p2\\\":\\\"SQRecommendViewController\\\",\\\"p3\\\":\\\"\\\",\\\"android_id\\\":\\\"94e3fcc30e90edf2\\\",\\\"auid\\\":\\\"7da009b4b7878c179d65e20e4d1d589d\\\",\\\"idfa\\\":\\\"\\\",\\\"imei\\\":\\\"860797036717585\\\",\\\"imsi\\\":\\\"460000864499542\\\",\\\"install_id\\\":\\\"7da009b4b7878c179d65e20e4d1d589d_1515973088836\\\",\\\"mac\\\":\\\"\\\",\\\"wmac\\\":\\\"90:ad:f7:86:28:f0\\\",\\\"pcode\\\":\\\"\\\",\\\"uid\\\":\\\"\\\",\\\"property\\\":\\\"22C25529510391525315227D\\\",\\\"ar\\\":\\\"\\\",\\\"at\\\":\\\"291366\\\",\\\"deviceid\\\":\\\"\\\",\\\"lat\\\":\\\"38.92499077690972\\\",\\\"lg\\\":\\\"121.65503987630208\\\",\\\"os\\\":\\\"Android\\\",\\\"os_ver\\\":\\\"7.0\\\",\\\"brand\\\":\\\"HUAWEI\\\",\\\"xh\\\":\\\"HUAWEI NXT-AL10\\\",\\\"coordinate\\\":\\\"GAODE\\\",\\\"city\\\":\\\"大连市\\\",\\\"trigger_man_phone\\\":\\\"18018990297\\\",\\\"app_code\\\":\\\"1002\\\",\\\"cookie\\\":\\\"\\\",\\\"serialno\\\":\\\"CJL5T16217005509\\\"}\"," +

"\"logType\":\"log\",\"warehouseTableName\":\"risk_tab\"}";

break;

case 2:

msg = getCurrDate() + " " + "{\"bizKey\":\"DXADXHZWEXTGCFQB\",\"sign\":\"F068492CC90210167E275977FFBE5A0C\"," +

"\"logStr\":\"{\\\"id\\\":\\\"1004392469380538370\\\",\\\"acode\\\":\\\"CLICK\\\",\\\"app\\\":\\\"4.3.4\\\",\\\"apprunid\\\":\\\"5DB78A8FE3AE_1528272457316\\\",\\\"ctime\\\":\\\"2018-06-25 13:32:17\\\",\\\"create_time\\\":\\\"2018-06-25 13:32:17\\\",\\\"nt\\\":\\\"WIFI\\\",\\\"platform\\\":\\\"0\\\",\\\"r\\\":\\\"\\\",\\\"stime\\\":\\\"2018-06-25 13:32:17\\\",\\\"phone\\\":\\\"18519087423\\\",\\\"p1\\\":\\\"SQHomePageViewController\\\",\\\"p2\\\":\\\"SQRecommendViewController\\\",\\\"p3\\\":\\\"\\\",\\\"android_id\\\":\\\"94e3fcc30e90edf2\\\",\\\"auid\\\":\\\"7da009b4b7878c179d65e20e4d1d589d\\\",\\\"idfa\\\":\\\"\\\",\\\"imei\\\":\\\"860797036717585\\\",\\\"imsi\\\":\\\"460000864499542\\\",\\\"install_id\\\":\\\"7da009b4b7878c179d65e20e4d1d589d_1515973088836\\\",\\\"mac\\\":\\\"\\\",\\\"wmac\\\":\\\"90:ad:f7:86:28:f0\\\",\\\"pcode\\\":\\\"\\\",\\\"uid\\\":\\\"\\\",\\\"property\\\":\\\"22C25529510391525315227D\\\",\\\"ar\\\":\\\"\\\",\\\"at\\\":\\\"291366\\\",\\\"deviceid\\\":\\\"\\\",\\\"lat\\\":\\\"38.92499077690972\\\",\\\"lg\\\":\\\"121.65503987630208\\\",\\\"os\\\":\\\"Android\\\",\\\"os_ver\\\":\\\"7.0\\\",\\\"brand\\\":\\\"HUAWEI\\\",\\\"xh\\\":\\\"HUAWEI NXT-AL10\\\",\\\"coordinate\\\":\\\"GAODE\\\",\\\"city\\\":\\\"大连市\\\",\\\"trigger_man_phone\\\":\\\"18018990297\\\",\\\"app_code\\\":\\\"1002\\\",\\\"cookie\\\":\\\"\\\",\\\"serialno\\\":\\\"CJL5T16217005509\\\"}\"," +

"\"logType\":\"log\",\"warehouseTableName\":\"user_tab\"}";

break;

default:

msg = getCurrDate() + " " + "{\"bizKey\":\"TEST\",\"sign\":\"F028492CC90210167E275977FFBE5A0C\",\"logStr\":\"test\",\"logType\":\"log\",\"warehouseTableName\":\"test\"}";

}

return msg;

}

private static String getCurrDate() {

return new SimpleDateFormat("yyyy-MM-dd.HH:mm:ss.SSS").format(new Date());

}

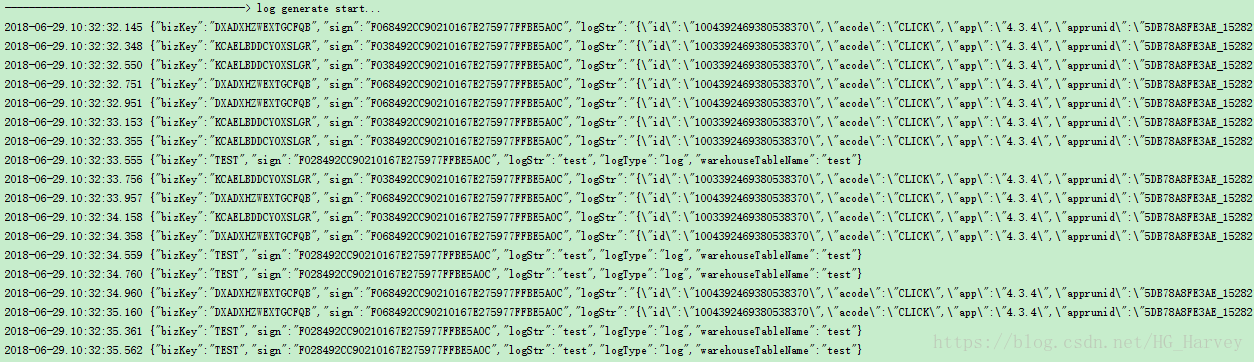

}测试运行:

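Note: I deliberately gave some of the simulated logs a bizKey of TEST. The goal is to check that the pipeline behaves as expected: records with bizKey KCAELBDDCYOXSLGR are collected and sent to the Kafka topic risk_test, records with bizKey DXADXHZWEXTGCFQB are sent to the Kafka topic user_test, and TEST records show up in neither topic.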
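The expected outcome can be reasoned through with a plain-Java model of the two agent steps that do the routing: the regex_extractor interceptor, which copies capture group 1 of its regex into the `key` header and leaves non-matching lines without that header, and the multiplexing selector, which looks that header up in its `mapping.*` entries. This is a hypothetical model, not Flume code; the class RoutingModel is invented for illustration, and it assumes that an event with no header (or an unmapped value) reaches no channel because the config sets no `selector.default`.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical plain-Java model of the agent's routing; this is not Flume code.
final class RoutingModel {
    // Same pattern as interceptors.interceptor.regex in logcollect.conf
    static final Pattern REGEX = Pattern.compile(".*(KCAELBDDCYOXSLGR|DXADXHZWEXTGCFQB).*");
    // selector.mapping.<value> = <channel>
    static final Map<String, String> CHANNEL_BY_KEY = new HashMap<>();
    // <sink>.channel and <sink>.kafka.topic, folded into channel -> topic
    static final Map<String, String> TOPIC_BY_CHANNEL = new HashMap<>();

    static {
        CHANNEL_BY_KEY.put("KCAELBDDCYOXSLGR", "risk-channel");
        CHANNEL_BY_KEY.put("DXADXHZWEXTGCFQB", "user-channel");
        TOPIC_BY_CHANNEL.put("risk-channel", "risk_test");
        TOPIC_BY_CHANNEL.put("user-channel", "user_test");
    }

    // Interceptor step: group 1 goes into header "key"; a non-matching line gets no header
    static Map<String, String> intercept(String line) {
        Matcher m = REGEX.matcher(line);
        return m.find()
                ? Collections.singletonMap("key", m.group(1))
                : Collections.<String, String>emptyMap();
    }

    // Selector + sink step: the topic, or null when the event reaches no channel
    // (assumed behaviour with no selector.default configured)
    static String topicFor(String line) {
        String key = intercept(line).get("key");
        String channel = (key == null) ? null : CHANNEL_BY_KEY.get(key);
        return (channel == null) ? null : TOPIC_BY_CHANNEL.get(channel);
    }

    public static void main(String[] args) {
        String[] samples = {
            "2018-06-28.16:46:38.374 {\"bizKey\":\"KCAELBDDCYOXSLGR\",\"logType\":\"log\"}",
            "2018-06-28.16:46:38.574 {\"bizKey\":\"DXADXHZWEXTGCFQB\",\"logType\":\"log\"}",
            "2018-06-28.16:46:38.774 {\"bizKey\":\"TEST\",\"logType\":\"log\"}"
        };
        for (String s : samples) {
            System.out.println(topicFor(s) + " <- " + s);
        }
    }
}
```

One consequence worth knowing: the regex is not tied to the bizKey field, so a line containing either key string anywhere (for example inside logStr) would also be routed, and because of the greedy leading `.*` the last occurrence in the line wins. A pattern anchored on the field, such as `"bizKey":"(KCAELBDDCYOXSLGR|DXADXHZWEXTGCFQB)"`, would be stricter.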

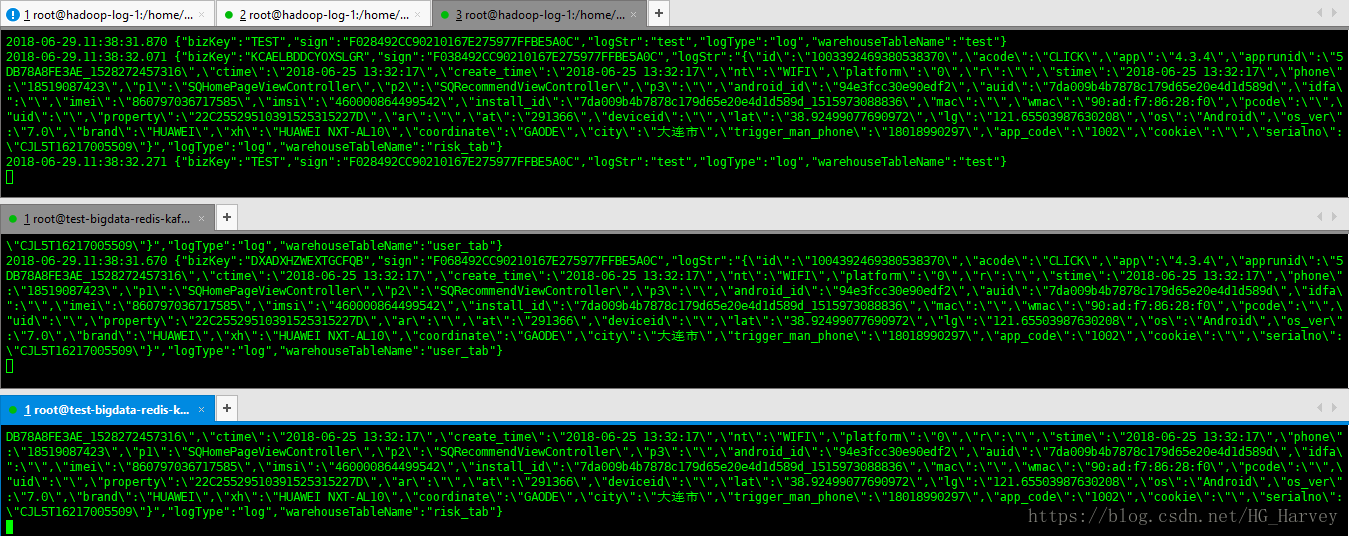

With Flume, the Kafka topics and the log generator all in place, the next step is an end-to-end integration test.

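- Start Flume

```shell
flume-ng agent \
  --conf $FLUME_HOME/conf \
  --conf-file $FLUME_HOME/conf/logcollect.conf \
  --name logcollect \
  -Dflume.root.logger=INFO,console
```

- Start the Kafka consumers (one terminal per topic)

```shell
kafka-console-consumer.sh --zookeeper <ZooKeeper addresses, comma-separated> --topic user_test
kafka-console-consumer.sh --zookeeper <ZooKeeper addresses, comma-separated> --topic risk_test
```

- Generate simulated logs

Package the code as a jar and run the following command:

```shell
java -jar log-generate.jar >> /home/bigdata/flume/data/data.log
```

- Watch the Kafka consumer consoles

Window 1: generates the logs

Window 2: Kafka topic user_test

Window 3: Kafka topic risk_test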
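To know roughly what windows 2 and 3 should show, LogGenerate's constants give a back-of-envelope estimate: one line every 200 ms is at most 5 lines per second, and since `(int) (Math.random() * 3) + 1` picks 1, 2 or 3 with roughly equal probability, each of user_test and risk_test should receive about a third of the lines (about 1.67 messages per second), while the TEST third reaches neither. The sketch below only does that arithmetic; ExpectedRates is a hypothetical name, and it ignores the time spent building and printing each line, so real rates are slightly lower and the run slightly longer than computed.

```java
// Hypothetical back-of-envelope estimate derived from LogGenerate's constants.
final class ExpectedRates {
    static final int ITERATIONS = 100_000; // loop bound in LogGenerate.main
    static final long SLEEP_MS = 200;      // Thread.sleep(200) before each line
    static final int RECORD_TYPES = 3;     // case 1, case 2 and default (TEST)

    // Upper bound: ignores the time spent building and printing each line
    static double linesPerSecond() {
        return 1000.0 / SLEEP_MS;
    }

    // Each record type, and so each of risk_test and user_test, gets about 1/3 of the lines
    static double messagesPerTopicPerSecond() {
        return linesPerSecond() / RECORD_TYPES;
    }

    // Lower bound on the generator's total run time, in hours
    static double runHours() {
        return ITERATIONS * SLEEP_MS / 3_600_000.0;
    }

    public static void main(String[] args) {
        System.out.printf("lines/s=%.2f per-topic msg/s=%.2f run=%.2f h%n",
                linesPerSecond(), messagesPerTopicPerSecond(), runHours());
    }
}
```

So a full run of the generator takes a little over 5.5 hours; stopping it early with Ctrl+C is enough to verify the routing.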