------------------------------------------------------------------------------------------------------------------------------

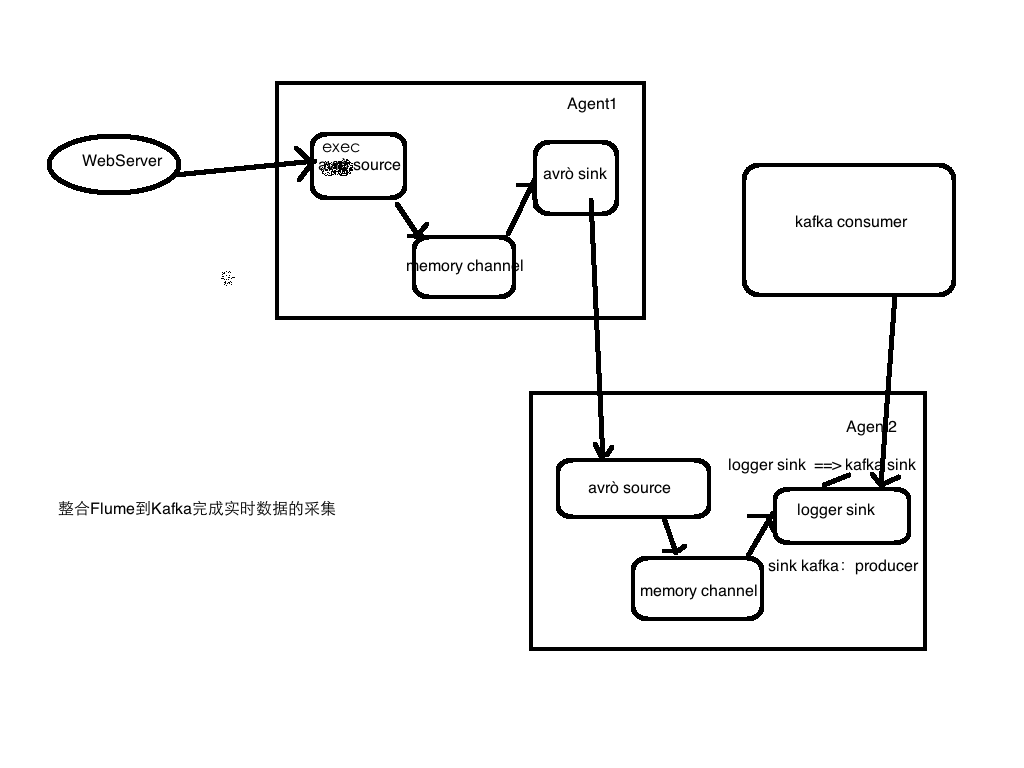

avro-memory-kafka.conf配置:

avro-memory-kafka.sources = avro-source

avro-memory-kafka.sinks = kafka-sinkavro-memory-kafka.channels = memory-channel

avro-memory-kafka.sources.avro-source.type = avro

avro-memory-kafka.sources.avro-source.bind = hadoop000

avro-memory-kafka.sources.avro-source.port = 44444

avro-memory-kafka.sinks.kafka-sink.type = org.apache.flume.sink.kafka.KafkaSink

avro-memory-kafka.sinks.kafka-sink.brokerList = hadoop000:9092

avro-memory-kafka.sinks.kafka-sink.topic = hello_topic

avro-memory-kafka.sinks.kafka-sink.batchSize = 5

avro-memory-kafka.sinks.kafka-sink.requiredAcks =1

avro-memory-kafka.channels.memory-channel.type = memory

avro-memory-kafka.sources.avro-source.channels = memory-channel

avro-memory-kafka.sinks.kafka-sink.channel = memory-channel

启动:

flume-ng agent \

--name avro-memory-kafka \

--conf $FLUME_HOME/conf \

--conf-file $FLUME_HOME/conf/avro-memory-kafka.conf \

-Dflume.root.logger=INFO,console

注意:如何通过代码想文件中追加内容呢?

echo 内容 >> 文件