References:

- Install-guide: https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide: https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

XIX. Integrating Glance with Ceph

1. Configure glance-api.conf

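# Edit glance-api.conf on every node that runs the glance-api service (three controller nodes here); controller01 is used as the example

# Only the sections relevant to the Glance/Ceph integration are listed below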

[root@controller01 ~]# vim /etc/glance/glance-api.conf

# Enable copy-on-write cloning (exposes each image's direct URL so that Cinder and Nova can clone from it)

[DEFAULT]

show_image_direct_url = True

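# Switch the default store from local file storage to Ceph RBD;

# note: rbd_store_pool and rbd_store_user must match the Ceph pool and Ceph user prepared for Glance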

[glance_store]

#stores = file,http

#default_store = file

#filesystem_store_datadir = /var/lib/glance/images/

stores = rbd

default_store = rbd

rbd_store_chunk_size = 8

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf
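Before restarting, the edited file can be sanity-checked by parsing it. The Python sketch below is a hypothetical helper, not part of Glance: it uses only the standard-library configparser on a copy of the settings above, and it also converts rbd_store_chunk_size (given in MB) into the RBD object size in bytes.

```python
import configparser

# Excerpt of /etc/glance/glance-api.conf, copied from the settings above.
GLANCE_API_CONF = """
[DEFAULT]
show_image_direct_url = True

[glance_store]
#stores = file,http
#default_store = file
stores = rbd
default_store = rbd
rbd_store_chunk_size = 8
rbd_store_pool = images
rbd_store_user = glance
rbd_store_ceph_conf = /etc/ceph/ceph.conf
"""


def check_glance_rbd(text: str) -> list[str]:
    """Return a list of problems in the RBD store settings (empty list if none)."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    problems = []
    if not cfg.getboolean("DEFAULT", "show_image_direct_url", fallback=False):
        problems.append("show_image_direct_url is not enabled (no copy-on-write clones)")
    store = cfg["glance_store"]
    stores = [s.strip() for s in store.get("stores", "").split(",")]
    if "rbd" not in stores:
        problems.append("'rbd' missing from stores")
    if store.get("default_store") != "rbd":
        problems.append("default_store is not rbd")
    for key in ("rbd_store_pool", "rbd_store_user", "rbd_store_ceph_conf"):
        if not store.get(key):
            problems.append(f"{key} is not set")
    return problems


def chunk_size_bytes(text: str) -> int:
    """rbd_store_chunk_size is expressed in MB; convert it to bytes."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    return cfg.getint("glance_store", "rbd_store_chunk_size") * 1024 * 1024


if __name__ == "__main__":
    print(check_glance_rbd(GLANCE_API_CONF))  # []
    print(chunk_size_bytes(GLANCE_API_CONF))  # 8388608
```

On a real node the same functions can be fed the contents of /etc/glance/glance-api.conf instead of the embedded excerpt.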

# After changing the configuration file, restart the services

[root@controller01 ~]# systemctl restart openstack-glance-api.service

[root@controller01 ~]# systemctl restart openstack-glance-registry.service

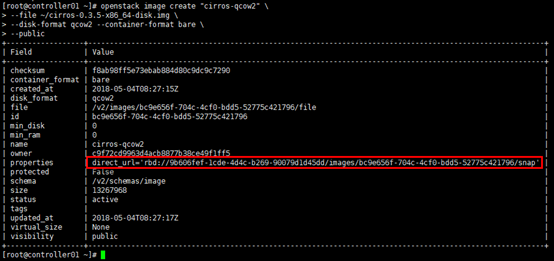

2. Upload an image

# After upload, the image is stored by default in the images pool of the Ceph cluster (identified by the cluster ID)

[root@controller01 ~]# openstack image create "cirros-qcow2" \
--file ~/cirros-0.3.5-x86_64-disk.img \
--disk-format qcow2 --container-format bare \
--public

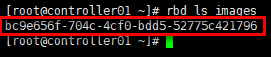

# Verify

[root@controller01 ~]# rbd ls images

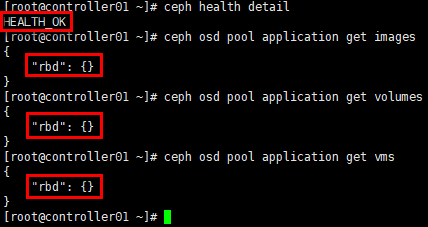
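Because show_image_direct_url = True, Glance exposes each image's RBD location as its direct_url. In the Glance RBD store that location has the form rbd://<fsid>/<pool>/<image-id>/<snapshot>, where the snapshot (named snap) is the protected snapshot that clones are made from. The Python sketch below splits such a URL into its parts; the class and function names are illustrative, and the fsid and image ID in the example are made-up placeholders.

```python
from typing import NamedTuple
from urllib.parse import unquote


class RbdLocation(NamedTuple):
    fsid: str      # Ceph cluster ID (the "(ID)" mentioned above)
    pool: str      # e.g. "images"
    image: str     # RBD image name; Glance uses the image UUID
    snapshot: str  # protected snapshot used as the clone source


def parse_rbd_url(url: str) -> RbdLocation:
    """Split an rbd://fsid/pool/image/snapshot URL into its components."""
    prefix = "rbd://"
    if not url.startswith(prefix):
        raise ValueError(f"not an rbd URL: {url!r}")
    parts = [unquote(p) for p in url[len(prefix):].split("/")]
    if len(parts) != 4 or not all(parts):
        raise ValueError(f"expected rbd://fsid/pool/image/snapshot, got {url!r}")
    return RbdLocation(*parts)


if __name__ == "__main__":
    # Placeholder values, not taken from a real cluster.
    loc = parse_rbd_url(
        "rbd://00000000-1111-2222-3333-444444444444/images/"
        "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee/snap"
    )
    print(loc.pool, loc.snapshot)  # images snap
```

The image component should equal the name that "rbd ls images" prints for the uploaded image.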

3. Set the pool application type

# Once the images pool is in use, the Ceph cluster status changes to HEALTH_WARN

[root@controller01 ~]# ceph -s

# "ceph health detail" reports the cause and how to fix it;

# the warning is that no application is enabled on the pool; it can be set to 'cephfs', 'rbd', 'rgw', etc.

[root@controller01 ~]# ceph health detail

# Fix the volumes and vms pools at the same time

[root@controller01 ~]# ceph osd pool application enable images rbd

[root@controller01 ~]# ceph osd pool application enable volumes rbd

[root@controller01 ~]# ceph osd pool application enable vms rbd

# Check

[root@controller01 ~]# ceph health detail

[root@controller01 ~]# ceph osd pool application get images

[root@controller01 ~]# ceph osd pool application get volumes

[root@controller01 ~]# ceph osd pool application get vms
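"ceph osd pool application get <pool>" prints a JSON object that maps each enabled application to its metadata, e.g. {"rbd": {}} once the enable commands above have run. The Python sketch below is a hypothetical helper, not a Ceph tool: it assumes that output has been captured for each pool and reports the pools on which rbd is still not enabled.

```python
import json


def pools_missing_app(outputs: dict[str, str], app: str = "rbd") -> list[str]:
    """Given {pool_name: JSON text from 'ceph osd pool application get <pool>'},
    return the pools on which `app` is not enabled, sorted by name."""
    missing = []
    for pool, raw in outputs.items():
        enabled = json.loads(raw) if raw.strip() else {}
        if app not in enabled:
            missing.append(pool)
    return sorted(missing)


if __name__ == "__main__":
    # Illustrative outputs: images and volumes already enabled, vms not yet.
    sample = {
        "images": '{"rbd": {}}',
        "volumes": '{"rbd": {}}',
        "vms": "{}",
    }
    print(pools_missing_app(sample))  # ['vms']
```

An empty result corresponds to the application-not-enabled warning having cleared for these pools.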

XX. Integrating Cinder with Ceph

1. Configure cinder.conf

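# Cinder uses a pluggable driver architecture and can use several storage backends at the same time;

# setting the Ceph RBD driver in cinder.conf on the nodes running cinder-volume is all that is needed;

# there are three compute (storage) nodes here; compute01 is used as the example;

# only the sections relevant to the Cinder/Ceph integration are listed below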

[root@compute01 ~]# vim /etc/cinder/cinder.conf

# Use Ceph as the storage backend

[DEFAULT]

enabled_backends = ceph

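# Add a new [ceph] section;

# note: the section name must match enabled_backends, and rbd_pool, rbd_user and rbd_secret_uuid must match the Ceph pool, the Ceph user and the libvirt secret UUID used on the compute nodes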

[ceph]

# Ceph RBD driver

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

# Note: when multiple backends are configured, "glance_api_version" must be set in the [DEFAULT] section instead

glance_api_version = 2

rbd_user = cinder

rbd_secret_uuid = 10744136-583f-4a9c-ae30-9bfb3515526b

volume_backend_name = ceph
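The [ceph] values have to line up with several other places: the backend name in enabled_backends, the Ceph pool and user, the libvirt secret UUID, and the volume type's extra spec created later. The Python sketch below is a hypothetical pre-restart check (not part of Cinder) that parses a copy of the excerpt above with configparser and validates each enabled RBD backend.

```python
import configparser
import uuid

# Excerpt of /etc/cinder/cinder.conf from the section above.
CINDER_CONF = """
[DEFAULT]
enabled_backends = ceph

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
rbd_pool = volumes
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = -1
glance_api_version = 2
rbd_user = cinder
rbd_secret_uuid = 10744136-583f-4a9c-ae30-9bfb3515526b
volume_backend_name = ceph
"""

RBD_DRIVER = "cinder.volume.drivers.rbd.RBDDriver"


def check_cinder_rbd(text: str) -> list[str]:
    """Return problems found in the backends listed in enabled_backends."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    problems = []
    raw = cfg.get("DEFAULT", "enabled_backends", fallback="")
    backends = [b.strip() for b in raw.split(",") if b.strip()]
    if not backends:
        problems.append("enabled_backends is empty")
    for name in backends:
        if not cfg.has_section(name):
            problems.append(f"no [{name}] section for enabled backend")
            continue
        sec = cfg[name]
        if sec.get("volume_driver") != RBD_DRIVER:
            problems.append(f"[{name}] volume_driver is not the RBD driver")
        for key in ("rbd_pool", "rbd_user", "volume_backend_name"):
            if not sec.get(key):
                problems.append(f"[{name}] {key} is not set")
        try:
            # The libvirt secret is referenced by UUID, so it must parse as one.
            uuid.UUID(sec.get("rbd_secret_uuid", ""))
        except ValueError:
            problems.append(f"[{name}] rbd_secret_uuid is not a valid UUID")
    return problems


if __name__ == "__main__":
    print(check_cinder_rbd(CINDER_CONF))  # []
```

Listing a backend in enabled_backends without a matching section, for example, is reported before cinder-volume is restarted.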

# After changing the configuration file, restart the cinder-volume service on the storage node

[root@compute01 ~]# systemctl restart openstack-cinder-volume.service

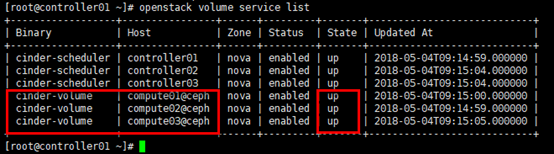

2. Verify

# Check the Cinder service status; after the Ceph integration, cinder-volume should be in state "up";

# alternatively: cinder service-list

[root@controller01 ~]# openstack volume service list
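For scripting the same check, "openstack volume service list -f json" returns one JSON object per service. The Python sketch below assumes two things worth verifying against your own output: that the JSON keys match the table column headers (Binary, Host, State, ...), and that an RBD backend is listed with a host of the form <node>@ceph, i.e. the backend section name. The function name is hypothetical.

```python
import json


def down_rbd_volume_services(raw: str, backend: str = "ceph") -> list[str]:
    """Return hosts of cinder-volume services for `backend` whose State is not 'up'."""
    services = json.loads(raw)
    return [
        svc["Host"]
        for svc in services
        if svc.get("Binary") == "cinder-volume"
        and svc.get("Host", "").endswith("@" + backend)
        and svc.get("State") != "up"
    ]


if __name__ == "__main__":
    # Illustrative output for a cluster where compute02's backend is down.
    sample = json.dumps([
        {"Binary": "cinder-scheduler", "Host": "controller01", "State": "up"},
        {"Binary": "cinder-volume", "Host": "compute01@ceph", "State": "up"},
        {"Binary": "cinder-volume", "Host": "compute02@ceph", "State": "down"},
    ])
    print(down_rbd_volume_services(sample))  # ['compute02@ceph']
```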

3. Create a volume

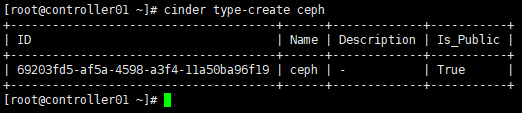

1) Create a volume type

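# On a controller node, create a volume type for Cinder's Ceph backend; when multiple storage backends are configured, types are used to tell them apart;

# list the types with "cinder type-list"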

[root@controller01 ~]# cinder type-create ceph

# Set an extra spec on the ceph type: key "volume_backend_name", value "ceph"

[root@controller01 ~]# cinder type-key ceph set volume_backend_name=ceph

[root@controller01 ~]# cinder extra-specs-list

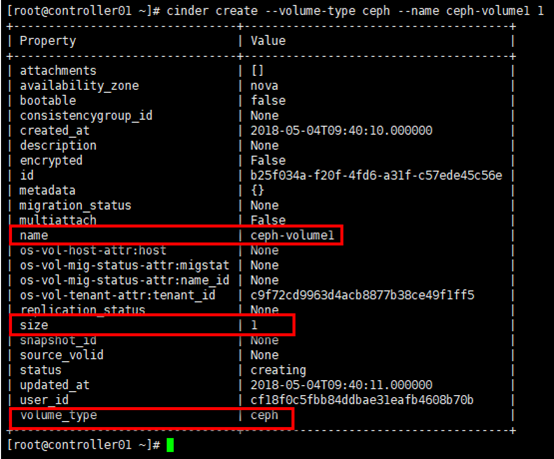
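The volume type works because the scheduler only places a volume on a backend whose reported volume_backend_name equals the type's extra spec. The Python sketch below is a deliberately simplified model of that matching using plain equality; Cinder's actual CapabilitiesFilter also understands operator-prefixed values and scoped keys. The backend dictionary, including the lvm entry, is hypothetical.

```python
def matching_backends(extra_specs: dict[str, str],
                      backends: dict[str, dict[str, str]]) -> list[str]:
    """Return backend hosts whose capabilities satisfy every extra spec.

    Simplified model: each spec must equal the backend's reported capability.
    """
    return sorted(
        host
        for host, caps in backends.items()
        if all(caps.get(key) == value for key, value in extra_specs.items())
    )


if __name__ == "__main__":
    ceph_type = {"volume_backend_name": "ceph"}  # from 'cinder type-key ceph set ...'
    backends = {
        "compute01@ceph": {"volume_backend_name": "ceph"},
        "compute02@ceph": {"volume_backend_name": "ceph"},
        "compute01@lvm": {"volume_backend_name": "lvm"},  # hypothetical second backend
    }
    print(matching_backends(ceph_type, backends))  # ['compute01@ceph', 'compute02@ceph']
```

A type with no extra specs matches every backend, which is why the key is needed once more than one backend exists.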

2) Create a volume

# Create the volume;

# the trailing "1" is the size, 1 GB

[root@controller01 ~]# cinder create --volume-type ceph --name ceph-volume1 1

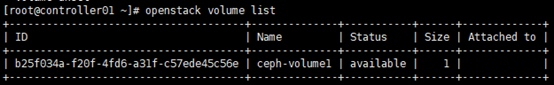

# Check the new volume;

# alternatively: cinder list

[root@controller01 ~]# openstack volume list

# Check the volumes pool in the Ceph cluster

[root@controller01 ~]# rbd ls volumes
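The Cinder RBD driver stores each volume as an RBD image named volume-<volume ID> (Cinder's default volume_name_template is volume-%s), so every ID from "openstack volume list" should reappear in "rbd ls volumes". The Python sketch below cross-checks the two listings and, from rbd_store_chunk_size = 4 (MB), computes the maximum number of RADOS objects a fully written volume occupies; RBD is thin-provisioned, so a fresh volume uses fewer. Function names and IDs are illustrative.

```python
import math


def missing_rbd_images(volume_ids: list[str], rbd_images: list[str],
                       template: str = "volume-%s") -> list[str]:
    """Return Cinder volume IDs that have no matching RBD image in the pool listing."""
    present = set(rbd_images)
    return [vid for vid in volume_ids if template % vid not in present]


def max_rados_objects(size_gb: int, chunk_size_mb: int = 4) -> int:
    """Upper bound on RADOS objects for a fully written volume of size_gb GB."""
    return math.ceil(size_gb * 1024 / chunk_size_mb)


if __name__ == "__main__":
    ids = ["aaaaaaaa-0000-0000-0000-000000000001"]  # placeholder volume ID
    images = ["volume-aaaaaaaa-0000-0000-0000-000000000001"]
    print(missing_rbd_images(ids, images))  # []
    print(max_rados_objects(1))  # 256
```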