References:

- Install Guide: https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide: https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

III. MariaDB Cluster

1. Install MariaDB

# Install MariaDB on all controller nodes; controller01 is shown as the example

[root@controller01 ~]# yum install mariadb mariadb-server python2-PyMySQL -y

# Install the Galera-related packages, which are used to build the multi-master architecture

[root@controller01 ~]# yum install mariadb-server-galera mariadb-galera-common galera xinetd rsync -y

2. Initialize MariaDB

# Set the database root password on all controller nodes; controller01 is shown as the example

# The initial root password is empty

[root@controller01 ~]# systemctl restart mariadb.service

[root@controller01 ~]# mysql_secure_installation

Enter current password for root (enter for none):

Set root password? [Y/n] y

New password:

Re-enter new password:

Remove anonymous users? [Y/n] y

Disallow root login remotely? [Y/n] n

Remove test database and access to it? [Y/n] y

Reload privilege tables now? [Y/n] y

3. Edit the MariaDB configuration

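# On all controller nodes, add a new configuration file openstack.cnf under /etc/my.cnf.d/; it mainly sets the cluster synchronization parameters. controller01 is shown as the example; adjust node-specific parameters such as the IP address and hostname for each node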

[root@controller01 my.cnf.d]# cat /etc/my.cnf.d/openstack.cnf

[mysqld]

binlog_format = ROW

bind-address = 172.30.200.31

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

[galera]

bind-address = 172.30.200.31

wsrep_provider = /usr/lib64/galera/libgalera_smm.so

wsrep_cluster_address ="gcomm://controller01,controller02,controller03"

wsrep_cluster_name = openstack-cluster-01

wsrep_node_name = controller01

wsrep_node_address = 172.30.200.31

wsrep_on=ON

wsrep_slave_threads=4

wsrep_sst_method=rsync

default_storage_engine=InnoDB

[embedded]

[mariadb]

[mariadb-10.1]
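Only `bind-address`, `wsrep_node_name` and `wsrep_node_address` differ between nodes, so the file can be generated per node instead of edited by hand. The sketch below is a hypothetical helper (`gen_openstack_cnf` is not part of MariaDB or Galera) that reproduces the settings above and takes the node name and IP address as arguments. The IP addresses of controller02/03 are not given in this article and must come from your own environment.

```shell
# Hypothetical helper: print openstack.cnf for one node.
# Arguments: $1 = node name (e.g. controller01), $2 = node IP address.
# Cluster-wide values (cluster address, cluster name, provider) are identical on all nodes.
gen_openstack_cnf() {
  local node_name="$1" node_ip="$2"
  cat <<EOF
[mysqld]
binlog_format = ROW
bind-address = ${node_ip}
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8

[galera]
bind-address = ${node_ip}
wsrep_provider = /usr/lib64/galera/libgalera_smm.so
wsrep_cluster_address = "gcomm://controller01,controller02,controller03"
wsrep_cluster_name = openstack-cluster-01
wsrep_node_name = ${node_name}
wsrep_node_address = ${node_ip}
wsrep_on = ON
wsrep_slave_threads = 4
wsrep_sst_method = rsync
default_storage_engine = InnoDB

[embedded]

[mariadb]

[mariadb-10.1]
EOF
}
```

Usage on controller01, with the IP address from the example above: `gen_openstack_cnf controller01 172.30.200.31 > /etc/my.cnf.d/openstack.cnf`.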

4. Build the MariaDB cluster

# Stop the mariadb service on all controller nodes; controller01 is shown as the example

[root@controller01 ~]# systemctl stop mariadb.service

# On one controller node of your choice (controller01 here), start mariadb as follows to bootstrap a new cluster

[root@controller01 ~]# /usr/libexec/mysqld --wsrep-new-cluster --user=root &

# Join the other controller nodes to the MariaDB cluster; controller02 is shown as the example

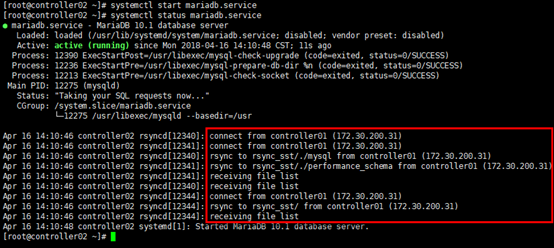

# Once started, controller02 joins the cluster and syncs data from controller01; you can follow the MariaDB log /var/log/mariadb/mariadb.log at the same time

[root@controller02 ~]# systemctl start mariadb.service

[root@controller02 ~]# systemctl status mariadb.service

# Restart mariadb on controller01

# Before starting it, delete the data on controller01

[root@controller01 ~]# pkill -9 mysql

[root@controller01 ~]# rm -rf /var/lib/mysql/*

# Mind the file ownership when mariadb is started as a systemd unit: the PID file must belong to mysql

[root@controller01 ~]# chown mysql:mysql /var/run/mariadb/mariadb.pid

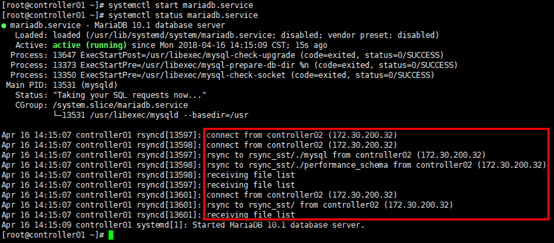

# After starting, check the service status; controller01 now syncs data from controller02

[root@controller01 ~]# systemctl start mariadb.service

[root@controller01 ~]# systemctl status mariadb.service

# Check the cluster status

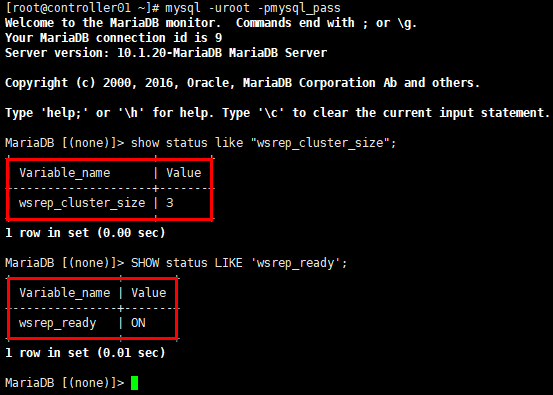

[root@controller01 ~]# mysql -uroot -pmysql_pass

MariaDB [(none)]> show status like "wsrep_cluster_size";

MariaDB [(none)]> SHOW status LIKE 'wsrep_ready';
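Instead of reading the two status values by eye, both can be checked in one step. A minimal sketch, assuming a three-node cluster and the root password used above; `wsrep_healthy` is a hypothetical helper that reads the tab-separated rows printed by `mysql -N` (skip column names) in batch mode.

```shell
# Hypothetical helper: read "name<TAB>value" rows from stdin, as printed by
#   mysql -N -e "SHOW STATUS LIKE 'wsrep_%'"
# Succeeds only if wsrep_cluster_size equals $1 and wsrep_ready is ON.
wsrep_healthy() {
  local expected_size="$1"
  awk -F'\t' -v want="$expected_size" '
    $1 == "wsrep_cluster_size" { size = $2 }
    $1 == "wsrep_ready"        { ready = $2 }
    END { exit !(size == want && ready == "ON") }
  '
}

# Usage on any controller node:
# mysql -uroot -pmysql_pass -N -e "SHOW STATUS LIKE 'wsrep_%'" | wsrep_healthy 3 \
#   && echo "cluster OK" || echo "cluster NOT OK"
```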

5. Set up the clustercheck health check

Prepare the script

# Download the clustercheck script

[root@controller01 ~]# wget https://raw.githubusercontent.com/olafz/percona-clustercheck/master/clustercheck

# Make it executable and copy it to /usr/bin

[root@controller01 ~]# chmod +x clustercheck

[root@controller01 ~]# cp ~/clustercheck /usr/bin/

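Create the health-check user

# On any controller node, create the clustercheckuser account and grant it privileges

# The account and password must match those in the script; the script defaults are used here, otherwise the clustercheck script has to be edited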

[root@controller01 ~]# mysql -uroot -pmysql_pass

MariaDB [(none)]> GRANT PROCESS ON *.* TO 'clustercheckuser'@'localhost' IDENTIFIED BY 'clustercheckpassword!';

MariaDB [(none)]> FLUSH PRIVILEGES;

Health-check configuration file

# On all controller nodes, add the health-check service configuration /etc/xinetd.d/mysqlchk; controller01 is shown as the example

[root@controller01 ~]# touch /etc/xinetd.d/mysqlchk

[root@controller01 ~]# vim /etc/xinetd.d/mysqlchk

# default: on

# description: mysqlchk

service mysqlchk

{

port = 9200

disable = no

socket_type = stream

protocol = tcp

wait = no

user = root

group = root

groups = yes

server = /usr/bin/clustercheck

type = UNLISTED

per_source = UNLIMITED

log_on_success =

log_on_failure = HOST

flags = REUSE

}

Start the health-check service

# Edit /etc/services to reassign TCP port 9200 to mysqlchk; controller01 is shown as the example

[root@controller01 ~]# vim /etc/services

#wap-wsp 9200/tcp # WAP connectionless session service

mysqlchk 9200/tcp # mysqlchk
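Editing /etc/services by hand is easy to get wrong when it is repeated on three nodes. The following is a hypothetical, idempotent sketch of the same change (the name `register_mysqlchk` is made up for illustration, and GNU sed is assumed for `-i` and `-E`): it comments out whichever entry currently owns 9200/tcp and appends the mysqlchk line only once.

```shell
# Hypothetical helper: reassign 9200/tcp to mysqlchk in a services file.
# Safe to run repeatedly; defaults to /etc/services.
register_mysqlchk() {
  local services_file="${1:-/etc/services}"
  # Comment out any uncommented, non-mysqlchk entry for 9200/tcp (wap-wsp by default);
  # the 9200/udp entry is left untouched
  sed -i -E '/^mysqlchk[[:space:]]/!s|^([^#][^[:space:]]*[[:space:]]+9200/tcp.*)$|#\1|' "$services_file"
  # Append the mysqlchk entry only if it is not already present
  grep -qE '^mysqlchk[[:space:]]+9200/tcp' "$services_file" ||
    echo 'mysqlchk        9200/tcp                # mysqlchk' >> "$services_file"
}

# Usage: register_mysqlchk
```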

# Start the xinetd service; controller01 is shown as the example

[root@controller01 ~]# systemctl daemon-reload

[root@controller01 ~]# systemctl enable xinetd

[root@controller01 ~]# systemctl start xinetd

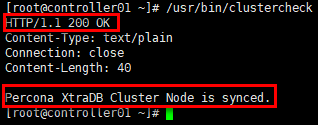

Test the health-check script

# Verify on all controller nodes; controller01 is shown as the example

[root@controller01 ~]# /usr/bin/clustercheck
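Besides running the script locally, the xinetd service can be probed over the network on port 9200, the way a load-balancer health check would use it. The percona clustercheck script answers HTTP 200 when the node is synced and 503 otherwise. A sketch assuming curl is installed and port 9200 is reachable from where it runs; both function names are hypothetical.

```shell
# Hypothetical classifier: map the HTTP status returned by clustercheck to a state
clustercheck_state() {
  case "$1" in
    200) echo "synced" ;;
    503) echo "not synced" ;;
    *)   echo "unreachable (HTTP $1)" ;;
  esac
}

# Probe port 9200 on each node given as an argument.
# curl prints 000 as the status code when it cannot connect.
check_all_nodes() {
  local node code
  for node in "$@"; do
    code=$(curl -s -o /dev/null -m 3 -w '%{http_code}' "http://${node}:9200/" || true)
    echo "${node}: $(clustercheck_state "$code")"
  done
}

# Usage: check_all_nodes controller01 controller02 controller03
```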

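IV. RabbitMQ Cluster

With the official OpenStack installation method, and without upgrading Erlang, the installed RabbitMQ is not the latest version.

To deploy a cluster with the latest RabbitMQ, see: http://www.cnblogs.com/netonline/p/7678321.html

1. Install RabbitMQ

# On all controller nodes, use the Aliyun EPEL mirror; controller01 is shown as the example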

[root@controller01 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@controller01 ~]# yum install erlang rabbitmq-server -y

# Enable start at boot

[root@controller01 ~]# systemctl enable rabbitmq-server.service

2. Build the RabbitMQ cluster

# On one controller node of your choice (controller01 here), start the rabbitmq service first

[root@controller01 ~]# systemctl start rabbitmq-server.service

[root@controller01 ~]# rabbitmqctl cluster_status

# Distribute .erlang.cookie to controller02/03

[root@controller01 ~]# scp /var/lib/rabbitmq/.erlang.cookie root@controller02:/var/lib/rabbitmq/

[root@controller01 ~]# scp /var/lib/rabbitmq/.erlang.cookie root@controller03:/var/lib/rabbitmq/

# Change the owner/group of .erlang.cookie on controller02/03; controller02 is shown as the example

[root@controller02 ~]# chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie

# Check the permissions of .erlang.cookie on all controller nodes; the default is already 400, so usually no change is needed

[root@controller02 ~]# ll /var/lib/rabbitmq/.erlang.cookie
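All nodes must share the same cookie, otherwise they cannot join the cluster. A sketch for checking this from controller01, assuming passwordless root scp to the other nodes; `cookies_match` and `cookie_mode_ok` are hypothetical helpers, and `stat -c` assumes GNU coreutils.

```shell
# Hypothetical helper: succeed only if all given files have identical content
cookies_match() {
  [ "$#" -ge 1 ] || return 1
  local first sum f
  first=$(sha256sum < "$1" | cut -d' ' -f1)
  for f in "$@"; do
    sum=$(sha256sum < "$f" | cut -d' ' -f1)
    [ "$sum" = "$first" ] || return 1
  done
}

# Hypothetical helper: succeed only if the file mode is 400
cookie_mode_ok() {
  [ "$(stat -c '%a' "$1")" = "400" ]
}

# Usage on controller01:
# tmp=$(mktemp -d)
# for n in controller02 controller03; do scp root@${n}:/var/lib/rabbitmq/.erlang.cookie "$tmp/$n"; done
# cookies_match /var/lib/rabbitmq/.erlang.cookie "$tmp"/* && echo "cookies identical"
# cookie_mode_ok /var/lib/rabbitmq/.erlang.cookie && echo "mode 400"
```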

# Start the rabbitmq service on controller02/03

[root@controller02 ~]# systemctl start rabbitmq-server

[root@controller03 ~]# systemctl start rabbitmq-server

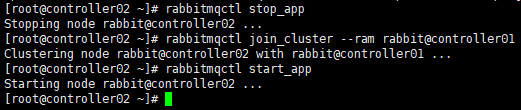

# Build the cluster; controller02/03 join as RAM nodes

[root@controller02 ~]# rabbitmqctl stop_app

[root@controller02 ~]# rabbitmqctl join_cluster --ram rabbit@controller01

[root@controller02 ~]# rabbitmqctl start_app

[root@controller03 ~]# rabbitmqctl stop_app

[root@controller03 ~]# rabbitmqctl join_cluster --ram rabbit@controller01

[root@controller03 ~]# rabbitmqctl start_app
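The per-node steps above can also be driven from controller01 in a single loop. A sketch assuming passwordless root ssh from controller01 to the other nodes; `join_ram_nodes` and the `RUN` variable are hypothetical, and `RUN=echo` only prints the commands instead of executing them.

```shell
# Hypothetical helper: join each node ($2...) to the seed node ($1) as a RAM node.
# RUN defaults to ssh; set RUN=echo for a dry run.
join_ram_nodes() {
  local seed="$1"; shift
  local node
  for node in "$@"; do
    ${RUN:-ssh} "root@${node}" \
      "rabbitmqctl stop_app && rabbitmqctl join_cluster --ram rabbit@${seed} && rabbitmqctl start_app" || return 1
  done
}

# Dry run:   RUN=echo join_ram_nodes controller01 controller02 controller03
# Real run:  join_ram_nodes controller01 controller02 controller03
```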

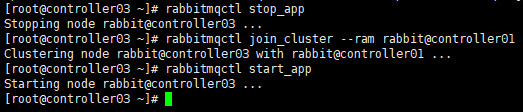

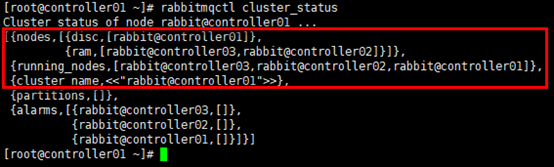

# Verify the cluster status from any node

[root@controller01 ~]# rabbitmqctl cluster_status

3. RabbitMQ account

# On any node, create an account and set its password; controller01 is shown as the example

[root@controller01 ~]# rabbitmqctl add_user openstack rabbitmq_pass

# Set the new account's user tag (role)

[root@controller01 ~]# rabbitmqctl set_user_tags openstack administrator

# Set the new account's permissions

[root@controller01 ~]# rabbitmqctl set_permissions -p "/" openstack ".*" ".*" ".*"

# List the accounts

[root@controller01 ~]# rabbitmqctl list_users
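The three account commands can be wrapped so that they stop at the first failure. A minimal sketch; `create_openstack_user` and the `RABBITMQCTL` override are hypothetical, and setting `RABBITMQCTL=echo` previews the commands without running them.

```shell
# Hypothetical helper: create a user, tag it administrator, grant full rights on vhost "/".
# RABBITMQCTL defaults to rabbitmqctl; override it (e.g. with echo) for a dry run.
create_openstack_user() {
  local user="$1" pass="$2" ctl="${RABBITMQCTL:-rabbitmqctl}"
  "$ctl" add_user "$user" "$pass" &&
    "$ctl" set_user_tags "$user" administrator &&
    "$ctl" set_permissions -p "/" "$user" ".*" ".*" ".*"
}

# Usage: create_openstack_user openstack rabbitmq_pass
```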

4. Mirrored-queue HA

# Set a policy that mirrors all queues for high availability

[root@controller01 ~]# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

# Check the mirrored-queue policy

[root@controller01 ~]# rabbitmqctl list_policies

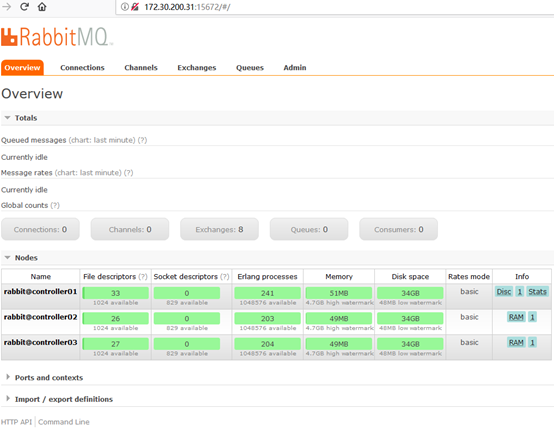

5. Enable the web management plugin

# Enable the web management plugin on all controller nodes; controller01 is shown as the example

[root@controller01 ~]# rabbitmq-plugins enable rabbitmq_management

Open the management UI on any node, e.g. http://172.30.200.31:15672

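V. Memcached Cluster

Memcached is stateless: each controller node runs its own independent instance, and the OpenStack service modules are simply configured to use the memcached services on all controller nodes.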
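To illustrate "use the memcached services on all controller nodes": OpenStack services usually take a comma-separated host:port list, for example the `memcached_servers` option in the `[keystone_authtoken]` section. A hypothetical helper that builds that list, assuming the default port 11211:

```shell
# Hypothetical helper: join hostnames into a host:port,host:port,... list.
# MEMCACHED_PORT defaults to 11211.
memcached_server_list() {
  local port="${MEMCACHED_PORT:-11211}" out="" host
  for host in "$@"; do
    out="${out:+${out},}${host}:${port}"
  done
  printf '%s\n' "$out"
}

# memcached_server_list controller01 controller02 controller03
# -> controller01:11211,controller02:11211,controller03:11211
```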

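This uses the official OpenStack installation method; to deploy the latest memcached, see: http://www.cnblogs.com/netonline/p/7805900.html

The following configuration uses controller01 as the example.

1. Install memcached

# Install memcached on all controller nodes

[root@controller01 ~]# yum install memcached python-memcached -y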

2. Configure memcached

# On every node running memcached, set the address the service listens on

[root@controller01 ~]# sed -i 's|127.0.0.1,::1|0.0.0.0|g' /etc/sysconfig/memcached
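On CentOS 7 the packaged /etc/sysconfig/memcached typically contains `OPTIONS="-l 127.0.0.1,::1"`, which the sed above turns into `OPTIONS="-l 0.0.0.0"`. To preview the substitution without modifying the file, the same expression can be run without `-i`; `preview_memcached_listen` is a hypothetical name.

```shell
# Hypothetical helper: print the file as it would look after the listen-address change,
# without editing it in place. Defaults to /etc/sysconfig/memcached.
preview_memcached_listen() {
  sed 's|127.0.0.1,::1|0.0.0.0|g' "${1:-/etc/sysconfig/memcached}"
}

# Usage: preview_memcached_listen | grep '^OPTIONS'
# After restarting memcached, "ss -tln | grep 11211" should show it listening on 0.0.0.0
```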

3. Enable and start at boot

[root@controller01 ~]# systemctl enable memcached.service

[root@controller01 ~]# systemctl start memcached.service

[root@controller01 ~]# systemctl status memcached.service