Project requirements

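Write a custom input format that reads celebrity Weibo data, sorts it, and writes the rankings by follower count, following count, and post count to separate output files.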

Dataset

Below is a sample of the data; click this link to download the full dataset.

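Data format: star, star's Weibo screen name, followers, following, posts. The program splits each line on tabs, so the five fields are expected to be tab-separated.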

```text
黄晓明 黄晓明 22616497 506 2011
张靓颖 张靓颖 27878708 238 3846
羅志祥 羅志祥 30763518 277 3843
劉嘉玲 劉嘉玲 12631697 350 2057
李娜 李娜 23309493 81 631
成龙 成龙 22485765 5 758
...
```
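To make the record layout concrete, here is a minimal plain-Java sketch (no Hadoop dependency) of parsing one line, mirroring the checks the record reader performs: exactly five tab-separated fields, with integer counts in columns 2 to 4. `StarRecord` and its `parse()` method are hypothetical names used only for illustration.

```java
// Hypothetical holder for one parsed line of the dataset (illustration only).
final class StarRecord {
    final String name;      // column 0: star
    final String weiboName; // column 1: Weibo screen name
    final int followers;    // column 2: follower count
    final int friends;      // column 3: following count
    final int statuses;     // column 4: post count

    StarRecord(String name, String weiboName, int followers, int friends, int statuses) {
        this.name = name;
        this.weiboName = weiboName;
        this.followers = followers;
        this.friends = friends;
        this.statuses = statuses;
    }

    // Parses one tab-separated line; throws IllegalArgumentException on malformed input.
    static StarRecord parse(String line) {
        String[] pieces = line.split("\t");
        if (pieces.length != 5) {
            throw new IllegalArgumentException(
                "Expected 5 tab-separated fields but got " + pieces.length + ": " + line);
        }
        try {
            return new StarRecord(pieces[0], pieces[1],
                Integer.parseInt(pieces[2].trim()),
                Integer.parseInt(pieces[3].trim()),
                Integer.parseInt(pieces[4].trim()));
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("Non-integer count in line: " + line, e);
        }
    }
}
```

For example, parsing `"Chenglong\tChenglong\t22485765\t5\t758"` yields followers = 22485765, friends = 5 and statuses = 758, while a space-separated line fails the five-field check.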

Approach

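A custom InputFormat reads the celebrity Weibo data. The mapper emits each star's follower, friend (following) and statuses counts under three separate keys; the reducer sorts each group by count with getSortedHashtableByValue and then uses MultipleOutputs to write each category to its own file.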
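The data flow can be simulated in memory to check the logic before running it on a cluster. The sketch below is an illustration only, not Hadoop code: `WeiboFlowSketch`, `mapAndShuffle()` and `reduce()` are hypothetical names, and each input record is assumed to be already parsed into `{name, followers, friends, statuses}`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// In-memory simulation of the job (no Hadoop involved):
//   map:     one record -> three (category, "name\tcount") pairs
//   shuffle: group the values by category
//   reduce:  sort one group by count, descending, and keep the top n
final class WeiboFlowSketch {

    static Map<String, List<String>> mapAndShuffle(List<String[]> records) {
        Map<String, List<String>> groups = new LinkedHashMap<>();
        for (String[] r : records) { // r = {name, followers, friends, statuses}
            emit(groups, "follower", r[0] + "\t" + r[1]);
            emit(groups, "friend", r[0] + "\t" + r[2]);
            emit(groups, "statuses", r[0] + "\t" + r[3]);
        }
        return groups;
    }

    private static void emit(Map<String, List<String>> groups, String key, String value) {
        groups.computeIfAbsent(key, k -> new ArrayList<>()).add(value);
    }

    static List<String> reduce(List<String> values, int n) {
        Map<String, Integer> counts = new HashMap<>();
        for (String v : values) {
            String[] parts = v.split("\t");
            counts.put(parts[0], Integer.parseInt(parts[1]));
        }
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(counts.entrySet());
        entries.sort(Map.Entry.<String, Integer>comparingByValue().reversed());
        List<String> out = new ArrayList<>();
        for (int i = 0; i < n && i < entries.size(); i++) {
            out.add(entries.get(i).getKey() + "\t" + entries.get(i).getValue());
        }
        return out;
    }
}
```

The three category keys play the role of the reducer's input keys: every star lands in all three groups, and each group is sorted independently.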

Program

WeiBo.java

```java
package com.hadoop.WeiboCount;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

// Value type holding one star's three counts.
// The file must be named WeiBo.java to match the public class name.
public class WeiBo implements WritableComparable<WeiBo> {

    // Plain Java ints for the member variables friends, followers, statuses
    private int friends;   // following count
    private int followers; // follower count
    private int statuses;  // post count

    public WeiBo() {}

    public WeiBo(int friends, int followers, int statuses) {
        set(friends, followers, statuses);
    }

    public void set(int friends, int followers, int statuses) {
        this.friends = friends;
        this.followers = followers;
        this.statuses = statuses;
    }

    public int getFriends() { return friends; }
    public void setFriends(int friends) { this.friends = friends; }
    public int getFollowers() { return followers; }
    public void setFollowers(int followers) { this.followers = followers; }
    public int getStatuses() { return statuses; }
    public void setStatuses(int statuses) { this.statuses = statuses; }

    // Deserialization (network transfer or file input).
    // Fields must be read in exactly the order write() writes them.
    @Override
    public void readFields(DataInput in) throws IOException {
        friends = in.readInt();
        followers = in.readInt();
        statuses = in.readInt();
    }

    // Serialization (network transfer or file output); same field order as readFields().
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(friends);
        out.writeInt(followers);
        out.writeInt(statuses);
    }

    // Orders by follower count. WeiBo is only used as a map input value in this
    // job, so the framework never actually sorts on it.
    @Override
    public int compareTo(WeiBo o) {
        return Integer.compare(followers, o.followers);
    }
}
```
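Hadoop does not record which field was written where, so readFields() must consume fields in exactly the order write() produced them. The following sketch assumes a hypothetical `WeiBoLike` class that copies WeiBo's serialization pattern onto plain `java.io` streams, so the round trip can be checked without a cluster.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for WeiBo without the Hadoop dependency:
// same fields, same write()/readFields() pattern.
final class WeiBoLike {
    int friends, followers, statuses;

    void write(DataOutput out) throws IOException {
        out.writeInt(friends);
        out.writeInt(followers);
        out.writeInt(statuses);
    }

    void readFields(DataInput in) throws IOException {
        friends = in.readInt();   // same order as write()
        followers = in.readInt();
        statuses = in.readInt();
    }

    // Serializes src to bytes and reads them back into a fresh instance.
    static WeiBoLike roundTrip(WeiBoLike src) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            src.write(out);
            out.flush();
            WeiBoLike copy = new WeiBoLike();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            // In-memory streams should not fail; rethrow unchecked just in case
            throw new UncheckedIOException(e);
        }
    }
}
```

Swapping the first two writeInt() calls in write() while leaving readFields() unchanged would make the copy come back with friends and followers exchanged, which is why the two methods must list the fields in the same order.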

WeiboCount.java

```java
package com.hadoop.WeiboCount;

import java.io.IOException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.LazyOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.MultipleOutputs;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class WeiboCount extends Configured implements Tool {

    // Category keys. Each one is also a MultipleOutputs named output, so the
    // mapper, the reducer and the job setup must all use the same strings.
    static final String FOLLOWER = "follower";
    static final String FRIEND = "friend";
    static final String STATUSES = "statuses";

    public static class WeiBoMapper extends Mapper<Text, WeiBo, Text, Text> {
        @Override
        protected void map(Text key, WeiBo value, Context context)
                throws IOException, InterruptedException {
            // Write one (category, "name\tcount") pair per metric to the context
            String name = key.toString();
            context.write(new Text(FOLLOWER), new Text(name + "\t" + value.getFollowers()));
            context.write(new Text(FRIEND), new Text(name + "\t" + value.getFriends()));
            context.write(new Text(STATUSES), new Text(name + "\t" + value.getStatuses()));
        }
    }

    public static class WeiBoReducer extends Reducer<Text, Text, Text, IntWritable> {
        private MultipleOutputs<Text, IntWritable> mos;

        @Override
        protected void setup(Context context) {
            mos = new MultipleOutputs<Text, IntWritable>(context);
        }

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Optional cap on lines per output file; defaults to no limit
            int n = context.getConfiguration().getInt("reduceHasMaxLength", Integer.MAX_VALUE);
            Map<String, Integer> m = new HashMap<String, Integer>();
            for (Text value : values) {
                // value = name + "\t" + (followers | friends | statuses)
                String[] records = value.toString().split("\t");
                m.put(records[0], Integer.parseInt(records[1]));
            }
            // Sort by count, descending; the category key doubles as the named output
            List<Map.Entry<String, Integer>> entries = getSortedHashtableByValue(m);
            String namedOutput = key.toString();
            for (int i = 0; i < n && i < entries.size(); i++) {
                Map.Entry<String, Integer> e = entries.get(i);
                mos.write(namedOutput, new Text(e.getKey()), new IntWritable(e.getValue()));
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            mos.close();
        }
    }

    @Override
    public int run(String[] args) throws Exception {
        Configuration conf = getConf(); // configuration supplied by ToolRunner
        Path outPath = new Path(args[1]);
        FileSystem hdfs = outPath.getFileSystem(conf);
        if (hdfs.exists(outPath)) {
            hdfs.delete(outPath, true); // remove output left by a previous run
        }

        Job job = Job.getInstance(conf, "weibo");
        job.setJarByClass(WeiboCount.class);
        job.setInputFormatClass(WeiboInputFormat.class); // custom input format
        job.setMapperClass(WeiBoMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        job.setReducerClass(WeiBoReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, outPath);
        // The reducer never calls context.write(), so create the default
        // part-r-* files lazily instead of leaving empty ones behind
        LazyOutputFormat.setOutputFormatClass(job, TextOutputFormat.class);

        // One named output per category
        MultipleOutputs.addNamedOutput(job, FOLLOWER, TextOutputFormat.class, Text.class, IntWritable.class);
        MultipleOutputs.addNamedOutput(job, FRIEND, TextOutputFormat.class, Text.class, IntWritable.class);
        MultipleOutputs.addNamedOutput(job, STATUSES, TextOutputFormat.class, Text.class, IntWritable.class);

        return job.waitForCompletion(true) ? 0 : 1;
    }

    // Sorts map entries by value, descending (everything is held in memory,
    // so this only suits small data volumes)
    public static List<Map.Entry<String, Integer>> getSortedHashtableByValue(Map<String, Integer> h) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<Map.Entry<String, Integer>>(h.entrySet());
        entries.sort(Map.Entry.<String, Integer>comparingByValue().reversed());
        return entries;
    }

    public static void main(String[] args) throws Exception {
        // args[0]: input,  e.g. "hdfs://centpy:9000/weibo/weibo.txt"
        // args[1]: output, e.g. "hdfs://centpy:9000/weibo/out/"
        int ec = ToolRunner.run(new Configuration(), new WeiboCount(), args);
        System.exit(ec);
    }
}
```
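getSortedHashtableByValue() sorts every entry even when only the first N lines (`reduceHasMaxLength`) are wanted. One common alternative, swapped in here and not used by the program above, is a bounded min-heap that keeps only the N largest counts, costing O(m log N) for m entries instead of a full sort. `TopN.topN()` is a hypothetical helper; the input map itself is still built in memory, as in the original reducer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical helper: keep the n largest values in a min-heap of size n,
// then return them from largest to smallest.
final class TopN {
    static List<Map.Entry<String, Integer>> topN(Map<String, Integer> counts, int n) {
        // The smallest retained value sits at the head of the heap
        PriorityQueue<Map.Entry<String, Integer>> heap =
            new PriorityQueue<>(Map.Entry.<String, Integer>comparingByValue());
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            heap.offer(e);
            if (heap.size() > n) {
                heap.poll(); // evict the current smallest
            }
        }
        List<Map.Entry<String, Integer>> result = new ArrayList<>(heap);
        result.sort(Map.Entry.<String, Integer>comparingByValue().reversed());
        return result;
    }
}
```

The heap never grows beyond n + 1 elements, so memory for the ranking step stays bounded even when a category contains many stars.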

WeiboInputFormat.java

```java
package com.hadoop.WeiboCount;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.JobContext;
import org.apache.hadoop.mapreduce.RecordReader;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.util.LineReader;

public class WeiboInputFormat extends FileInputFormat<Text, WeiBo> {

    @Override
    public RecordReader<Text, WeiBo> createRecordReader(InputSplit split, TaskAttemptContext context)
            throws IOException, InterruptedException {
        // The default is the framework's line-by-line RecordReader;
        // return our own WeiboRecordReader instead.
        return new WeiboRecordReader();
    }

    // WeiboRecordReader always reads a file from its first byte, so each file
    // must be a single split; otherwise large files would be read more than once.
    @Override
    protected boolean isSplitable(JobContext context, Path filename) {
        return false;
    }

    public static class WeiboRecordReader extends RecordReader<Text, WeiBo> {
        private LineReader in;
        private Text line;
        private Text lineKey;    // key: star name
        private WeiBo lineValue; // value: the three counts

        @Override
        public void initialize(InputSplit input, TaskAttemptContext context) throws IOException {
            FileSplit split = (FileSplit) input;
            Configuration job = context.getConfiguration();
            Path file = split.getPath();
            FileSystem fs = file.getFileSystem(job);
            FSDataInputStream filein = fs.open(file);
            in = new LineReader(filein, job);
            line = new Text();
            lineKey = new Text();
            lineValue = new WeiBo();
        }

        @Override
        public boolean nextKeyValue() throws IOException {
            int linesize = in.readLine(line);
            if (linesize == 0) {
                return false; // end of file
            }
            // Split on '\t': name, Weibo name, followers, friends, statuses
            String[] pieces = line.toString().split("\t");
            if (pieces.length != 5) {
                throw new IOException("Invalid record received: " + line);
            }
            int followers, friends, statuses;
            try {
                followers = Integer.parseInt(pieces[2].trim()); // trim() strips surrounding whitespace
                friends = Integer.parseInt(pieces[3].trim());
                statuses = Integer.parseInt(pieces[4].trim());
            } catch (NumberFormatException nfe) {
                throw new IOException("Error parsing integer value in record: " + line, nfe);
            }
            lineKey.set(pieces[0]);
            lineValue.set(friends, followers, statuses);
            return true;
        }

        @Override
        public Text getCurrentKey() { return lineKey; }

        @Override
        public WeiBo getCurrentValue() { return lineValue; }

        @Override
        public float getProgress() { return 0; }

        @Override
        public void close() throws IOException {
            if (in != null) {
                in.close();
            }
        }
    }
}
```
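getProgress() above always returns 0, so the framework cannot report how far a map task has read. Since `LineReader.readLine()` returns the number of bytes it consumed, one option is to keep a running byte position and divide it by `split.getLength()`. The plain-Java `ProgressLineReader` below is a hypothetical stand-in that demonstrates the idea over an in-memory byte array rather than an HDFS stream.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical analogue of the RecordReader loop: nextKeyValue() advances one
// line, getProgress() reports the fraction of bytes consumed so far.
final class ProgressLineReader {
    private final byte[] data;
    private int pos = 0;    // bytes consumed so far
    private String current; // current line, without its '\n'

    ProgressLineReader(byte[] data) {
        this.data = data;
    }

    boolean nextKeyValue() {
        if (pos >= data.length) {
            return false; // end of input
        }
        int start = pos;
        while (pos < data.length && data[pos] != '\n') {
            pos++;
        }
        current = new String(data, start, pos - start, StandardCharsets.UTF_8);
        if (pos < data.length) {
            pos++; // consume the '\n' as well
        }
        return true;
    }

    String getCurrentValue() {
        return current;
    }

    float getProgress() {
        return data.length == 0 ? 1.0f : Math.min(1.0f, pos / (float) data.length);
    }
}
```

In WeiboRecordReader the same bookkeeping would mean adding each `linesize` to a position field and dividing by the split length, which equals the file length once `isSplitable()` returns false.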

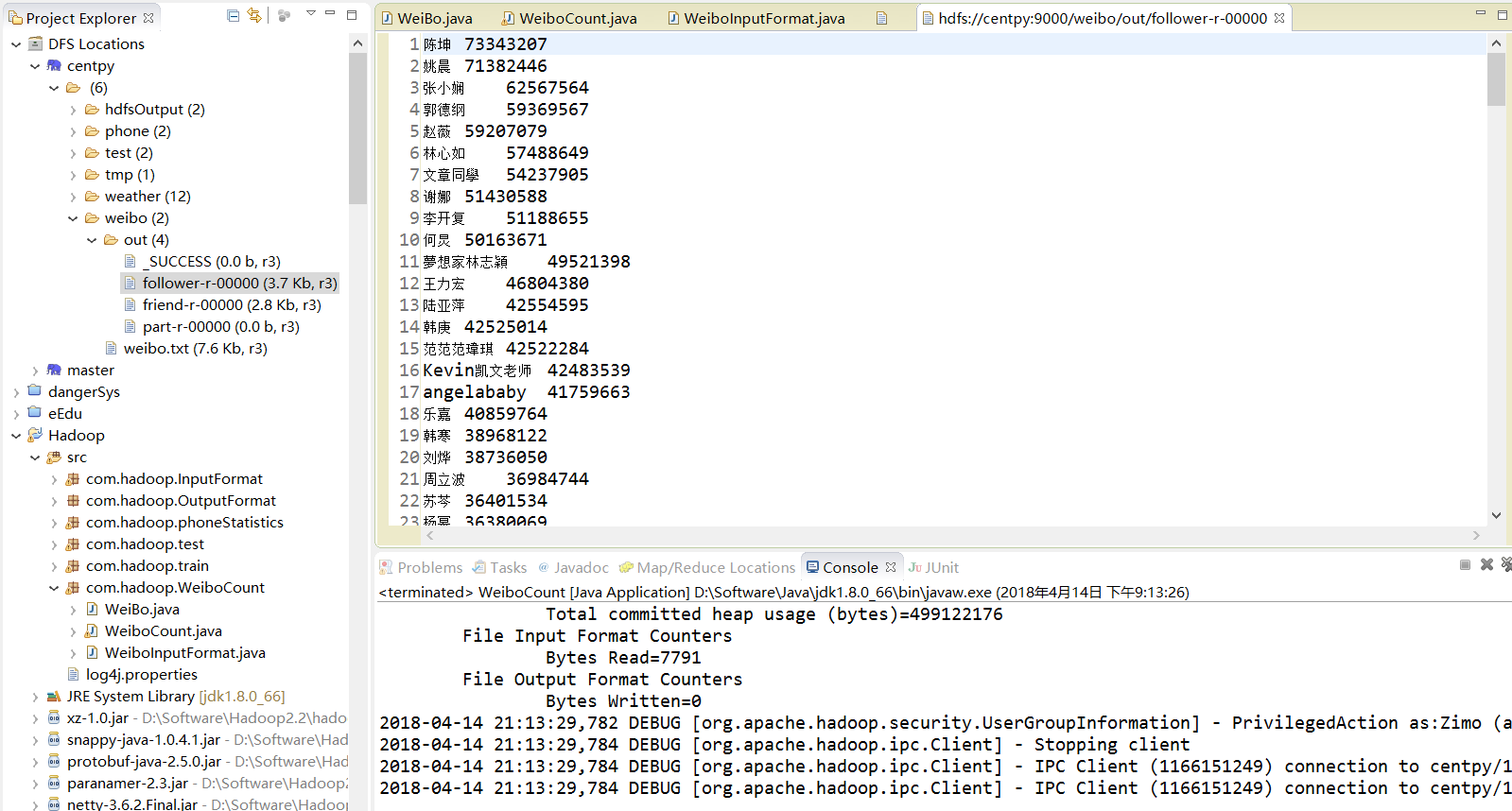

Results


Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission.