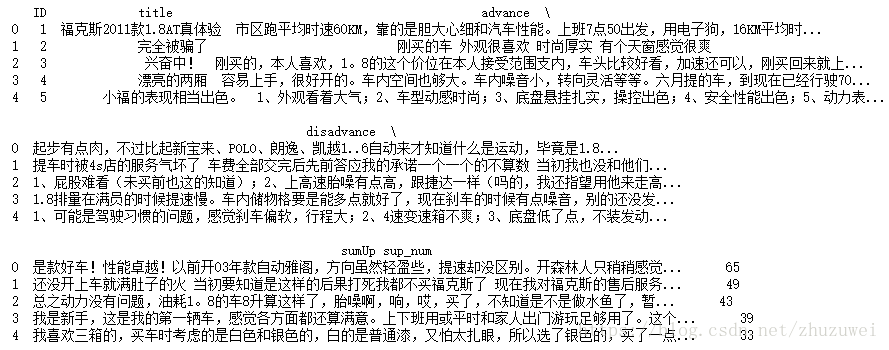

I. Getting the data

import numpy as np

import pandas as pd

import jieba

# 1. Read the data and preprocess it

comment_path = "G:\\myLearning\\pythonLearning201712\\carComments\\01\\myCleanedComments.CSV"

comment_df = pd.read_csv(comment_path, engine = 'python')

print(comment_df.head())

del comment_df['ID']

del comment_df['title']

del comment_df['sumUp']

del comment_df['sup_num']

# 2. Load the stopwords

# open(filename).readlines() can also be used to read the stopwords

stopwords_path = "G:\\myLearning\\pythonLearning201712\\myDicts\\新建文件夹\\综合stopword.txt"

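# Convert to a set: `word in DataFrame` tests the column labels, not the stopwords themselves

stopwords = set(pd.read_csv(stopwords_path, names=['stopwords'], sep='aaa', encoding='utf-8', engine='python')['stopwords'])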
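The comment above mentions `open(filename).readlines()` as another way to load the stopwords. A minimal sketch of that approach, assuming one stopword per line in a UTF-8 file. The helper name `load_stopwords` and the demo file contents are hypothetical and not part of the original script. It returns a `set`, so that `word not in stopwords` compares against the words themselves.

```python
import os
import tempfile


def load_stopwords(path, encoding="utf-8"):
    """Read one stopword per line and return them as a set."""
    with open(path, encoding=encoding) as f:
        # Strip the trailing newline and skip blank lines.
        return {line.strip() for line in f if line.strip()}


# Demo with a throwaway file (hypothetical content).
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write("的\n了\n\n非常\n")
demo_stopwords = load_stopwords(tmp.name)
os.remove(tmp.name)

tokens = ["油耗", "非常", "满意", "了"]
kept = [w for w in tokens if w not in demo_stopwords]
print(kept)
```

Membership tests on a set take constant time on average, which matters when the filter runs once per token over every comment.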

# 3. Custom tokenization function

import re

def my_cut_func(my_str):

    my_str = re.sub(r'[a-zA-Z]+', '', my_str) # remove letters

    my_str = re.sub(r'\d|\s', '', my_str) # remove digits and whitespace

    my_str_list = jieba.cut(my_str)

    # drop stopwords and single-character words

    my_str_list = [word for word in my_str_list if word not in stopwords and len(word) > 1]

    return my_str_list
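The two `re.sub` calls remove Latin letters, digits and whitespace before tokenization. A small sketch of that cleaning step on its own, with jieba left out so it runs without extra dependencies. The function name `strip_ascii_noise` and the sample string are illustrative assumptions.

```python
import re

# One compiled pattern covering Latin letters, digits and any whitespace.
_NOISE = re.compile(r"[a-zA-Z]+|\d+|\s+")


def strip_ascii_noise(text):
    """Remove Latin letters, digits and whitespace from text."""
    return _NOISE.sub("", str(text))


sample = "这款SUV 油耗 8L 空间很大\n"
cleaned = strip_ascii_noise(sample)
print(cleaned)
```

Using `+` rather than `*` avoids matching the empty string at every position, and the raw-string prefix keeps `\d` and `\s` from being read as string escapes.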

# 4. Load the Sogou automotive dictionary (the source file must be UTF-8 encoded)

jieba.load_userdict('G:\\myLearning\\sougou词库\\汽车词汇大全【官方推荐】.txt')

# 5. Merge all comments into one large string

pos_comments = list(comment_df['advance'])

pos_comments = [str(item) for item in pos_comments]

all_pos_comments = ''.join(pos_comments)

neg_comments = list(comment_df['disadvance'])

neg_comments = [str(item) for item in neg_comments]

all_neg_comments = ''.join(neg_comments)

II. Building the term-frequency matrix with a bag-of-words (BoW) model

# 1. Bag-of-words model (unigram, bigram, trigram, etc.)

# (1) Prepare the data

pos_comment_df = pd.DataFrame()

neg_comment_df = pd.DataFrame()

pos_comment_df['txt'] = comment_df.advance

pos_comment_df['tag'] = 1.0

neg_comment_df['txt'] = comment_df.disadvance

neg_comment_df['tag'] = 0.0

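# DataFrame.append was removed in pandas 2.0; pd.concat works in old and new versions

df0 = pd.concat([pos_comment_df, neg_comment_df], ignore_index=True)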

# (2) Tokenization and preprocessing

# Load the Sogou automotive dictionary (the source file must be UTF-8 encoded)

jieba.load_userdict('G:\\myLearning\\sougou词库\\汽车词汇大全【官方推荐】.txt')

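# Load the stopwords

# open(filename).readlines() can also be used to read the stopwords

stopwords_path = "G:\\myLearning\\pythonLearning201712\\myDicts\\新建文件夹\\综合stopword.txt"

# Convert to a set: `word in DataFrame` tests the column labels, not the stopwords themselves

stopwords = set(pd.read_csv(stopwords_path, names=['stopwords'], sep='aaa', encoding='utf-8', engine='python')['stopwords'])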

# Custom tokenization function

import re

def cuttxt(my_str):

    my_str = re.sub(r'[a-zA-Z0-9]+', '', str(my_str)) # remove letters and digits

    my_str = re.sub(r'\s', '', my_str) # remove spaces, line breaks and other whitespace

    my_str_list = jieba.cut(my_str)

    # drop stopwords and single-character words

    my_str_list = [word for word in my_str_list if word not in stopwords and len(word) > 1]

    return ' '.join(my_str_list)

df0['cleantxt'] = df0.txt.apply(cuttxt)

df0.head()

# (3) Use sklearn's CountVectorizer to build the sparse term-frequency matrix

from sklearn.feature_extraction.text import CountVectorizer

countvec = CountVectorizer(min_df = 10) # keep only terms that occur in at least 10 documents; min_df is a tunable parameter

wordmtx = countvec.fit_transform(df0.cleantxt) # build the sparse matrix
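To make the `min_df` parameter concrete, here is a pure-Python sketch of roughly what a count vectorizer does with space-separated tokens: keep only the terms that occur in at least `min_df` documents, then count them per document. It is an illustration under simplifying assumptions (whitespace tokenization, a dense list-of-lists result, hypothetical names `build_vocab` and `count_matrix`), not scikit-learn's implementation.

```python
from collections import Counter


def build_vocab(docs, min_df=1):
    """Map each term seen in at least min_df documents to a column index."""
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(doc.split()))  # count each term once per document
    kept = sorted(term for term, df in doc_freq.items() if df >= min_df)
    return {term: idx for idx, term in enumerate(kept)}


def count_matrix(docs, vocab):
    """Return one row of term counts per document."""
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for term in doc.split():
            if term in vocab:
                row[vocab[term]] += 1
        rows.append(row)
    return rows


docs = ["空间 大 动力 足", "动力 足 油耗 高", "空间 大 空间 宽敞"]
vocab = build_vocab(docs, min_df=2)
matrix = count_matrix(docs, vocab)
print(vocab)
print(matrix)
```

With `min_df=2`, terms that appear in only one of the three toy documents are dropped from the vocabulary, which is the same pruning effect that `min_df = 10` has on the real comments.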

wordmtx

<1x4266 sparse matrix of type '<class 'numpy.int64'>'
	with 6 stored elements in Compressed Sparse Row format>

III. Sentiment analysis with a neural network

import tensorflow as tf

from sklearn.preprocessing import OneHotEncoder

ohe = OneHotEncoder() # returns a sparse matrix by default

# 1. Build the training and test sets

nn_X_data = wordmtx.toarray()

nn_y_data = df0.tag.values.reshape(-1, 1)

ohe_y_data = ohe.fit_transform(nn_y_data) # sparse matrix by default

ohe_y_data = ohe_y_data.toarray()
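One-hot encoding turns each 0.0/1.0 tag into a two-element row so that it lines up with the two softmax outputs of the network. A dependency-free sketch of the idea, using a hypothetical helper `one_hot`. Columns follow the sorted order of the distinct labels, which is also how `OneHotEncoder` orders automatically detected categories.

```python
def one_hot(labels):
    """Encode labels as rows with a single 1.0 in the label's column."""
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    return [[1.0 if index[y] == j else 0.0 for j in range(len(classes))]
            for y in labels]


tags = [1.0, 0.0, 0.0, 1.0]
encoded = one_hot(tags)
print(encoded)  # negative (0.0) -> [1.0, 0.0], positive (1.0) -> [0.0, 1.0]
```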

m,n = wordmtx.shape

from sklearn.model_selection import train_test_split

nn_X_train,nn_X_test,nn_y_train,nn_y_test = train_test_split(nn_X_data, ohe_y_data, test_size = 0.3, random_state = 1)

# 2. Define the placeholders

X_place = tf.placeholder(tf.float32, [None, n])

y_place = tf.placeholder(tf.float32, [None, 2])

# Weight initialization

def weight_variable(shape):

    # initialize the weights from a truncated normal distribution

    initial = tf.truncated_normal(shape, stddev=0.1)

    return tf.Variable(initial)

def bias_variable(shape):

    # ReLU is used in this example, so a small positive bias works better

    initial = tf.constant(0.1, shape=shape)

    return tf.Variable(initial)
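`tf.truncated_normal` draws from a normal distribution but re-draws any value that falls more than two standard deviations from the mean, which keeps the initial weights small. A rough pure-Python sketch of that sampling rule, using a hypothetical `truncated_normal` helper and a fixed seed for repeatability:

```python
import random


def truncated_normal(n, mean=0.0, stddev=0.1, rng=None):
    """Draw n samples, re-drawing values beyond two standard deviations."""
    rng = rng or random.Random()
    samples = []
    while len(samples) < n:
        x = rng.gauss(mean, stddev)
        if abs(x - mean) <= 2 * stddev:
            samples.append(x)
    return samples


weights = truncated_normal(1000, stddev=0.1, rng=random.Random(0))
print(min(weights), max(weights))
```

With `stddev=0.1`, every sampled weight lies within [-0.2, 0.2].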

# 3. Define FC1

# with tf.name_scope('conv1') as scope:

W_fc1 = weight_variable([n, 128])

b_fc1 = bias_variable([128])

h_fc1 = tf.nn.relu(tf.matmul(X_place, W_fc1) + b_fc1)

# 4. Define FC2

with tf.name_scope('conv2') as scope:

    W_fc2 = weight_variable([128, 2])

    b_fc2 = bias_variable([2])

    y_pred = tf.nn.softmax(tf.matmul(h_fc1, W_fc2) + b_fc2)

    y_pred = tf.clip_by_value(y_pred, 1e-10, 1.0) # keeps tf.log(y_pred) from being evaluated at 0

# 5. Define the loss function

cross_entropy = -tf.reduce_sum(y_place * tf.log(y_pred))

train_step = tf.train.GradientDescentOptimizer(0.05).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y_place, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
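What the loss and accuracy nodes compute can be checked by hand on a few rows. The sketch below reimplements them in plain Python for illustration only. The function names and the sample probabilities are made up for the example.

```python
import math


def cross_entropy_sum(y_true, y_prob, eps=1e-10):
    """Summed cross-entropy: -sum(y * log(p)), with p clipped to [eps, 1]."""
    total = 0.0
    for true_row, prob_row in zip(y_true, y_prob):
        for y, p in zip(true_row, prob_row):
            p = min(max(p, eps), 1.0)
            total -= y * math.log(p)
    return total


def accuracy_score(y_true, y_prob):
    """Share of rows where the most probable class matches the label."""
    def argmax(row):
        return max(range(len(row)), key=row.__getitem__)
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_prob))
    return hits / len(y_true)


y_true = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y_prob = [[0.2, 0.8], [0.6, 0.4], [0.7, 0.3]]
loss = cross_entropy_sum(y_true, y_prob)
acc = accuracy_score(y_true, y_prob)
print(loss, acc)
```

Only the probability assigned to the true class contributes to the loss, so here it equals -(ln 0.8 + ln 0.6 + ln 0.3), and two of the three rows are classified correctly.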

# 6. Train and evaluate the model

with tf.Session() as sess:

    sess.run(tf.global_variables_initializer())

    for i in range(101): # 101 iterations

        sess.run(train_step, feed_dict={X_place:nn_X_train, y_place:nn_y_train})

        if i%10 == 0:

            # print(i, sess.run(cross_entropy, feed_dict={X_place:nn_X_train, y_place:nn_y_train} ))

            print(i,sess.run(accuracy, feed_dict={X_place:nn_X_test, y_place:nn_y_test} ))

    print(sess.run(y_pred, feed_dict={X_place:nn_X_test}))
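The loop above feeds the entire training set on every step. Because the loss is a sum over all rows (`tf.reduce_sum`), its gradient grows with the number of rows fed at once, so feeding smaller batches is one common way to keep the updates moderate. A minimal sketch of a batch index generator follows. `iter_batches` and the batch size are assumptions for illustration and do not appear in the original code.

```python
import random


def iter_batches(n_rows, batch_size, seed=None):
    """Yield lists of row indices covering 0..n_rows-1 once, in random order."""
    order = list(range(n_rows))
    random.Random(seed).shuffle(order)
    for start in range(0, n_rows, batch_size):
        yield order[start:start + batch_size]


batches = list(iter_batches(n_rows=10, batch_size=4, seed=0))
print([len(b) for b in batches])  # batch sizes: 4, 4, 2
```

Inside the session, each index list could select rows, for example `nn_X_train[idx]` and `nn_y_train[idx]`, before calling `sess.run(train_step, ...)`.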

IV. Notes

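1. The loss easily becomes nan during training. Possible remedies:

(1) Normalize the input data;

(2) For networks with many layers, apply batch normalization to each layer;

(3) Reduce the learning rate lr.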

2. The statement y_pred = tf.clip_by_value(y_pred, 1e-10, 1.0) guards the computation of tf.log(y_pred). If y_pred contains a 0, tf.log(y_pred) evaluates to -inf at that position and the loss comes out as nan.

3. Try changing the learning rate, or use a different optimizer such as AdamOptimizer.
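The nan described in note 2 can be reproduced without TensorFlow. In floating-point arithmetic log(0) is negative infinity, and multiplying it by a zero label gives nan, while clipping the probability first avoids that. The helper names below are hypothetical, and `log_like_tf` only mimics the behaviour of `tf.log` at zero (Python's own `math.log(0)` raises an error instead).

```python
import math


def log_like_tf(p):
    """Mimic tf.log at zero: return -inf instead of raising."""
    return math.log(p) if p > 0 else float("-inf")


def clip(p, low=1e-10, high=1.0):
    """Clamp p into [low, high], like tf.clip_by_value."""
    return min(max(p, low), high)


y_true = [0.0, 1.0]   # one-hot label
y_prob = [0.0, 1.0]   # saturated softmax: one probability is exactly 0

raw = -sum(y * log_like_tf(p) for y, p in zip(y_true, y_prob))
safe = -sum(y * log_like_tf(clip(p)) for y, p in zip(y_true, y_prob))
print(raw, safe)
```

The prediction here is correct, yet the unclipped loss is still nan because of the `0.0 * -inf` term. With clipping the same term is a finite zero.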