Preface

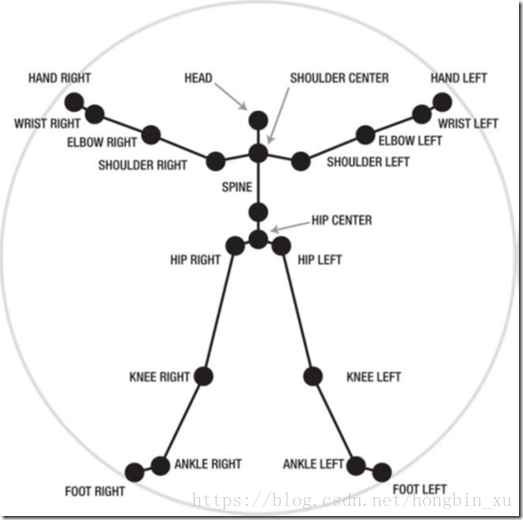

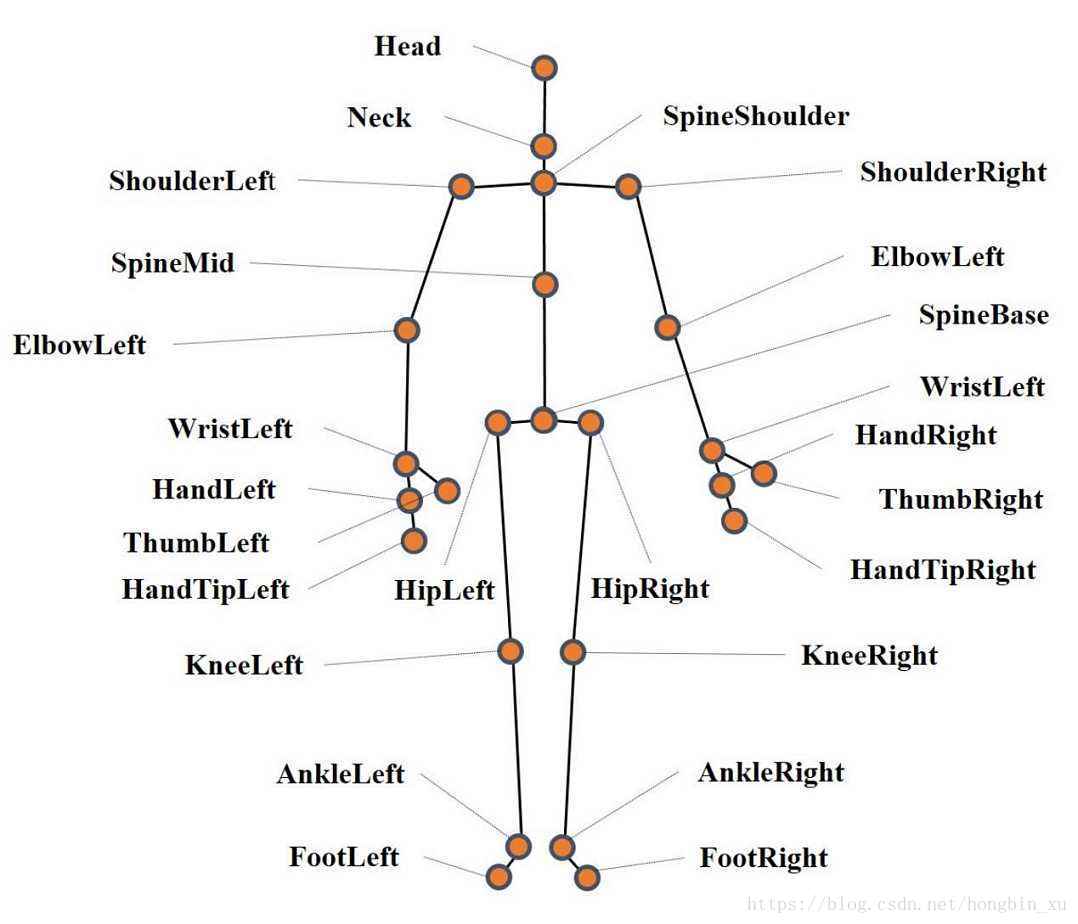

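Kinect derives the position coordinates of the body's joints, such as the head, hands, and feet, by processing depth data. The diagram above shows the 20 joints it reports.

The goal of these study notes is to read the body's skeleton joint data from Kinect.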

Code

#include <Windows.h>

#include <iostream>

#include <NuiApi.h>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

void drawSkeleton(cv::Mat &img, cv::Point pointSet[], int which_one);

int main(int argc, char * argv[])

{

cv::Mat skeletonImg;

skeletonImg.create(240, 320, CV_8UC3);

cv::Point skeletonPoint[NUI_SKELETON_COUNT][NUI_SKELETON_POSITION_COUNT] = { cv::Point(0, 0) };

bool tracked[NUI_SKELETON_COUNT] = { false };

// 1. Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_SKELETON);

if (FAILED(hr))

{

cout << "NuiInitialize failed" << endl;

return hr;

}

// 2. Create the event handle that signals skeleton data

HANDLE skeletonEvent = CreateEvent(NULL, TRUE, FALSE, NULL);

// 3. Enable skeleton tracking and bind it to the event

hr = NuiSkeletonTrackingEnable(skeletonEvent, 0);

if (FAILED(hr))

{

cout << "Could not open skeleton tracking event" << endl;

NuiShutdown();

return hr;

}

cv::namedWindow("skeletonImg", CV_WINDOW_AUTOSIZE);

// 4. Start reading skeleton data

while (1)

{

NUI_SKELETON_FRAME skeletonFrame = { 0 }; // the skeleton frame

bool foundSkeleton = false;

// 4.1 Wait indefinitely for new data; return as soon as it arrives

if (WaitForSingleObject(skeletonEvent, INFINITE) == WAIT_OBJECT_0)

{

// 4.2 Read the next skeleton frame; the data is written into skeletonFrame

hr = NuiSkeletonGetNextFrame(0, &skeletonFrame);

if (SUCCEEDED(hr))

{

// NUI_SKELETON_COUNT is the number of skeleton slots in a frame (6), not the number of people currently tracked

for (int i = 0;i < NUI_SKELETON_COUNT;i++)

{

NUI_SKELETON_TRACKING_STATE trackingState = skeletonFrame.SkeletonData[i].eTrackingState;

// 4.3 Kinect can detect up to 6 people but tracks full skeletons for only 2, so check whether this slot is actually tracked

if (trackingState == NUI_SKELETON_TRACKED)

{

foundSkeleton = true;

}

}

}

if (!foundSkeleton)

{

continue;

}

// 4.4 Smooth the skeleton frame to remove jitter

NuiTransformSmooth(&skeletonFrame, NULL);

skeletonImg.setTo(0);

for (int i = 0;i < NUI_SKELETON_COUNT;i++)

{

// A skeleton is valid only if it is tracked and its shoulder center (the neck position) is tracked or inferred

if (skeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED && skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_SHOULDER_CENTER] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

float fx, fy;

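// Get the coordinates of every joint and convert them to depth-image coordinates,

// because the joints are marked on an image in depth-image space

// NUI_SKELETON_POSITION_COUNT is the number of joints per skeleton, which is 20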

for (int j = 0;j < NUI_SKELETON_POSITION_COUNT;j++)

{

NuiTransformSkeletonToDepthImage(skeletonFrame.SkeletonData[i].SkeletonPositions[j], &fx, &fy);

skeletonPoint[i][j].x = (int)fx;

skeletonPoint[i][j].y = (int)fy;

}

for (int j = 0;j < NUI_SKELETON_POSITION_COUNT;j++)

{

if (skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[j] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

cv::circle(skeletonImg, skeletonPoint[i][j], 3, cv::Scalar(0, 255, 255), 1, 8, 0);

tracked[i] = true;

}

}

drawSkeleton(skeletonImg, skeletonPoint[i], i);

}

}

imshow("skeletonImg", skeletonImg);

}

else

{

cout << "Waiting for the skeleton event failed" << endl;

}

if (cv::waitKey(20) == 27)

break;

}

// 5. Shut down NUI

NuiShutdown();

cv::destroyAllWindows();

return 0;

}

// Draw the skeleton from the joint positions passed in

void drawSkeleton(cv::Mat &img, cv::Point pointSet[], int which_one)

{

cv::Scalar color;

switch (which_one)

{

case 0:

color = cv::Scalar(255, 0, 0);

break;

case 1:

color = cv::Scalar(0, 255, 0);

break;

case 2:

color = cv::Scalar(0, 0, 255);

break;

case 3:

color = cv::Scalar(255, 255, 0);

break;

case 4:

color = cv::Scalar(255, 0, 255);

break;

case 5:

color = cv::Scalar(0, 255, 255);

break;

}

// Spine

if ((pointSet[NUI_SKELETON_POSITION_HEAD].x != 0 || pointSet[NUI_SKELETON_POSITION_HEAD].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HEAD], pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SPINE], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SPINE], pointSet[NUI_SKELETON_POSITION_HIP_CENTER], color, 2);

// Left arm

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], pointSet[NUI_SKELETON_POSITION_HAND_LEFT], color, 2);

// Right arm

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], pointSet[NUI_SKELETON_POSITION_HAND_RIGHT], color, 2);

// Left leg

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_LEFT], pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], pointSet[NUI_SKELETON_POSITION_FOOT_LEFT], color, 2);

// Right leg

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT], color, 2);

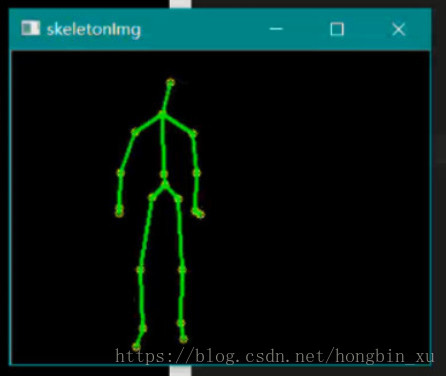

}结果

说明

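The overall program flow is:

- Initialize NUI;

- Create the skeleton event handle;

- Enable skeleton tracking;

- Wait indefinitely for new data and return as soon as it arrives;

- Draw the skeleton from the data, using a different color for each skeleton slot index.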

1. Initialize NUI

// 1. Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_SKELETON);

if (FAILED(hr))

{

cout << "NuiInitialize failed" << endl;

return hr;

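}

Initialization must request skeleton data by passing the NUI_INITIALIZE_FLAG_USES_SKELETON flag.

2. Create the skeleton event handle

// 2. Create the event handle that signals skeleton data

HANDLE skeletonEvent = CreateEvent(NULL, TRUE, FALSE, NULL);

This creates an event handle that indicates whether skeleton data is ready, that is, whether a frame can be read. The arguments request a manual-reset event that starts in the non-signaled state.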

3. Enable skeleton tracking

// 3. Enable skeleton tracking and bind it to the event

hr = NuiSkeletonTrackingEnable(skeletonEvent, 0);

if (FAILED(hr))

{

cout << "Could not open skeleton tracking event" << endl;

NuiShutdown();

return hr;

}

4. Wait for data

// 4.1 Wait indefinitely for new data; return as soon as it arrives

if (WaitForSingleObject(skeletonEvent, INFINITE) == WAIT_OBJECT_0)

{

...

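}

This checks skeletonEvent: once it is signaled, the program reads the frame and moves on to processing; until then it blocks indefinitely (INFINITE).

5. Process the skeleton data

This is the main part; everything before it is fairly standard setup.

Skeleton data structures

The NUI_SKELETON_FRAME structure holds the data of one skeleton frame. Its definition is: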

typedef struct _NUI_SKELETON_FRAME

{

LARGE_INTEGER liTimeStamp;

DWORD dwFrameNumber;

DWORD dwFlags;

Vector4 vFloorClipPlane;

Vector4 vNormalToGravity;

NUI_SKELETON_DATA SkeletonData[ 6 ];

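} NUI_SKELETON_FRAME;

In brief:

- liTimeStamp records the cumulative time in milliseconds since the Kinect sensor was initialized (by calling NuiInitialize);

- dwFrameNumber is the number of the depth frame from which this skeleton frame was generated;

- SkeletonData holds the skeleton data itself and is the most important member.

SkeletonData is an array of six NUI_SKELETON_DATA structures, one per skeleton slot. Kinect can therefore report up to six users per frame, although, as noted in the code comments, it tracks full skeletons for only two of them. NUI_SKELETON_DATA is defined as follows: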

typedef struct _NUI_SKELETON_DATA

{

NUI_SKELETON_TRACKING_STATE eTrackingState;

DWORD dwTrackingID;

DWORD dwEnrollmentIndex;

DWORD dwUserIndex;

Vector4 Position;

Vector4 SkeletonPositions[ 20 ];

NUI_SKELETON_POSITION_TRACKING_STATE eSkeletonPositionTrackingState[ 20 ];

DWORD dwQualityFlags;

} NUI_SKELETON_DATA;

That is yet another batch of fields, so let's go through the structure members one by one.

1. NUI_SKELETON_TRACKING_STATE eTrackingState: the tracking state of the skeleton. NUI_SKELETON_TRACKING_STATE is an enumeration defined as follows:

typedef

enum _NUI_SKELETON_TRACKING_STATE

{ NUI_SKELETON_NOT_TRACKED = 0,

NUI_SKELETON_POSITION_ONLY = ( NUI_SKELETON_NOT_TRACKED + 1 ) ,

NUI_SKELETON_TRACKED = ( NUI_SKELETON_POSITION_ONLY + 1 )

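} NUI_SKELETON_TRACKING_STATE;

The names are largely self-explanatory:

- NUI_SKELETON_NOT_TRACKED: the skeleton is not tracked;

- NUI_SKELETON_POSITION_ONLY: a user was detected but full tracking is not active, so only the Position field holds a value and there is no other data; it is rarely needed and can be ignored here;

- NUI_SKELETON_TRACKED: the skeleton is tracked.

2. DWORD dwTrackingID: the ID of the tracked user. Each user Kinect tracks is assigned an ID, but its value is not fixed: when the user leaves Kinect's field of view the ID becomes invalid, and a new ID is assigned when the user re-enters.

3. Vector4 Position: the center point of the whole skeleton. For example, the user's distance from the camera can be taken directly from this member by treating it as the distance from the skeleton center to the camera.

Vector4 represents a spatial coordinate and is defined as follows: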

typedef struct _Vector4

{

FLOAT x;

FLOAT y;

FLOAT z;

FLOAT w;

} Vector4;
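As a small illustration of reading a distance out of Position, the sketch below computes both the depth along the sensor's viewing axis and the straight-line distance to a point. It uses a local `Vec4` stand-in for the SDK's Vector4 so that it builds without the Kinect headers; the helper names `depthAlongAxis` and `euclideanDistance` are hypothetical, and the sketch assumes the sensor sits at the origin of skeleton space with z pointing away from it.

```cpp
#include <cassert>
#include <cmath>

// Local stand-in for the SDK's Vector4 so the sketch builds without NuiApi.h.
struct Vec4 {
    float x, y, z, w;
};

// Distance measured along the sensor's viewing axis (the z component).
inline float depthAlongAxis(const Vec4 &p) {
    assert(std::isfinite(p.z));  // guard against uninitialized or invalid data
    return p.z;
}

// Straight-line distance from the origin (assumed sensor position) to the point.
inline float euclideanDistance(const Vec4 &p) {
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
}
```

With the real SDK types, the same arithmetic would be applied to `skeletonFrame.SkeletonData[i].Position`.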

4. Vector4 SkeletonPositions[ 20 ]: the positions of the 20 body joints, each stored as a Vector4 coordinate. Note that these values are nonzero only when eTrackingState, described above, equals NUI_SKELETON_TRACKED.

5. NUI_SKELETON_POSITION_TRACKING_STATE eSkeletonPositionTrackingState[ 20 ]: parallel to SkeletonPositions, this array gives the tracking quality of each joint.

The definition of NUI_SKELETON_POSITION_TRACKING_STATE is:

typedef

enum _NUI_SKELETON_POSITION_TRACKING_STATE

{ NUI_SKELETON_POSITION_NOT_TRACKED = 0,

NUI_SKELETON_POSITION_INFERRED = ( NUI_SKELETON_POSITION_NOT_TRACKED + 1 ) ,

NUI_SKELETON_POSITION_TRACKED = ( NUI_SKELETON_POSITION_INFERRED + 1 )

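} NUI_SKELETON_POSITION_TRACKING_STATE;

This is another enumeration, with three states:

- NUI_SKELETON_POSITION_NOT_TRACKED: the joint is not tracked;

- NUI_SKELETON_POSITION_INFERRED: the joint itself was not tracked, and its position was inferred from the previous frame or from other joints that were tracked;

- NUI_SKELETON_POSITION_TRACKED: the joint was tracked successfully, and its position comes from the current frame.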
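To make the three joint states concrete, here is a minimal sketch that tallies how many of a skeleton's 20 joints fall into each state. The enum `JointState` is a local mirror of NUI_SKELETON_POSITION_TRACKING_STATE with the same numeric values, declared only so the example builds without the Kinect headers; `JointStateSummary` and `summarizeJoints` are hypothetical names introduced for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Local mirror of NUI_SKELETON_POSITION_TRACKING_STATE (same numeric values).
enum JointState { kNotTracked = 0, kInferred = 1, kTracked = 2 };

constexpr std::size_t kJointCount = 20;  // mirrors NUI_SKELETON_POSITION_COUNT

struct JointStateSummary {
    int notTracked = 0;
    int inferred = 0;
    int tracked = 0;
};

// Tally how many of the 20 joints fall into each tracking state.
inline JointStateSummary summarizeJoints(
    const std::array<JointState, kJointCount> &states) {
    JointStateSummary s;
    for (JointState st : states) {
        switch (st) {
        case kNotTracked: ++s.notTracked; break;
        case kInferred:   ++s.inferred;   break;
        case kTracked:    ++s.tracked;    break;
        }
    }
    // Every joint must have landed in exactly one bucket.
    assert(s.notTracked + s.inferred + s.tracked ==
           static_cast<int>(kJointCount));
    return s;
}
```

A summary like this could be used, for example, to skip drawing a skeleton in which most joints are only inferred.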

Getting the skeleton frame

NUI_SKELETON_FRAME skeletonFrame = { 0 }; // the skeleton frame

bool foundSkeleton = false;

// Read the next skeleton frame; the data is written into skeletonFrame

hr = NuiSkeletonGetNextFrame(0, &skeletonFrame);

if (SUCCEEDED(hr))

{

// NUI_SKELETON_COUNT is the number of skeleton slots in a frame (6), not the number of people currently tracked

for (int i = 0;i < NUI_SKELETON_COUNT;i++)

{

NUI_SKELETON_TRACKING_STATE trackingState = skeletonFrame.SkeletonData[i].eTrackingState;

// Kinect can detect up to 6 people but tracks full skeletons for only 2, so check whether this slot is actually tracked

if (trackingState == NUI_SKELETON_TRACKED)

{

foundSkeleton = true;

}

}

}

if (!foundSkeleton)

{

continue;

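}

skeletonFrame is a structure of type NUI_SKELETON_FRAME, and foundSkeleton is a bool that records whether any skeleton data was found.

NuiSkeletonGetNextFrame fetches the skeleton data and stores it in skeletonFrame. NUI_SKELETON_FRAME and NUI_SKELETON_DATA were covered above, so only the conclusion is repeated here: skeletonFrame.SkeletonData is an array of six elements, and checking each skeletonFrame.SkeletonData[i].eTrackingState tells us whether that slot has been assigned to a user, that is, whether a target is being tracked. If it is, the value equals NUI_SKELETON_TRACKED.

If any target is tracked, foundSkeleton is set to true; otherwise it stays false and the rest of the current loop iteration is skipped.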
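The check described above can be sketched as a small helper that returns the indices of the tracked slots rather than a single flag. The enum `SkeletonState` is a local mirror of NUI_SKELETON_TRACKING_STATE, and `trackedSlots` is a hypothetical helper name; both exist only so the example builds without the Kinect headers.

```cpp
#include <cassert>
#include <vector>

// Local mirror of NUI_SKELETON_TRACKING_STATE (same numeric values).
enum SkeletonState {
    kSkelNotTracked = 0,
    kSkelPositionOnly = 1,
    kSkelTracked = 2
};

constexpr int kSkeletonSlots = 6;  // mirrors NUI_SKELETON_COUNT

// Return the indices of all slots whose skeleton is fully tracked.
inline std::vector<int> trackedSlots(const SkeletonState *states, int count) {
    assert(states != nullptr && count >= 0);
    std::vector<int> slots;
    for (int i = 0; i < count; ++i) {
        if (states[i] == kSkelTracked) {
            slots.push_back(i);
        }
    }
    return slots;
}
```

An empty result corresponds to `foundSkeleton == false` in the listing, in which case the frame would be skipped.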

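Smoothing the skeleton frame

// 4.4 Smooth the skeleton frame to remove jitter

NuiTransformSmooth(&skeletonFrame, NULL);

This filters the skeleton frame so that jitter or discontinuous motion does not cause jumps from one frame to the next.

The first argument is the skeleton frame; the second can configure the filter, for example the degree of smoothing. Passing NULL uses the default configuration.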
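To give a feel for what smoothing a joint position means, the sketch below applies plain single-exponential smoothing to one joint. This is a deliberately simplified stand-in and is not claimed to be the filter NuiTransformSmooth uses; `Vec3`, `smoothJoint`, and the `alpha` parameter are all hypothetical names chosen for this example.

```cpp
#include <cassert>

// Minimal 3D point for the sketch; not an SDK type.
struct Vec3 {
    float x, y, z;
};

// Single-exponential smoothing: move the previous output toward the new
// sample by a fraction alpha. alpha = 1 means no smoothing; smaller values
// smooth more but make the output lag behind fast motion.
inline Vec3 smoothJoint(const Vec3 &previous, const Vec3 &sample, float alpha) {
    assert(alpha > 0.0f && alpha <= 1.0f);
    return Vec3{previous.x + alpha * (sample.x - previous.x),
                previous.y + alpha * (sample.y - previous.y),
                previous.z + alpha * (sample.z - previous.z)};
}
```

Calling this once per frame for each joint, feeding the previous output back in, damps frame-to-frame jitter at the cost of some latency.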

Getting the joint coordinates

for (int i = 0;i < NUI_SKELETON_COUNT;i++)

{

// A skeleton is valid only if it is tracked and its shoulder center (the neck position) is tracked or inferred

if (skeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED && skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_SHOULDER_CENTER] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

float fx, fy;

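// Get the coordinates of every joint and convert them to depth-image coordinates,

// because the joints are marked on an image in depth-image space

// NUI_SKELETON_POSITION_COUNT is the number of joints per skeleton, which is 20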

for (int j = 0;j < NUI_SKELETON_POSITION_COUNT;j++)

{

NuiTransformSkeletonToDepthImage(skeletonFrame.SkeletonData[i].SkeletonPositions[j], &fx, &fy);

skeletonPoint[i][j].x = (int)fx;

skeletonPoint[i][j].y = (int)fy;

}

for (int j = 0;j < NUI_SKELETON_POSITION_COUNT;j++)

{

if (skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[j] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

cv::circle(skeletonImg, skeletonPoint[i][j], 3, cv::Scalar(0, 255, 255), 1, 8, 0);

tracked[i] = true;

}

}

drawSkeleton(skeletonImg, skeletonPoint[i], i);

}

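}

NUI_SKELETON_COUNT is the number of skeleton slots in a frame, which is 6. The loop walks every slot and processes only those that are tracked.

skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState is an array of 20 elements giving the tracking state of each of the 20 joints. The joints have a predefined order: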

typedef

enum _NUI_SKELETON_POSITION_INDEX

{ NUI_SKELETON_POSITION_HIP_CENTER = 0,

NUI_SKELETON_POSITION_SPINE = ( NUI_SKELETON_POSITION_HIP_CENTER + 1 ) ,

NUI_SKELETON_POSITION_SHOULDER_CENTER = ( NUI_SKELETON_POSITION_SPINE + 1 ) ,

NUI_SKELETON_POSITION_HEAD = ( NUI_SKELETON_POSITION_SHOULDER_CENTER + 1 ) ,

NUI_SKELETON_POSITION_SHOULDER_LEFT = ( NUI_SKELETON_POSITION_HEAD + 1 ) ,

NUI_SKELETON_POSITION_ELBOW_LEFT = ( NUI_SKELETON_POSITION_SHOULDER_LEFT + 1 ) ,

NUI_SKELETON_POSITION_WRIST_LEFT = ( NUI_SKELETON_POSITION_ELBOW_LEFT + 1 ) ,

NUI_SKELETON_POSITION_HAND_LEFT = ( NUI_SKELETON_POSITION_WRIST_LEFT + 1 ) ,

NUI_SKELETON_POSITION_SHOULDER_RIGHT = ( NUI_SKELETON_POSITION_HAND_LEFT + 1 ) ,

NUI_SKELETON_POSITION_ELBOW_RIGHT = ( NUI_SKELETON_POSITION_SHOULDER_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_WRIST_RIGHT = ( NUI_SKELETON_POSITION_ELBOW_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_HAND_RIGHT = ( NUI_SKELETON_POSITION_WRIST_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_HIP_LEFT = ( NUI_SKELETON_POSITION_HAND_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_KNEE_LEFT = ( NUI_SKELETON_POSITION_HIP_LEFT + 1 ) ,

NUI_SKELETON_POSITION_ANKLE_LEFT = ( NUI_SKELETON_POSITION_KNEE_LEFT + 1 ) ,

NUI_SKELETON_POSITION_FOOT_LEFT = ( NUI_SKELETON_POSITION_ANKLE_LEFT + 1 ) ,

NUI_SKELETON_POSITION_HIP_RIGHT = ( NUI_SKELETON_POSITION_FOOT_LEFT + 1 ) ,

NUI_SKELETON_POSITION_KNEE_RIGHT = ( NUI_SKELETON_POSITION_HIP_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_ANKLE_RIGHT = ( NUI_SKELETON_POSITION_KNEE_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_FOOT_RIGHT = ( NUI_SKELETON_POSITION_ANKLE_RIGHT + 1 ) ,

NUI_SKELETON_POSITION_COUNT = ( NUI_SKELETON_POSITION_FOOT_RIGHT + 1 )

} NUI_SKELETON_POSITION_INDEX;

The positions of these 20 joints are the ones shown in the joint diagram in the preface.

The array elements are accessed with the indices defined by NUI_SKELETON_POSITION_INDEX to check whether a joint was tracked:

if (skeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED && skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_SHOULDER_CENTER] != NUI_SKELETON_POSITION_NOT_TRACKED)

Next, each joint's coordinates in skeleton space are converted to coordinates in the image. The function's parameters are not covered in detail here; see its prototype. The code is:

NuiTransformSkeletonToDepthImage(skeletonFrame.SkeletonData[i].SkeletonPositions[j], &fx, &fy);

The program then calls cv::circle to draw each joint, using the image coordinates produced by this conversion.
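For intuition about what such a conversion involves, the following sketch projects a 3D point onto a 320x240 image with a generic pinhole-camera model. It is not the SDK's implementation of NuiTransformSkeletonToDepthImage: the focal length constant is an assumed illustrative value, and `Vec4`, `PixelF`, and `projectToDepthImage` are hypothetical names defined locally so the example builds without the Kinect headers.

```cpp
#include <cassert>

// Local stand-ins; not SDK types.
struct Vec4 {
    float x, y, z, w;
};
struct PixelF {
    float x, y;
};

constexpr int kImageWidth = 320;
constexpr int kImageHeight = 240;
// Assumed focal length in pixels for a 320x240 image (illustrative value).
constexpr float kAssumedFocalLengthPx = 285.63f;

// Pinhole projection: scale x and y by focal length over depth, then shift
// the origin from the optical axis to the image's top-left corner.
inline PixelF projectToDepthImage(const Vec4 &p) {
    assert(p.z > 0.0f);  // the point must be in front of the camera
    const float u = kImageWidth * 0.5f + p.x * kAssumedFocalLengthPx / p.z;
    // Image y grows downward while the 3D y axis grows upward, so flip it.
    const float v = kImageHeight * 0.5f - p.y * kAssumedFocalLengthPx / p.z;
    return PixelF{u, v};
}
```

A point on the optical axis lands at the image center, and the same lateral offset moves fewer pixels the farther the point is from the camera.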

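Drawing the skeleton comes next. It is done by a user-defined function, drawSkeleton, described in the following section and called like this:

drawSkeleton(skeletonImg, skeletonPoint[i], i);

Drawing the skeleton

drawSkeleton is defined as follows:

// Draw the skeleton from the joint positions passed in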

void drawSkeleton(cv::Mat &img, cv::Point pointSet[], int which_one)

{

cv::Scalar color;

switch (which_one)

{

case 0:

color = cv::Scalar(255, 0, 0);

break;

case 1:

color = cv::Scalar(0, 255, 0);

break;

case 2:

color = cv::Scalar(0, 0, 255);

break;

case 3:

color = cv::Scalar(255, 255, 0);

break;

case 4:

color = cv::Scalar(255, 0, 255);

break;

case 5:

color = cv::Scalar(0, 255, 255);

break;

}

// Spine

if ((pointSet[NUI_SKELETON_POSITION_HEAD].x != 0 || pointSet[NUI_SKELETON_POSITION_HEAD].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HEAD], pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SPINE], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SPINE], pointSet[NUI_SKELETON_POSITION_HIP_CENTER], color, 2);

// Left arm

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], pointSet[NUI_SKELETON_POSITION_HAND_LEFT], color, 2);

// Right arm

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], pointSet[NUI_SKELETON_POSITION_HAND_RIGHT], color, 2);

// Left leg

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_LEFT], pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], pointSet[NUI_SKELETON_POSITION_FOOT_LEFT], color, 2);

// Right leg

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].y != 0))

cv::line(img, pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT], color, 2);

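}

The function is long but the idea is simple: it picks a color according to the skeleton index (six in total), then draws a line between each connected pair of the 20 joints to form the skeleton.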
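The repeated if/line pairs in drawSkeleton can also be expressed as a table of bones walked by a single loop. The sketch below shows that idea without OpenCV or the Kinect headers: `Pt` stands in for cv::Point, the `Joint` enum mirrors the order of NUI_SKELETON_POSITION_INDEX, and `kBones`, `isSet`, and `drawableBones` are hypothetical names. It returns the segments that would be drawn instead of drawing them.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Stand-in for cv::Point.
struct Pt {
    int x, y;
};

// Joint indices in the same order as NUI_SKELETON_POSITION_INDEX.
enum Joint {
    HipCenter = 0, Spine, ShoulderCenter, Head,
    ShoulderLeft, ElbowLeft, WristLeft, HandLeft,
    ShoulderRight, ElbowRight, WristRight, HandRight,
    HipLeft, KneeLeft, AnkleLeft, FootLeft,
    HipRight, KneeRight, AnkleRight, FootRight,
    JointCount
};

// The 19 bones drawn by drawSkeleton, as pairs of joint indices.
static const std::pair<Joint, Joint> kBones[] = {
    {Head, ShoulderCenter}, {ShoulderCenter, Spine}, {Spine, HipCenter},
    {ShoulderCenter, ShoulderLeft}, {ShoulderLeft, ElbowLeft},
    {ElbowLeft, WristLeft}, {WristLeft, HandLeft},
    {ShoulderCenter, ShoulderRight}, {ShoulderRight, ElbowRight},
    {ElbowRight, WristRight}, {WristRight, HandRight},
    {HipCenter, HipLeft}, {HipLeft, KneeLeft},
    {KneeLeft, AnkleLeft}, {AnkleLeft, FootLeft},
    {HipCenter, HipRight}, {HipRight, KneeRight},
    {KneeRight, AnkleRight}, {AnkleRight, FootRight},
};

// Same test as the listing: a point at (0, 0) is treated as "not available".
inline bool isSet(const Pt &p) {
    return p.x != 0 || p.y != 0;
}

// Collect the segments whose two end joints are both available.
inline std::vector<std::pair<Pt, Pt>> drawableBones(const Pt *points) {
    assert(points != nullptr);
    std::vector<std::pair<Pt, Pt>> segments;
    for (const auto &bone : kBones) {
        const Pt &a = points[bone.first];
        const Pt &b = points[bone.second];
        if (isSet(a) && isSet(b)) {
            segments.emplace_back(a, b);
        }
    }
    return segments;
}
```

In the original program, each returned segment would be passed to cv::line with the color chosen for the skeleton index, which keeps the bone list in one place and makes it easy to add or remove connections.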

References

- https://blog.csdn.net/zouxy09/article/details/8161617

- NuiSensor.h in the SDK source, where most of the data types used here are defined.