The main components of reinforcement learning: an agent, an environment, a policy, a reward signal, a value function, and, optionally, a model of the environment.

Reinforcement learning is a computational approach to understanding and automating goal-directed learning and decision making.

Reinforcement learning uses the formal framework of Markov decision processes to define the interaction between a learning agent and its environment in terms of states, actions, and rewards.
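The interaction in terms of states, actions, and rewards can be sketched as a simple loop. This is only an illustrative sketch: the four-state corridor, `env_step`, `run_episode`, and the `always_right` policy are hypothetical names invented here and do not come from the source.

```python
GOAL = 3  # hypothetical terminal state of a 4-state corridor: 0, 1, 2, 3

def env_step(state, action):
    """Environment dynamics (assumed): action is -1 or +1; reward 1.0 only on reaching GOAL."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward

def run_episode(policy, start=0, max_steps=100):
    """Let the agent interact with the environment and record (state, action, reward)."""
    state, trajectory = start, []
    for _ in range(max_steps):
        action = policy(state)                        # the agent picks an action from the state
        next_state, reward = env_step(state, action)  # the environment responds
        trajectory.append((state, action, reward))
        state = next_state
        if state == GOAL:
            break
    return trajectory

def always_right(state):
    """A trivial hypothetical policy: always move toward the goal."""
    return +1

print(run_episode(always_right))
# prints [(0, 1, 0.0), (1, 1, 0.0), (2, 1, 1.0)]
```

Each tuple in the trajectory is one step of the loop: the state the agent saw, the action it chose, and the reward the environment returned.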

An Extended Example: Tic-Tac-Toe

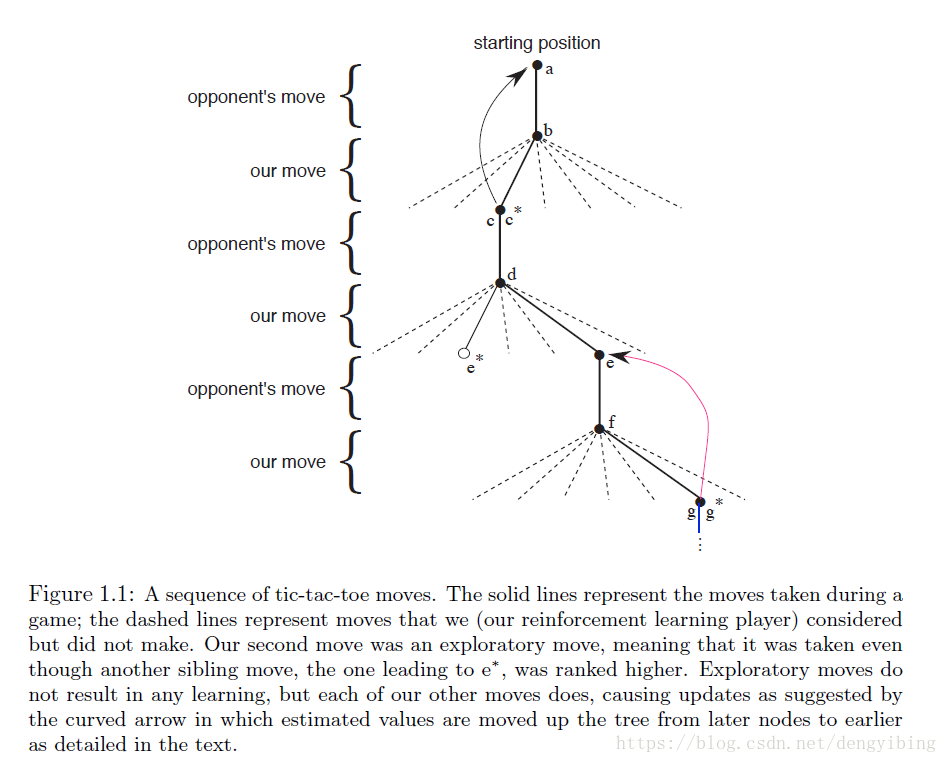

The game is shown in the figure.

Most of the time, move greedily (choose the move that leads to the state with the greatest value).

Occasionally, make an exploratory move (a move that lets us experience states we might otherwise never see).
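The mix of greedy and exploratory moves can be sketched as an epsilon-greedy selection rule. This is a minimal sketch under assumptions, not code from the source: the function name `choose_move`, the `values` dictionary (candidate next state mapped to its estimated value), and the exploration probability `epsilon` are all hypothetical.

```python
import random

def choose_move(values, epsilon=0.1, rng=random):
    """Pick the next state from `values` (hypothetical dict: next state -> estimated value).

    With probability `epsilon`, make an exploratory move (uniformly random);
    otherwise move greedily to the state with the greatest estimated value.
    Returns (chosen_state, was_exploratory).
    """
    candidates = list(values)
    if rng.random() < epsilon:
        return rng.choice(candidates), True   # exploratory move
    best = max(candidates, key=values.get)    # greedy move
    return best, False

# With epsilon=0.0 the choice is always greedy.
print(choose_move({"a": 0.2, "b": 0.9}, epsilon=0.0))
# prints ('b', False)
```

A small `epsilon` keeps play mostly greedy while still visiting states whose values would otherwise never be updated.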

The following update rule is an example of a temporal-difference learning method.

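The update to the estimated value of $S_t$, denoted $V(S_t)$, can be written as

$$V(S_t) \leftarrow V(S_t) + \alpha \left[ V(S_{t+1}) - V(S_t) \right]$$

where

- $S_t$ denotes the state before the greedy move

- $S_{t+1}$ denotes the state after the move

- $\alpha$ denotes the step-size parameter, which influences the rate of learning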
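The update moves the estimated value of the earlier state a fraction of the way toward the estimated value of the later state. A minimal sketch, assuming the value table is a plain dictionary; the names `td_update`, `V`, `s`, `s_next`, and `alpha` are illustrative, not taken from the source.

```python
def td_update(V, s, s_next, alpha=0.1):
    """Apply V[s] <- V[s] + alpha * (V[s_next] - V[s]) in place and return the new V[s].

    V      : dict mapping states to estimated values (assumed representation)
    s      : state before the greedy move
    s_next : state after the move
    alpha  : step-size parameter controlling the rate of learning
    """
    V[s] = V[s] + alpha * (V[s_next] - V[s])
    return V[s]

# Hypothetical values: the later state looks like a sure win (1.0).
V = {"before": 0.5, "after": 1.0}
td_update(V, "before", "after", alpha=0.5)
print(V["before"])
# prints 0.75
```

With `alpha=0.5` the earlier state's value moves halfway from 0.5 toward 1.0; a smaller `alpha` makes each update more gradual.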

The tic-tac-toe player is model-free in this sense with respect to its opponent: it has no model of its opponent of any kind.

Summary

The use of value functions distinguishes reinforcement learning methods from evolutionary methods that search directly in policy space guided by scalar evaluations of entire policies.